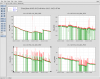

Attached is a 180-day minute trend that shows the decay of the output power of the 4 HPO pump diode boxes. Everything looks as expected except for one thing: the decay for diode box #1 (H1:PSL-OSC_DB1_PWR) seems to have accelerated since Peter's adjustment of the HPO diode currents on October 6 (alogged here). From a quick by-eye comparison, the diode box lost ~0.9% of its output power over 55 days (from 05-25-2016 to 07-18-2016). More recently it has lost the same ~0.9%, this time over 35 days (from 10-12-2016 to 11-14-2016). This is likely simply due to the age of the diode box; I can find no entry in the LHO alog where any of the HPO diode boxes were swapped (and we have not performed any HPO diode box swaps since I joined the PSL team), so these are probably still the original diode boxes installed with the PSL in 2011. Will keep an eye on this.
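To put a rough number on the acceleration, here is a minimal sketch that turns the by-eye figures above into average decay rates. It assumes the loss is linear over each interval, and the function name `decay_rate` is just an illustrative helper, not part of any PSL tooling.

```python
def decay_rate(percent_lost, days):
    """Average power loss in percent per day, assuming linear decay."""
    return percent_lost / days

# By-eye numbers quoted above for diode box #1 (H1:PSL-OSC_DB1_PWR)
earlier = decay_rate(0.9, 55)  # 05-25-2016 to 07-18-2016
recent = decay_rate(0.9, 35)   # 10-12-2016 to 11-14-2016
speedup = recent / earlier     # how much faster the recent decay is

print(f"earlier: {earlier:.4f} %/day")  # 0.0164 %/day
print(f"recent:  {recent:.4f} %/day")   # 0.0257 %/day
print(f"ratio:   {speedup:.2f}x")       # 1.57x
```

With these inputs the recent decay is roughly 1.6 times faster than the earlier one. Since both inputs are eyeballed from the trend plot, this is only an order-of-magnitude check.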

Looking at the overall trends, I think we should be good for the duration of ER10/O2a; the HPO diode box currents will have to be adjusted before the start of O2b.

These are the original diode boxes from the H2 installation (October 2011). The oscillator was running for quite some time in the H2 PSL Enclosure before being moved to the H1 PSL Enclosure (which was a consequence of the 3rd IFO decision).