Ibrahim A, Jeff K

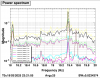

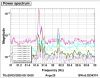

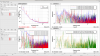

Re-measurement of the BBSS Bounce and Roll modes following changes to the BBSS parameters. The measured modes still closely match the new model.

The differences from the original BBSS FDR are:

I have linked this alog to the SWG Logbook in case these results are needed for BRD development for the BBSS. These modes are not significantly affected by whether the suspension is in air or in vacuum, which may be useful information for BRD development.

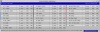

The measurement template can be found on the X1 (Test Stand) network at /ligo/svncommon/SusSVN/sus/trunk/BBSS/X1/BS/Common/Data/ under the name 2025-03-19_1200_X1SUSBS_M1M2_BounceRoll_VR_0p011to40Hz.xml

Initial Measurement:

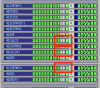

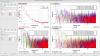

These values were found by taking a High BW transfer function with a noise injection into M1 Vertical (for Bounce) and M1 Roll (for Roll) over a window of about 2 Hz around the model values (19-20 Hz for Bounce and 31-33 Hz for Roll), reading out at all BOSEMs in both the M1 and M2 stages. We then took the most coherent peak in that window as our measured mode. For Bounce, the peak showed up only in the M1 channels we were exciting, which indicates very little cross-coupling. For Roll, all M2 channels showed a peak.

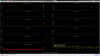
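The peak selection described above can be sketched in Python as a toy simulation. This is not the DTT measurement itself: a white-noise drive (standing in for the M1 injection) excites a single hypothetical resonance at `f0`, white sensor noise is added to the readout, and the magnitude-squared coherence between drive and readout is computed with a hand-rolled Welch average. The frequency with the highest coherence inside the search band is taken as the mode. The sample rate, record length, `f0`, `Q`, noise level, and the helper names `welch_coherence` and `most_coherent_peak` are all assumptions for illustration. `Q = 100` is deliberately lower than the ~1000 assumed in this log so that the simulated mode rings down well within one 32 s segment; a ring-down comparable to the segment length would bias the coherence estimate low.

```python
import numpy as np


def welch_coherence(x, y, fs, nperseg):
    """Magnitude-squared coherence |Sxy|^2 / (Sxx * Syy), averaged over
    Hann-windowed segments with 50% overlap (Welch's method)."""
    win = np.hanning(nperseg)
    step = nperseg // 2
    nbins = nperseg // 2 + 1
    sxx = np.zeros(nbins)
    syy = np.zeros(nbins)
    sxy = np.zeros(nbins, dtype=complex)
    for start in range(0, len(x) - nperseg + 1, step):
        xs = x[start:start + nperseg]
        ys = y[start:start + nperseg]
        X = np.fft.rfft(win * (xs - xs.mean()))
        Y = np.fft.rfft(win * (ys - ys.mean()))
        sxx += np.abs(X) ** 2
        syy += np.abs(Y) ** 2
        sxy += np.conj(X) * Y
    f = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return f, np.abs(sxy) ** 2 / (sxx * syy)


def most_coherent_peak(f, coh, f_lo, f_hi):
    """Return (frequency, coherence) of the most coherent bin in [f_lo, f_hi]."""
    band = (f >= f_lo) & (f <= f_hi)
    i = np.argmax(coh[band])
    return f[band][i], coh[band][i]


# Hypothetical simulation parameters (not taken from the measurement).
fs = 128.0            # sample rate [Hz]
duration = 960.0      # record length [s]
f0, Q = 19.6, 100.0   # stand-in mode frequency [Hz] and quality factor
rng = np.random.default_rng(0)
n = int(fs * duration)

drive = rng.standard_normal(n)                          # broadband noise injection
freqs = np.fft.rfftfreq(n, d=1.0 / fs)
H = f0**2 / (f0**2 - freqs**2 + 1j * freqs * f0 / Q)    # single resonance, unity DC gain
response = np.fft.irfft(np.fft.rfft(drive) * H, n)      # (circular) filtering through H
readout = response + 50.0 * rng.standard_normal(n)      # add white sensor noise

f, coh = welch_coherence(drive, readout, fs, nperseg=4096)  # 32 s segments
f_peak, c_peak = most_coherent_peak(f, coh, 19.0, 20.0)     # search band
print(f"most coherent peak: {f_peak:.3f} Hz (coherence {c_peak:.2f})")
```

Away from the resonance the readout is dominated by sensor noise and the coherence stays low, so the most coherent bin in the band lands on the mode.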

Mechanical Mode Confirmation:

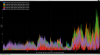

After this, I ran an exponentially averaged measurement and injected a sine wave at each of the found frequencies in turn, ringing up each mode for 5 averages before stopping the injection. We then watched the ASD and saw a gradual ring-down at those frequencies that took over 20 averages for each mode (at 0.02 Hz BW). Given the number of averages it took for the ring-down to become perceptible, and assuming a Q factor of ~1000 (which is conservative), we confirmed that the readout was indeed mechanical and that these were indeed our Bounce and Roll modes.
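The ring-down check relies on the fact that a mechanical resonance with quality factor Q keeps ringing after the drive is removed, with an amplitude envelope decaying as exp(-t/tau), where `tau = Q / (pi * f0)`. An injection or electronics artifact would instead vanish as soon as the injection stops. For Q ~1000, tau is about 16 s in the 19-20 Hz Bounce band and about 10 s in the 31-33 Hz Roll band. The Python sketch below goes one step further and estimates Q from a simulated ring-down by demodulating at the mode frequency and fitting a straight line to the log of the envelope. This is a generic technique, not the DTT exponential-averaging procedure used in this log; the signal, noise level, block length, and helper names are hypothetical.

```python
import numpy as np


def amplitude_decay_time(f0, Q):
    """1/e amplitude decay time of a mode: tau = Q / (pi * f0)."""
    return Q / (np.pi * f0)


def estimate_q_from_ringdown(t, x, f0, block_s):
    """Estimate Q from a free ring-down x(t) of a mode near f0.

    Demodulates at f0, averages the complex baseband signal over blocks of
    block_s seconds to get the amplitude envelope, then fits a straight line
    to ln(envelope) vs. time: the slope is -1/tau and Q = pi * f0 * tau.
    """
    fs = 1.0 / (t[1] - t[0])
    baseband = x * np.exp(-2j * np.pi * f0 * t)   # shift the mode to ~0 Hz
    m = int(round(block_s * fs))                  # samples per block
    nblocks = len(x) // m
    blocks = baseband[: nblocks * m].reshape(nblocks, m)
    envelope = 2.0 * np.abs(blocks.mean(axis=1))  # block mean rejects the 2*f0 term
    t_mid = t[: nblocks * m].reshape(nblocks, m).mean(axis=1)
    slope, _ = np.polyfit(t_mid, np.log(envelope), 1)
    return np.pi * f0 * (-1.0 / slope)


# Hypothetical ring-down: mode at f0 with Q = 1000, starting when the drive is switched off.
fs, duration = 256.0, 40.0
f0, Q_true = 19.6, 1000.0
rng = np.random.default_rng(1)
t = np.arange(int(fs * duration)) / fs
tau = amplitude_decay_time(f0, Q_true)
x = np.exp(-t / tau) * np.sin(2 * np.pi * f0 * t) + 0.01 * rng.standard_normal(t.size)

Q_est = estimate_q_from_ringdown(t, x, f0, block_s=1.0)
print(f"tau = {tau:.1f} s, estimated Q = {Q_est:.0f}")
```

On real readout data, a fit like this is only meaningful over the part of the ring-down that stays well above the noise floor.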