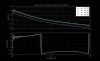

LP_v_H4 shows the laser output power and the head 4 flow rate. At this point in time the flow rate trip point was 0.2 lpm. The drop in laser power seems to coincide with the increase in flow rate.

XC_v_H4 shows the output of the crystal chiller and the head 4 flow rate. The chiller clearly switches off after the spike in the head 4 flow rate.

InletP_v_H4 shows the inlet pressure versus head 4 flow rate. The oscillations in pressure take place after the spike in the flow rate. The same is true for the outlet pressure.

The sudden increase in the flow rate perhaps suggests that something in the flow sensor may have been dislodged, but in that case I (PK) would expect the flow rate to drop to some non-zero value rather than to zero, unless a following object promptly jammed things up. However, the subsequent startup went okay.

The various filters in the system are clean from a visual inspection.

Jason/Peter

The trip Peter discusses above took place at around 6:17am PDT on Sunday, 10/9/2016.

The laser did trip off on Saturday (first reported by Patrick here) at 21:06:02 PDT (04:06:02 UTC, Sunday 10/9/2016). As noted by Kiwamu, the trip was caused by the Laser Head 1-4 Flow interlock.
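For anyone cross-referencing the PDT and UTC timestamps when pulling trend data, here is a minimal Python sketch of the conversion. It assumes PDT is a fixed UTC-7 offset; the variable names are illustrative only.

```python
from datetime import datetime, timedelta, timezone

# PDT is UTC-7; a fixed offset is used here to keep the sketch self-contained.
PDT = timezone(timedelta(hours=-7), "PDT")

# Saturday-evening trip time quoted in this entry (local time).
trip_local = datetime(2016, 10, 8, 21, 6, 2, tzinfo=PDT)

# Convert to UTC for comparison with UTC-stamped trend data.
trip_utc = trip_local.astimezone(timezone.utc)

print(trip_local.isoformat())
print(trip_utc.isoformat())
```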

PSL_Heads_Flow_2016-10-8.png shows the 4 laser head flow sensors around the time of the trip; head 4 is once again the most likely culprit. As in the plots posted above by Peter for the early Sunday morning trip, head 4 sees a spike in flow rate before falling off to zero. Interestingly, when compared to the actual interlock (PSL_Head4_v_HeadIL_2016-10-8.png), the interlock starts to trip just before the spike in head 4 flow begins. The trip threshold at this time was set to 0.2 lpm, and none of the flow sensors were below it at the time the interlock tripped; I can't explain why the interlock tripped.
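The ordering check described above (did the interlock trip before or after the flow crossed the threshold?) could be sketched in Python roughly as follows. This is only an illustration: the function names are hypothetical, the input lists stand in for trend data exported from the flow and interlock channels, and the numbers in the example are made-up placeholders, not measured values. Only the 0.2 lpm threshold comes from this entry.

```python
TRIP_THRESHOLD_LPM = 0.2  # trip threshold quoted in this entry


def first_index(samples, predicate):
    """Return the index of the first sample satisfying predicate, or None."""
    for i, sample in enumerate(samples):
        if predicate(sample):
            return i
    return None


def trip_vs_flow(times, flow, interlock_ok, threshold=TRIP_THRESHOLD_LPM):
    """Return (time interlock first reads not-OK, time flow first drops below threshold).

    Either element is None if that event never occurs in the data.
    """
    trip_i = first_index(interlock_ok, lambda ok: not ok)
    low_i = first_index(flow, lambda f: f < threshold)
    t_trip = times[trip_i] if trip_i is not None else None
    t_low = times[low_i] if low_i is not None else None
    return t_trip, t_low


# Placeholder data (NOT measured values): the interlock drops at t=1,
# the flow spikes at t=2 and only falls below threshold at t=3.
times = [0, 1, 2, 3, 4]
flow = [0.5, 0.5, 0.9, 0.1, 0.0]
interlock_ok = [True, False, False, False, False]

t_trip, t_low = trip_vs_flow(times, flow, interlock_ok)
print(t_trip, t_low)
```

If the first returned time is earlier than the second, the interlock tripped before the flow reading went under the threshold, which is the puzzling ordering seen in the plot.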

PSL_Head4_v_XChil_2016-10-8.png shows the crystal chiller turning off several seconds after the interlock trips (5 seconds after in this case). I've noted this before (here).

PSL_Head4_v_InletP_2016-10-8.png shows the head 4 flow rate versus the inlet pressure in the PSL water manifold. The oscillation in the pressure occurs after the interlock tripped and has been seen before (noted above by Peter and here).