Let the saga commence...

Jenne called me this morning to say that, while checking the status of the IFO before coming in today, she had noticed the PSL was off. I logged in remotely to confirm and drove out to investigate. I found the laser and the crystal chiller off, the diode chiller still running, and the crystal chiller flow, FE flow, and NPRO OK interlocks all tripped. Trends indicate this happened on 8/28/2016 at 01:19:38 UTC (8/27/2016 at 18:19:38 PDT).

Attempt #1

I turned on the crystal chiller, and it shut off again within 5 seconds, this time with a head 1-4 flow error (H1:PSL-OSC_FLOWERR) interlock trip. The cause was that the flow meter for HPO laser head 4 was not reaching the minimum threshold of 0.4 lpm. I called Peter to consult, and we decided to lower the threshold to 0.2 lpm. I then restarted the crystal chiller and everything came up fine. The flow for head 4 started at ~0.38 lpm and over the next 10 or so seconds increased to 0.51 lpm. After a minute or so it dropped to ~0.46 lpm and then slowly worked its way back up to ~0.53 lpm (maybe something working its way through the laser head 4 water circuit?). I let the chillers run for a few minutes to ensure everything was working properly. Trends indicate that this was NOT the original cause of the PSL trip.

Attempt #2

I then restarted the HPO; everything came up OK, so I let it warm up for a few minutes. After about 7 minutes the laser turned off again, this time with the HPO DB heatsinks overtemp (H1:PSL-OSC_DBHTSNKOVRTEMP) interlock tripped. According to the chiller screen, the water temperature in the diode chiller had slowly risen from its setpoint of 20 °C to ~28 °C, which caused the interlock to trip. When I checked the diode chiller, its front panel was showing an 'F-3' error message. I don't know what this message means, but given that the water had gotten so warm, I'm assuming something in the chiller's cooling system stopped working (fans maybe? F-3 could be fan 3? Will look into it more tomorrow). I had to power cycle the diode chiller to clear the error. After restarting the diode chiller, everything appeared to work fine. Trends indicate that this was also NOT the original cause of the PSL trip.

Attempt #3

I restarted the HPO and let it warm up. The front end laser came up without issue and the system injection locked as expected, so I let everything warm up for a while before activating the PSL stabilization subsystems.

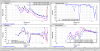

While waiting for everything to warm up, I trended every crystal-chiller-based interlock we monitor around the time of the trip to try to see which one killed the laser first (see first attachment). The only interlocks that tripped were H1:PSL-IL_XCHILFLOW (crystal chiller flow interlock), H1:PSL-AMP_FLOWERR (Front End flow error interlock), and H1:PSL-AMP_NPROOK (NPRO running interlock). They all tripped within the same second, so my best guess at this point is that something happened with the Front End flow that tripped the interlock. What that could be, I'm not sure; trending the humidity over the same period shows no evidence of a water leak.
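The "which interlock tripped first" check can be sketched as below. This is a minimal illustration, not the tool actually used: it assumes the trends have already been exported as time-sorted (time, ok) samples per channel, and the export format, the OK/tripped polarity, and the helper names `first_trip_time` and `trip_groups` are all hypothetical. It also shows why trips landing in the same sample cannot be ordered from the trend alone.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical export format: channel -> time-sorted list of (time_s, ok) samples,
# where ok=True means the interlock is satisfied. Real channels may use the
# opposite polarity (e.g. a FLOWERR flag), so invert those before calling.
Trend = List[Tuple[float, bool]]


def first_trip_time(samples: Trend) -> Optional[float]:
    """Time of the first OK -> tripped transition, or None if the channel never trips."""
    for (_, was_ok), (t, is_ok) in zip(samples, samples[1:]):
        if was_ok and not is_ok:
            return t
    return None


def trip_groups(trends: Dict[str, Trend]) -> List[Tuple[float, List[str]]]:
    """Group tripped channels by first-trip time, earliest first.

    Channels sharing a time tripped within the same sample and cannot be
    ordered from this data alone.
    """
    groups: Dict[float, List[str]] = {}
    for channel, samples in trends.items():
        t = first_trip_time(samples)
        if t is not None:
            groups.setdefault(t, []).append(channel)
    return [(t, sorted(chans)) for t, chans in sorted(groups.items())]


# Synthetic example (not real trend data): two channels trip in the same 1 s sample.
example = {
    "H1:PSL-IL_XCHILFLOW": [(0.0, True), (1.0, True), (2.0, False)],
    "H1:PSL-AMP_FLOWERR": [(0.0, True), (1.0, True), (2.0, False)],
    "H1:PSL-OSC_FLOWERR": [(0.0, True), (1.0, True), (2.0, True)],
}
print(trip_groups(example))
```

Grouping by time, rather than sorting into a single list, avoids implying an order that the sample resolution cannot support.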

While the injection-locked system was warming up, the laser tripped out again, once again with the HPO Head 1-4 Flow Error interlock tripped. Full data trends show no reason for the interlock to trip; none of the channels appear to drop below 0.2 lpm (see 2nd attachment). The MEDM version of the Beckhoff interlock screen also showed that the HPO Diode temp guard interlock had tripped, although the Beckhoff screen itself did not indicate a trip of this interlock and a full data trend also showed no trip. The reason for this discrepancy is unclear at this time.

Attempt #4

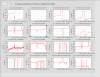

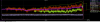

I turned the chiller back on and let it run, all OK. I brought up the HPO (no issues), the front end (also no issues), and turned on the injection locking (also also no issues). While waiting for the system to warm up, I set up a StripTool of the 4 laser head flows and sat and watched it. Less than 10 minutes later the laser tripped out again, with the same HPO Head 1-4 Flow Error interlock tripped. I happened to be looking at the StripTool when it happened and saw the flow for laser head 4 drop, come back up, and then the laser tripped. Zooming in on that spot (see 3rd attachment) shows this. The trace doesn't show the flow dropping below the 0.2 lpm threshold, but I don't think StripTool samples fast enough to catch it. It appears we have an issue with the water circuit for the 4th laser head in the HPO. The humidity trend doesn't indicate a leak, so something is likely going wrong with the flow sensor.
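Why a slow display can miss a real trip can be illustrated with synthetic numbers: a dip shorter than the sampling interval can fall entirely between samples. The flow trace below is hypothetical (steady 0.5 lpm with a 30 ms dip to 0.1 lpm), and the 10 Hz "slow" rate is an assumed stand-in, not a measured property of StripTool or the flow meters; only the 0.2 lpm threshold comes from this log.

```python
from typing import Callable, List

THRESHOLD_LPM = 0.2  # lowered head-flow interlock threshold from this log


def synthetic_flow(t: float) -> float:
    """Hypothetical head-4 flow (lpm): steady 0.5 with a brief 30 ms dip to 0.1 at t = 1.013 s."""
    return 0.1 if 1.013 <= t < 1.043 else 0.5


def sample(signal: Callable[[float], float], rate_hz: float, duration_s: float) -> List[float]:
    """Sample `signal` at evenly spaced times at `rate_hz` for `duration_s` seconds."""
    n = int(duration_s * rate_hz)
    return [signal(i / rate_hz) for i in range(n)]


def min_seen(rate_hz: float, duration_s: float = 2.0) -> float:
    """Lowest flow value a sampler running at `rate_hz` would record."""
    return min(sample(synthetic_flow, rate_hz, duration_s))


for rate in (1000.0, 10.0):
    low = min_seen(rate)
    print(f"{rate:6.0f} Hz: min {low:.2f} lpm, below threshold: {low < THRESHOLD_LPM}")
```

Whether a slow sampler catches such a dip depends on where it falls relative to the sample times; here the dip sits between the 10 Hz samples at 1.0 s and 1.1 s, so only the fast sampler sees the flow go below threshold.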

I've left the laser and the crystal chiller OFF. We will go into the PSL tomorrow and attempt to figure out what the problem is. Please do not try to restart the laser.

As an aside

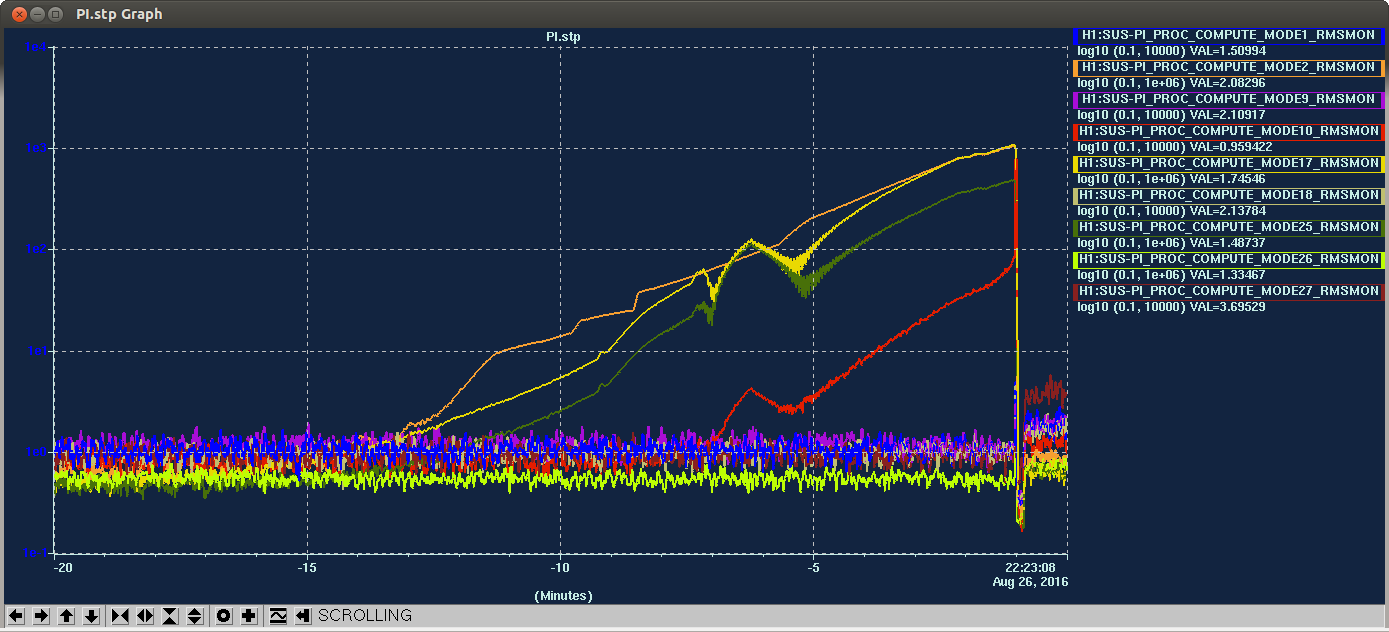

I took the opportunity while the laser was down to watch the current for HPO diode box 3 (DB3). We have had an issue where the reported current draw is 100.2A, which is impossible since the power supplies max out at ~60A (originally reported here and FRS 5955). I watched the reported current for HPO DB3 during the PSL restart to see whether it always reports 100A or whether there is a point in the startup procedure where it changes. See the table below:

| PSL State | HPO DB3 Reported Current Draw (A) |
|---|---|
| HPO off | 0.0 |
| HPO on | 50.2 |
| FE on | 50.2 |
| Injection Locked | 50.2 |

Unfortunately I couldn't get any farther in the startup procedure (see above), but there was no point today where the reported current draw of HPO DB3 was 100A. Will monitor this the next time we restart the laser to see if there's a point where the reported current draw changes.