Daniel and Vern asked for a list of H1 models which use the cdsEzCaRead and cdsEzCaWrite parts to transfer data to or from remote IOCs. This follows my discovery that the h1psliss model is attempting to send data to LLO EPICS channels (channels which do not exist at LLO either, presumably obsolete).

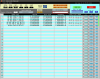

To make the list, I created a list of front-end model core mdl files and grepped within each file (grep -B 2 cdsEzCaWrite */h1/models/${model}|grep ":"), and similarly for cdsEzCaRead.
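For anyone who wants to repeat the search without the shell, here is a minimal Python sketch of the same idea as the grep pipeline above: look at the two lines before each mention of the part and keep anything that looks like a channel name. The function name `channels_near_part` and the sample block layout are made up for illustration; the sample is not a real mdl excerpt, and unlike `grep ":"` the sketch only keeps quoted strings containing a colon.

```python
import re
from collections import deque

def channels_near_part(mdl_text, part="cdsEzCaWrite", before=2):
    """Mimic `grep -B 2 PART file | grep ":"` on a string.

    Returns quoted strings containing ':' found on a line mentioning
    `part` or on the `before` lines preceding it (hypothetical helper).
    """
    recent = deque(maxlen=before)   # sliding window of preceding lines
    found = []
    for line in mdl_text.splitlines():
        if part in line:            # case-sensitive, like plain grep
            for candidate in list(recent) + [line]:
                match = re.search(r'"([^"]*:[^"]*)"', candidate)
                if match and match.group(1) not in found:
                    found.append(match.group(1))
        recent.append(line)
    return found

# Invented sample text, only to exercise the function.
SAMPLE = '''Block {
  BlockType Reference
  Name "H1:PSL-EPICSALARM"
  SID "12"
  Tag "cdsEzCaWrite"
}
Block {
  Name "SOME_FILTER"
  SID "13"
  Tag "cdsFilt"
}
'''

if __name__ == "__main__":
    print(channels_near_part(SAMPLE))
```

Note the match is case-sensitive, so the part name has to be spelled exactly as it appears in the model files.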

cdsEzCaRead:

h1ioppemmx.mdl *

Name "H1:DAQ-DC0_GPS"

h1ioppemmy.mdl *

Name "H1:DAQ-DC0_GPS"

h1sushtts.mdl

Name "H1:LSC-REFL_A_LF_OUTPUT"

h1pslpmc.mdl

Name "H1:PSL-OSC_LOCKED"

h1tcscs.mdl

Name "H1:ASC-X_TR_B_SUM_OUTPUT"

Name "H1:ASC-Y_TR_B_SUM_OUTPUT"

Name "H1:TCS-ETMX_RH_LOWERPOWER"

Name "H1:TCS-ETMX_RH_UPPERPOWER"

Name "H1:TCS-ETMY_RH_LOWERPOWER"

Name "H1:TCS-ETMY_RH_UPPERPOWER"

Name "H1:TCS-ITMX_CO2_LASERPOWER_ANGLE_CALC"

Name "H1:TCS-ITMX_CO2_LASERPOWER_ANGLE_REQUEST"

Name "H1:TCS-ITMX_CO2_LASERPOWER_POWER_REQUEST"

Name "H1:TCS-ITMX_CO2_LSRPWR_MTR_OUTPUT"

Name "H1:TCS-ITMX_RH_LOWERPOWER"

Name "H1:TCS-ITMX_RH_UPPERPOWER"

Name "H1:TCS-ITMY_CO2_LASERPOWER_ANGLE_CALC"

Name "H1:TCS-ITMY_CO2_LASERPOWER_ANGLE_REQUEST"

Name "H1:TCS-ITMY_CO2_LASERPOWER_POWER_REQUEST"

Name "H1:TCS-ITMY_CO2_LSRPWR_MTR_OUTPUT"

Name "H1:TCS-ITMY_RH_LOWERPOWER"

Name "H1:TCS-ITMY_RH_UPPERPOWER"

h1odcmaster.mdl

Name "H1:GRD-IFO_OK"

Name "H1:GRD-IMC_LOCK_OK"

Name "H1:GRD-ISC_LOCK_OK"

Name "H1:GRD-OMC_LOCK_OK"

Name "H1:PSL-ODC_CHANNEL_LATCH"

* Mid-station PEM systems do not have IRIG-B timing, so cdsEzCaRead is used to obtain the starting GPS time remotely.

cdsEzCaWrite:

h1psliss.mdl *

Name "L1:IMC-SL_QPD_WHITEN_SEG_1_GAINSTEP"

Name "L1:IMC-SL_QPD_WHITEN_SEG_1_SET_1"

Name "L1:IMC-SL_QPD_WHITEN_SEG_1_SET_2"

Name "L1:IMC-SL_QPD_WHITEN_SEG_1_SET_3"

Name "L1:IMC-SL_QPD_WHITEN_SEG_2_GAINSTEP"

Name "L1:IMC-SL_QPD_WHITEN_SEG_2_SET_1"

Name "L1:IMC-SL_QPD_WHITEN_SEG_2_SET_2"

Name "L1:IMC-SL_QPD_WHITEN_SEG_2_SET_3"

Name "L1:IMC-SL_QPD_WHITEN_SEG_3_GAINSTEP"

Name "L1:IMC-SL_QPD_WHITEN_SEG_3_SET_1"

Name "L1:IMC-SL_QPD_WHITEN_SEG_3_SET_2"

Name "L1:IMC-SL_QPD_WHITEN_SEG_3_SET_3"

Name "L1:IMC-SL_QPD_WHITEN_SEG_4_GAINSTEP"

Name "L1:IMC-SL_QPD_WHITEN_SEG_4_SET_1"

Name "L1:IMC-SL_QPD_WHITEN_SEG_4_SET_2"

Name "L1:IMC-SL_QPD_WHITEN_SEG_4_SET_3"

h1pslpmc.mdl

Name "H1:PSL-EPICSALARM"

* All writes to L1 channels will be removed on the next restart of the PSL ISS model.

Interestingly, h1tcscs is cdsEzCaRead'ing some of its own EPICS channels. This looks like a copy-paste issue; I'll work with Nutsinee on it when she gets back.
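The two oddities found here (an H1 model addressing L1 channels, and a model reading channels with its own subsystem prefix) could be flagged automatically in a list like the one above. The sketch below is only a heuristic under stated assumptions: `classify_channel` is a hypothetical helper, and it assumes the first two letters of the model name give the IFO and the next three give the subsystem (e.g. h1tcscs -> H1, TCS), which will not hold for every model.

```python
def classify_channel(model, channel):
    """Roughly classify a channel relative to the model that uses it.

    Assumption (not from the RCG): model names look like
    <ifo:2 chars><subsystem:3 chars><rest>, e.g. "h1tcscs".
    """
    ifo = model[:2].upper()          # "h1psliss" -> "H1"
    subsystem = model[2:5].upper()   # "h1tcscs"  -> "TCS"
    chan_ifo, _, rest = channel.partition(":")
    if chan_ifo != ifo:
        return "other-ifo"           # e.g. an L1: channel in an H1 model
    if rest.startswith(subsystem + "-"):
        return "own-subsystem"       # candidate self-read, check by hand
    return "remote"

if __name__ == "__main__":
    examples = [
        ("h1psliss", "L1:IMC-SL_QPD_WHITEN_SEG_1_GAINSTEP"),
        ("h1tcscs", "H1:TCS-ITMX_RH_LOWERPOWER"),
        ("h1tcscs", "H1:ASC-X_TR_B_SUM_OUTPUT"),
    ]
    for model, channel in examples:
        print(model, channel, classify_channel(model, channel))
```

An "own-subsystem" result only means the prefixes match; whether the channel is really served by the same model still needs checking by hand, since several models can share a subsystem prefix.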