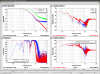

I took advantage of today's maintenance period to investigate the PCalX clipping issue. I dithered the ETM independently in pitch and yaw: leaving yaw in the original 'aligned' position while dithering pitch, and vice versa. See attached pic if you care about the individual data points. In summary, I found that changing ETM yaw had a much greater effect on the power received by the receiver photodiode (RxPD) than changing pitch did. This seems to indicate that we can narrow down our search for clipping to the receiver side of the ETM.

| Configuration | Pitch (urad) | Yaw (urad) | RxPD (Volts) |
|---|---|---|---|
| Original 'aligned' values | -16.0 | 78.8 | 2.090 |
| Greatest power for pitch dither | 150.0 | 78.8 | 2.315 |
| Greatest power for yaw dither | -16.0 | 400.0 | 3.15 |
| Combined dither | 100.0 | 400.0 | 3.16 |
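
To put a number on "much greater effect", here is a minimal sketch that computes the fractional RxPD gain of each configuration relative to the original 'aligned' value. The readings are the ones listed above; the names `rxpd_volts` and `percent_gain` are just illustrative and do not correspond to any channel or tool.

```python
# RxPD readings (Volts) copied from the values in this entry.
ALIGNED_V = 2.090

rxpd_volts = {
    "pitch dither": 2.315,
    "yaw dither": 3.15,
    "combined dither": 3.16,
}


def percent_gain(volts, reference=ALIGNED_V):
    """Percent increase in RxPD voltage relative to the aligned reading."""
    return 100.0 * (volts - reference) / reference


gains = {name: percent_gain(v) for name, v in rxpd_volts.items()}

for name, gain in gains.items():
    print(f"{name}: +{gain:.1f}%")
```

With these numbers the pitch dither recovers roughly 11% more power at best, while the yaw dither recovers roughly 51%, and adding the pitch offset on top of the yaw offset changes very little.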

The next chance we have to go to the end station, we'd like to try adjusting the steering mirrors in the transmitter module again (we've tried this once, but with limited success).