I just received a call from Cheryl in the control room saying the chilled water pump at EY was in alarm. I looked at the screen and chilled water pump #1 had tripped. I started pump 2.

Summary:

Jason worked on the PSL DBB in the morning and various commissioning activities have been going on during the day. Have had it at DC Read Out for last few hours during the afternoon for ISS work.

If winds pick up, Jim asked Commissioners to give him a call to take the End Station ISIs to a certain state.

H1 Locking Notes:

After a lockloss, there were some FSS issues (resolved by toggling Autolocker). Kiwamu also noticed an oscillation with the ISS Diffracted Power (on the plot on the ISS medm, the max/min/mean signals are all separated from each other by quite a bit). To remedy this,

Day's Activities

Since the new 64k to 16k decimation filter of RCG3.0.X has about 12us smaller delay for duotone, I changed two duotone scripts:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Common/MatlabTools/timing/scienceFrameDuotone.m and scienceFrameDuotoneStat.m.

If you specify gps time larger than 1144501217 (Apr/12/2016 13:00:00 UTC) for L1, or 1146322817 (May/03/2016 15:00:00 UTC) for H1, the new decimation filter is used to assess the expected delay in ADC.
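The GPS-time switch described above can be sketched in Python as follows. This is only an illustration of the selection logic, not the MATLAB code in scienceFrameDuotone.m; the function and dictionary names are hypothetical, and only the two GPS thresholds come from this entry.

```python
# GPS times after which the RCG3.0.X decimation filter is assumed,
# as quoted in this entry. The names below are illustrative only.
NEW_FILTER_START_GPS = {
    "L1": 1144501217,  # Apr/12/2016 13:00:00 UTC
    "H1": 1146322817,  # May/03/2016 15:00:00 UTC
}


def uses_new_decimation_filter(ifo, gps_time):
    """Return True if gps_time is larger than the switch-over time for ifo."""
    try:
        start = NEW_FILTER_START_GPS[ifo]
    except KeyError:
        raise ValueError("unknown interferometer: %r" % (ifo,))
    # "larger than" in the entry is read here as a strict comparison.
    return gps_time > start
```

For example, `uses_new_decimation_filter("H1", 1146322818)` selects the new filter, while any O1 GPS time selects the old one.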

For O1 data (first attachment), the old scripts (left) and the new one (right) generate the same results except for a negligible offset of 0.047us. The difference comes from the fact that the decimation delay was hard coded in the old script.

I also confirmed that the scripts generate correct timing information that is consistent with that of O1 (second attachment right).

Ross, Tega

We have been using the new h1omcpi and h1susetmxpi models to look at mechanical modes in OMC DCPD which should be related to parametric instabilities. Only the OMC DCPDA is used, as subtracting the A and B signals seemed to remove the lines from our spectrum, i.e. they must be completely in phase with each other. This signal is being used instead of the QPD transmission signal as it has a much higher SNR for these modes.

Starting in the 64kHz h1omcpi model, the signal (H1:OMC-PI_DOWNCONV_DEMOD_SIG_IN1) is first down converted to DC using a local oscillator set at the frequency of the particular mechanical mode. The I and Q phase signals are then passed via PCIE to the 16kHz h1omc model where they are low passed with a 64Hz corner frequency, multiplexed and sent across to the 16kHz h1pemex model using RFM. The signals are then demuxed and sent to the 64kHz h1susetmxpi model. At this point these signals are low passed again to avoid imaging and then upconverted back to the frequency of the mode. The I and Q phase signals are then summed which effectively leaves the original signal with a bandpass due to the lowpassed signal being upconverted (H1:SUS-ETMX_PI_OMC_UC1). We are then able to use our linetracking tool iwave to track the particular line you are interested in (H1:SUS-ETMX_PI_MODE_OUT).
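The down-convert, low-pass, up-convert chain above can be illustrated offline with a minimal Python sketch. It is not the front-end code. It assumes a 65536 Hz sample rate for the "64kHz" model, uses a single one-pole low pass in place of the two low-pass stages, and leaves out the PCIE/RFM transport and multiplexing. All function names are hypothetical.

```python
import math


def one_pole_lowpass(samples, fs, f_corner):
    """Single-pole IIR low pass (a stand-in for the real filter banks)."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * f_corner / fs)
    out, state = [], 0.0
    for s in samples:
        state += alpha * (s - state)
        out.append(state)
    return out


def iq_bandpass(samples, fs, f_lo, f_corner=64.0):
    """Demodulate at f_lo, low-pass I and Q, then up-convert and sum."""
    w = 2.0 * math.pi * f_lo / fs
    cos_lo = [math.cos(w * n) for n in range(len(samples))]
    sin_lo = [math.sin(w * n) for n in range(len(samples))]
    # Down-conversion: the mode near f_lo moves to near DC in I and Q.
    i_lp = one_pole_lowpass([s * c for s, c in zip(samples, cos_lo)], fs, f_corner)
    q_lp = one_pole_lowpass([s * c for s, c in zip(samples, sin_lo)], fs, f_corner)
    # Up-conversion and sum; the factor 2 restores the original amplitude.
    return [2.0 * (i * c + q * s)
            for i, q, c, s in zip(i_lp, q_lp, cos_lo, sin_lo)]


def rms(samples):
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def tone(freq, fs, n):
    return [math.cos(2.0 * math.pi * freq * k / fs) for k in range(n)]
```

A tone a few Hz away from the local oscillator passes through almost unchanged, while a tone about 2 kHz away is strongly attenuated. This is the effective band pass that results from summing the up-converted, low-passed I and Q signals.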

This process was used to track a selection of lines around 15000 Hz which should be related to some of the mechanical modes. The hope is that we can now use the iwave tracked signals to damp these modes using the ESD, assuming we know which lines relate to each test mass.

Slightly more details:

A little further investigation shows that the power deviation during the second set of measurements started at the 58th measurement, where 19 W jumped to nearly 20 W and 54 W jumped to 56 W (first attachment). This jump did not correspond to the measured angles, which stayed consistent throughout the measurement (second attachment). The third attachment shows the histogram of power for the first set of measurements (going back and forth between 0W and 3W), which didn't appear to have any power jump.

Richard changed PID settings on CP 5 & 6 today. Current settings:

CP5 Gain: 7 Integral: 460 Set Pt: 90
CP6 Gain: 6 Integral: 360 Set Pt: 88

Set points are set lower than the nominal 92% so PID experiments can continue Monday.

1:30pm local 1/2 turn open on LLCV bypass --> took 33:17 min. to overfill CP3. Raised LLCV from 20% to 21%.

Kiwamu, Darkhan,

We updated the ETMY L1 stage (UIM) compensation filters FM2, FM3, FM4, FM7, FM8, FM9 of ETMY_L1_ESDOUTF_{UL,LL,UR,LR} with zeros and poles more accurately fitted to the most recent measurements by Jeff K. from ER8 (LHO alogs 20846, 21283). We have not updated the acquisition circuit zero-pole pair, because the measurements do not include the response of this circuit (for details see LHO alog 21142).

We've also updated the ETMY L2 stage (PUM) compensation filters FM1, FM2, FM3, FM6, FM7, FM8 of ETMY_L2_ESDOUTF_{UL,LL,UR,LR} to their fitted values (LHO alogs 20846, 21232).

Python scripts were used to load the new values into the Foton file. The scripts are based on Kiwamu's script for updating filters in the L3 stage (ESD) (LHO alog 27150). The scripts are committed to CalSVN at

Runs/PreER9/H1/Scripts/SUS/setH1SUSETMY_UIMDriver_compensations.py

Runs/PreER9/H1/Scripts/SUS/setH1SUSETMY_PUMDriver_compensations.py

Notes for CAL FOLKS:

J. Kissel, K. Izumi, D. Barker

Dave noticed that these changes to the foton filter file were never pushed to the H1SUSETMY front-end with a LOAD COEFFICIENTS, so we've loaded them just now. While at it, we've made the necessary update to the CAL-CS actuation compensation, which involved simply turning OFF (and subsequently deleting to avoid future confusion) FM6 of the H1:CAL-CS_DARM_ANALOG_ETMY_L2 and H1:CAL-CS_DARM_ANALOG_ETMY_L3 filter banks called "mis_coil" that were originally installed before O1 (see LHO aLOG 21275).

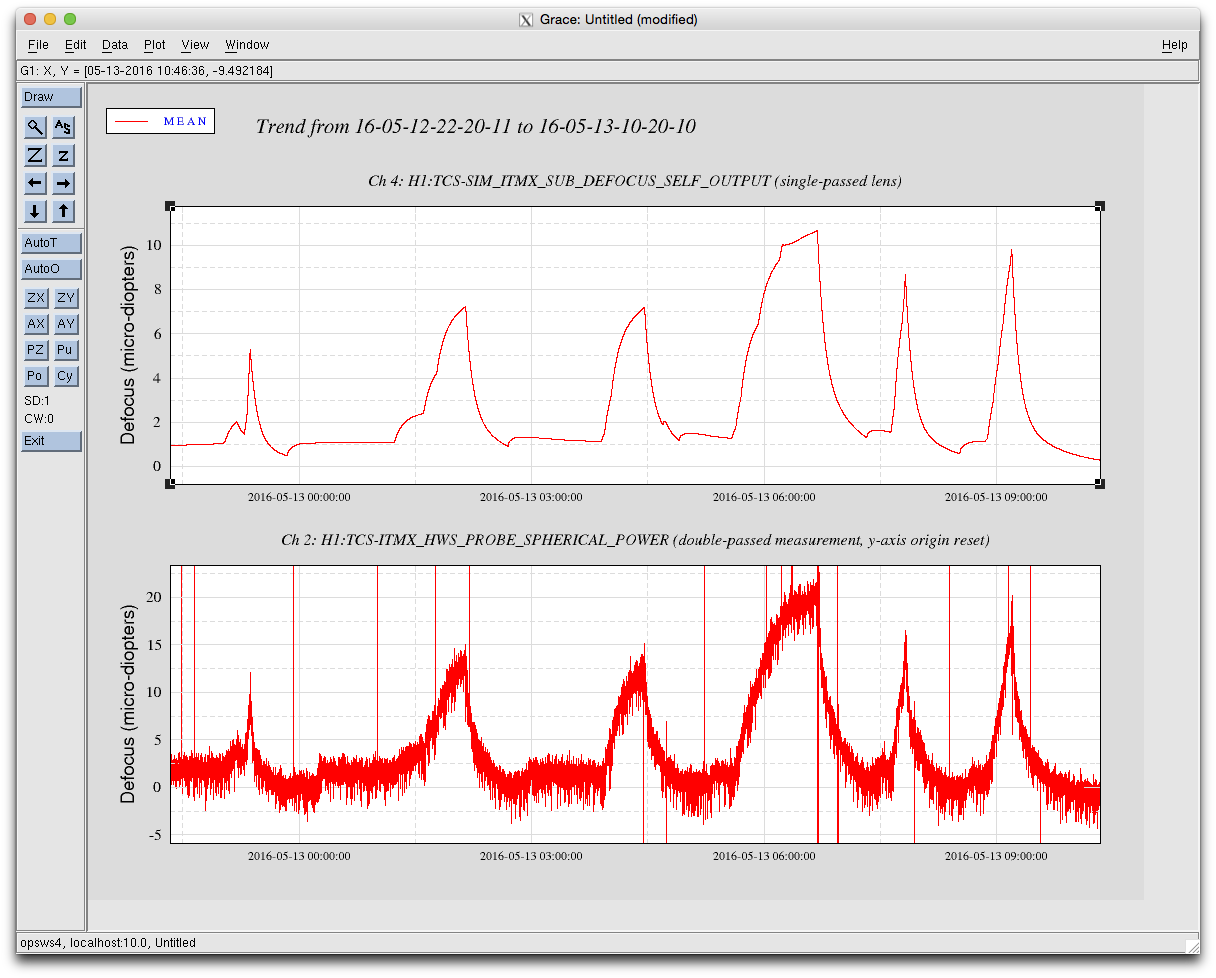

I checked the output of the HWS vs the expected thermal lens from the online simulation code. The results are posted below. As you can see the time series agree very well with each other, certainly within the error bars of the HWS measurement ... at least just looking in dataviewer. (I rescaled the time series from diopters into micro-diopters and reset the HWS value to match the SIM value at t=0).

The HWS measurement is double-passed through the lens, while the simulation predicts the single-pass value; hence the factor of 2 difference between them.
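The rescaling used for this comparison can be sketched in Python as below. This is a hedged illustration, not the actual dataviewer procedure. It assumes both time series start in diopters, that the double-pass HWS value is halved to compare against the single-pass simulation, and that the offset is fixed from the first sample (t=0). The function name and the `double_pass` flag are hypothetical.

```python
def align_hws_to_sim(hws_diopters, sim_diopters, double_pass=True):
    """Return (hws, sim) in micro-diopters with hws shifted to match sim at t=0."""
    # Assumption: halve the double-passed HWS value to get a single-pass lens.
    scale = 1e6 * (0.5 if double_pass else 1.0)
    hws_ud = [v * scale for v in hws_diopters]
    sim_ud = [v * 1e6 for v in sim_diopters]
    # Reset the HWS value so that it equals the SIM value at the first sample.
    offset = sim_ud[0] - hws_ud[0]
    return [v + offset for v in hws_ud], sim_ud
```

Setting `double_pass=False` leaves the factor of 2 between the two series in place.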

The HWSY data is extremely noisy and shows almost no correlation with the simulation data.

The circulation fan on the staging building chiller is broken. The bearing support on the drive side has fractured due to metal fatigue. The circulation fan on this unit has been an ongoing issue for some time because of a poor design originally. I have removed the fan and the part of the housing that supports the above mentioned bearing. This fan typically runs continually to ensure a positive pressure in the staging building. I have the booster fan running so there is still adequate positive pressure in the building. The AAON unit is running and keeping the building at a reasonable temperature given the current outdoor conditions. One of the two condenser fans has also stopped operating. This chiller only affects the assembly area and the upstairs area excluding the mezzanine lab.

Tega, Ross, Dave:

We rebuilt and restarted the h1susitmpi and h1susetmxpi models. They now use the iwave light function in IWAVE.c to see if some CPU time can be recovered. We found that h1susitmpi is still running long (12-17us) when the code is unbypassed for all 16 nodes. Tega is looking to see if parts can be removed from the model, and we'll also test this on a faster computer on the DTS.

I made a new version of the Matlab iopdownsamplingfilters.m script for use by the calibration group to apply the RCGv3.0.2 digital AA filter. The new file for use is stored at:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O2/DARMmodel/Scripts/iopdownsamplingfilters.m

Minor adjustments were made to the h1dustlvea EPICS database file to try to solve some oddities in the raw count channels. The h1dustlvea IOC was restarted several times; we are done for now and it should run over the weekend. Also killed the h1dustpsl IOC as it's no longer connected to dust monitors. The PSL dust monitors were connected to the LVEA network last week, and are now controlled by the h1dustlvea IOC.

I also uncommented TCS related lines in DOWN (Ln 417, 418) and COIL_DRIVERS (ln 2755, Ln 2756)

It looks like we need to do something about the triple coil drivers that we switch in lock, especially PRM M3. We have lost lock a good fraction of the times that we have tried to switch in the last month or so. Screenshot is attached, I also filed an FRS ticket hoping that someone might make an attempt to tune the delays while people are in the PSL tomorrow morning. FRS ticket #5489

Is the DAC spectrum post-switch using up a large fraction of the range? If the noise in the PRC loop has changed a little bit it could make this transition less risky.

Here's the PRM drive from last night's lock, in which we just skipped the PRM M3 BIO switching, leaving the low pass off (BIO state 1). It seems like we should have plenty of room to turn the low pass on without saturating the DAC.

FRS Ticket #5489 closed in favor of a long-term fix Integration Issue #5506 (which is now also in the FRS system) and for an eventual ECR. Work permit #5880 indicates we'll install the fix tomorrow. See LHO aLOG 27223.

I forgot to de-energize the Pfeiffer turbo controller sitting on the floor -> someone from the Vacuum group can do this the next time they are in the LVEA.

Done

C. Cahillane

I have generated corner plots of the time-dependent kappas to check their covariance. There are six kappa values:

1) Kappa_tst Mag
2) Kappa_tst Phase [Rads]
3) Kappa_pu Mag
4) Kappa_pu Phase [Rads]
5) Kappa_C
6) Cavity Pole F_CC

We do get some values of covariance that are comparable with variance... However, all values, including variance, are sub-percent. I anticipate that if propagated into the uncertainty budget, all covariant terms would be negligible. We cannot be sure, however, without doing the work.

For the LLO Kappa Triangle Plots, please see LLO aLOG 26134

J. Kissel, C. Cahillane

A couple of notes from a discussion between Craig and me about these results:

(1) The "Var" and "Covar" results reported on the plots are actually the square root of the respective element in the covariance matrix, multiplied by the sign of the element. Thus the reported "Var" and "Covar" value is directly comparable to the uncertainty typically reported, but the off-diagonal elements retain the sign information of the covariance.

(2) Lest ye be confused -- the (co)variances (which are actually uncertainty values) are reported at the *top* of each sub-plot, and the uppermost variance value (for |k_TST|) is cut off. The value is 0.00234.

(3) In order to compute the statistical uncertainty of these time-dependent systematic errors for the C02 uncertainty, Craig computed a running median (where the width of the median range was 50 once-a-minute data points; +/- 25 points surrounding the given time's data point) over the entire O1 time series, subtracted that median from every given data point, and took the standard deviation of the residual time-series, assuming that what remains is representative of the Gaussian noise on the measurement. See original results in LHO aLOG 26580, though that log reports a range of 100 (+/- 50) once-a-minute points, which has since been changed to the above-mentioned +/- 25 points for a more physical time-scale (but only changes the results at the 5% level of the already-reported ~0.3-0.5% uncertainty, i.e. the reported relative uncertainty of 0.002 changes by 0.00005).

For these plots, he went a step further and computed the full covariance matrix on all time-dependent factors in order to address the question "is there any covariance between the statistical uncertainties, given that they're computed using -- in some cases -- the same calibration line?" In doing so, he'd first taken the exact same median-removed time-series for each time-dependent factor, but found that the data set (i.e. the 2D and 1D histograms) was artificially biased around zero. We know now this was because, often, the median *was* the given time's value and the residual was zero. Thus, instead, he recomputed the running median, disallowing the given time's value to be used in the median calculation. Graphically,

x x x x x x x x x x x x x x x x x x x x x x x x x x
[------------\_/-------------]

x      Raw data
\_/    Given time's central value to which a median will be assigned and subtracted.
[---]  Median Window

As one can see in his attached plots, that successfully de-biases the histogram.

(4) The following time-dependent systematic error uncertainties *are* indeed covariant:

(a) kappa_TST with kappa_PU (in magnitude and phase) -- the actuation strength of the PUM and TST mass stages.
(b) kappa_C with f_cc -- the IFO optical gain and cavity pole frequency

We know and expect (b), as has been shown in Evan's note T1500533. What's more is that it's *negatively* covariant, so the estimation of the uncertainty we have thus far been making -- ignoring the covariant terms -- is actually an overestimate, and all is "OK." We also expect (a), because of how the two terms are calculated (see T1500377) and because they're also calculated from the same pair of lines. Sadly, we see that sqrt(covariance) is *positive* and of comparable amplitude to the sqrt(variance). But -- said a little more strongly than Craig suggests -- for these terms that have positive covariance comparable to the variance, the absolute value of both variance and covariance corresponds to an uncertainty (i.e. sqrt(variance)) on the 0.3-0.5% level, which is much smaller than other dominant terms in the uncertainty, as can be seen for example in pgs 3 and 4 of the attachment "05-Jan-2016_LHO_Strain_Uncertainty_C03_All_Plots.pdf" in LHO aLOG 24709.

As such, we deem it sufficient to continue to ignore all covariant terms in the statistical uncertainty of these time-dependent parameters because they're either negative or, where positive, contribute a negligible amount to the overall statistical uncertainty budget compared with other, static statistical uncertainty terms.
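The two computations described in notes (1) and (3) can be sketched in Python as follows. This is only an illustration under stated assumptions, not Craig's analysis code. The function names are hypothetical, the window is simply truncated at the ends of the series (the log does not say how edges were handled), and the covariance uses the usual sample (n-1) normalization.

```python
import math
from statistics import median


def running_median_excluding_center(values, half_width=25):
    """Median of up to +/- half_width neighbours, never using the point itself.

    Needs at least two data points so that every window is non-empty.
    """
    n = len(values)
    out = []
    for i in range(n):
        lo = max(0, i - half_width)
        hi = min(n, i + half_width + 1)
        window = values[lo:i] + values[i + 1:hi]  # central value left out
        out.append(median(window))
    return out


def median_removed(values, half_width=25):
    """Residual time series after subtracting the running median."""
    med = running_median_excluding_center(values, half_width)
    return [v - m for v, m in zip(values, med)]


def signed_sqrt(element):
    """sqrt(|element|) carrying the sign of the covariance-matrix element."""
    return math.copysign(math.sqrt(abs(element)), element)


def signed_sqrt_covariance(series_a, series_b):
    """Signed square root of the sample covariance of two residual series."""
    n = len(series_a)
    mean_a = sum(series_a) / n
    mean_b = sum(series_b) / n
    cov = sum((a - mean_a) * (b - mean_b)
              for a, b in zip(series_a, series_b)) / (n - 1)
    return signed_sqrt(cov)
```

Calling `signed_sqrt_covariance` with the same series twice gives the ordinary standard deviation, which corresponds to the "Var" entries on the diagonal of the plots.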

00:51:52UTC - pump 1 shuts off, temperatures climb

01:13:24UTC - pump 2 turned on, temperatures take a small dip, but continue to climb

02:03:32UTC - pump 1 turned on again, temperatures for chilled water start to fall

02:15:35UTC - EY VEA temperature starts to fall, after reaching a peak of 69.5F, from an average of 68F

02:27:15UTC - EY VEA temperature reaches 68.1F, and chilled water supply/return have leveled out at 43F/48F