Craig, Sheila, Jenne, Travis

There is still at least one problem with the rotation stage: sometimes it goes the wrong way when it first starts moving.

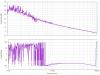

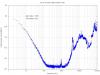

The attached screenshot shows our first attempt at increasing the power since the vent. Before the rotation stage starts moving, its request and velocity are correctly set to 22 Watts and 10, respectively. When the rotation stage starts moving, you can see a drop in the input power (which happens faster than the normal rotation stage motion). The guardian lowers the normalization (PSL-POWER_SCALE_OFFSET) to compensate for this, but we lose lock anyway. After the lockloss, the rotation stage proceeds at a reasonable pace to 20 Watts, and the normalization follows correctly.

This is not a new bug (we've had random locklosses like this for some time), but it remains after last week's rotation stage fix.
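One way to flag this symptom automatically would be to check whether the input power first moves away from the requested value. This is a hypothetical sketch: `starts_wrong_way` and its `threshold` are not part of the existing PSL guardian, and it assumes the input power has already been fetched as a plain list of samples (in Watts) starting at the moment the rotation stage begins to move.

```python
from typing import Sequence


def starts_wrong_way(power: Sequence[float], target: float, threshold: float = 0.5) -> bool:
    """Hypothetical check: True if the first excursion of at least `threshold`
    Watts away from the starting power goes opposite to the requested change."""
    if not power:
        raise ValueError("need at least one power sample")
    start = power[0]
    wanted = target - start
    if wanted == 0:
        return False  # no move requested, so there is no "wrong way"
    for p in power[1:]:
        step = p - start
        # Ignore small wiggles; decide on the first excursion above threshold.
        if abs(step) >= threshold:
            return (step > 0) != (wanted > 0)
    return False  # never moved far enough to judge
```

For example, a made-up trace that dips from 2 W to 1.4 W before climbing toward a 22 Watt request would be flagged, while one that rises steadily would not. The threshold keeps small noise at the very start of the move from deciding the answer.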

[Travis, Craig, Jenne, Ross]

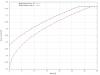

Somehow, using the faster rotation stage velocity (RS_VELOCITY=100) causes trouble: when we change the laser power after having used the fast velocity, the laser power dips before increasing. So we've changed the PSL power guardian so that the "fast" velocity is the same as the "slow" velocity, RS_VELOCITY=10. This seems to have fixed things.

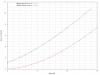

It seems like the rotation stage still has the problem that the minimum power angle drifts. Tonight we saw it change by 2 degrees after the stage had been rotated several times, and then change again after only one rotation.
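To get a feel for why a drifting minimum power angle matters, here is a rough estimate. It assumes the stage rotates a half-wave plate in front of a fixed polarizer, so that the transmitted power goes as P(theta) = P_min + (P_max - P_min) * sin^2(2*(theta - theta_min)). The helper names and the 50 W / 0 W / 22 W numbers below are illustrative assumptions, not the actual PSL calibration; the point is only that if theta_min moves but the stage is still commanded from the old calibration, the delivered power is off.

```python
import math


def power_at_angle(theta_deg: float, theta_min_deg: float, p_min: float, p_max: float) -> float:
    """Assumed model: half-wave plate at theta_deg ahead of a fixed polarizer."""
    x = math.radians(2.0 * (theta_deg - theta_min_deg))
    return p_min + (p_max - p_min) * math.sin(x) ** 2


def angle_for_power(p_req: float, theta_min_deg: float, p_min: float, p_max: float) -> float:
    """Invert the model on the branch from theta_min to theta_min + 45 degrees."""
    frac = (p_req - p_min) / (p_max - p_min)
    if not 0.0 <= frac <= 1.0:
        raise ValueError("requested power outside [p_min, p_max]")
    return theta_min_deg + 0.5 * math.degrees(math.asin(math.sqrt(frac)))


def power_error_from_drift(p_req: float, drift_deg: float, theta_min_deg: float,
                           p_min: float, p_max: float) -> float:
    """Delivered minus requested power when the true minimum angle has moved
    by drift_deg but the stage is commanded using the old theta_min."""
    theta_cmd = angle_for_power(p_req, theta_min_deg, p_min, p_max)
    p_actual = power_at_angle(theta_cmd, theta_min_deg + drift_deg, p_min, p_max)
    return p_actual - p_req


if __name__ == "__main__":
    # Hypothetical numbers: 50 W maximum, 0 W minimum, 22 W request, 2 degree drift.
    err = power_error_from_drift(22.0, 2.0, 0.0, 0.0, 50.0)
    print(f"delivered {22.0 + err:.2f} W for a 22 W request")
```

With these hypothetical numbers, a 2 degree drift delivers about 3.4 W less than requested. Because the slope of power versus angle depends on where the request sits on the sin^2 curve, the size of the error would change with the requested power.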