Michael, Krishna, Hugh, Jeff

This morning, we removed the piezo stacks from under the BRS-2 platform and then turned on the ion pump. The pump current initially rose to 20 mA and slowly dropped to ~300 microamps over ~1 hr, after which we disconnected the pump station. When we checked about 4 hrs later, the pump current was ~200 microamps (P ~ 1e-6 torr) and still dropping steadily.

Michael and I then hooked up the rest of the electronics, wrapped the vacuum can in several sheets of foam, and finally set up the thick foam box around the instrument.

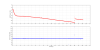

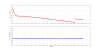

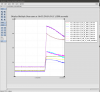

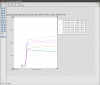

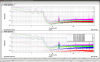

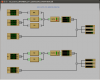

Hugh had set up the EY_GND MEDM screen showing the BRS-2 data coming into the ISI front ends (similar to EX_GND). After we corrected a cable connection mistake, the BRS-2 angle data are now coming into CDS. We are currently getting the following channels into the ISI AA chassis:

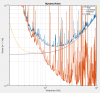

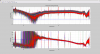

ADC0, channel 27: Raw Tilt Signal : Calibration: to be worked out soon. This is a high-passed version of the raw angle measured by the autocollimator (2-pole high-pass at ~0.5 mHz).
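To get a feel for what the 2-pole high-pass at ~0.5 mHz does to the Tilt channel, here is a minimal sketch of its magnitude response. It assumes both poles are real and sit at the same corner frequency f0 = 0.5 mHz, i.e. H(s) = (s/w0)^2 / (1 + s/w0)^2; the actual filter topology in the BRS-2 electronics is not stated here, so treat the numbers as illustrative only.

```python
import math

# Assumed corner frequency: both poles at ~0.5 mHz (an assumption, not a measured value).
F0_HZ = 0.5e-3


def highpass_2pole_mag(f_hz: float, f0_hz: float = F0_HZ) -> float:
    """Magnitude of the assumed H(s) = (s/w0)^2 / (1 + s/w0)^2 at s = j*2*pi*f.

    With x = f/f0, |1 + j*x|^2 = 1 + x^2, so |H| = x^2 / (1 + x^2).
    """
    x = f_hz / f0_hz
    return x * x / (1.0 + x * x)


if __name__ == "__main__":
    # Print the response one decade below, at, and above the corner frequency.
    for f in (0.05e-3, 0.5e-3, 5e-3, 50e-3):
        mag = highpass_2pole_mag(f)
        print(f"{f * 1e3:7.2f} mHz: |H| = {mag:.4f} ({20 * math.log10(mag):6.1f} dB)")
```

Under this assumption the channel is down by about 6 dB at the corner, passes essentially everything above ~5 mHz, and suppresses slow drifts one decade below the corner by roughly 40 dB.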

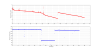

ADC0, channel 28: Drift Signal : Calibration: 58.33 nrad/ct. This is a scaled-down version of the raw angle signal.

ADC0, channel 29: Ref Signal : Calibration: to be worked out soon. This is the angle of the reference mirror, which is useful as a measure of the autocollimator noise. Unlike on BRS-1, this signal has a higher noise floor and is useful only at very low frequencies, below ~5 mHz.

ADC0, channel 30: Status Signal : This is currently zero. The plan is to use this as an indication of the health of BRS-2.
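For anyone scripting against these signals, the channel list above can be kept as a small lookup table so that only calibrated channels get converted to angle. This is a hypothetical sketch: the names `BrsChannel`, `BRS2_ADC0` and `counts_to_nrad` are made up for illustration, and the CDS channel names are not given here. The only calibration it uses is the 58.33 nrad/ct quoted for the Drift channel; the others are left as `None` until they are worked out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BrsChannel:
    """One BRS-2 signal on ADC0 of the ISI AA chassis (hypothetical helper type)."""
    adc_channel: int
    name: str
    # nrad per count; None where the calibration has not been worked out yet.
    nrad_per_count: Optional[float]


# Hypothetical table built from the channel list in this entry.
BRS2_ADC0 = {
    27: BrsChannel(27, "Raw Tilt", None),
    28: BrsChannel(28, "Drift", 58.33),
    29: BrsChannel(29, "Ref", None),
    30: BrsChannel(30, "Status", None),  # health flag, not an angle; currently zero
}


def counts_to_nrad(adc_channel: int, counts: float) -> float:
    """Convert raw ADC counts to nrad, refusing channels without a calibration."""
    ch = BRS2_ADC0[adc_channel]
    if ch.nrad_per_count is None:
        raise ValueError(f"ADC0 ch {adc_channel} ({ch.name}) has no calibration yet")
    return counts * ch.nrad_per_count


if __name__ == "__main__":
    # 1000 counts on the Drift channel is roughly 58330 nrad (about 58.3 urad).
    print(counts_to_nrad(28, 1000))
```

Raising on uncalibrated channels keeps a placeholder calibration from silently propagating into plots; once the Tilt and Ref calibrations are known, only the table needs updating.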

The Tilt channel is currently noisy and we will investigate further tomorrow.