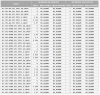

H1 has 30 sender RFM channels on each arm, of which only 26 have corresponding receiver(s). So 4 are being sent and no model is using the data.

L1 has 29 senders on the X-ARM (of which there are 26 receivers), and 30 senders on the Y-ARM (of which there are 26 receivers).

So the two sites are very close in number of sending channels.

Analysis details: The base number of potential senders was derived from the main IPC file, looking for RFM0 and RFM1 ipc types. This resulted in 30 for H1-X and H1-Y, and 42 for L1-X and L1-Y. Because the ipc file is only appended to during compilation, if it has not been cleanly regenerated recently it may overcount the number of sending channels.

For each channel, I searched the models' RCG generated IPC_STATUS.adl medm file for the channel name (e.g. H1LSC_IPC_STATUS.adl). Assuming that no two ipc channels share the same name, if I found the channel name in the adl file this means it is a running sender with a receiver. For the remaining possible senders without receivers (H1-X=4, H1-Y=4, L1-X=16, L1-Y=16) I looked for the channels in the top level simulink source files (e.g. /opt/rtcds/userapps/release/*/l1/models/l1*.mdl). This showed that all four channels on H1-X and H1-Y do have sending models, and for L1-X 3 of the 16 had sending models, and for L1-Y 4 of the 16 had sending models.

If we can possibly remove some of the RFM channels which are not being received, additional RFM channels can be added to the loop with no risk.

For H1 the sending channels with no receivers are:

-

H1:OAF-PEME[X,Y]_RFM

-

H1:ASC-[X,Y]_TR_A_SUM_RFM

-

H1:ALS-[X,Y]_ARM_RFM

-

H1:ALS-[X,Y]_REFL_B_LF_RFM

Update as of 21:12UTC (13:12PT)