Kiwamu, Nutsinee

------------------------------------------------------------------------------------------------------------------------------

Code added to lines 115-136:

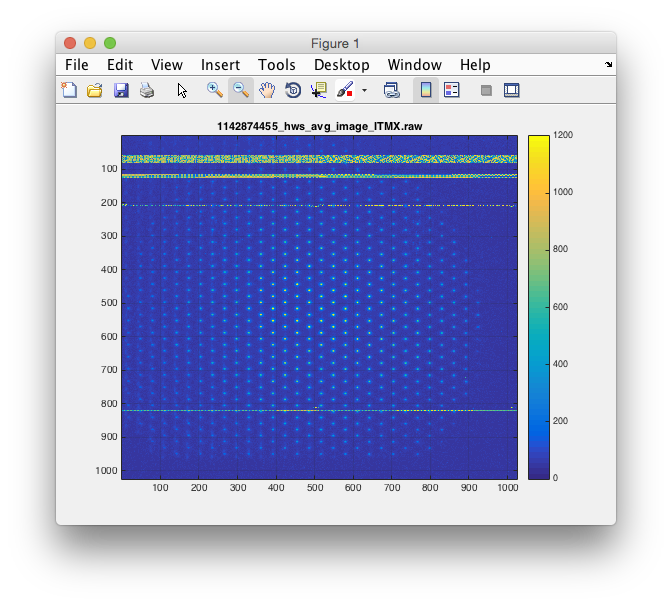

The first test checks whether the peak counts measured by the HWS code make sense. At or below 800, there is not enough returning SLED beam. At or above 4096, the value exceeds the theoretical maximum for 12-bit data, so something is wrong.
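The range check can be tried offline as a pure function, without ezca. This is a minimal sketch; the names `peak_counts_ok` and `buggy_check_flags` are hypothetical. It also shows why writing the condition as `not (x > 800) and (x < 4096)` misbehaves: Python parses it as `(not (x > 800)) and (x < 4096)`, so only low values are ever flagged.

```python
# Sketch of the HWS peak-count range check (hypothetical helper names).

LOW_COUNTS = 800     # at or below this: not enough return SLED light
HIGH_COUNTS = 4096   # 2**12; 12-bit data cannot reach this value


def peak_counts_ok(counts, low=LOW_COUNTS, high=HIGH_COUNTS):
    """Return True if counts lies strictly between low and high."""
    return low < counts < high


def buggy_check_flags(counts):
    """The precedence pitfall: `not` applies only to the first comparison."""
    return not (counts > LOW_COUNTS) and (counts < HIGH_COUNTS)


if __name__ == "__main__":
    for c in (500, 2000, 5000):
        # For 5000 the buggy form returns False, so a too-high value
        # would never be reported.
        print(c, peak_counts_ok(c), buggy_check_flags(c))
```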

The second test checks whether the HWS code's timestamp agrees with the current GPS time. If |dt| > 15 seconds, the code has most likely stopped running.
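The timestamp test splits into two small steps: parse the output of `tconvert now`, then compare it with the HWS acquisition time. Here is a minimal sketch under those assumptions; `parse_gps_output` and `hws_code_stalled` are hypothetical names, and the GPS values in the usage lines are made up. Parsing handles both `bytes` (Python 3 subprocess output) and `str`, which avoids calling `.strip('\n')` on bytes with a str argument.

```python
# Sketch of the HWS timestamp staleness check (hypothetical helper names).

MAX_LAG_S = 15.0  # seconds, the threshold used in the SYSDIAG test


def parse_gps_output(raw):
    """Convert tconvert stdout (bytes or str, e.g. b'1165000000\\n') to float."""
    if isinstance(raw, bytes):
        raw = raw.decode()
    return float(raw.strip())


def hws_code_stalled(now_gps, hws_gps, max_lag=MAX_LAG_S):
    """True if the HWS timestamp differs from now by more than max_lag seconds."""
    return abs(now_gps - hws_gps) > max_lag


if __name__ == "__main__":
    now = parse_gps_output(b"1165000016\n")   # made-up tconvert output
    print(hws_code_stalled(now, 1165000000.0))
```

Using the absolute difference means a timestamp that runs ahead of the current time by more than 15 s is flagged too, which would point to a clock problem rather than a stopped code.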

------------------------------------------------------------------------------------------------------------------------------

```python
# Add at the top of the module if not already imported
import subprocess
import numpy as np

# SYSDIAG and ezca are assumed to be provided by the surrounding guardian module
@SYSDIAG.register_test
def TCS_HWS():
    """Check HWS peak counts and that the HWS code is still running."""
    # Make sure the peak counts are in a sensible range
    pkcnts_X = ezca['TCS-ITMX_HWS_TOP100_PV']
    pkcnts_Y = ezca['TCS-ITMY_HWS_TOP100_PV']

    # If at or below 800: not enough return SLED light
    # If at or above 4096: above the theoretical maximum (12 bit), something's wrong
    # Negate the whole range test; `not (a) and (b)` would negate only (a)
    if not (800 < pkcnts_X < 4096):
        yield "HWSX Peak Counts BAD!!"
    if not (800 < pkcnts_Y < 4096):
        yield "HWSY Peak Counts BAD!!"

    # Check if HWS GPS time agrees with current GPS time
    gpstime = subprocess.check_output(['tconvert', 'now'])
    gpsfloat = float(gpstime.decode().strip())

    # Fetch HWS GPS time
    liveGPS_X = ezca['TCS-ITMX_HWS_LIVE_ACQUISITION_GPSTIME']
    liveGPS_Y = ezca['TCS-ITMY_HWS_LIVE_ACQUISITION_GPSTIME']

    if np.abs(gpsfloat - liveGPS_X) > 15:
        yield "HWSX Code Stopped Running"
    if np.abs(gpsfloat - liveGPS_Y) > 15:
        yield "HWSY Code Stopped Running"
```
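To exercise the same logic away from the control room, the channel reader and the current GPS time can be passed in instead of coming from the global `ezca` and `tconvert`. This is a hypothetical refactor, not the code in the diag module: `check_hws`, `PV_CHANNELS` and `GPS_CHANNELS` are illustrative names, and the readings are made up. It yields messages in the same order as `TCS_HWS` (peak counts X, Y, then timestamps X, Y).

```python
# Hypothetical, testable variant of TCS_HWS with injected dependencies.

PV_CHANNELS = {"X": "TCS-ITMX_HWS_TOP100_PV", "Y": "TCS-ITMY_HWS_TOP100_PV"}
GPS_CHANNELS = {
    "X": "TCS-ITMX_HWS_LIVE_ACQUISITION_GPSTIME",
    "Y": "TCS-ITMY_HWS_LIVE_ACQUISITION_GPSTIME",
}


def check_hws(read, now_gps, low=800, high=4096, max_lag=15):
    """Yield one message per failed check; yield nothing if all checks pass."""
    arms = ("X", "Y")
    # Peak-count checks first (X, then Y)
    for arm in arms:
        if not (low < read(PV_CHANNELS[arm]) < high):
            yield "HWS%s Peak Counts BAD!!" % arm
    # Then the timestamp checks (X, then Y)
    for arm in arms:
        if abs(now_gps - read(GPS_CHANNELS[arm])) > max_lag:
            yield "HWS%s Code Stopped Running" % arm


if __name__ == "__main__":
    # Made-up readings: Y has low counts and a stale timestamp
    fake = {
        "TCS-ITMX_HWS_TOP100_PV": 2000,
        "TCS-ITMX_HWS_LIVE_ACQUISITION_GPSTIME": 1165000000,
        "TCS-ITMY_HWS_TOP100_PV": 300,
        "TCS-ITMY_HWS_LIVE_ACQUISITION_GPSTIME": 1164999900,
    }
    for msg in check_hws(fake.__getitem__, now_gps=1165000005):
        print(msg)
```

Since the original reads channels by indexing `ezca`, a reader such as `lambda ch: ezca[ch]` should plug in the live values; that pairing has not been tested here.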