svn up at .../SusSVN/sus/trunk/QUAD/Common/MatlabTools/QuadModel_Production/

Updates:

1) Added an option for optical lever damping that actuates at the PUM (L2) stage. Like top mass damping, this can be imported from the sites, or added in locally.

2) Added options for violin modes at all stages. Previously this was only available for the fibers. You can choose the number of modes at each stage; it does not have to be the same number for every stage.

3) Added an option to load damping from a variable in the MATLAB workspace. Previously this could only be done from a saved file or imported from the sites.

Detailed instructions for generate_QUAD_Model_Production.m are given in the header comments. See G1401132 for a summary of the features, and some basic instructions on running the model.

I am tagging this to the svn now as

quadmodelproduction-rev7995_ssmake4pv2eMB5f_fiber-rev3601_h1etmy-rev7915_released-2016-03-01.mat

...the file is large (386 MB) so it is slow to upload.
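Since the file is large, it can be handy to list its contents before loading it. A minimal sketch, assuming the tagged .mat file has been checked out and is in the current MATLAB directory (the location is an assumption; the variable names inside the file are not listed in this entry, so nothing is assumed about them):

```matlab
% Assumption: the tagged file sits in the current directory or on the MATLAB path.
modelFile = 'quadmodelproduction-rev7995_ssmake4pv2eMB5f_fiber-rev3601_h1etmy-rev7915_released-2016-03-01.mat';

if exist(modelFile, 'file') ~= 2
    error('Could not find %s; check the svn checkout location.', modelFile);
end

% whos with the '-file' option reads only the variable headers, not the data.
info = whos('-file', modelFile);
for k = 1:numel(info)
    fprintf('%-30s %10.1f MB  %s\n', info(k).name, info(k).bytes / 2^20, info(k).class);
end
```

Individual variables can then be loaded by name with `load(modelFile, 'name')` rather than loading the whole file at once.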

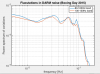

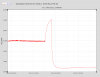

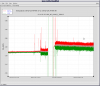

The tagged model includes 25 violin modes for the fibers, 20 for the uim-pum wire, 15 for the top-uim wire, and 10 for the top-most wire. Of the 25 fiber violin modes, the first 8 are based on measured frequencies from h1etmy; the remainder are modeled frequencies. All metal wire modes are modeled values. The oplev filters are turned off in this model as well (I imported the filters from LHO, and they were turned off at the time).
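For orientation only, the textbook result for an ideal uniform string gives a rough picture of where a wire's violin modes fall. This is not necessarily how the model computes them (it may include corrections such as bending stiffness), and the symbols below are illustrative rather than taken from the model code:

$$
f_n \approx \frac{n}{2L}\sqrt{\frac{T}{\mu}}, \qquad n = 1, 2, 3, \ldots
$$

where $L$ is the wire length, $T$ is the tension carried by the wire, and $\mu$ is its mass per unit length. In this idealization the modes are harmonically spaced, so requesting more modes at a stage extends the model to higher frequency.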

For reference, this is a summary of the history of model revisions in the svn:

* generate_QUAD_Model_Production.m

rev 7359: now reads foton files for main chain and reaction damping

rev 7436: Changed hard coded DAMP gains to get the correct values for LHO ETMX specifically.

rev 7508: Restored damping filter choice for P to level 2.1 filters as opposed to Keita's modification. Cleaned up error checking code on foton filter files, and allowed handling of filter archive files and files with the full path.

rev 7639: renaming lho etmy parameter file

rev 7642: Adding custom parameter file for each quad. Each one is a copy of h1etmy at this point, since that one has the most available data.

rev 7646: added ability to read live filters from sites, and ability to load custom parameter files for each suspension

rev 7652: updated to allow damping filters from sites from a specific gps time (in addition to the live reading option)

planned future revision - seismic noise will propagate through the damping filters as in real life, i.e., the OSEMs are relative sensors and measure the displacement between the cage and the suspension.

rev 7920: big update - added sus point reaction forces, top OSEMs act between cage and sus, replaced append/connect command with simulink files

rev 7995: added oplev damping with actuation at the PUM (L2); added options for violin modes at all stages, rather than just for the fibers; added option to load damping from a variable in the workspace, in addition to the existing features of loading damping from a previously saved file or importing from the sites.
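The relative-sensing behavior noted under rev 7920 (top OSEMs act between cage and suspension) can be written schematically as follows. The symbols and sign convention are assumptions for illustration, not names from the model:

$$
y_{\mathrm{OSEM}} = x_{\mathrm{top}} - x_{\mathrm{cage}}, \qquad F_{\mathrm{damp}} = -K(s)\, y_{\mathrm{OSEM}} = -K(s)\, x_{\mathrm{top}} + K(s)\, x_{\mathrm{cage}}
$$

where $x_{\mathrm{top}}$ is the top mass displacement, $x_{\mathrm{cage}}$ is the cage displacement, and $K(s)$ is the damping filter. The second term shows how cage (seismic) motion enters the suspension through the damping loop, in addition to its direct path through the suspension point.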

* ssmake4pv2eMB5f_fiber.m

No functional changes have been made to this file recently (in at least 4 years).

* quadopt_fiber.m

- rev 2731: name of file changed to quadopt_fiber.m, removing the date to avoid confusion with Mark Barton's Mathematica files.

- rev 6374: updated based on H1ETM fit in 10089.

- rev 7392: updated pend.ln to provide as-built CM heights according to T1500046

- rev 7912: the update described in this log, where the solidworks values for the inertias of the test mass and pum were put into the model, and the model was then refit. Same as h1etmy.m.

* h1etmy.m

- rev 7640: created the H1ETMY parameter file based on the fit discussed in 10089.

- rev 7911: the update described in this log, where the solidworks values for the inertias of the test mass and pum were put into the model, and the model was then refit. Same as quadopt_fiber.m.

ITMY mis-alignment issue tracked down to offset, now fixed.

ITMY drivealign_P2L_gain change from 1.05 to 0.6 accepted after the OK by Sheila; it had been 0.6 for at least 10 days.

The Front End watchdog reset takes place in the LASER Diode Room, not in the LVEA/PSL enclosure. :)