A busy maintenance day.

ISI-HPI EY BRS model change WP5798

Hugh, Jeff:

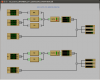

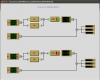

The h1isietmy and h1hpietmy models were changed to use the newly installed BRS at EY, as was done at EX.

SEI End Station SUSPOINT and GND-STS transmission to OAF WP5799

Hugh, Jeff, Joe B, Dave:

The suspoint and ground STS channels are now transmitted from the end station ISI models to the corner OAF model using one additional RFM channel per arm, with the two channels MUXed into that single RFM channel. The path is: both channels are sent from h1isietm[x,y] to h1peme[x,y] via two Dolphin channels. Inside h1peme[x,y] the two channels are filtered, mux'ed, and sent out via a single RFM channel. The OAF model demux'es the RFM channel back into two channels, assuming a single-cycle delay in getting the data.
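For readers unfamiliar with the idea, here is a minimal sketch of carrying two values in one channel and reading them back one cycle late. The packing scheme (two float32 values in one 64-bit word) and the names `mux`, `demux`, and `DelayedReceiver` are assumptions made for illustration only; this entry does not say how the front-end models actually implement the mux.

```python
import struct
from typing import Tuple


def mux(suspoint: float, gnd_sts: float) -> int:
    """Pack two values as float32 into one 64-bit word (assumed scheme)."""
    return struct.unpack("<Q", struct.pack("<ff", suspoint, gnd_sts))[0]


def demux(word: int) -> Tuple[float, float]:
    """Recover the two float32 values from a 64-bit word."""
    return struct.unpack("<ff", struct.pack("<Q", word))


class DelayedReceiver:
    """Hypothetical receiver that sees each word one cycle after it was sent."""

    def __init__(self) -> None:
        # Before any data arrives, the receiver holds zeros.
        self._held = mux(0.0, 0.0)

    def step(self, incoming: int) -> Tuple[float, float]:
        # Output the word held from the previous cycle, then latch the new one.
        out = demux(self._held)
        self._held = incoming
        return out
```

Note that packing as float32 would halve the precision of each value; whether that matters depends on the real scheme, which is not described here.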

SEI HAM SUSPOINT addition WP5796

Hugh, Jeff:

The suspoint code was added to all the HAM ISI models.

SUSAUX upgrades WP5804

Jeff, Betsy:

Latest susaux model changes made.

Guardian PSL node added WP5797

Jamie, TJ:

New node added. Does it still need to be added to the DAQ?

NAT router OS upgrade WP5800

Ryan, Carlos:

Upgraded the lhocds NAT router to the latest version. Some issues were discovered, so we reverted to the old system. Will investigate offline.

Machine reboots for security patching

Carlos:

Machines that required a reboot for OS patching were rebooted.

DAQ frame writer instability, move wiper from writer to solaris server

Dave, Jim:

On Monday we moved the h1fw1 wiper from h1fw1 to h1ldasgw1, where it runs hourly at 10 minutes past the hour. On Tuesday we did the same for h1fw0, whose wiper runs on the hour.
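The staggered schedule can be sketched as follows. This is only an illustration of the timing described above, not the actual scheduling mechanism (which this entry does not specify); `WIPER_MINUTE` and `next_wiper_run` are hypothetical names.

```python
from datetime import datetime, timedelta

# Minute of each hour at which each frame writer's wiper runs (from this entry).
WIPER_MINUTE = {"h1fw0": 0, "h1fw1": 10}


def next_wiper_run(writer: str, now: datetime) -> datetime:
    """Return the next scheduled wiper start strictly after `now`."""
    candidate = now.replace(minute=WIPER_MINUTE[writer], second=0, microsecond=0)
    if candidate <= now:
        # This hour's slot has already passed, so use the next hour's.
        candidate += timedelta(hours=1)
    return candidate
```

For example, at five past any hour the h1fw1 wiper is due five minutes later, while the h1fw0 wiper is not due until the top of the next hour, so the two never start at the same time.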

Accidental restarts

Cast of many:

We had two accidental restarts.

Around 9am the timing slave on the h1lsc0 IO Chassis was powered down. Initially we only restarted all the models, but later in the day Evan reported bad ADC channels, so in the afternoon we did a full computer+IOChassis power cycle.

Around 4pm the h1psl0 IO Chassis was accidentally glitched. Applying the lesson learnt from the earlier LSC incident, we did a full computer+IOChassis power cycle to recover.

EX Beckhoff vacuum gauges moved from slow-controls to vac-controls

Richard, Dave, Patrick:

Patrick found the cause of the problem we hit when we tried this last week, so this week the X4,5,6 BPG vacuum gauges were moved from their temporary slow-controls location to the final Beckhoff VAC controls at EX.

The channel names were changed in the process. DAQ minute trends were changed to preserve old trend data (older archives still need moving).

DAQ Restart

Jim, Dave:

The DAQ was restarted several times over the day to support the above changes. We had two bad starts:

On one start all front ends resynced except for h1susaush56, which was fixed by running start_streamers.

On another start many front ends did not resync and required a start_streamers. During this, h1psl0 went out of sync and repeated start_streamers runs did not fix it. We eventually did a second DAQ restart and all was good.