Nutsinee, Aidan, Den

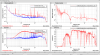

We looked at the temperature data from O1 with the goal of estimating the coupling of temperature fluctuations to DARM. Coupling mechanisms include radiation pressure and thermal expansion of the optic and its coating. We took the numbers for these coupling mechanisms from Stefan Ballmer's thesis and Aidan's notes. The total coupling coefficient is

$\mathrm{DARM} = 2 \times 10^{-10}\,\mathrm{RTN}/f$, where RTN is the relative temperature noise.

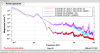

We measured in-vac and in-air temperature noise using TCS sensors. The attached plot shows the result of the measurement. Above 10 mHz the sensors are limited by electronics noise. We used a spectrum analyzer to avoid ADC noise, but this did not help much: electronics noise of the readout circuits still limits the measurement at high frequencies.

The in-vac sensors are limited by 1/f electronics noise. For an upper limit, however, we can assume a temperature noise of $5 \times 10^{-1}\,(10^{-4}/f)\ \mathrm{K/Hz^{1/2}}$. This projects as $1/f^2$ noise in DARM with an ASD of $2 \times 10^{-20}\ \mathrm{m/Hz^{1/2}}$.
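A minimal sketch of how the upper-limit projection combines the two $1/f$ factors (the $1/f$ temperature noise and the $1/f$ coupling) into $1/f^2$ noise in DARM. It assumes, as our own labeled choice, that RTN is the temperature ASD divided by a nominal optic temperature `T0_KELVIN` = 295 K; the source does not state this normalization or the frequency at which the $2 \times 10^{-20}$ figure is quoted, so the absolute levels printed here are not guaranteed to reproduce that number. The point of the sketch is the spectral shape.

```python
# Total coupling coefficient quoted in the text: DARM = 2e-10 * RTN / f
COUPLING = 2e-10

# ASSUMPTION (not in the source): nominal optic temperature in kelvin, used
# to convert absolute temperature noise (K/Hz^1/2) into relative noise (RTN).
T0_KELVIN = 295.0


def temperature_upper_limit(f_hz):
    """Upper-limit temperature ASD in K/Hz^1/2, read as 5e-1 * (1e-4 / f)."""
    return 5e-1 * (1e-4 / f_hz)


def darm_projection(f_hz, t0=T0_KELVIN):
    """Project the temperature upper limit into DARM with the 1/f coupling."""
    rtn = temperature_upper_limit(f_hz) / t0  # relative temperature noise
    return COUPLING * rtn / f_hz  # 1/f noise times 1/f coupling -> 1/f^2


for f in (0.01, 0.1, 1.0, 10.0):
    print(f"{f:6.2f} Hz  {darm_projection(f):.3e}")
```

Doubling the frequency lowers the projection by a factor of 4, which is the $1/f^2$ behavior described above regardless of the chosen `T0_KELVIN`.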

The actual contribution to DARM is probably lower, since one might expect temperature fluctuations in vacuum to be smaller than those in air. On a 24-hour time scale, in-vac fluctuations are a factor of 2 smaller than in-air fluctuations. The in-air sensors have less noise, and at 1 mHz their measured fluctuations are a factor of 3 lower than the in-vac measurement. Taking this into account, we can assume that the vacuum sensors overestimate temperature fluctuations by a factor of 6 (2 × 3) above 0.01 mHz.
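The factor of 6 follows from chaining the two ratios. A small sketch of that arithmetic, assuming (our reading, not stated explicitly in the text) that the low-noise in-air reading is taken as the true in-air level, so the in-vac sensor reads 3 × in-air while the true in-vac level is in-air / 2. The helper names are hypothetical, and scaling the quoted DARM upper limit by this factor assumes the coupling is linear in temperature noise; the text itself does not quote a corrected DARM number.

```python
# Ratios quoted in the text.
TRUE_AIR_OVER_VAC = 2.0      # 24 h scale: in-vac fluctuations are 2x smaller than in-air
MEASURED_VAC_OVER_AIR = 3.0  # 1 mHz: in-vac sensor reads 3x higher than in-air sensor


def vacuum_overestimate(true_air_over_vac=TRUE_AIR_OVER_VAC,
                        measured_vac_over_air=MEASURED_VAC_OVER_AIR):
    """Factor by which the in-vac sensor overstates true in-vac fluctuations.

    ASSUMPTION: the in-air reading is taken as the true in-air level,
    so measured_vac = 3 * air and true_vac = air / 2, giving 3 * 2 = 6.
    """
    return measured_vac_over_air * true_air_over_vac


def corrected_projection(upper_limit):
    """Scale a DARM upper limit down by the overestimate (linear coupling assumed)."""
    return upper_limit / vacuum_overestimate()


print(vacuum_overestimate())                 # 6.0
print(f"{corrected_projection(2e-20):.2e}")  # 3.33e-21
```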

The basis for the temperature fluctuation transfer function is outlined in the attached calculation.