I caput H0:VAC-EX_CP8_505_DEWAR_LEVEL_CAP_GAL to 1.44e+04, which was the value of HVE-EX:CP8_DEWARGAL in /ligo/cds/lho/h0/burt/2016/03/07/00:00/h0veex.snap.

WP 5775: installation of the new /opt/rtcds NFS file server.

Reminder that starting at 11:00am PDT today LHO CDS and DAQ services will be intermittent for several hours as the /opt/rtcds file system is moved from h1boot to the new file server h1fs0.

VAC - Taking down the corner station. Will remain valved out at least until Friday. WP5772

CDS - Will be executing WP5775. There will be a reboot of all FE, DAQ and workstations.

SUS - Nothing to report.

LVEA and EX are LASER SAFE.

SEI - Model restarts for BSCs.

15:45 All SUS parked in "SAFE" mode without incident.

15:58 All SEI set to "OFFLINE" mode. ITMY watchdog tripped; unable to reset at this time. Jim and Hugh are looking into this. HAM2 and HAM3 watchdogs tripped; was able to reset them.

TITLE: 03/14 day Shift: 16:00-00:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Planned Engineering

OUTGOING OPERATOR: None

CURRENT ENVIRONMENT:

Wind Avg: 15 mph

Primary useism: 0.07 μm/s

Secondary useism: 0.53 μm/s

QUICK SUMMARY:

I notice that the TCS Y laser is off and reporting a flow alarm. The flow meter shows that the chiller is currently running. I don't think this laser was in use, but it was running, and it would be better not to leave it in this alarm state. Either the power supply should be turned off if the laser is not needed, or the laser should be reset by turning the controller key on/off and pressing the gate button on the controller.

1320 - 1335 hrs. local -> To and from Y-mid. LN2 at exhaust after 1 min 30 seconds with 1/2 turn open. Next overfill to be Tuesday, March 15th, before 1600 hrs. local.

I appear to be alone at the moment. I need to gather a few more items from the outbuildings for Monday's activities. Afterwards, I should be in the old Vacuum Prep and Bake Oven labs testing the to-be-installed vacuum gauge heads (forgot to do this last week). I'll make a comment to this entry when I leave the site.

1825 hrs. local -> Kyle leaving site now

Krishna

BRS-2, the ground-rotation sensor for installation at EndY, will be delivered by us on March 23rd. The installation and commissioning is expected to take ~2-3 weeks. The aim is to reduce the impact of wind on the ISI and consequently DARM. This post is to describe the components and the current plan.

Refer to T1500596 for the wiring diagram. Refer to the following SWG logs for more info on BRS-2: 11355, 11362. Here is a check-list of major components:

1. BRS-2 Instrument including the autocollimator, platform and foam box. Also includes the 30-meter optical fiber and light source.

2. An Ion pump and pump controller.

3. The Satellite controller box is done (pic attached) - this is the same as in the diagram except for the AI filters and preamp which are not needed. There is no pressure gauge on the vacuum can, but there is a 2-3/4" CF port to which one can be added. The RTDs are included.

4. The ISI interface chassis (not yet built). We will bring the EP4374 and EP4174, but we need help building the chassis: specifically, the AI filter, the SE-to-Diff amplifier, the front panel adapter board and the associated wiring.

5. The Beckhoff computer and the C# and PLC code. The C# code reads the camera at 2 kHz and can send data out at ~200 Hz to the PLC, which is currently running at 250 Hz and writing out the following signals: Tilt, Drift and Ref signals and the Status bits.

6. We will bring the Piezo stacks and Piezo amplifiers to measure the tilt-transfer function.

7. Spare flexures, and additional hardware for the assembly process.

Rob, Stefan,

The pictures are in /ligo/home/controls/sballmer/20160312/LIGO

Logged in remotely to check that all violin modes had indeed damped down to the normal range.

Tried once to go to 20W, but failed. More tomorrow.

State of H1: locked at Increase Power at 5.2W

Work tonight on:

Before locking tomorrow:

Stefan has H1

We are damping them overnight, sitting at 5W. We also wrote the adjustDamperGain script below. It measures the peak count output of a filter module and scales the gain to reach a target peak level (or the maximum allowable gain).

We left the guardian script calling this function for the modes that were problematic tonight:

ISC_LOCK.py, line 2362

```python
# the following violins were saturating on March 11 2016 with above gains - turn them down
time.sleep(10)
adjustDamperGain('SUS-ETMY_L2_DAMP_MODE5', -100, 1.5e5)
adjustDamperGain('SUS-ETMX_L2_DAMP_MODE6', 100, 1.5e5)
adjustDamperGain('SUS-ITMY_L2_DAMP_MODE3', 100, 1.5e5)
```

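```python
import numpy
import cdsutils
# ezca is available as a global inside Guardian modules

def adjustDamperGain(FM, maxgain, targetCounts):
    """Read the FM output and scale the gain to match targetCounts."""
    # Peak |output| over 1 s of data from the filter module
    dmax = numpy.max(numpy.abs(cdsutils.getdata(FM + '_OUTPUT', 1).data))
    if dmax == 0:
        # No output signal: leave the gain alone instead of dividing by zero
        return
    oldgain = ezca[FM + '_GAIN']
    newgain = oldgain * targetCounts / dmax
    # Apply the scaled gain only if its magnitude is below |maxgain|
    if abs(newgain / maxgain) < 1.0:
        ezca[FM + '_GAIN'] = newgain
    else:
        ezca[FM + '_GAIN'] = maxgain
```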
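To check the scaling rule offline, it can be separated from the Guardian I/O. This is a minimal sketch, assuming the output samples and the current gain are passed in as plain values rather than read through `cdsutils.getdata` and `ezca`; `scaled_damper_gain` is a hypothetical name, not part of the guardian code.

```python
import numpy as np


def scaled_damper_gain(samples, old_gain, max_gain, target_counts):
    """Hypothetical offline version of the adjustDamperGain scaling rule.

    Scales old_gain so that the peak |output| would match target_counts,
    falling back to max_gain when the scaled gain exceeds it in magnitude.
    """
    peak = np.max(np.abs(np.asarray(samples, dtype=float)))
    if peak == 0.0:
        # No signal to scale against: keep the current gain
        return old_gain
    new_gain = old_gain * target_counts / peak
    if abs(new_gain / max_gain) < 1.0:
        return new_gain
    return max_gain


# A mode peaking at 3e5 counts with gain -50 is halved toward the 1.5e5 target
print(scaled_damper_gain([1e5, -3e5, 2e5], -50, -100, 1.5e5))  # -25.0
```

Clamping to `max_gain` (rather than rejecting the update) mirrors the guardian function: a quiet mode gets the largest allowed gain instead of an arbitrarily large one.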

We took a fine-resolution, high-frequency spectrum using the IOP channels of the DCPD outputs in this state, to try to identify the harmonics of the violin modes so we know them for posterity. The dtt files are in /ligo/home/robert.ward/Documents/ExcitedViolinModes/

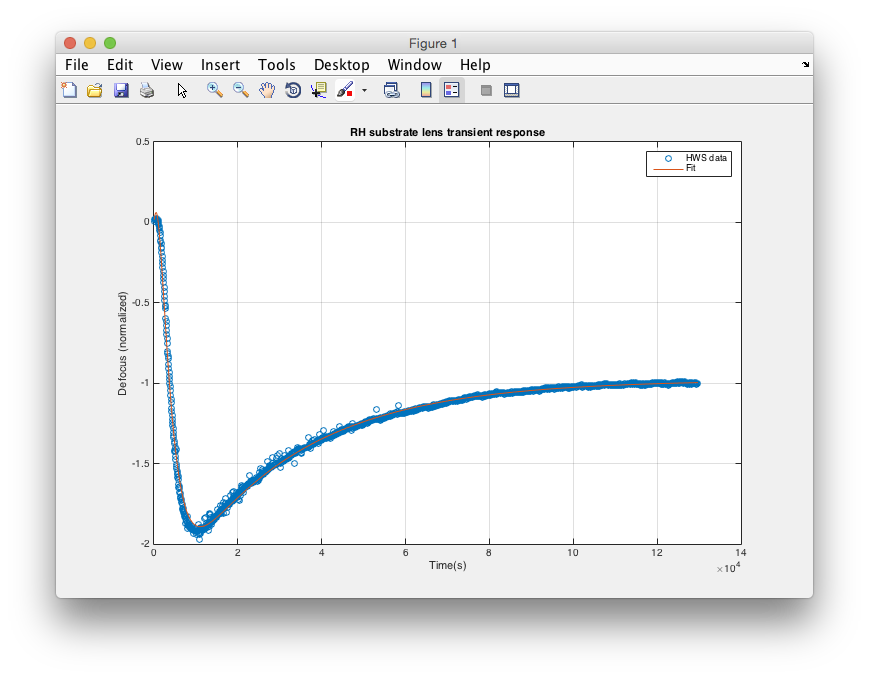

I've created a new filter for the RH substrate lens in TCS SIM. I'm testing it in H1:TCS-SIM_ETMY_SUB_DEFOCUS_RH. The filter bank is called "realRH".

The poles and zeros are derived from a fit of a series of exponentials to HWS data for the measured RH spherical power at LLO.

The (normalized) behaviour of the HWS measurement can be reproduced with the following sum:

$$\mathrm{defocus}(t) = -1 + \sum_i a_i\, e^{-t/\tau_i}$$

| $a_i$ | $\tau_i$ (s) |
|---|---|
| 0.1127 | 397 |
| -3.96937 | 1363 |
| 6.37486 | 2636 |
| -0.0666803 | 14998 |
| -1.45155 | 29306 |
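A quick numpy sketch that evaluates this fit (the helper `rh_defocus` is ours, not a TCS SIM function). Because the $a_i$ in the table sum to 0.99996, the normalized defocus starts at about 0 and settles to -1 on a timescale set by the longest $\tau_i$.

```python
import numpy as np

# Fit coefficients a_i and time constants tau_i (s) from the table
A = np.array([0.1127, -3.96937, 6.37486, -0.0666803, -1.45155])
TAU = np.array([397.0, 1363.0, 2636.0, 14998.0, 29306.0])


def rh_defocus(t):
    """Normalized RH defocus: -1 + sum_i a_i * exp(-t / tau_i), with t in seconds."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    # (N, 5) matrix of exponentials times the (5,) coefficient vector -> (N,)
    return -1.0 + np.exp(-t[:, None] / TAU) @ A


print(rh_defocus([0.0, 3600.0, 86400.0]))
```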

Each exponential acts as a low-pass filter with a pole frequency of $1/(2\pi\tau_i)$, so the transfer function is the sum of these LPFs weighted by the coefficients $a_i$. Some algebra then converts that into a ZPK filter with 4 zeros and 5 poles, and the desired response should ensue. That's what I'm testing at the moment: see H1:TCS-SIM_ETMY_SUB_DEFOCUS_RH_OUTPUT from 1141778267.
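A minimal numpy sketch of that conversion, assuming the fitted curve is the response to a unit step in RH power. Then $H(s) = s\,D(s) = -1 + \sum_i a_i\, s/(s+p_i)$ with $p_i = 1/\tau_i$, and because $\sum_i a_i \approx 1$ this is, up to a constant of about $-4\times10^{-5}$, $H(s) \approx -\sum_i a_i\, p_i/(s+p_i)$: the weighted sum of unity-DC-gain LPFs, with an overall minus sign under this convention. Putting the five terms over a common denominator gives 5 poles and a degree-4 numerator, i.e. 4 zeros. The helper name `sum_of_lpfs_to_zpk` is ours; it returns s-plane roots in rad/s and does not reproduce Foton's own ZPK conventions.

```python
import numpy as np

A = np.array([0.1127, -3.96937, 6.37486, -0.0666803, -1.45155])
TAU = np.array([397.0, 1363.0, 2636.0, 14998.0, 29306.0])


def sum_of_lpfs_to_zpk(a, tau):
    """Combine H(s) = -sum_i a_i * p_i / (s + p_i), p_i = 1/tau_i, into ZPK form.

    Returns s-plane zeros and poles (rad/s) and the leading numerator
    coefficient k, so that H(s) = k * prod(s - zeros) / prod(s - poles).
    """
    p = 1.0 / np.asarray(tau, dtype=float)
    num = np.zeros(1)
    for i, (a_i, p_i) in enumerate(zip(a, p)):
        # This term's numerator times every other term's denominator (s + p_j)
        others = np.poly(-np.delete(p, i))
        num = np.polyadd(num, -a_i * p_i * others)
    zeros = np.roots(num)
    poles = -p
    return zeros, poles, num[0]


zeros, poles, k = sum_of_lpfs_to_zpk(A, TAU)
print("pole frequencies (Hz):", -poles / (2 * np.pi))
print("zeros / 2pi (Hz, s-plane):", zeros / (2 * np.pi))
```

The DC gain of the result is $-\sum_i a_i$, which is a convenient check on the sign before loading a filter into Foton.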

I think I've put the wrong sign in the filter bank ...

This didn't work. I failed to notice that Foton substituted some of the very low frequency poles and zeros (order a few microHz) with ones that were a factor of 2 or 3 different. Plots to follow.

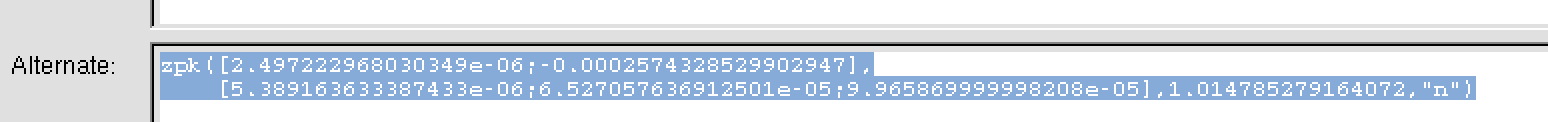

I've just added a simpler filter with three poles and two zeros.

poles: 9.965869E-5, 6.5094046E-5, 5.5656E-6

zeros: 2.45637E-6, -2.5739E-4

```
# DESIGN SIM_ETMY_SUB_DEFOCUS_RH 5 zpk([2.45637e-06;-0.000257392],[9.96587e-05;6.50941e-05;5.56564e-06],1,"n")gain(0.969)
```

Kiwamu, Stefan

Looking at the cross-power plot in alog 25768, we see a coherent noise floor following the shot noise, a factor of 3.3 below it.

Looking at alog 21167, this seems consistent with our old friend, the excess 45.5 MHz noise in DARM.

Shot noise of 20 mA: 8e-8 mA/rtHz; a factor of 3.3 below that: 2.4e-8 mA/rtHz. This is roughly consistent with the residual coherence seen in alog 21167.
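For reference, the 8e-8 mA/rtHz figure is the one-sided shot-noise ASD $\sqrt{2eI}$ for $I = 20$ mA. A few lines of Python reproduce both numbers (the helper name `shot_noise_asd_mA` is ours):

```python
import math

E_CHARGE = 1.602176634e-19  # elementary charge, C


def shot_noise_asd_mA(dc_current_mA):
    """One-sided shot-noise ASD sqrt(2 e I), in mA/rtHz, for a DC photocurrent in mA."""
    current_A = dc_current_mA * 1e-3
    return math.sqrt(2.0 * E_CHARGE * current_A) * 1e3


shot = shot_noise_asd_mA(20.0)   # about 8.0e-8 mA/rtHz
coherent_floor = shot / 3.3      # about 2.4e-8 mA/rtHz
print(shot, coherent_floor)
```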

Kiwamu will make an all-O1 plot to nicely resolve that noise. We need to add this noise to the mystery noise projection in alog 25106 (this plot).

Here is a cross-spectrum with more averaging (867 hours of data between Oct-21-2015 and Jan-17-2016, with some glitchy stretches excluded).

I looked at some few-hour stretches of O1 data and took the coherence between the DCPDs.

Above 1 kHz (where the DARM OLTF is −55 dB or less), the coherence goes as low as $1\times10^{-4}$. See attachment for an example; the FFT BW is 2 Hz and the number of averages is >50,000. That would imply a correlated DCPD sum noise that is a factor of 7 below the shot noise [since the correlated noise ASD in each PD should be $(1\times10^{-4})^{1/4} = 0.1$ relative to the uncorrelated (shot) noise ASD, and in the sum the correlated noise adds linearly while the shot noise adds in quadrature, giving $\sqrt{2}\times 0.1 \approx 0.14$, i.e. a factor of 7].

I suppose it is possible that the secular fluctuations in the nonlinear 45 MHz noise are enough to push the overall O1 coherence up to $2\times10^{-3}$, which is what is required to achieve a correlated noise that is a factor of 3.3 below the shot noise in the DCPD sum.
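The arithmetic behind the factors of 7 and 3.3 can be sketched as follows. Assumed model (ours, for illustration): each DCPD sees uncorrelated shot noise with PSD $u$ plus a fully correlated component with PSD $c$, identical in both PDs, so the PD-to-PD coherence is $(c/(c+u))^2$. In the sum the correlated parts add linearly (PSD $4c$) while the shot noise adds in quadrature (PSD $2u$). For small coherence the factor reduces to $1/(\sqrt{2}\,\mathrm{coh}^{1/4})$. Both function names are hypothetical.

```python
import math


def sum_noise_factor_from_coherence(coh):
    """Factor by which correlated noise in the DCPD sum lies below the shot noise.

    Assumes equal shot-noise PSD u and fully correlated PSD c in each PD,
    so that coh = (c / (c + u))**2.
    """
    g = math.sqrt(coh)              # c / (c + u)
    c_over_u = g / (1.0 - g)
    # Sum: correlated PSD 4c, shot PSD 2u -> ASD ratio sqrt(2 c / u)
    return 1.0 / math.sqrt(2.0 * c_over_u)


def coherence_from_sum_noise_factor(factor):
    """Inverse of sum_noise_factor_from_coherence."""
    c_over_u = 1.0 / (2.0 * factor**2)
    g = c_over_u / (1.0 + c_over_u)
    return g**2


print(sum_noise_factor_from_coherence(1e-4))   # roughly 7
print(coherence_from_sum_noise_factor(3.3))    # roughly 2e-3
```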

To test this, I propose we look at the variation over O1 of some kind of BLRMS of the 45 MHz EOM driver control signal (or perhaps just the dc level of the control signal), similar to what Kiwamu has already done for some of the suspension channels.

During this same time period, the excess of sum over null above 1 kHz is about $0.1\times10^{-8}\ \mathrm{mA/Hz^{1/2}}$. Assuming $8\times10^{-8}\ \mathrm{mA/Hz^{1/2}}$ of null current therefore implies the correlated excess is a factor of 6 to 7 below shot noise.
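The step from the sum-minus-null excess to a factor of 6 to 7 can be reproduced if the excess is read as a difference of ASDs and the correlated noise adds in quadrature to the null (shot) noise; both are our assumptions about how the numbers were combined, and the helper name is hypothetical.

```python
import math


def correlated_factor_below_shot(null_asd, excess_asd):
    """Shot/correlated ASD ratio, assuming sum ASD = sqrt(null**2 + corr**2)
    and that the quoted excess is (sum ASD - null ASD)."""
    sum_asd = null_asd + excess_asd
    corr_asd = math.sqrt(sum_asd**2 - null_asd**2)
    return null_asd / corr_asd


factor = correlated_factor_below_shot(8e-8, 0.1e-8)  # mA/Hz^(1/2) inputs
print(factor)
```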

(The slope in the data is probably from the uncompensated AA filtering.)

The second attachment shows the conversion of the sum and null into equivalent free-running DARM. From the residual alone, the limit on the coating Brownian noise seems to be a factor of 1.6 above nominal. (I quickly threw in a 5 kHz zero when undoing the loop in order to compensate for the AA filtering.)

Finally, I add some mystery noise traces to this residual, where the slopes and amplitudes have been arrived at by careful numerology. The addition of a $1/f^2$ noise and a mystery white sensing noise (similar to 26004, but tuned to the residual during this time period) reduces the possible coating Brownian excess factor to 1.45 or so.

Here is an updated version of the cross spectrum using the O1 data. I have fixed a bug which previously overestimated the cross spectrum and have extended the analysis to high frequencies above 1 kHz.

As pointed out by Evan, my previous analysis overestimated the correlated noise. This turned out to be due to a bug in my code: I summed the absolute values of the segmented cross spectra when averaging them. This is wrong because cross spectra are complex-valued and can take negative (and imaginary) values, so their uncorrelated part should average down. I fixed the analysis code and reran the analysis. The result looks consistent with Evan's targeted cross spectrum: the kink in the cross correlation happens at around 1 kHz, with the noise floor touching 1e-20 m/sqrtHz.
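The effect of this bug is easy to reproduce with synthetic data. In the sketch below (illustrative parameters only, not O1 data; `segment_csds` is a hypothetical helper using a Hann window and no overlap), two white-noise channels share a small common component. Averaging the magnitudes of the per-segment cross spectra leaves a floor set by the uncorrelated noise, while averaging the complex values first lets the uncorrelated part shrink roughly as $1/\sqrt{N_\mathrm{seg}}$.

```python
import numpy as np


def segment_csds(x, y, nperseg):
    """Per-segment cross spectra X_k * conj(Y_k) (Hann window, no overlap, unnormalized)."""
    nseg = len(x) // nperseg
    win = np.hanning(nperseg)
    xs = x[: nseg * nperseg].reshape(nseg, nperseg) * win
    ys = y[: nseg * nperseg].reshape(nseg, nperseg) * win
    X = np.fft.rfft(xs, axis=1)
    Y = np.fft.rfft(ys, axis=1)
    return X * np.conj(Y)  # complex, shape (nseg, nperseg // 2 + 1)


rng = np.random.default_rng(0)
n = 2**18
common = 0.1 * rng.standard_normal(n)   # small correlated component
x = rng.standard_normal(n) + common     # channel 1: own noise + common part
y = rng.standard_normal(n) + common     # channel 2: own noise + common part

csd = segment_csds(x, y, 1024)
biased = np.mean(np.abs(csd), axis=0)   # buggy: average of magnitudes
correct = np.abs(np.mean(csd, axis=0))  # average complex values, then take magnitude
print(np.median(biased), np.median(correct))
```

With more segments, `correct` approaches the level of the common component, while `biased` stays near its floor regardless of averaging time.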

Instructions on how to restart the TCS chiller and controller box can be found here.

Both the TCSX and TCSY chillers were found tripped with the error message 'Low Temp'. I power cycled both units and cycled the key on the X and Y CO2 laser controllers. They are now back up and functioning.

Thanks Kiwamu. The LOW TEMP warning almost certainly occurred because the DAC was restarted at some point, sending zero volts to the chillers. An electronics fix for this problem is expected soon.

It looks like the lasers are now ready to lase. Now that you've reset the warnings with the key, you probably just need to press the gate (on/off) button on the front of the controller to get them running. I'm not sure you need them right now, though.