Top panel is IM2 OSEMs. Bottom panel is select OSEMs from IM1, IM3, and IM4.

State of H1: locking and getting to Engage ASC; Sheila is working on the ASC

Site Activities:

LVEA and OSB optics lab access:

Currently:

I made a folder called beckhoff in /ligo/lho/h0/ve/medm and copied the new medm screens for the Beckhoff vacuum controls into it. I then changed the 'VE EX' link in the 'VE/FMC' drop down menu on the sitemap to point to /ligo/lho/h0/ve/medm/beckhoff/H0_VAC_EX_CUSTOM.adl. I committed the change to the sitemap into svn. /ligo/lho/h0/ve/medm/beckhoff/H0_VAC_MENU_CUSTOM.adl is a mini sitemap for just these screens. The screens that do not have CUSTOM in the name are generated from a script. The files necessary to generate these screens are in the slowcontrols svn repository in trunk/TwinCAT3/Vacuum/MEDM/LHO.

Kyle, Patrick

The new Beckhoff control parameters still need "tuning". As of this writing, the control of CP8's level is via the Proportional term only. Initial attempts resulted in overfilling, which resulted in a closed LLCV, which in turn resulted in a warm transfer line. Patrick and I opened the exhaust check valve bypass valve to relieve the back pressure and then cooled the transfer line via the manual LLCV bypass. With the transfer line cold and the pump level responding to changes in the LLCV, I manually filled the pump to 95% full, closed the exhaust check valve bypass valve, and am leaving the LLCV manually set at 50% open overnight, which is its recent nominal value to maintain the pump level.

Jim Batch set up and included two MEDM screens so the X-end status can be monitored from home. Patrick and Cheryl are also leaving an MEDM screen in the Control Room which can be monitored from home.

2135 hrs local -> I'm leaving now and will monitor CP8's level from home (every 2 hours).
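The overfill-then-closed-valve sequence described above is what a proportional-only loop does once the level rises far enough above its setpoint. The following is a minimal sketch, not the Beckhoff implementation: the setpoint and gain are hypothetical illustration values, and only the 50% bias is taken from the nominal LLCV opening quoted in this entry.

```python
def llcv_command(level_pct, setpoint_pct=92.0, kp=10.0, bias_pct=50.0):
    """Proportional-only valve command in percent open, clamped to 0..100.

    setpoint_pct and kp are hypothetical values chosen for illustration;
    bias_pct is the nominal 50% LLCV opening mentioned in the entry.
    """
    error = setpoint_pct - level_pct          # positive when the pump is under-filled
    command = bias_pct + kp * error           # no integral or derivative term
    return min(100.0, max(0.0, command))      # the valve cannot go below closed or above open


if __name__ == "__main__":
    for level in (80.0, 92.0, 95.0, 100.0):
        print(f"level {level:5.1f}% -> LLCV {llcv_command(level):5.1f}% open")
```

With these illustration values, any level more than 5% above the setpoint drives the command to 0% (valve closed), after which flow through the transfer line stops.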

I changed the end X channel names to match the new Beckhoff channel names in /opt/rtcds/userapps/release/cds/h1/alarmfiles/ve.alhConfig. There are no new Beckhoff channel names corresponding to HVE-EX:GV19_CCOFFALARM and HVE-EX:GV19_OVERPRESSURE so I commented these out of the file. I will have to see if there are other channels that can be alarmed on instead of these. I committed the changes to svn in cds_user_apps/trunk/cds/h1/alarmfiles/ve.alhConfig. I restarted the alarm handlers on alarm0 to use the updated file.

The Beckhoff control system upgrade was performed at X-end today and I just wanted confidence for this value -> Both values agreed to within 1%.

Kyle, Gerardo, Chandra

Gerardo -> Installed 12" CFF at HAM10 D8 and gauge pair isolation valve on BSC4.

Chandra -> R&R LVEA Turbo levitation batteries.

Kyle -> Drilled and tapped holes, mounted scroll pump local to Diagonal Turbo, and upgraded gauge controller on Diagonal Turbo.

Kyle, Chandra -> Ran QDP80s, YBM, XBM and Diagonal Turbos and vent/purge air supply (KOBELCO). The KOBELCO displayed a "HIGH LUBE OIL TEMP" warning after having run for a while. Chandra noticed that the chilled water booster pump was off and that the output pressure, once on, was only 60 psi (this may be the result of reduced-frequency running of the chilled water circulating pump - nominally this would be 90 psi - we need to investigate). The measured dewpoint after > 1 hour of run time was < -30C. Gerardo noticed that the Diagonal volume was still connected to the purge air header from when it had last been purged; the isolation valve was then closed.

NOTE: The XBM Turbo spun up to full RPM but tripped off during the braking phase. This Turbo has a history of high vibration - typically during the accelerating phase.

The Turbo pump controllers are being left energized overnight in the LVEA to allow the Turbo rotors to come to a full stop

No FRS required. Lower than expected pressure in output from booster pump in chilled water system is likely due to difference in building chilled water pressure (variable speed pumps).

A line at 47.683 Hz has appeared in the output of the stochastic O1 (time shifted) analysis. We have used the coherence tool to look for the source of this line in H1 and L1. The line at 47.683 Hz appears very clearly in H1 in the h(t) coherence with H1:PEM-EY_MAG_EBAY_SEIRACK_X_DQ (week 13 example attached) and H1:PEM-EY_MAG_EBAY_SEIRACK_Y_DQ, in weeks 12, 13, 14, 16. It also appears clearly in the L1 h(t) coherence, apparently associated with some other lines (e.g., 47.42 Hz) in weeks 5-10, 14, and 16 in these channels:

L1:SUS-ETMX_L1_WIT_P_DQ (week 5 example attached)

L1:SUS-ETMX_L1_WIT_L_DQ

L1:SUS-ETMX_L1_WIT_Y_DQ

L1:PEM-EX_MAINSMON_EBAY_3_DQ (week 5 example attached)

L1:PEM-EX_MAINSMON_EBAY_QUAD_SUM_DQ

L1:PEM-EY_MAG_EBAY_SUSRACK_X_DQ

These lines are also visible in the run-averaged spectra for H1 and L1. See attached figures (ignore the S6 labels in the legend, corresponding to traces that are off-scale). Can anyone think of a device, common to both observatories, that produces such a frequency, e.g., a flat-screen monitor?
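For readers unfamiliar with this kind of search, here is a minimal sketch of a coherence estimate at a single frequency. It is not the coherence tool used for this entry: it runs on synthetic data, and the sample rate, duration, amplitudes, and noise levels are all assumptions made for illustration. Only the 47.683 Hz line frequency comes from the entry.

```python
import cmath
import math
import random


def hann(n_samples):
    """Hann window of length n_samples."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n_samples) for i in range(n_samples)]


def dft_at(segment, window, freq_hz, fs_hz):
    """Windowed DFT of one segment, evaluated at a single frequency."""
    return sum(
        w * s * cmath.exp(-2j * math.pi * freq_hz * i / fs_hz)
        for i, (w, s) in enumerate(zip(window, segment))
    )


def coherence_at(x, y, freq_hz, fs_hz, seg_len):
    """Magnitude-squared coherence of x and y at freq_hz, averaged over
    non-overlapping Hann-windowed segments."""
    window = hann(seg_len)
    pxx = pyy = 0.0
    pxy = 0j
    for start in range(0, len(x) - seg_len + 1, seg_len):
        fx = dft_at(x[start:start + seg_len], window, freq_hz, fs_hz)
        fy = dft_at(y[start:start + seg_len], window, freq_hz, fs_hz)
        pxx += abs(fx) ** 2
        pyy += abs(fy) ** 2
        pxy += fx * fy.conjugate()
    return abs(pxy) ** 2 / (pxx * pyy)


def make_synthetic(fs_hz=256.0, duration_s=32.0, line_hz=47.683, seed=0):
    """Two channels sharing one line, each with independent Gaussian noise.
    All parameters other than line_hz are illustrative assumptions."""
    rng = random.Random(seed)
    x, y = [], []
    for i in range(int(fs_hz * duration_s)):
        t = i / fs_hz
        x.append(math.sin(2 * math.pi * line_hz * t) + rng.gauss(0.0, 1.0))
        y.append(0.5 * math.sin(2 * math.pi * line_hz * t + 0.3) + rng.gauss(0.0, 1.0))
    return x, y


if __name__ == "__main__":
    x, y = make_synthetic()
    for f in (30.0, 47.683):
        print(f"{f:7.3f} Hz: coherence = {coherence_at(x, y, f, 256.0, 256):.3f}")
```

The shared line gives a coherence close to 1 at 47.683 Hz, while at a frequency where the two channels carry only independent noise the estimate stays near 1/(number of segments).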

Finished the wiring and installation of the electronics for the new CPS timing distribution at EX, EY, and LVEA. CPS interface units need to be modified before switching to the new timing distribution.

I was only able to take the ETMY charge measurements today. I've processed the results, and the last 2 weeks of data that others have taken.

Plots pending...

Per work permit 5758, we installed the new Beckhoff-based vacuum control chassis and computer. There were a couple of hardware issues. The first was the polarity of the 4-20 mA inputs. One set of 4 for the fan vibration monitors was fine, but we had to swap the 3 for the vacuum LN2 gauges. We had also plugged the Watchdog shorting connector in backwards, which prevented the LN2 control valve from operating. Once we fixed those issues the hardware and Beckhoff software worked fine. The next problem was the gateway computer. Some files needed to be modified to allow the channels to pass. I will let others comment on this. For now the system is running and screens are being modified so the users will not see much difference.

I wrote a quick script that will change all of the gains in the appropriate degrees of freedom for the SEI Corner Station sensor correction. The gains should be 0 or OFF when recentering the STS, and then turned back ON after (assuming the chambers are up). I imagine there will be more guidance for operators to do this in the future.

The script can be found in (userapps)/isi/h1/scripts/Toggle_CS_Sensor_Correction.py

thomas.shaffer@opsws10:/opt/rtcds/userapps/release/isi/h1/scripts$ ./Toggle_CS_Sensor_Correction.py -h

usage: Toggle_CS_Sensor_Correction.py [-h] ON_or_OFF

positional arguments:

ON_or_OFF 1 or 0 to turn the gain ON or OFF respectively

optional arguments:

-h, --help show this help message and exit
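For reference, a command-line interface matching the help text above could be sketched as follows. This is not the contents of Toggle_CS_Sensor_Correction.py: the channel names are hypothetical placeholders, and the `write` callable stands in for whatever EPICS write the real script performs.

```python
import argparse

# Hypothetical placeholder names; the real channel list is not given in this entry.
EXAMPLE_GAIN_CHANNELS = [
    "H1:EXAMPLE-CHAMBER_A_SENSCOR_X_GAIN",
    "H1:EXAMPLE-CHAMBER_A_SENSCOR_Y_GAIN",
    "H1:EXAMPLE-CHAMBER_B_SENSCOR_X_GAIN",
]


def build_parser():
    """Parser with the single positional argument shown in the help output."""
    parser = argparse.ArgumentParser(prog="Toggle_CS_Sensor_Correction.py")
    parser.add_argument(
        "ON_or_OFF",
        type=int,
        choices=[0, 1],
        metavar="ON_or_OFF",
        help="1 or 0 to turn the gain ON or OFF respectively",
    )
    return parser


def print_write(channel, value):
    """Stand-in for a real channel write; it only prints what would be set."""
    print(f"{channel} <- {value}")


def main(argv=None, write=print_write, channels=EXAMPLE_GAIN_CHANNELS):
    """Set every listed gain to 1.0 (ON) or 0.0 (OFF)."""
    args = build_parser().parse_args(argv)
    for channel in channels:
        write(channel, float(args.ON_or_OFF))
    return args.ON_or_OFF
```

Calling `main(["0"])` prints the placeholder channels being set to 0.0. Passing a different `write` callable is how a real channel-access call would be substituted.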

WP 5765 See T1600062 SEI log 897

Changed data storage rate for BSC:

1) ISI-optic_STi_MASTER_H(V)j_DRIVE & ST2_BLND_dof_GS13_CUR_IN1

This change means that for the next week or so, inquiries of these channels for times from ~5 days ago to before the restart will need to be via nds2. One must also avoid making a request for data that spans the change. Middle of a maintenance day...maybe that should just be avoided anyway.

2) Increased allowable saturations on L4Cs, GS13s & ACTs to reduce unwarranted tripping.

3) Replaced some utility channels with lower case characters with upper case.

4) Replaced a momentary with LONG_PULSE for STS2 mass centering function.

5) Added SUS Point calculation blocks (detailed in 25913.) ISI will take the overhead of calculating this to ease the load of the SUS model.

6) Added Stage2 senscorr DQ channels for T240 & CPS.
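Regarding item 1 above, one way to avoid a request that spans the storage-rate change is to split the time range at the change before fetching. The sketch below only does the splitting. The function name is an assumption for illustration, and no data-access library is called.

```python
def split_at_change(start_gps, stop_gps, change_gps):
    """Return one or two (start, stop) ranges, none of which spans change_gps.

    All three arguments are GPS seconds. The change time has to be supplied
    by the caller; it is not known to this sketch.
    """
    if start_gps >= stop_gps:
        raise ValueError("start_gps must be earlier than stop_gps")
    if start_gps < change_gps < stop_gps:
        # The request straddles the change: fetch each side separately.
        return [(start_gps, change_gps), (change_gps, stop_gps)]
    return [(start_gps, stop_gps)]
```

Each returned range can then be requested on its own, so no single request mixes the old and new data rates.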

The LVEA main crane rail shimming as suggested by the survey crew from Duane Hartman & Associates Inc. has been completed. A copy of the shim changes will be entered in the DCC soon.

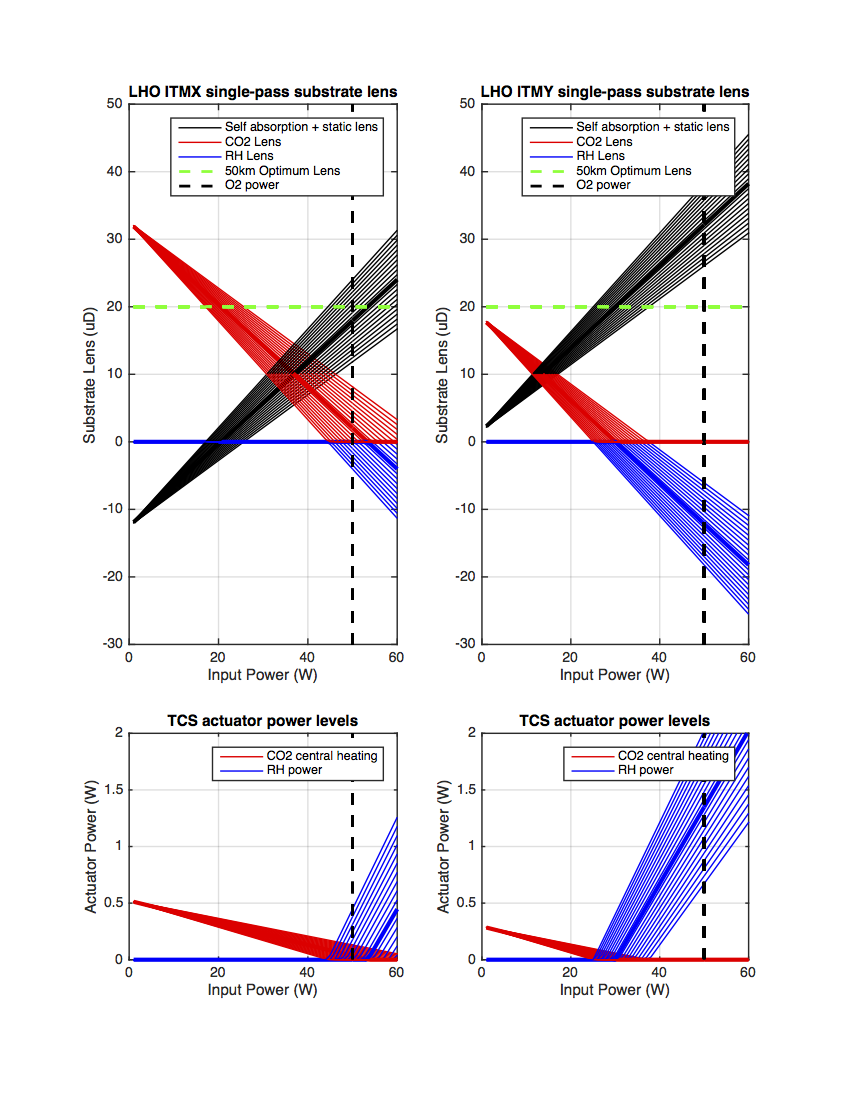

The attached plot (and script) shows the nominal TCS power levels required for O2 to correct for just the ITM substrate lenses.

The following values are assumed:

The bottom line is that we will cease to need central heating around 45W on ITMX and 30W on ITMY and will need to start using the RHs to compensate for the thermal lenses. No consideration is yet given to HOM correction with annular CO2 heating.

Subject: Re: RC as-built design

Date: May 14, 2015 at 7:54:37 PM EDT

Hi All,

The value of the thermal lens that was always used when adjusting the cavities is 50 km.

The issue which caused all of the confusion last summer was one of definition; i.e. what does it mean to have a 50 km thermal lens. The plots Muzammil put together, on which the decision to include the thermal lens was based, modeled the thermal lens as being inside the ITM. The model we used to adjust the optic positions had the 50 km thermal lens immediately in front of the ITM. The effective focal length in the two cases differs by a factor of n (the index of refraction), with it being stronger in the model which was used to calculate the positions. Because of this the positions were tuned to have a slightly stronger thermal lens than was originally decided on based on Muzammil's plots.

Fortunately, Lisa and I discovered that this is essentially a non-issue since the length changes needed to tune for the two different thermal lenses are less than the length changes needed to compensate for tolerances in the measured radii of curvature of the optics.
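As a rough illustration of the factor-of-n statement in the email above: if the 50 km lens placed immediately in front of the ITM is taken as the reference, the same nominal lens modeled inside the ITM acts as a weaker lens by a factor of n. The value n ≈ 1.45 (fused silica near 1064 nm) is an assumption here and is not stated in the email.

```latex
f_{\mathrm{eff}}^{\mathrm{inside}} = n \, f_{\mathrm{eff}}^{\mathrm{front}},
\qquad
f_{\mathrm{eff}}^{\mathrm{front}} = 50\ \mathrm{km},\quad n \approx 1.45
\;\Longrightarrow\;
f_{\mathrm{eff}}^{\mathrm{inside}} \approx 72.5\ \mathrm{km}
```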

Thank you Aidan for working on this.

Speaking of the thermal lensing, we (the LHO crews) have been discussing a possible TCS pre-loading strategy. Here are some summary points of our (future) strategy:

Any comments/questions are welcome.

Sheila, Jenne, Matt, Rob, Evan, Lisa

Several things happened today, more details will be posted later:

Higher-than-before jitter coupling seems to have made a lot of excess noise peaks. See these high coherence peaks between DARM and various IMC WFS signals (only WFS DC signals are shown).

It doesn't look like this is intensity noise caused by the jitter (look at the low coherence between DARM and the ISS second loop signal; the IM4_TRANS_SUM coherence is also low even when the IM4_TRANS_PIT coherence is high).

Something seems to be excited on the PSL table (look at the coherence between DARM and PSL table accelerometer).

What happened in the PSL since Feb 26 2016 16:30 UTC when the IFO was in good low noise lock?

Lisa, Evan

We repeated the DARM-to-OMC coupling test (25852) at full power.

Tentatively, the change in the violin mode heights with the OMC locked and unlocked suggests only a 75 % coupling into the OMC (i.e., a 25 % loss).

We are not sure why the violin mode at 502.8 Hz is fatter with the OMC locked, so we would like to repeat this test with a calibration line.

This 502.8 Hz mode seems to be one of the violin modes for ITMX, according to the wiki https://awiki.ligo-wa.caltech.edu/aLIGO/H1%20Violin%20Mode

If that's true, just to be sure, you should use one of the ETM violin modes, or ETM cal line as you mentioned.

When I was doing a beacon scan using some of the violin modes, I found that the 45MHz modulation sidebands also had finite response to the violin modes.

Assuming this came from the motion of the ITMs, I learned that I needed to use the ETM motions in order to correctly figure out the signal mode matching.