This is a quick listing of the tests in each IFO's DIAG_MAIN Guardian, along with their docstrings.

LHO

ALS: ALS checks. This can be seen when the beatnote drops to 0-2 MHz and when its power (in dBm) rises above 5. Also checks whether the ALS SHG Temperature Control Servo is on (nominal is 'ON').
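The ALS conditions can be sketched as a plain Python generator that yields one message per problem. The function and argument names are hypothetical, as are two choices made here: the values are passed in as arguments rather than read from the real ALS channels, and the 0-2 MHz band is treated as inclusive. This is an illustration of the logic the docstring describes, not the Guardian code.

```python
def als_check(beatnote_mhz, beatnote_dbm, shg_servo_state):
    """Hypothetical ALS check: yield a message for each condition in the docstring."""
    # Beatnote frequency has dropped into the 0-2 MHz band.
    if 0 <= beatnote_mhz <= 2:
        yield 'ALS beatnote frequency low ({} MHz)'.format(beatnote_mhz)
    # Beatnote power above 5 dBm.
    if beatnote_dbm > 5:
        yield 'ALS beatnote power high ({} dBm)'.format(beatnote_dbm)
    # SHG temperature control servo is nominally 'ON'.
    if shg_servo_state != 'ON':
        yield 'ALS SHG temperature servo is {}'.format(shg_servo_state)


# Example: frequency fine, power too high, servo off.
for msg in als_check(beatnote_mhz=40.0, beatnote_dbm=7.5, shg_servo_state='OFF'):
    print(msg)
```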

BEAM_DIVERTERS: The beam diverters should be closed after the ISC_LOCK state CLOSE_BEAM_DIVERTERS (#550). 1 == closed, 0 == open.
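A minimal sketch of that rule, assuming the current ISC_LOCK state index and each diverter's 1/0 readback are passed in directly. The diverter names and the function name are hypothetical. "After" is read here as strictly past state 550, so a diverter that is still closing during state 550 itself is not flagged.

```python
CLOSE_BEAM_DIVERTERS_INDEX = 550  # ISC_LOCK state number quoted in the docstring
CLOSED = 1  # per the docstring: 1 == closed, 0 == open


def beam_diverter_check(isc_lock_state_index, diverters):
    """Hypothetical check: yield a message for each diverter still open after state 550.

    `diverters` maps a diverter name to its readback (1 or 0).
    """
    if isc_lock_state_index <= CLOSE_BEAM_DIVERTERS_INDEX:
        return  # not yet past CLOSE_BEAM_DIVERTERS, so open diverters are expected
    for name, value in sorted(diverters.items()):
        if value != CLOSED:
            yield '{} beam diverter open'.format(name)


print(list(beam_diverter_check(600, {'diverter_a': 0, 'diverter_b': 1})))
```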

BECKHOFF: Beckhoff status check

Checks a few Beckhoff channels to see whether the system is running.

COIL_DRIVERS: Coil drivers functioning. If they stay at 0, a notification is raised.
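"Stay at 0" suggests looking at more than one sample. The sketch below assumes a short window of recent readbacks per driver is available and flags a driver only if every sample in the window is 0. The window, the names, and the data layout are all hypothetical.

```python
def coil_driver_check(readbacks):
    """Hypothetical check: flag any coil driver whose recent readbacks are all 0.

    `readbacks` maps a driver name to a sequence of recent samples. A single
    0 sample is tolerated; only a driver that stays at 0 is flagged.
    """
    for name, samples in sorted(readbacks.items()):
        if samples and all(s == 0 for s in samples):
            yield '{} coil driver reading 0'.format(name)


print(list(coil_driver_check({'drv_x': [0, 0, 0], 'drv_y': [0, 3.2, 0]})))
```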

ESD_DRIVER: ESD driver status

HWS_SLEDS: HWS SLEDS are on

HW_INJ: Hardware injections running

ISI_RINGUP: ISI ring-up motion as seen in alog24245. Only looking at the ETMs and non-rotational DOFs for now.

MC_WFS: MC WFS are engaged

OMC_PZT: The OMC PZT2 high voltage should be on, otherwise the OMC_LOCK Guardian will fail at the FIND_CARRIER step

OPLEV_SUMS: Optical lever sums nominal. Checked only when the suspension is aligned.
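The "only when aligned" gate can be sketched as follows: a misaligned optic is skipped entirely, since a low sum is expected then. The per-optic thresholds, the alignment flags, and the function name are hypothetical inputs; the real test's thresholds are not given in the docstring.

```python
def oplev_sum_check(sums, aligned, minimum):
    """Hypothetical check: yield a message for each low oplev sum, only when aligned.

    `sums` maps an optic name to its optical lever SUM readback, `aligned`
    maps the same names to True/False, and `minimum` holds a per-optic threshold.
    """
    for optic, value in sorted(sums.items()):
        if not aligned.get(optic, False):
            continue  # misaligned optic: a low sum is expected, so skip it
        if value < minimum[optic]:
            yield '{} oplev sum low ({})'.format(optic, value)


print(list(oplev_sum_check({'ITMY': 5}, {'ITMY': True}, {'ITMY': 1000})))
```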

PEM_CHANGE: Major environmental changes

PMV_HV: PMC high voltage is nominally around 4 V; power glitches and various other issues can shut it off.

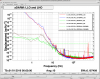

PSL_FSS: PSL FSS not oscillating

PSL_ISS: ISS diffracted power

PSL_NOISE_EATER: PSL noise eater engaged

RING_HEATERS: End station ring heaters on

SEI_STATE: SEI systems nominal. Also checks whether the WD saturation counts are greater than some percentage of the limit.
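The "some percentage of the limit" comparison can be sketched like this. The docstring does not give the percentage, so the 50% default below is a hypothetical placeholder, as are the function name and the per-chamber data layout.

```python
SATURATION_FRACTION = 0.5  # hypothetical; the docstring only says "some percent"


def wd_saturation_check(counts, limits, fraction=SATURATION_FRACTION):
    """Hypothetical check: flag chambers whose WD saturation count exceeds
    `fraction` of that chamber's limit."""
    for chamber, count in sorted(counts.items()):
        limit = float(limits[chamber])
        if count > fraction * limit:
            yield '{} WD saturations at {:.0%} of limit'.format(chamber, count / limit)


print(list(wd_saturation_check({'ETMX': 60, 'BS': 10}, {'ETMX': 100, 'BS': 100})))
```

Passing `fraction` as a parameter keeps the threshold in one place if it needs tuning.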

SEI_WD: SEI watchdog not tripped

SERVO_BOARDS: These are Beckhoff controlled channels and cannot be monitored by SDF yet.

SHUTTERS: Monitor the Beckhoff controlled shutters

SUS_PUM_WD: RMS watchdogs

SUS_WD: SUS watchdogs and OSEM input filter inmon on the quads

TCS_LASER: TCS laser OK: switched ON, power above 50 W, no flow alarm, no IR alarm. Only for TCS X for now.
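The four TCS conditions are independent, so a sketch can test each one separately and report every failure. The argument names are hypothetical, and the values are assumed to be passed in rather than read from the TCS X channels.

```python
TCS_MIN_POWER_W = 50.0  # "Power above 50W" per the docstring


def tcs_laser_check(on, power_w, flow_alarm, ir_alarm):
    """Hypothetical check for one TCS laser (TCS X only, per the docstring)."""
    if not on:
        yield 'TCS laser is off'
    if power_w <= TCS_MIN_POWER_W:
        yield 'TCS laser power low ({:.1f} W)'.format(power_w)
    if flow_alarm:
        yield 'TCS laser flow alarm'
    if ir_alarm:
        yield 'TCS laser IR alarm'


print(list(tcs_laser_check(on=True, power_w=40.0, flow_alarm=True, ir_alarm=False)))
```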

TIDAL_LIMITS: Tidal limits within range

LLO

ALS_LASER: ALS laser power above threshold

ALS_PLL: None

ALS_SERVO_POLARITY: ALS servo polarity monitors

BECKHOFF: BECKHOFF system monitor

BSOPLEV: Beamsplitter OpLev Monitor

IOP_DACKILL_MON: Monitors IOP DACKILL status and reports faults

ISS_DIFFRACTED_POWER: ISS Diffracted Power monitor

OMC_PZT_HV: OMC PZT HV monitor

PARAMETRIC_INSTABILITY: PI not rung up

PRC_GAIN: PRC gain above threshold

SEI_ISI_T240_SATMON: SEI ISI T240 saturation monitors

SUS_QUAD_ESD_BMON: QUAD BIO ESD driver digital monitors

SUS_QUAD_ESD_DMON: QUAD ESD driver digital monitors

SUS_QUAD_L3_BIO_CHECK: QUAD L3 BIO switch consistency check

SUS_QUAD_PUM_WD: QUAD PUM RMS watchdog

static_vars: None

To get these, I opened a Python shell, imported inspect and the desired module, and ran:

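```python
import inspect
import DIAG_MAIN as module  # the desired Guardian module; must be importable from this shell

for name, obj in inspect.getmembers(module):
    if inspect.isfunction(obj):
        print('{}: {}'.format(name, inspect.getdoc(obj)))
```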
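`inspect.getmembers(module)` returns every function visible in the module, including ones imported from elsewhere. A variant that keeps only functions defined in the module itself can compare each function's `__module__` with the module's name. The helper name below is hypothetical, and the demo runs on a throwaway module standing in for DIAG_MAIN, not on the real Guardian code. Helpers defined in the module itself (like `static_vars` above) are still listed, with `None` when they have no docstring.

```python
import inspect
import types


def list_docstrings(module):
    """Return (name, docstring) pairs for functions defined in `module` itself.

    Functions imported into the module from elsewhere are skipped.
    Functions without a docstring come back with None.
    """
    pairs = []
    for name, obj in inspect.getmembers(module, inspect.isfunction):
        if obj.__module__ == module.__name__:
            pairs.append((name, inspect.getdoc(obj)))
    return pairs


# Throwaway module with hypothetical contents, standing in for DIAG_MAIN.
demo = types.ModuleType('demo_diag')
exec(
    'from inspect import getdoc\n'
    'def PSL_ISS():\n'
    '    """ISS diffracted power"""\n'
    'def static_vars():\n'
    '    pass\n',
    demo.__dict__,
)
for name, doc in list_docstrings(demo):
    print('{}: {}'.format(name, doc))
```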