Still chasing the SRC Gouy phase. On Saturday I was looking for the beam that is reflected back from the SRM through the SRC and transmitted through the ITM towards the ETM, trying to see it on the ETM baffles. The bottom line is that after moving multiple optics, including the BS, ITMY, SR3 and SRM, I was not able to see any beam on the ETM baffle PDs except for the prompt reflection from the BS towards ETMY (which doesn't travel through the SRC).

Method:

ITMX, ETMX, ETMY and PRM were misaligned for this measurement. To split the beams travelling through the SRC, an optic in the SRC must be misaligned. I could look at the beams on the GigE cameras ASair and cam17, and on the analogue camera looking at HAM5. I saw no beams on the ETMY GigE camera; is this camera currently working? The baffle PDs are 35 cm, or 3 beam diameters, apart, so I was aiming to split the prompt reflection from the BS to ETMY from the beam that travels once through the SRC by at least 1.5 beam diameters.
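To put the separation target above into numbers, here is a small worked calculation. It assumes the nominal aLIGO arm length (3994.5 m) as the lever arm between the point where the two beams diverge and the ETM baffle, and it ignores any lensing in between; both are simplifying assumptions, not measured values.

```python
# Worked numbers for the beam-separation target (illustrative only).
ARM_LENGTH_M = 3994.5          # assumption: nominal aLIGO arm length, used as the lever arm
BAFFLE_PD_SPACING_M = 0.35     # baffle PDs are 35 cm apart
SPACING_IN_BEAM_DIAMETERS = 3  # ...which is about 3 beam diameters
TARGET_SPLIT_IN_DIAMETERS = 1.5

beam_diameter_m = BAFFLE_PD_SPACING_M / SPACING_IN_BEAM_DIAMETERS
target_split_m = TARGET_SPLIT_IN_DIAMETERS * beam_diameter_m
# Small-angle estimate of the angle difference needed between the two beams,
# ignoring lensing between the vertex and the ETM (assumption).
target_angle_urad = target_split_m / ARM_LENGTH_M * 1e6

print(f"beam diameter   ~ {beam_diameter_m * 100:.1f} cm")    # 11.7 cm
print(f"target split    ~ {target_split_m * 100:.1f} cm")     # 17.5 cm
print(f"angle at vertex ~ {target_angle_urad:.1f} urad")      # 43.8 urad
```

So, under these assumptions, the two beams need to leave the vertex roughly 44 urad apart; the optic misalignment that produces this depends on the path (a reflection doubles the optic's angle, for example).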

First I tried moving a single optic, e.g. the BS or SR3, by applying excitations at different frequencies in pitch and yaw, and then looking for a signal on the baffle PDs. I saw no signal other than the prompt reflection, which was present whether or not the SRM was aligned. Then I tried moving the BS to center the prompt reflected beam on a baffle PD, moving it slightly off to the side, and then moving the SRC optics one at a time with an excitation in pitch and yaw.
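Driving pitch and yaw at different frequencies lets a single baffle PD channel say which degree of freedom is moving a beam across it: demodulate the PD at each drive frequency and compare the line amplitudes. The sketch below shows that lock-in-style idea on synthetic data only; the sample rate, drive frequencies, amplitudes and noise level are all hypothetical, not the settings or channels used here.

```python
import numpy as np

FS_HZ = 256.0      # hypothetical sample rate of a baffle PD channel
DURATION_S = 60.0
F_PITCH_HZ = 3.1   # hypothetical pitch excitation frequency
F_YAW_HZ = 4.3     # hypothetical yaw excitation frequency


def demod_amplitude(signal, t, freq_hz):
    """Lock-in estimate of the amplitude of the sinusoid in `signal` at `freq_hz`."""
    i = np.mean(signal * np.cos(2 * np.pi * freq_hz * t))
    q = np.mean(signal * np.sin(2 * np.pi * freq_hz * t))
    return 2.0 * np.hypot(i, q)


# Synthetic PD signal: a beam that responds to the pitch drive only, plus white noise.
t = np.arange(int(FS_HZ * DURATION_S)) / FS_HZ
rng = np.random.default_rng(0)
pd = 0.5 * np.sin(2 * np.pi * F_PITCH_HZ * t + 0.3) + 0.1 * rng.standard_normal(t.size)

amp_pitch = demod_amplitude(pd, t, F_PITCH_HZ)
amp_yaw = demod_amplitude(pd, t, F_YAW_HZ)
print(f"pitch line: {amp_pitch:.3f}, yaw line: {amp_yaw:.3f}")
```

Choosing drive frequencies that complete a whole number of cycles in the averaging window (here 186 and 258 cycles in 60 s) keeps the two demodulated lines from leaking into each other.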

Then I tried moving the SRC optics and ITMY, with no BS misalignment. I saw no beams on the baffle PDs when moving the SRC optics, and only the prompt reflection when moving the ITM and the BS.

I could see a beam inside the SRC on the SR3 baffle, which I think was the second trip around the SRC. So I think the first trip around the SRC was making it through the ITM without being clipped, but I couldn't get it onto the ETMY baffle.

As a side note:

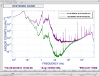

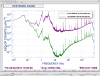

Funnily enough, while doing this misalignment business I noticed three beams on the GigE camera 'cam_17' on ISCT_6. I think these are the straight shot, the one-round-trip beam and the two-round-trip beam through the SRC. The angle between these beams also tells us the SRC Gouy phase. Stefan and I tried to look at these beams last year on the AS_air camera without success, and concluded that we couldn't split them without clipping on an optic. But cam_17 is a new camera at a different position along the AS_air beam relative to the waist, and it looks like we are seeing all three beams. Interesting.
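One way three successive beams could encode the Gouy phase, sketched under stated assumptions rather than as an established procedure: if the SRC round-trip ray matrix M has unit determinant, Cayley-Hamilton gives M^2 = 2cos(psi) M - I, where psi is the round-trip Gouy phase. Any linear coordinate of the n-th exit beam on the camera (position or angle), measured relative to where the (misaligned) cavity eigenaxis lands, then obeys y[n+2] = 2cos(psi) y[n+1] - y[n], so three beams give cos(psi) = (y0 + y2) / (2 y1). The helper names, matrices and numbers below are hypothetical; in practice the eigenaxis reference point would have to be known, and arccos cannot distinguish psi from 2*pi - psi.

```python
import numpy as np


def round_trip_gouy_from_spots(y0, y1, y2):
    """Hypothetical helper: round-trip Gouy phase (rad, in [0, pi]) from three beams.

    y0, y1, y2 are the same linear coordinate (e.g. horizontal spot position)
    of the straight-shot, one-round-trip and two-round-trip beams, measured
    relative to the image of the cavity eigenaxis. Uses y2 = 2*cos(psi)*y1 - y0.
    """
    if y1 == 0:
        raise ValueError("y1 must be nonzero to solve for cos(psi)")
    c = (y0 + y2) / (2.0 * y1)
    if abs(c) > 1.0:
        raise ValueError("|cos(psi)| > 1: unstable cavity or wrong reference point")
    return float(np.arccos(c))


def round_trip_matrix(psi, g):
    """Hypothetical unit-determinant round-trip ray matrix with trace 2*cos(psi)."""
    return np.array([[np.cos(psi), g * np.sin(psi)],
                     [-np.sin(psi) / g, np.cos(psi)]])


# Self-check with made-up numbers (not measured SRC values).
psi_true = 0.7                     # rad, hypothetical round-trip Gouy phase
M = round_trip_matrix(psi_true, g=5.0)
to_camera = np.array([1.0, 3.0])   # hypothetical linear map to the camera: y = x + 3 m * theta
u0 = np.array([1e-3, 2e-6])        # ray offset (m, rad) from the eigenaxis
spots = [to_camera @ np.linalg.matrix_power(M, n) @ u0 for n in range(3)]

psi_est = round_trip_gouy_from_spots(*spots)
print(f"round-trip Gouy phase: {np.degrees(psi_est):.1f} deg")  # 40.1 deg
```

If the eigenmode retraces its path, the one-way SRC Gouy phase would be half of the round-trip value; the sketch does not handle camera noise or the choice of reference point, which is likely the hard part with real images.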