I reset the PSL 35W FE power watchdog at 17:55 UTC (9:55 PST).

BS had saturation count of 712 & was RESET.

H1 was down overnight.

Observatory Mode was Preventative Maintenance when I arrived.

A few activities had already started (beamtube sealing, dust monitor work, & crane work). We also transitioned to Laser SAFE.

Now other activities are starting to roll in.

All time in UTC

00:12 Kyle fill out CP3, then head to EY

00:20 Elli & Nutsinee to HAM6 to adjust AS Air camera

00:44 Robert to EY debugging seismometer

00:51 Kyle back from EY

01:18 Robert back

01:20 Robert head back to EY

07:50 Lost lock trying to engage ISS 2nd loop. Too tired to relock. Leaving the ifo DOWN for the night. Evan switched RF45 modulator to OCXO.

Note for Day Shift Op: There were two relocks during my shift, roll modes were high both times. Engage DC READOUT with caution. Maybe it's a good idea to hang out at BOUNCE_VIOLIN_MODE_DAMPING for a while?

I remeasured the RFAM-to-DARM TFs for the 9 MHz and 45 MHz sidebands.

The 45 MHz measurement agrees with the previous result of ~0.1 mA/RAN. However, the 9 MHz measurement is also ~0.1 mA/RAN, which is a factor of 10 higher than what was measured previously. Note that the previous "9 MHz" RFAM measurement was really a simultaneous measurement of 9 MHz and 45 MHz RFAM, since we had no 45 MHz RFAM stabilization in place.

For the 45 MHz measurement, I injected into the error point of the 45 MHz RFAM stabilization servo and measured the TF from the OOL RFAM stabilization detector (which is already calibrated into RAN) to the DCPD sum.

For the 9 MHz measurement, I temporarily replaced the OCXO with an IFR running at 9.1 MHz and +10 dBm. Then I used the spare DAC channel to inject into the IFR modulation port, which was set to 10 % deviation, dc-coupled (which means a RAN of 0.071 for 1 V of input, though I did not measure this directly). The signal from the spare DAC is buffered by an SR560 and sent back into one of the spare ADC channels. Then I measured the TF from the spare ADC channel to the DCPD sum. This measurement relies on the 45 MHz RFAM servo suppressing the resulting fluctuations in the 45 MHz sidebands before they are applied to the EOM; judging from the OOL readback, this condition seems to be satisfied below 1 kHz. Above 1 kHz, the OOL RAN increases by less than a factor of 2 compared with no 9 MHz injection.
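
The calibration step above can be sketched as follows. This is a minimal illustration, not the script actually used: it assumes the measured TF is in mA of DCPD sum per volt of spare-ADC readback and simply divides by the quoted 0.071 RAN/V, which, as noted above, was not measured directly (0.071 is numerically close to 0.1/√2, but the log does not say how it was derived). The TF values are placeholders, not data.

```python
import numpy as np

# RAN per volt at the IFR modulation port (10 % deviation, dc-coupled).
# Value taken from the log; it was inferred, not measured directly.
RAN_PER_VOLT = 0.071


def tf_ma_per_ran(tf_ma_per_volt):
    """Convert a TF in mA (DCPD sum) per V (spare ADC readback) to mA/RAN."""
    return np.asarray(tf_ma_per_volt, dtype=complex) / RAN_PER_VOLT


# Placeholder complex TF values in mA/V at three frequencies (not measured data)
tf_volts = np.array([0.0071, 0.0071j, -0.0071])
print(np.abs(tf_ma_per_ran(tf_volts)))  # each entry is ~0.1 mA/RAN
```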

Templates live in my folder under Public/Templates/Osc/(45|9)_RFAM_2016-02-08.xml.

In addition, I took noise measurements of the 9 and 45 MHz RFAM spectra.

The 45 MHz measurement is straightforward, since we already have a calibrated, dequeued RFAM monitoring channel. (Actually I used the faster, undequeued IOP channel, calibrated it, and undid the AA filter.) The noise between 50 Hz and 1 kHz is a few parts in 10⁹/√Hz.

We don't have a similar readback channel for the 9 MHz RFAM close to the EOM, so I made a mixer-based measurement by taking an output from the ISC 9 MHz distribution amp, splitting it, and driving both sides of a level-7 mixer. I had 9 dBm into the LO and −3 dBm into the RF, so the LO was being driven hard and the RF was below the mixer's compression point. The mixer IF was terminated and then bandpassed with a 1.9 MHz filter. The IF dc was −135 mV or so.

To read out the noise, I took one of Rai's low-noise preamps (measured to have <2 nV/√Hz input-referred noise) and ac-coupled the input with a 20 µF capacitor (giving a high-pass pole at <0.1 Hz). Then I read out the noise with an SR785. I have not yet verified that the signal is above the noise floor of the mixer measurement.
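
Two numbers in this setup can be checked with a short sketch, under stated assumptions. If the LO port is driven hard enough that the IF dc level is proportional to the RF amplitude (and the RF/LO phase is steady), then an input-referred IF voltage ASD converts to RAN by dividing by the IF dc level, which gives a rough preamp-limited floor. The ac-coupling pole f = 1/(2πRC) also sets the smallest preamp input resistance compatible with a pole below 0.1 Hz; the actual input impedance is not given in the log, so that number is an inference, not a spec. The sketch ignores the mixer's own noise, the SR785 floor, and phase-noise coupling.

```python
import math

IF_DC_VOLTS = -0.135   # IF dc level from the log ("-135 mV or so")
PREAMP_NOISE = 2e-9    # V/rtHz, upper bound on preamp input-referred noise
C_COUPLING = 20e-6     # F, ac-coupling capacitor


def ran_from_if_asd(v_asd, v_dc=IF_DC_VOLTS):
    """RAN ASD (1/rtHz) from an input-referred IF voltage ASD (V/rtHz).

    Assumes a saturated LO and a fixed RF/LO phase, so that the IF dc level
    scales linearly with the RF amplitude: delta_V / V_dc = delta_A / A.
    """
    return v_asd / abs(v_dc)


def min_input_resistance(f_pole, c=C_COUPLING):
    """Smallest input resistance (ohm) that keeps the RC high-pass pole below f_pole."""
    return 1.0 / (2 * math.pi * f_pole * c)


floor = ran_from_if_asd(PREAMP_NOISE)
r_min = min_input_resistance(0.1)
print(f"preamp-limited RAN floor: {floor:.2e} /rtHz")           # ~1.5e-08 /rtHz
print(f"R_in for a pole below 0.1 Hz: {r_min / 1e3:.0f} kOhm")  # ~80 kOhm
```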

Finally, I also include the RFAM-to-DARM coupling TFs with the DARM loop undone.

1615 - 1650 hrs. local -> To and from Y-mid + Y-end. Next over-fill to be Wed, Feb. 10th before 4:00 pm.

[Keita, Jenne, Daniel]

We looked into the electronics for the new AS 90 MHz WFS and found two mix-ups. We fixed one; we will ask Fil to fix the other tomorrow during maintenance.

The cable for the binary I/O for AS B 90 was plugged into BIO 2, chassis 6. However, according to E1300079 it belongs on BIO 4, chassis 4. We made this swap, and the AS B 90 channels now look good, and respond to changes in the whitening state.

Half of the AS A 90 channels are still not good. The problem seems to be that instead of the "normal" D1002559 whitening chassis, the "split variant" whitening chassis was installed. These have the same DCC number, but the input panels and input adapter boards are totally different. For the normal version, all 8 channels are connected to the single input connector. For the split variant (which is designed for use with the OMC DCPDs), 4 channels are on each of the 2 input connectors. So, since all 8 of our WFS signals are on one cable, 4 of those signals (I3, Q3, I4, Q4) are just going nowhere. Anyhow, if Fil / Richard could put in the normal variant of the whitening chassis tomorrow during maintenance, we should be good to go with trying out our new AS90 centering loops.

Also, AS B Q1 signal looked dead, but after pushing the whitening cable in more, it is now fine.

(All Times in UTC)

H1 had been locked for 12+ hrs when I walked in, with the Intention Bit in Observe & the Observatory Mode in "Logging".

Made a few attempts at taking H1 to NLN, but dropped out due to an earthquake. Sheila also checked on PRMI transitions.

Per FAMIS request 4391, attached are oplev 7-day trends.

Everything here looks normal, nothing out of the ordinary. All active oplevs are within acceptable operating ranges.

Robert, Dave:

During O1, the spare ADC channels in the end-station PEM models were zeroed out so that their science-frame data payload could be compressed to zero. During the current inter-run commissioning period, all 6 ADC channels at each end station are now active. The SDF files h1peme[x,y]_OBSERVE.snap were updated to zero the SDF diffs and permit observation mode.

Elli, Cao

I have moved the AS_Air camera 30cm along the beampath, to optimise this camera location for an SRC gouy phase measurement. See attached photo. The camera is now 20cm in front of the beam waist, instead of 10cm behind it. We now measure 10.4cm between the beamsplitter (to CAM_17) and AS_Air.
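
For a Gaussian beam, the Gouy phase at distance z from the waist is ψ(z) = arctan(z/z_R), so moving the camera from 10 cm behind the waist to 20 cm in front of it changes the Gouy phase at the camera. The sketch below evaluates this; the Rayleigh range z_R is not given in the log, so the 0.10 m value is purely hypothetical, and the sign convention (z > 0 downstream of the waist) is our assumption.

```python
import math


def gouy_phase_deg(z, z_r):
    """Gouy phase (deg) at distance z (m) from the waist, Rayleigh range z_r (m).

    Convention assumed here: z > 0 is downstream of (behind) the waist,
    z < 0 is upstream of (in front of) it.
    """
    return math.degrees(math.atan2(z, z_r))


Z_R = 0.10  # m, hypothetical Rayleigh range; the log does not give one

old = gouy_phase_deg(+0.10, Z_R)  # camera 10 cm behind the waist
new = gouy_phase_deg(-0.20, Z_R)  # camera 20 cm in front of the waist
print(f"old {old:.1f} deg, new {new:.1f} deg, change {new - old:.1f} deg")
```

With a different z_R the size of the change differs, so this only illustrates the dependence, not the actual Gouy phase at AS_Air.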

I have left some clamps in place to mark the original position of this camera, so we can move it back quickly if necessary.

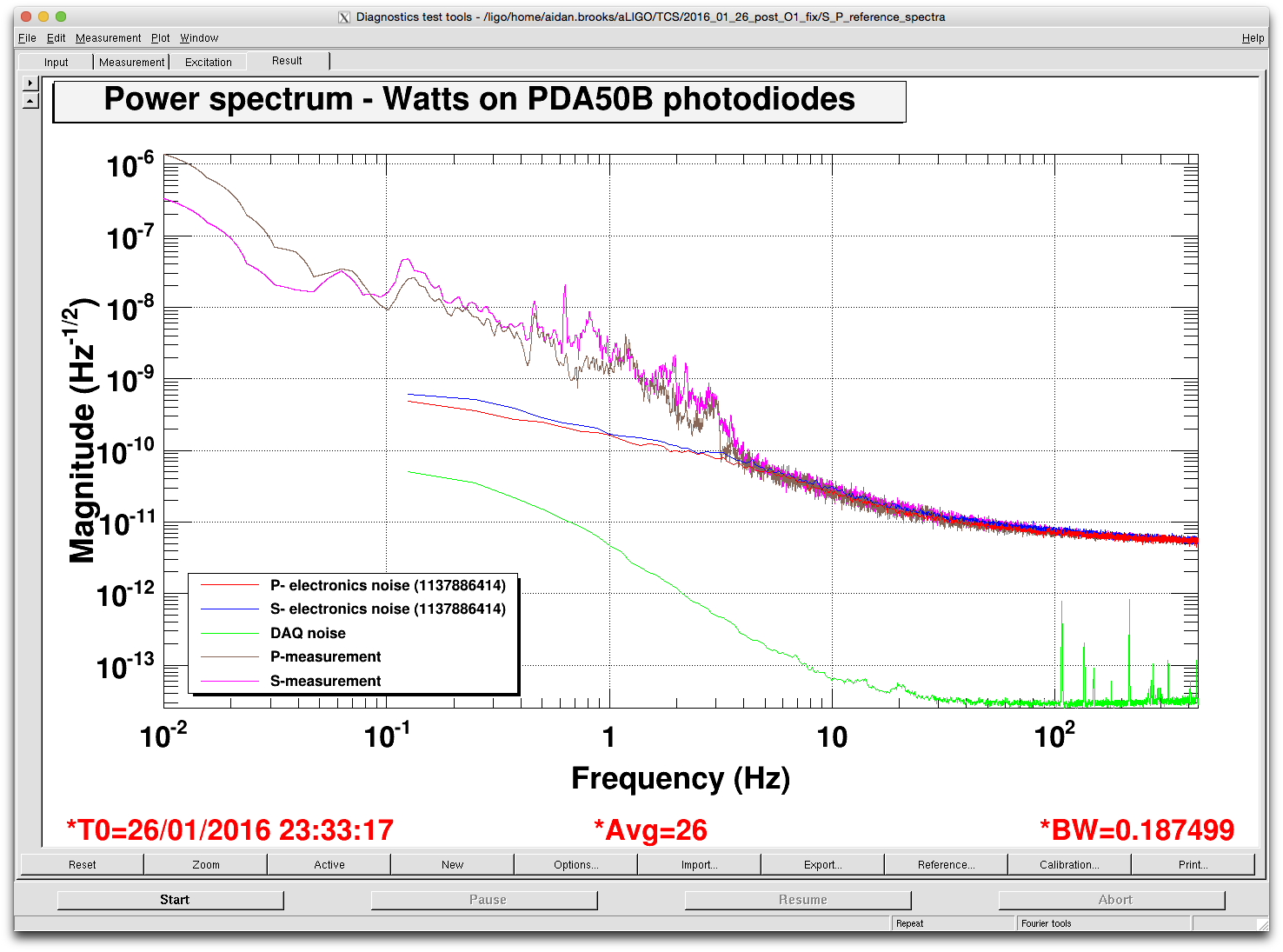

I have analyzed some initial measurements from the fast polarization photodetectors (from a lock stretch on Sat 30th Jan, centered around GPS = 1138230306). The channels H1:TCS-IFO_POLZ_P_HWS_OUT and S_HWS_OUT are, respectively, the powers measured by the P- and S-polarization photodiodes (in watts, after accounting for the dewhitening and the photodetector response).

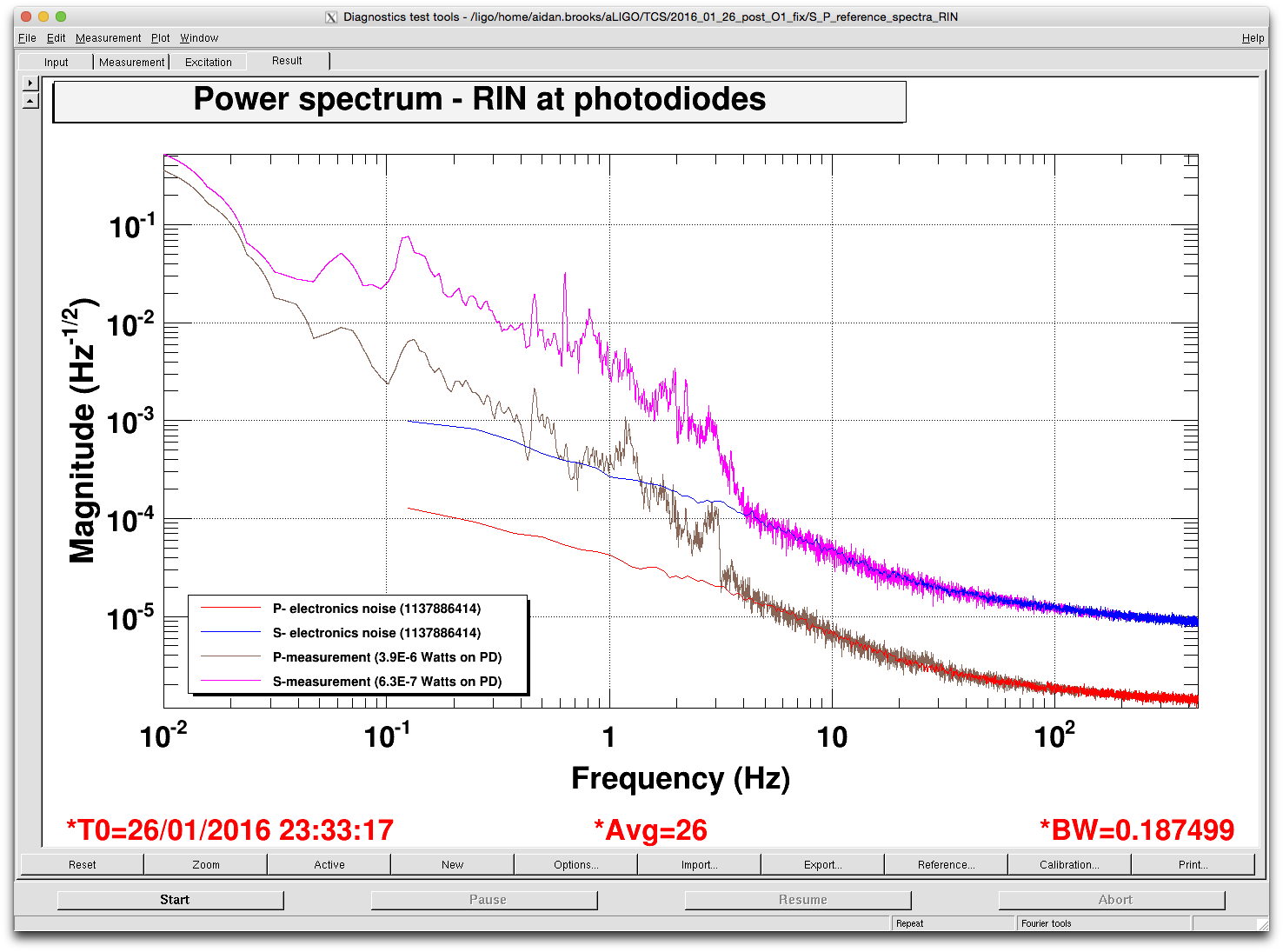

The absolute level of noise of S and P on the table is roughly the same, albeit with distinct differences in the spectra. The relative intensity noise, on the other hand, is an order of magnitude larger in the S- polarization than in P.
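
Why equal absolute noise can mean very different RIN: RIN is the power ASD normalized by the mean power, so the channel with less DC power has proportionally larger RIN. The sketch below assumes that definition (the log does not state exactly how RIN was computed) and uses synthetic data in which S and P share identical noise but S carries a tenth of the DC power; the sample rate and segment length are arbitrary.

```python
import numpy as np
from scipy.signal import welch


def rin_asd(power_w, fs, nperseg):
    """Return (f, RIN ASD in 1/rtHz): sqrt(one-sided PSD) / mean power."""
    f, psd = welch(power_w, fs=fs, nperseg=nperseg)
    return f, np.sqrt(psd) / np.mean(power_w)


fs = 1024.0
rng = np.random.default_rng(0)
noise = rng.normal(0.0, 1e-6, size=2**16)  # identical absolute noise (W)
p_pol = 1e-3 + noise                       # synthetic P channel, 1 mW mean
s_pol = 1e-4 + noise                       # synthetic S channel, 0.1 mW mean

f, rin_p = rin_asd(p_pol, fs, nperseg=4096)
_, rin_s = rin_asd(s_pol, fs, nperseg=4096)
print(np.median(rin_s[1:] / rin_p[1:]))    # ~10: same noise, 10x less DC power
```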

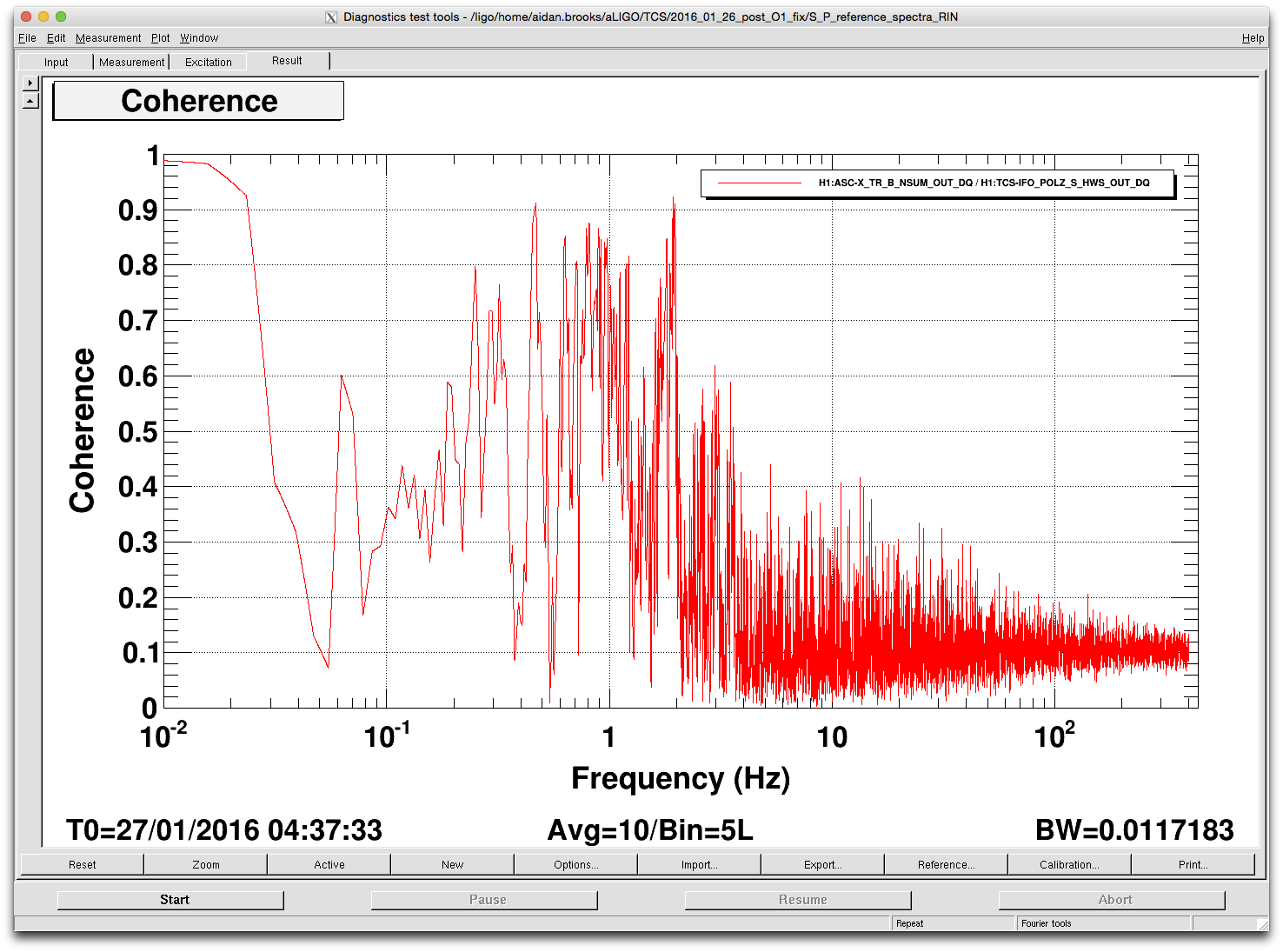

Also shown is the coherence between S and P. The fact that the coherence isn't very large indicates that something (either in the interferometer or the output chain after the BS_AR reflection) is injecting more noise on the S- polarization than on the P-polarization. Obviously, this needs a more detailed investigation.

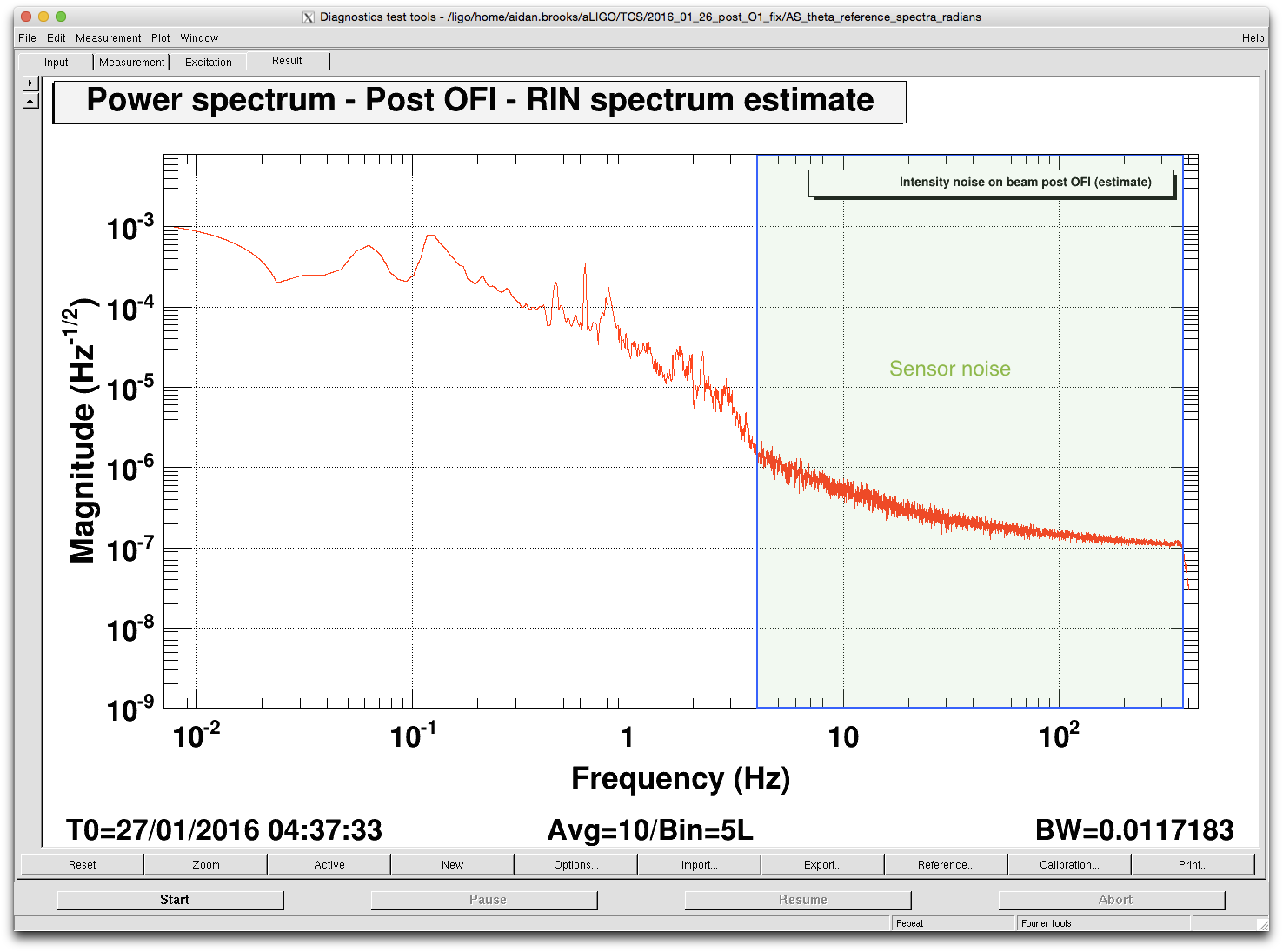

Lastly, the model includes an estimate of the noise in the polarization angle at the AS port (AS_RN). This is important because it is close to what the OFI will see and convert to intensity noise at the output of the OFI. In the horizontal axis, we're limited by sensing noise above 4 Hz.

The DC level of polarization rotation at the OFI, theta_DC, is around 0.09 rad (5.2 deg) in the middle of the lock-stretch. The estimated intensity noise on transmission through the OFI is 2*theta_DC*AS_RN and is shown in the attached spectra.
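
One way to see where the factor 2·theta_DC comes from, assuming the OFI transmits power proportional to cos²θ for a polarization rotation θ: then δP/P = -2·tanθ·δθ ≈ -2·theta_DC·δθ for small θ. The sketch below applies the quoted formula to hypothetical AS_RN values and compares the linear factor with the exact 2·tanθ factor at theta_DC = 0.09 rad; the cos² model is our assumption, not something stated in the log.

```python
import math

import numpy as np

THETA_DC = 0.09  # rad, DC polarization rotation at the OFI (from the log)


def ofi_rin(as_rn_asd, theta_dc=THETA_DC):
    """RIN after the OFI (1/rtHz) from polarization-angle noise AS_RN (rad/rtHz)."""
    return 2.0 * theta_dc * np.asarray(as_rn_asd)


print(f"theta_DC = {math.degrees(THETA_DC):.1f} deg")  # 5.2 deg
print(f"exact/linear factor: {math.tan(THETA_DC) / THETA_DC:.4f}")
print(ofi_rin([1e-6, 1e-7]))  # hypothetical AS_RN values, not data
```

At 0.09 rad the small-angle factor differs from 2·tanθ by well under 1 %, so the linear formula is adequate here.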

Attached plots: Power, RIN, Coherence, and Polarization-induced RIN after the OFI.

[Jenne, Sheila]

We measured the drive to the 90 MHz distribution amplifier and added a 2 dB attenuator, so that we are now driving the distribution amp with 10 dBm rather than 12 dBm. We re-measured the outputs of the distribution amplifier, and they were now a little over 12 dBm. So each output of the distribution amplifier also got a 2 dB attenuator, and each of the demod boards is now getting the 10 dBm that it wants for its local oscillator.
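
Because attenuators subtract in dB, the level bookkeeping above is simple arithmetic. The sketch below also converts to milliwatts to show that a 2 dB pad passes about 63 % of the power (about 15.8 mW at 12 dBm down to 10 mW at 10 dBm). The 12 dBm figures are the rounded levels quoted above; the helper names are illustrative.

```python
import math


def dbm_to_mw(p_dbm):
    """Convert dBm to milliwatts."""
    return 10 ** (p_dbm / 10)


def mw_to_dbm(p_mw):
    """Convert milliwatts to dBm."""
    return 10 * math.log10(p_mw)


def after_pad(p_dbm, pad_db):
    """Power after an attenuator: in dB units this is just a subtraction."""
    return p_dbm - pad_db


amp_input = after_pad(12.0, 2.0)  # drive into the distribution amp
lo_level = after_pad(12.0, 2.0)   # each ~12 dBm output, padded for a demod LO
print(f"{dbm_to_mw(12.0):.1f} mW -> {dbm_to_mw(amp_input):.1f} mW ({amp_input:.0f} dBm)")
print(f"pad transmission: {10 ** (-2.0 / 10):.3f}")
print(f"LO level per demod board: {lo_level:.0f} dBm")
```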

Subsystem Status

Reviewed work permits.

Items for Tues Maintenance

On the Alarm Handler, there were alarms for:

Appears to have happened ca 2016-02-07 21:00:00 Z.

Stopping and restarting h1cam18 did not fix the problem.

Filed FRS 4351 for this.

Cao, Elli, Dave O and Aidan

From LLO aLOG 21927, Aidan found that the ratio between the s and p polarizations is higher than expected. The polarization at the output port has been observed to change over time during lock stretches. In particular, the s polarization decreases by 30-50% over the course of 1-2 hours while the p polarization slowly increases. Due to various polarization-dependent optical elements in the interferometer, the s polarization picks up a different phase shift from the p polarization. Excess p-polarized light may couple into the DC readout and introduce excess noise at the output. Given the time-dependent behaviour of the s polarization, we would expect the DARM noise to change with time if polarization noise were contributing in a major way to the DARM spectrum. The DARM power spectra were therefore inspected at a number of times after power-up to investigate the dependence of the noise on the polarization state of the interferometer.

DARM power spectra from 19Dec15, 26Dec15 and 9Jan16 lock stretches show no apparent changes over time after power up. These lock stretches were during the O1 run and were chosen because the interferometer went to observing mode very quickly after the power was increased. Power spectra are recorded at 0.19 Hz bandwidth, 10 averages. The interferometer went to observing mode at 7, 5 and 2 minutes after power up on 19Dec15, 26Dec15 and 9Jan16 respectively.

There is no clear correlation between the polarization drift and the DARM spectra. Whereas there is a 30-50% decrease in s polarization over the course of 1-2 hours after power-up, the DARM noise spectrum remains stable in the 10 to 70 Hz range.
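
A quantitative version of "remains stable in the 10 to 70 Hz range" is the median ratio of a later spectrum to an earlier one over that band, which can then be set against the 30-50% change in s polarization. The sketch below is an illustration on made-up spectra only; the 0.19 Hz grid echoes the bandwidth quoted above, and the function name and synthetic shapes are our own.

```python
import numpy as np


def band_ratio(f, asd_late, asd_early, f_lo=10.0, f_hi=70.0):
    """Median ratio of two ASDs over [f_lo, f_hi] Hz; ~1 means no change in band."""
    f = np.asarray(f)
    band = (f >= f_lo) & (f <= f_hi)
    return float(np.median(np.asarray(asd_late)[band] / np.asarray(asd_early)[band]))


f = np.arange(0.0, 200.0, 0.19)          # hypothetical grid, 0.19 Hz spacing
early = 1e-19 * (1 + 100 / (f + 1))      # made-up DARM ASD shape
late = early.copy()
late[f > 100] *= 1.3                     # change only outside the 10-70 Hz band
print(band_ratio(f, late, early))        # 1.0
```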

The DARM spectra also indicate no effect from thermal lensing, as the DARM spectrum is very stable during self-heating of the interferometer. The thermal lensing relaxation time is approximately 20 minutes after power-up. The DARM power spectrum after stabilization (occurring at least 2 minutes after power-up) remains constant during the thermal lensing relaxation period and beyond.

We will be looking into the same problem at Livingston, which has a stronger polarization drift.

The time evolution of the self-heating is given here: aLOG 14634

More generally, the TCS actuator couplings are given here: T1400685

Again, Masayuki Nakano reporting with Stefan's account.

Kiwamu, Masayuki

We measured the spectra of the OMC DCPD signals with a single-bounce beam. This should help with the noise budget of the DARM signal.

1. Increase the IMC power

IMC power was increased up to 21W. Also H1:PSL-POWER_SCALE_OFFSET was changed to 21.

2. Turn off the isc-lock guardian

Requested 'DOWN' from the isc-lock guardian so that it would not do anything during the measurement.

3. Misalign the mirrors

To obtain the single-bounce beam, all mirrors except ITMX were misaligned by requesting 'MISALIGN' from each mirror's guardian.

4. Align the OM mirrors

When we first got the single-bounce beam from the IFO, there was no signal on the ASC-AS-A, B, and C QPDs. We aligned the OM1, OM2, OM3, and OMC suspensions using playback data of the OSEM signals.

5. Lock the OMC

The servo gain, 'H1:OMC-LSC_SERVO_GAIN', was set to 10 and master gain of the OMC-ASC was set to 0.1.

The DCPD output was 34 mA.

6. Measurement (without the ISS second loop)

The power spectra of the channels below were measured from 1 Hz to 7 kHz with a 0.1 Hz bandwidth:

H1:OMC-DCPD_SUM_OUT

H1:OMC-DCPD_NULL_OUT

H1:PSL-ISS_SECONDLOOP_SUM58_REL_OUT

H1:PSL-ISS_SECONDLOOP_SUM58_REL_OUT was used as the out-of-loop sensor of the ISS.

7. Close the ISS second loop

The ISS second loop was closed, using PD1-4 as the sensors for the error signal.

8. Measurement (with the ISS second loop)

Same measurement as step 6. In addition, the coherence between DCPD-SUM and SECONDLOOP_SUM was measured.

I scaled the ISS out-of-loop sensor signal, i.e. the residual intensity noise after the ISS second loop, into the same units as the OMC-DCPD signals. The scaling factor was estimated by dividing the H1:OMC-DCPD_SUM_OUT spectrum (without ISS) by the H1:PSL-ISS_SECONDLOOP_SUM58_REL_OUT spectrum (also without ISS) at 100 Hz.

I scaled both spectra (hereafter 'both' means with and without the ISS closed) by the same scaling factor.
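
The scaling step can be sketched as below, assuming both spectra are ASDs on a common frequency grid (if they are PSDs, the same ratio applies to their square roots). The factor is taken at the bin nearest 100 Hz from the no-ISS spectra and then applied to both the open- and closed-loop ISS spectra. All arrays are synthetic placeholders; because the fake DCPD spectrum is built as pure intensity noise on a 34 mA photocurrent, the factor comes out as 34 here.

```python
import numpy as np


def scale_factor(f, asd_dcpd_sum, asd_iss_rel, f_ref=100.0):
    """DCPD_SUM / ISS_REL ratio at the bin nearest f_ref (both without ISS)."""
    i = int(np.argmin(np.abs(np.asarray(f) - f_ref)))
    return float(asd_dcpd_sum[i] / asd_iss_rel[i])


f = np.arange(0.1, 1000.0, 0.1)   # hypothetical grid, 0.1 Hz bandwidth
iss_open = 1e-8 * (1 + 10 / f)    # synthetic ISS_REL ASD, ISS open (1/rtHz)
iss_closed = iss_open / 30        # synthetic ISS_REL ASD, ISS closed
dcpd_open = 34.0 * iss_open       # synthetic DCPD_SUM ASD (mA/rtHz), ISS open

k = scale_factor(f, dcpd_open, iss_open)
iss_open_ma, iss_closed_ma = k * iss_open, k * iss_closed  # same k for both
```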

The attached plots show the DCPD-SUM spectrum, the DCPD-NULL spectrum, and the scaled ISS second-loop out-of-loop sensor signals.

Both NULL signals agree with the shot noise of a PD with a 34 mA photocurrent (cyan curve) above 30 Hz; below that, they are probably limited by ADC noise.
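
For reference, the single-sided shot-noise ASD of a dc photocurrent I is sqrt(2·e·I). The sketch below evaluates it for 34 mA, in absolute and relative units; it assumes the cyan curve uses this standard formula, which the log does not state explicitly. Multiply by 1e3 to compare with DCPD channels calibrated in mA.

```python
import math

E_CHARGE = 1.602176634e-19  # C, elementary charge (exact SI value)


def shot_noise_asd(i_dc):
    """Single-sided shot-noise current ASD (A/rtHz) for a dc photocurrent i_dc (A)."""
    return math.sqrt(2 * E_CHARGE * i_dc)


i_dc = 34e-3
asd = shot_noise_asd(i_dc)
print(f"{asd:.3g} A/rtHz, relative {asd / i_dc:.3g} /rtHz")
```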

The SUM signals seem to be consistent with the scaled intensity noise above 300 Hz, and there is some coherence between the intensity noise and the OMC PD signal above 300 Hz (see the other plot). On the other hand, there seems to be some unknown noise below 300 Hz when the second ISS loop was closed.
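
The coherence check can be reproduced in spirit with SciPy's magnitude-squared coherence estimate. In the synthetic example below, half of a stand-in intensity-noise channel is added to equal-variance unrelated noise in a stand-in DCPD channel, so the expected coherence is 0.25/(0.25 + 1) = 0.2 at all frequencies. The sample rate, duration, and segment length are arbitrary choices, not the settings used for the measurement.

```python
import numpy as np
from scipy.signal import coherence

fs = 2048.0
rng = np.random.default_rng(0)
n = int(64 * fs)                        # 64 s of synthetic data
intensity = rng.normal(size=n)          # stand-in for the ISS out-of-loop signal
unrelated = rng.normal(size=n)          # noise not seen by the ISS sensor
dcpd_sum = 0.5 * intensity + unrelated  # stand-in for DCPD_SUM

f, cxy = coherence(dcpd_sum, intensity, fs=fs, nperseg=2048)
print(f"mean coherence: {cxy[1:-1].mean():.2f}")  # close to 0.2
```

With finite averaging the estimate is biased slightly upward, so a small positive offset from 0.2 is expected.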

This unknown noise might come from length motion of the OMC. I attached another plot showing the same channel (upper) and the OMC error signal with a different servo gain of the OMC LSC loop. The error signal and the DCPD-SUM signal seem to have a similar structure around 100 Hz. I haven't done any analysis yet, because these plots were measured after the whitening filter had some trouble; we are planning to repeat the measurement with the whitening filter.

As Masayuki reported above, we see unexplained coherent noise on the DCPDs in the 10-200 Hz band. However, according to an offline analysis with spectrograms, it appears to be somewhat non-stationary. This indicates the existence of uncontrolled (and undesired) interferometry somewhere.

We should repeat the measurement with a different misalignment configuration.

Later, we became concerned about noise artefacts that could be introduced in this measurement by not-quite-misaligned mirrors producing a scattering shelf or something similar. To test this theory, we looked back at the data in spectrograms and searched for non-stationary behavior. It seems that we had two different non-stationary components: one below 10-ish Hz and the other between 10 and 200 Hz. Attached are spectrograms produced by LIGODV web for a 20 s stretch in which we had 20 W from the PSL, the OMC locked with a gain of 10, and the ISS closed using PDs 1 through 4 as in-loop sensors.

In DCPD-SUM, it is clear that the component below 10 Hz was suddenly excited at t = 13 s. Also, the shelf between 100 and 200 Hz appears to move up and down as a function of time.
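
The same kind of non-stationarity check can be sketched with SciPy's spectrogram: sum the PSD over a band in each time slice and look for steps. The example uses synthetic data only, 20 s of white noise with a 5 Hz tone switched on at t = 13 s to mimic the sudden excitation below 10 Hz; the sample rate, tone, and segment length are all hypothetical, and this is not how the LIGODV web plots were made.

```python
import numpy as np
from scipy.signal import spectrogram


def band_power_vs_time(x, fs, f_lo, f_hi, nperseg):
    """Sum of the spectrogram PSD over [f_lo, f_hi) Hz for each time slice."""
    f, t, sxx = spectrogram(x, fs=fs, nperseg=nperseg)
    band = (f >= f_lo) & (f < f_hi)
    return t, sxx[band].sum(axis=0)


fs = 512.0
t_axis = np.arange(int(20 * fs)) / fs
rng = np.random.default_rng(1)
x = rng.normal(size=t_axis.size)
x += 20 * np.sin(2 * np.pi * 5.0 * t_axis) * (t_axis >= 13.0)  # burst at t = 13 s

t, low_band = band_power_vs_time(x, fs, 0.0, 10.0, nperseg=512)
print(low_band[t < 12].mean(), low_band[t > 14].mean())
```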

Also, here are two relevant ISS signals, which did not show any obvious correlation with the observed non-stationary behavior.

ITMx ISI taken to DAMPED due to being tripped for Genie lift craning.