(TJ writing as Travis)

Reminder to Operators: We are NOT adding a second stage of whitening anymore. We are calibrated for only one stage, and for the rare case of zero.

The SSD RAID attached to h1tw0 (and h1nds0) has failed. Until the problem can be diagnosed and fixed, minute trend data won't be available from h1nds0.

late entry for yesterday's CDS maintenance

DAQ frame writer install

Dave, Jim:

The second fast frame writer was connected to the LDAS Sataboy-raid system. All frame writers were then renamed; details are in Jim's alog.

Adding Duotone Channels to the Science Frame

Keita, Dave:

The h1omc, h1calex and h1caley models were modified to add the DUOTONE timing signals from all three buildings to the science frame at 16 kHz. These signals are read out by a filter module; to capture the raw signal before any filtering, gain or offset is applied, we use the IN1 channel.

H1CALEX.ini:[H1:CAL-PCALX_FPGA_DTONE_IN1_DQ]

H1CALEY.ini:[H1:CAL-PCALY_FPGA_DTONE_IN1_DQ]

H1OMC.ini:[H1:OMC-FPGA_DTONE_IN1_DQ]

Loading all the filters

Sheila, Dave:

SUS PRM and SRM had partially loaded filters, we performed a full load of these systems.

Restarted External Alerting System

Dave:

I restarted the external alert system, which had stopped between 6pm and 7pm PDT Monday. I'm looking into a mechanism to restart this system automatically.

ER8 Day 23. Maintenance day. No unexpected restarts. DAQ completion of frame and trend writers. OMC and CAL changes to add duotone channel to science frame.

model restarts logged for Tue 08/Sep/2015

2015_09_08 09:21 h1tw0

2015_09_08 09:57 h1tw1

2015_09_08 12:12 h1omc

2015_09_08 12:15 h1calex

2015_09_08 12:17 h1caley

2015_09_08 13:10 h1broadcast0

2015_09_08 13:10 h1dc0

2015_09_08 13:10 h1nds0

2015_09_08 13:11 h1broadcast0

2015_09_08 13:11 h1nds0

2015_09_08 13:12 h1nds1

2015_09_08 13:12 h1tw0

2015_09_08 13:12 h1tw1

2015_09_08 13:18 h1dc0

2015_09_08 13:25 h1dc0

2015_09_08 13:26 h1nds0

2015_09_08 13:26 h1nds1

2015_09_08 13:28 h1broadcast0

2015_09_08 13:28 h1tw0

2015_09_08 13:28 h1tw1

2015_09_08 13:58 h1nds1

ER8 Day 22. No restarts reported

8:34 Noticing that LLO was down, and determining that the OMC whitening hadn't been engaged, I turned on the whitening. This took the IFO out of Observing mode for 2 minutes, but gained us a few Mpc.

9:18 Big glitch, ETMy saturation

10:42 lockloss, ITMx saturation. I reloaded ISC_DRMI guardian per Sheila's instructions.

11:25 lockloss @ CARM_ON_TR, PRM saturation. I also fixed a typo in the ISC_DRMI guardian that started throwing errors.

11:36 lockloss @ PREP_TR_CARM. PRM, SRM, ETMx, BS, SR2, and MC2 saturation.

11:49 began initial alignment after seeing things looked a little wonky.

12:05 started locking

12:45-12:52 SUS OMC SW watchdog tripped 8 times

13:47 lockloss @ REDUCE_CARM_OFFSET

14:02 lockloss @ PREP_TR_CARM. PRM, SRM, ETMx, BS, SR2, and MC2 saturation.

14:10 lockloss @ REDUCE_CARM_OFFSET

14:16 lockloss @ REDUCE_CARM_OFFSET

14:26 lockloss @ PREP_TR_CARM. PRM, SRM, ETMx, BS, SR2, and MC2 saturation.

14:34 lockloss @ DRMI_LOCKED, PRM, SRM saturation.

14:56 lockloss @ REDUCE_CARM_OFFSET.

Pretty tough relocking this morning for no obvious reason; wind and seismic are both calm. The couple of times I tried locking PRMI, it always locked on split modes, which required touching up both BS and PRM. Afterwards it would lock DRMI OK, but the next time it required touching up again. Only once was I able to make the PRMI->DRMI transition trick work.

Yesterday the grout forms were placed and secured around the HEPI piers at HAM 1, with the intention of pouring the grout on the next maintenance day (09/15). I also checked the nitrogen flow at all of the LTS containers. I also craned the TCS chiller, which I had craned over the tube last week for Jodi, back over the tube for Jeff B.

As Jeff reported yesterday (alog 21280), we have completed the assessment of the suspension scaling factors. To finish the suspension side of the calibration, all that remained was to update the CAL CS filters so that they accurately simulate the latest suspension transfer functions. So I implemented and tested the new filters today. It looks good so far: as expected, no strange spectral shape or drastic change in the DARM strain curve was seen. The filters are ready to go.

The screenshot attached below is a quick comparison of the calibration: the blue curve is with the old ER7 suspension filters engaged and the red curve with the new ER8 suspension filters engaged. As shown in the plot, there is no big difference, as expected. Unfortunately, the low-frequency components below a few tens of Hz seemed to be sufficiently non-stationary that this approach does not allow an accurate comparison between the old and new filters.

(Overview and implementation process)

The implementation of the suspension filters was done in the following order:

autoquack to implement the discretized models into CAL CS.

Steps 1, 2 and 3 are done by a single MATLAB script, which can be found at:

aligocalibration/trunk/Runs/ER8/H1/Scripts/CALCS/quack_eyresponse_into_calcs.m

The script first calls H1DARMOLGTFmodel_ER8.m to collectively obtain all the up-to-date parameters. It then extracts the necessary suspension responses and converts them into discretized filters. The way we handle the suspension filters is described in the next section. Once the filters are in discrete form, the script runs autoquack to directly edit the foton file of CAL CS.

Step 4 is done by a different script:

aligocalibration/trunk/Runs/ER8/H1/Scripts/CALCS/copySus2Cal.py

It simply copies the relevant parts of H1SUSETMY.txt to H1CALCS.txt. There is one exception: FM1 of ETMY_L1_LOCK_L, which caused trouble before (alog 20700 and alog 20725). The script now modifies this particular filter to get rid of the integrator, following our latest approach of replacing the filter module with zpk([0.3], [0.01], 30, "n") as described in alog 20725. Optionally, one can run a python script which turns on the necessary switches and filters in CAL CS. The script can be found at:
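To see what the replacement filter does, here is a minimal Python sketch that evaluates zpk([0.3], [0.01], 30, "n"). It assumes foton's "n" convention, in which each root f0 (in Hz) contributes a unity-DC-gain factor (1 + i f / f0); the function name is hypothetical and this is not the copySus2Cal.py code.

```python
import cmath
import math


def zpk_n_response(f, zeros_hz, poles_hz, gain):
    """Evaluate a zpk filter in the (assumed) foton "n" convention.

    Each root f0 in Hz contributes a factor (1 + i*f/f0), so the DC gain
    equals `gain` as long as no root sits at exactly 0 Hz (not handled here).
    """
    num = 1 + 0j
    for z in zeros_hz:
        num *= 1 + 1j * f / z
    den = 1 + 0j
    for p in poles_hz:
        den *= 1 + 1j * f / p
    return gain * num / den


# Replacement for FM1 of ETMY_L1_LOCK_L: zpk([0.3], [0.01], 30, "n")
for f in (0.0, 0.01, 0.3, 1.0, 10.0, 100.0):
    h = zpk_n_response(f, [0.3], [0.01], 30.0)
    print(f"{f:7.2f} Hz  |H| = {abs(h):8.4f}  phase = {math.degrees(cmath.phase(h)):7.2f} deg")
```

Under that assumption the DC gain is bounded at 30 (an integrator would grow without limit), and well above 0.3 Hz the magnitude approaches 30 × 0.01 / 0.3 = 1.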

aligocalibration/trunk/Runs/ER8/H1/Scripts/CALCS/setCalcsEtmy.py

Right now, both python scripts edit the ITMY part of CAL CS instead of the ETMY part so that we can make a comparison between them: the ITMY signal paths represent the new suspension filters, while the ETMY paths still use the old ER7 filters.

(Accuracy of the suspension responses)

One of the tricky parts in implementing the suspension filters in a front-end model is that we have to pay attention to how accurately the front end can mimic the suspension responses. There are practical limitations associated with this. For example, a single filter module cannot handle more than 10 SOS (second-order section) segments. Also, some distortion of the filter is inevitable because of the finite sampling rate (see for example G1501013). So it is extremely important to check whether the installed filters are accurate.
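To make these two limitations concrete, the sketch below (hypothetical helper names) estimates how far a bilinear (Tustin) transform without prewarping pulls an analog feature down in frequency, f_d = (fs/π)·atan(π f_a / fs), and counts the second-order sections a given pole/zero count needs. Both the discretization method and the 16384 Hz rate are assumptions for illustration, not a statement of what foton or autoquack actually do.

```python
import math

MAX_SOS_PER_MODULE = 10  # per-module limit quoted in this entry


def bilinear_warped_freq(f_analog_hz, fs_hz):
    """Where an analog feature at f_analog_hz lands after a bilinear
    transform without prewarping: f_d = (fs/pi) * atan(pi * f_a / fs)."""
    return fs_hz / math.pi * math.atan(math.pi * f_analog_hz / fs_hz)


def sos_sections_needed(n_zeros, n_poles):
    """Second-order sections needed to hold a filter of this order."""
    return math.ceil(max(n_zeros, n_poles) / 2)


fs = 16384.0  # assumed model rate
for f in (10.0, 100.0, 1000.0, 3000.0):
    fd = bilinear_warped_freq(f, fs)
    print(f"{f:7.1f} Hz -> {fd:8.2f} Hz ({100 * (fd - f) / f:+.2f} %)")

n_sos = sos_sections_needed(n_zeros=24, n_poles=26)  # hypothetical filter order
print(n_sos, "SOS:", "fits" if n_sos <= MAX_SOS_PER_MODULE else "needs more than one module")
```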

To assess the accuracy, I compared the discrete suspension responses with the full state-space suspension model. The plot shown right below shows the magnitude of the transfer functions only. The dashed lines are the full state-space models we are trying to mimic, and the solid lines are the resulting discrete models. As one can see, the TST stage looks accurate, as the dashed and solid curves lie almost exactly on top of each other. On the other hand, the PUM and UIM stages show a large difference at high frequencies. This is due to a compromise for the extremely high-Q violin modes, which I explain in the next section. Apart from the violin modes, UIM has the largest discrepancy around the mechanical resonances at 0.3 - 1 Hz. Note that the y-axis is in m/N.

If we take the ratio (discrete transfer function) / (full state-space model), it looks like this:

As mentioned earlier, the TST stage is very accurate across the entire frequency band. On the other hand, the PUM and UIM deviate from the full state-space models at high frequencies. Although both deviate from the full ss models by 2.4 % at 100 Hz and 0.2 % at 30 Hz in magnitude, this is already good enough. PUM is the leading actuator in the 1-30 Hz band, which is where the PUM is accurate at a sub-percent level. UIM takes care of frequencies below 1 Hz and does not really matter above 1 Hz, although I made a slight hand tweak as described in a subsequent section of this alog. Overall, this result is satisfying.

(Violin modes' Qs are intentionally lowered)

The current full ss suspension model assumes violin-mode Qs on the order of 1e9. Regardless of whether this is accurate, it is not a good idea to implement such filters directly in a front end. If they were implemented with such a high Q, their impulse responses would last practically forever. Also, the resonance is so tall that any kind of numerical precision error would ring it up. So I artificially lowered their Qs to 1e3 so that they damp on a time scale of a few seconds. At the beginning, I tried removing the violin modes completely, but this turned out to be a bad idea because it produced a large discrepancy in PUM, by 1 or 2 % at 30 Hz, where the accuracy matters. So instead, I decided to keep the violin modes but with much lower Qs.

I edited quack_eyresponse_into_calcs.m so that it automatically detects high-Q components and lowers the Q to a user-specified value. The code still internally keeps the high-Q version of the filters, so switching back to high Q is trivial (if necessary in the future). This lower-Q modification is applied in PUM and UIM.
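A minimal sketch of the Q-lowering idea, assuming poles are stored as s-plane values in rad/s: for each complex pole it computes f0 = |p|/2π and Q = |p|/(2·(-Re p)), and if Q exceeds a threshold it moves the pole to the same f0 with the new Q. It also evaluates the 1/e amplitude ring-down time τ = Q/(π f0). This is an illustration in Python with hypothetical function names, not the MATLAB code in quack_eyresponse_into_calcs.m; the 500 Hz mode is a made-up example.

```python
import math


def pole_f0_q(p):
    """Natural frequency (Hz) and Q of a stable s-plane pole p (rad/s)."""
    w0 = abs(p)
    return w0 / (2 * math.pi), w0 / (-2 * p.real)


def lower_q(poles, q_max):
    """Copy of `poles` in which every complex pole with Q > q_max is moved
    to the same natural frequency but with Q = q_max."""
    out = []
    for p in poles:
        if p.imag == 0:
            out.append(p)  # real poles carry no resonance; leave unchanged
            continue
        f0, q = pole_f0_q(p)
        if q <= q_max:
            out.append(p)
            continue
        w0 = 2 * math.pi * f0
        re = -w0 / (2 * q_max)
        im = math.copysign(w0 * math.sqrt(1 - 1 / (4 * q_max ** 2)), p.imag)
        out.append(complex(re, im))
    return out


def ringdown_time(f0_hz, q):
    """1/e amplitude decay time of a resonance: tau = Q / (pi * f0)."""
    return q / (math.pi * f0_hz)


# Hypothetical 500 Hz violin-mode pole pair with Q = 1e9, plus a real pole
w0 = 2 * math.pi * 500.0
q_high = 1e9
p = complex(-w0 / (2 * q_high), w0 * math.sqrt(1 - 1 / (4 * q_high ** 2)))
new = lower_q([p, p.conjugate(), complex(-2 * math.pi, 0)], q_max=1e3)
print([pole_f0_q(x) for x in new[:2]])
print(f"tau(Q=1e9) = {ringdown_time(500, 1e9):.3g} s, tau(Q=1e3) = {ringdown_time(500, 1e3):.3g} s")
```

For a 500 Hz mode, Q = 1e9 gives τ of roughly 6e5 s (about a week), while Q = 1e3 gives about 0.64 s.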

(A compromise factor in UIM)

There was another trick. Since UIM needs more poles and zeros than TST and PUM, it was difficult to represent the UIM mechanical response in a single filter module. I could have split it into multiple filter banks, but as UIM does not really impact the calibration, I decided to go with a single filter module. I reduced the number of zeros and poles by running MATLAB's minreal with a high tolerance, which resulted in the frequency-dependent inaccuracy shown in the plots above. In addition to the frequency-dependent components, the response in the 1-30 Hz band became lower than that of the full ss model by roughly 2%. Even though this does not matter, for completeness I raised the discrete response by an extra scale factor so that the UIM is accurate in the 1-30 Hz band.
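The scale-factor tweak can be sketched as follows, assuming both responses are sampled on a common frequency vector: take the mean of |discrete/full| over 1-30 Hz and use its inverse as the extra gain. The helper name and the synthetic 2 % offset are hypothetical; the actual factor was chosen in the MATLAB workflow.

```python
import math


def band_scale_factor(freqs_hz, h_discrete, h_full, f_lo=1.0, f_hi=30.0):
    """Return 1 / mean(|h_discrete / h_full|) over f_lo <= f <= f_hi.

    Multiplying the discrete filter's gain by this factor removes the
    average magnitude offset against the full model in that band."""
    ratios = [abs(hd / hf) for f, hd, hf in zip(freqs_hz, h_discrete, h_full)
              if f_lo <= f <= f_hi]
    if not ratios:
        raise ValueError("no frequency points inside the band")
    return len(ratios) / sum(ratios)


# Hypothetical example: a free-mass-like 1/f^2 response and a discrete
# model that reads 2 % low at every frequency.
freqs = [0.5 * k for k in range(1, 201)]   # 0.5 ... 100 Hz
full = [-1.0 / (2 * math.pi * f) ** 2 for f in freqs]
discrete = [0.98 * h for h in full]
print(band_scale_factor(freqs, discrete, full))
```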

(A coarse comparison between ER7 and ER8 suspension filters)

This is a screenshot of foton comparing the suspension filters from ER7 and the ones for ER8. The black solid, blue solid and green solid lines are the new UIM, PUM and TST filters in meters/counts respectively. The dashed lines are the old ER7 suspension filters. There are some remarks:

(Implemented filters)

Here is a screenshot of the filter modules for the new suspension responses in CAL CS. Currently they are installed in the ITMY filter modules for convenience. The suspension filters are split into multiple modules, as described in the following.

I calculated the time-varying calibration parameters kappa_tst, kappa_pu, kappa_A, kappa_C and the cavity pole for the lock stretches beginning on September 1st, using the new ER8 DARM model. All the data used for this analysis had a guardian state greater than 600 (NOMINAL LOW NOISE). The plots are attached.

Details:

The equations used in this calculation are taken from document T1500377, which describes the time-varying parameters in detail.

For the displacement produced by the Pcal, I used the following script to advance the Pcal signal by 21 us (LHO alog 21320), remove the AA filter and dewhiten the signal:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/S7/Common/MatlabTools/xpcalcorrectionER8.m
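The three Pcal corrections listed above (time advance, AA removal, dewhitening) can be sketched as a single complex factor per frequency. This assumes a pure time advance, exp(+i 2π f τ) with τ = 21 us, and that the AA and whitening responses are available as callables; those callables and the function name are hypothetical, and this is not the content of xpcalcorrectionER8.m.

```python
import cmath
import math


def pcal_correction(f_hz, advance_s=21e-6, aa_response=None, whitening_response=None):
    """Complex factor to multiply a Pcal readback spectrum by at f_hz.

    - advance by advance_s seconds: x(t + tau) <-> X(f) * exp(+i 2 pi f tau)
    - remove the AA filter: divide by its response, if supplied
    - dewhiten: divide by the whitening response, if supplied
    """
    factor = cmath.exp(1j * 2 * math.pi * f_hz * advance_s)
    if aa_response is not None:
        factor /= aa_response(f_hz)
    if whitening_response is not None:
        factor /= whitening_response(f_hz)
    return factor


for f in (30.0, 300.0, 1000.0):  # example frequencies only
    c = pcal_correction(f)
    print(f"{f:7.1f} Hz: phase advance = {math.degrees(cmath.phase(c)):.3f} deg")
```

At 1 kHz the 21 us advance alone amounts to about 7.6 degrees of phase.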

I was not able to get kappa_tst close to 1, which is the expected value of all the kappas. However, the variation in kappa_tst is within 1%, which is good news. I am not sure where the problem is and will look into it further. For the rest of the plots I assumed kappa_tst to be 1. This assumption only affects kappa_pu, because it is the only factor that depends on kappa_tst.

Plots:

The first plot shows the real part of kappa_tst, kappa_pu and kappa_A. kappa_pu and kappa_A are close to 1, and their variation during this time is less than 1%. kappa_tst is under investigation.

The second plot shows the imaginary part of the parameters in plot 1. All these values are expected to be zero or close to zero. Again, kappa_pu and kappa_A are indeed very close to zero, but kappa_tst is not.

The third plot includes kappa_C (change in optical gain) and the cavity pole. The optical gain within a lock stretch seems very stable, but it varies by a few percent between locks. In one particular stretch it varied by almost 20% (not sure why; could be commissioning activities). There is also evidence of some transients at the beginning and end of the locks. I will try to sort the data by intent bit in future analyses. The cavity pole shows some trend and variation between lock stretches, but nothing crazy.

The script used to analyze this is committed to the svn:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Projects/PhotonCalibrator/drafts_tests/ER8_Data_Analysis/Scripts/plotCalparameterFromSLMData.m

For the record, this analysis was performed on all data with guardian lock states above 600 (which I think is DC readout), i.e. more than just the lock stretches with the observation intent bit on.
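The data selection described above (guardian state greater than 600, optionally also requiring the observation intent bit) and a "variation within 1%" style summary can be sketched as below. The function names, the peak-to-peak definition of variation and the sample values are assumptions for illustration, not the logic of plotCalparameterFromSLMData.m.

```python
def select_samples(values, states, intent=None, min_state=600):
    """Keep values whose guardian state is greater than min_state.

    If an `intent` sequence is given, also require its flag to be true
    (e.g. the observation intent bit)."""
    keep = []
    for i, (value, state) in enumerate(zip(values, states)):
        if state <= min_state:
            continue
        if intent is not None and not intent[i]:
            continue
        keep.append(value)
    return keep


def peak_to_peak_percent(values):
    """Peak-to-peak spread relative to the mean, in percent."""
    mean = sum(values) / len(values)
    return 100.0 * (max(values) - min(values)) / mean


# Hypothetical kappa_pu samples and matching guardian states
kappa = [1.000, 1.004, 0.997, 5.0]
state = [700, 700, 700, 100]   # last sample is out of lock
selected = select_samples(kappa, state)
print(selected, f"{peak_to_peak_percent(selected):.2f} %")
```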

Jeffrey K, Kiwamu I, Darkhan T

Summary

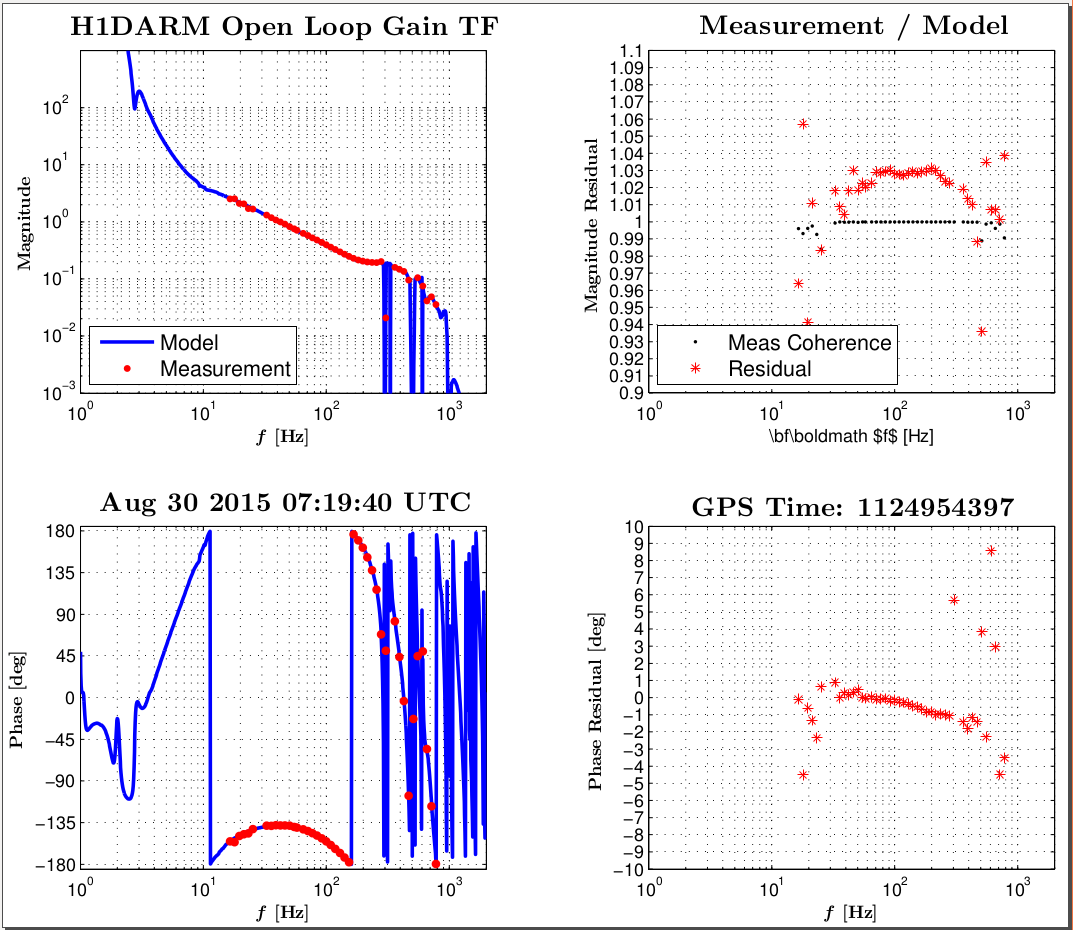

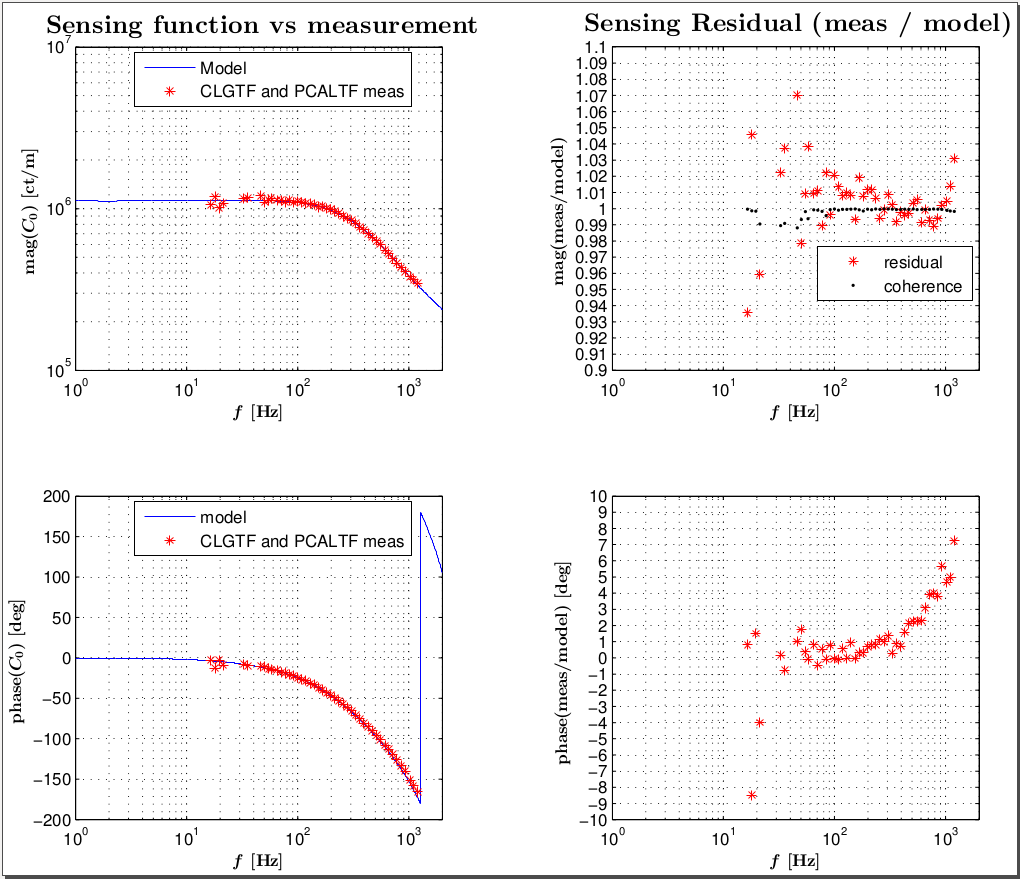

We implemented most of the analysis results from recent calibration measurements into the H1 DARM OLGTF model for ER8/O1 (see details below). Sensing, actuation and the digital filters' TF models that make up a DARM OLG TF model are used for calculating the interferometer strain h(t), tracking and possibly correcting temporal variations in the DARM parameters.

The comparison of the H1 DARM OLGTF model vs. a DARM OLGTF measurement taken on 2015-08-30 07:19:40 UTC gave a residual of about +/- 3% in magnitude and +/- 10 degrees in phase over [~30, ~500] Hz.

The measurements of a DARM OLGTF and a PCALY to DARMTF that we used for estimating the parameters of the model were taken within the same lock stretch (LHO alog 21023). The sensing function obtained from combining both of these TF measurements gave a residual of under +/- 4% in magnitude and under +/- 8 degrees in phase when compared to the DARM OLGTF model in the frequency range of [~20, ~1100] Hz. We see an unresolved trend in the phase of the sensing function residual.
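For reference, magnitude and phase residuals like the ones quoted above are commonly formed from the ratio measurement/model. The sketch below assumes that convention (the model script may define it the other way round) and uses made-up values; the function name is hypothetical.

```python
import cmath
import math


def residual(measured, model):
    """Magnitude residual (%) and phase residual (deg) of measured/model."""
    ratio = measured / model
    return 100.0 * (abs(ratio) - 1.0), math.degrees(cmath.phase(ratio))


# Hypothetical point: measurement 3 % high and 10 deg ahead of the model
model = 2.0 - 1.5j
measured = model * 1.03 * cmath.exp(1j * math.radians(10.0))
mag_pct, ph_deg = residual(measured, model)
print(f"{mag_pct:+.2f} %, {ph_deg:+.2f} deg")   # +3.00 %, +10.00 deg
```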

Details

This model is based on the earlier version of this script described in LHO alog 20819; however, the earlier model agreed with the DARM OLGTF measurements to a lesser degree: about +/-10% in magnitude and +/-5 degrees in phase up to 200 Hz.

Below we list the major improvements / changes to the DARM OLGTF model that were implemented since then (to track changes all the way from the ER7 model, see LHO alog 20819 for the list of previous changes).

The calibration team at LHO took a large number of measurements that were used to estimate the parameters of the actuation function (see LHO alogs 20846, 21023). Over the last two weeks the calibration team analyzed these measurements (see LHO alogs 21015, 21142, 21189, 21232, 21283). As a result, we adapted the DARM OLGTF model to handle a set of zero-pole TFs that differ for each quadrant of the drivers. Implementing these helped to significantly reduce the residual between the model and the measurement.

We are planning to work more on looking for sources of inaccuracies of the DARM OLGTF model.

Development is underway on separating the OMC DCPD frequency dependencies for the two DCPDs. The existing DARM OLGTF model assumes that both DCPDs have the same zeros and poles, which, according to LHO alog 21126, is not entirely true and could introduce systematic errors.

The model was uploaded to calibration SVN:

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Scripts/DARMOLGTFs/H1DARMOLGTFmodel_ER8.m (r1341)

The parameter file associated with the measurement from Aug 30 2015 07:19:40 UTC (GPS: 1124954397) is in the same directory:

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Scripts/DARMOLGTFs/H1DARMparams_1124954397.m (r1339)

Plots produced by this model were committed to SVN into:

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Results/DARMOLGTFs/2015-09-08_H1DARM_*.pdf

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Results/DARMOLGTFs/2015-09-08_H1DARM_*.eps

Kiwamu, Hang, Nutsinee

After we made some modifications to the new lockloss tool (on my copy, not the original), we started to investigate the times of ETMY saturations with it. I randomly picked seven glitches out of twenty listed by the saturation alarm. Three glitches caused the range to drop no more than 50 Mpc, four caused the range to drop below 10 Mpc.

Range dropped below 10 Mpc

| Time (UTC) | First optic to saturate | Time before saturation alarm (s) | BNS range (Mpc) | 2.5+ mag earthquake within 30 minutes before saturation time |
|---|---|---|---|---|
| Sep7 01:30:45 | ETMY L3 | 0.427 | 6 | None |
| Sep7 00:48:01 | ETMY L3 | 0.608 | 0.5 | 2.8M in Alaska, 00:18:41 UTC |
| Sep6 17:57:06 | ETMY L3 | 0.583 | 17 | None |
| Sep6 17:03:24 | PR3 M3 (LR and UL) | 3.543 | 3 | None |

Range dropped no more than 50 Mpc

| Time (UTC) | First optic to saturate | Time before saturation alarm (s) | BNS range (Mpc) | 2.5+ mag earthquake within 30 minutes before saturation time |
|---|---|---|---|---|
| Sep7 04:46:19 | ETMY L3 | 0.694 | 57 | 5.1M in Solomon Islands, 04:23:02 UTC |
| Sep6 19:45:34 | ETMY L3 | 0.053 | 52 | 3.0M in Dominican Rep, 19:22:59 UTC |
| Sep6 19:31:36 | ETMY L3 | 0.296 | 58 | 3.0M in Dominican Rep, 19:22:59 UTC |

Most of the chosen glitches look much the same (low-frequency fluctuation, glitch, then ring-down) and don't tell us anything, except that all three small glitches happened within 30 minutes after an earthquake. However, I find the plots from Sep 6 17:03:24 particularly interesting: PR3 M3 glitched ~3 seconds before ETMY. Kiwamu thought this might shed some light on the mysterious ETMY glitches.

One thing I noticed is that quite often the ETMY saturations happened at least half a second before the saturation alarm caught them. Why is that?

As for the plots, blue is the data of the indicated channel, the red shaded area is the RMS, and the low, mid and high bands are defined as:

low: f>0.25 Hz

mid: 16 Hz < f < 128 Hz

high: f>128 Hz (until Nyquist frequency)
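A band-limited RMS of the kind shown in these plots can be sketched in the frequency domain with Parseval's theorem, using the band edges listed above (a band with no upper edge runs to Nyquist). This is an illustrative stand-in with a hypothetical function name, not the lockloss tool's implementation, which may band-pass filter in the time domain instead; the naive DFT keeps it dependency-free.

```python
import cmath
import math


def band_rms(x, fs_hz, f_lo, f_hi=None):
    """RMS of x in the band f_lo < f < f_hi (f_hi=None means up to and
    including Nyquist), from a one-sided DFT and Parseval's theorem.
    Naive O(N^2) DFT: fine for short illustrative records."""
    n = len(x)
    total = 0.0
    for k in range(n // 2 + 1):
        f = k * fs_hz / n
        if f <= f_lo or (f_hi is not None and f >= f_hi):
            continue
        xk = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        # DC and (for even n) Nyquist bins appear once; other bins twice
        one_sided = k == 0 or (n % 2 == 0 and k == n // 2)
        total += (1.0 if one_sided else 2.0) * abs(xk) ** 2 / n ** 2
    return math.sqrt(total)


# Hypothetical 1 s record at 512 Hz: 32 Hz (amplitude 1) + 200 Hz (amplitude 0.5)
fs = 512.0
x = [math.sin(2 * math.pi * 32 * i / fs) + 0.5 * math.sin(2 * math.pi * 200 * i / fs)
     for i in range(512)]
bands = {"low": (0.25, None), "mid": (16.0, 128.0), "high": (128.0, None)}
for name, (lo, hi) in bands.items():
    print(name, round(band_rms(x, fs, lo, hi), 4))
```

Each sine of amplitude A contributes A/√2 to the RMS of the band that contains it.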

I have also attached the list of channels I looked into (basically all ST1 and ST2 ISI and all the SUS MASTER OUT DQ channels). There are SO MANY MORE glitches to be analyzed. In case anyone is interested in helping, I saved the modified code in /ligo/home/nutsinee.kijbunchoo/Templates/locklosses/python

Evening Shift Summary

LVEA: Laser Hazard

IFO: Unlocked

Intent Bit: Commissioning

All Times in UTC (PT)

23:00 (16:00) Take over from TJ

23:00 (16:00) Continue locking the IFO after maintenance window

02:01 (19:01) IFO locked at NOMINAL_LOW_NOISE, 22.9W, 65Mpc

02:06 (19:06) Set Observatory Mode to commissioning

02:56 (19:56) Add 125ml water to crystal chiller

05:31 (22:31) Switch to Calibration mode for Kiwamu

06:43 (23:43) Switch to Observation mode

07:00 (00:00) Turn over to Travis

Shift Summary & Observations:

- Commissioners/Ops worked on relocking the IFO until about 02:00.
- Set to commissioning mode: commissioners working while LLO is down.
- Set to Observation mode at 06:43: wind low, range @ 67Mpc.
- The first half of the shift was spent recovering from maintenance and on some commissioning work. After locking, commissioners continued to work on the IFO. We have been locked since 02:00. Some glitches, mostly due to commissioning/calibration work.
- After clearing SDF, switched to Observing mode at 06:43 (23:43). NOTE: The LVEA was not swept before going into Observing mode. The decision was not to risk knocking the IFO out of lock by walking around in the LVEA. The lights are on. It is not known what other noise sources are present at this time.

J. Kissel, K. Izumi

We haven't taken these two measurements since the tail end of Calibration week, and we want to confirm that our model (which is under development) is still valid after 1.5 weeks, so we've taken new DARM OLG TFs and PCAL to DARM TFs. We'll analyze these in the next few days and confirm what we can determine from the calibration lines.

Data lives here:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/

DARMOLGTFs/2015-09-08_H1_DARM_OLGTF_7to1200Hz.xml

PCAL/2015-09-08_PCALY2DARMTF_7to1200Hz.xml

For the record, this pair of measurements takes almost exactly 1 hour. However, to get the measurement time down to that one hour, one needs to run the DARM OLG TF first and start the PCAL TF once the DARM OLG TF reaches the frequency points around 15 to 10 [Hz]. Note that calibration lines were turned OFF during these measurements. They're now back ON.

Parameter file:

aligocalibration/trunk/Runs/ER8/H1/Scripts/DARMOLGTFs/H1DARMparams_1125813052.m

The analysis results in pdf format are attached. It seems that adjusting the optical gain and cavity pole to match the Pcal measurement results in a frequency-dependent discrepancy in the open-loop model of 3% at 120 Hz. The residual in the open loop seems similar to what Darkhan reported (alog 21318).

J. Kissel

I haven't analyzed the data yet, but in order to put a nail in the coffin of the UIM actuation model-to-measurement discrepancy, and to nail down the output impedance network, I've measured the transfer function of the H1 SUS ETMY's UIM driver today in analog with an SR785. I drove at the DAC input of the coil driver and measured the response across the output pins of the driver on a breakout, notably including the real BOSEM, not just a 40 [ohm] resistor as a dummy OSEM. This was done in each state of the driver for all four channels.

From what I saw on the screen while the measurements were ongoing, there is indeed a zero in the transfer function at around 100 [Hz]. Having had a little bit of low-frequency measurement time to think about it, I think this is the non-negligible inductance of the giant BOSEM coil (Rc = 42.7 [Ohm], Lc = 11.9 [mH]). I will confirm and give more details once the analysis is complete.

This is not a priority, so I'll analyze the data in due time, but the data lives here:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/Electronics/2015-09-08_H1SUSETMY_UIM_Driver/TFSR785_08-09-2015_*.txt

with a diary of which measurements are which here (also attached for your convenience):

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/Electronics/2015-09-08_H1SUSETMY_UIM_Driver/MeasurementDiary_20150908.txt

The config file for the usual GPIB function is here:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/Electronics/2015-09-08_H1SUSETMY_UIM_Driver/TFSR785_UIMCoilDriver_Config.yml

Collected OBSERVE.snap files for use in full observing configuration monitoring.

With the SDF happy (green) and Guardian nominal (Robust Isolated) and green, I made an OBSERVE.snap file with the SDF_SAVE screen,

choosing EPICS DB TO FILE & SAVE AS == OBSERVE

This saves the current values of all switches and maintains the monitor/not monitor bit into the target area, e.g. /opt/rtcds/lho/h1/target/h1hpietmy/h1hpietmyepics/burt as OBSERVE.snap

This file is then copied to the svn: /opt/rtcds/userapps/release/hpi/h1/burtfiles as h1hpietmy_OBSERVE.snap and added/committed as needed.

The OBSERVE.snap in the target area is now deleted and a soft link is created for OBSERVE.snap to point to the svn copy:

ln -s /opt/rtcds/userapps/release/hpi/h1/burtfiles/h1hpietmy_OBSERVE.snap OBSERVE.snap

Finally, the SDF_RESTORE screen is used to select the OBSERVE.snap softlink and loaded with the LOAD TABLE button.
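The copy-and-link part of this procedure can be scripted; here is a minimal Python sketch with a hypothetical function name. It only reproduces the file steps (copy to the svn working copy, delete the target-area file, create the soft link); the svn add/commit and the SDF screen steps are still done by hand.

```python
import os
import shutil


def install_observe_snap(target_burt_dir, svn_dir, model):
    """Move <target_burt_dir>/OBSERVE.snap into svn as <model>_OBSERVE.snap
    and leave a soft link OBSERVE.snap -> svn copy in the target area.

    Returns the path of the svn copy. svn add/commit is not done here."""
    target_snap = os.path.join(target_burt_dir, "OBSERVE.snap")
    svn_snap = os.path.join(svn_dir, f"{model}_OBSERVE.snap")
    shutil.copy2(target_snap, svn_snap)   # copy into the svn working copy
    os.remove(target_snap)                # delete the target-area file
    os.symlink(svn_snap, target_snap)     # same as: ln -s <svn_snap> OBSERVE.snap
    return svn_snap
```

For the HEPI ETMY example this would be called with the target directory /opt/rtcds/lho/h1/target/h1hpietmy/h1hpietmyepics/burt, the svn directory /opt/rtcds/userapps/release/hpi/h1/burtfiles and the model name h1hpietmy.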

Now, for the HEPIs for example, the not-monitored channels handled by the guardian will have values different from the safe.snap, but since they are still not monitored, the SDF remains green and happy. And if the HEPI platform trips, it will still be happy and green, because the not-monitored channels are still not monitored.

What's the use of all this you say? Okay, I say, go to the SDF_TABLE screen and switch the MONITOR SELECT choice to ALL (vs MASK.) Now, the not monitored channel bit flag is ignored and all records are monitored and differences (ISO filters when the platform is tripped for example) will show in the DIFF list until guardian has the platform back to nominal.

Notice too that the SDF_OVERVIEW has the pink light indicating that monitor ALL is set. This should stay this way unless Guardian is having trouble reisolating the platform; then the operator may want to re-enable the bit mask to make any switches that guardian isn't touching more apparent.

But rather than rely on selecting ALL in the SDF_MON_ALL selection, I would suggest you actually set the monitor bit to True for all channels in the OBSERVE.snap. That way we don't have to do a two-step select process to activate it, and we can indicate if there are special channels that we don't monitor, for whatever reason.

Yes, Jameson. That is why I selected the ALL button, allowing all channels to be monitored.

Hugh, I think the OBSERVE snaps should have the monitor bit set for all channels. In some sense that's the whole point of having separate OBSERVE files: we use them to define setpoints against which every channel should be monitored.

We have large glitches when we switch the coil driver state for PRM in every example that I've looked at. This causes locklosses, about 5 over the weekend.

One thing that we can do to make this less painful is to move the switch earlier in the locking process (for example, we can try doing this right after DRMI locks rather than waiting until DRMI on POP).

A real solution might be to change the front end model so that we can switch each coil separately.

We could try tuning the delay, as described for the LLO BS 16295

We didn't get a chance to look at the delay, but we have made a few guardian changes that should help mitigate this situation.

We now increase the PRM and SRM offloading after we transition to 3F, before starting the CARM offset reduction. This gives us a bit more headroom when we switch the coil driver. We also moved the coil driver switching earlier (it is now in ISC_DRMI in the LOCKED_3F state) so that we don't waste as much time if it breaks the lock.

Evan, Sheila, Jeff B, Ed

Earlier today we were knocked out of lock by a 5.2 in New Zealand, the kind of thing that we would like to be able to ride out. The lockloss plot is attached, we saturated M3 of the SRM before the lockloss and PRM M3 was also close to saturating.

While LLO was down, we spent a little time on the offloading, basically the changes described in alog 21084. This offloading scheme worked fine in full lock for PRM; however, we ran into trouble using it during the acquisition sequence. Twice we lost lock on the transition to DRMI, and twice we lost lock when the PRM coil state switched in DRMI on POP. However, we can acquire lock with the new filter in the top stage of PRM and SRM but the old low gain (-0.02). We've been able to turn the gain up by a factor of 2 in full lock twice, so I've left the guardian set to turn up the gain in M2 (before the integrator) in the noise tunings step.

If anyone decides they need to undo this change overnight, they can comment out lines 344-347 and 2508-2514 of the ISC_LOCK guardian.

Before we started this, the PRM top mass damping was using 10000 cnts rms at frequencies above a few hundred Hz, because of problems in the OSEMS (alog 21060 ). Evan put some low passes at 200 Hz in RT and SD OSEMINF which reduces this to 2000 cnts rms. Jeff B accepted this change in SDF.

The second attached screenshot shows the PRM drives, the references are in the minutes before the earthquake dropped us out of lock. The red and blue curves show the current drives, with the high frequency reduction in M1 due to Evan's low pass, and the new offloading on. The last attached screenshot shows SRM drives with the new offloading.

I don't think the 5.2 EQ is the cause of the lockloss.

According to your plot, the lockloss happened on Sep 08 at 00:31:07 UTC. The 5.2 EQ happened on Sep 07 at 20:24:56.84 UTC and hit the site at 20:38:21 UTC according to Seismon (so 4 hours earlier). The BLRMS plot confirms this (see attachment).

Around loss time, the ground seems as quiet as usual.

I was mistaken in identifying the earthquake, but the ground motion did increase slightly, which seems to be what caused the lockloss.

While Jeff and Darkhan have been trying to get the actuator coefficients right for calibration, I worked on an orthogonal task: checking the latest optical gain of DARM.

Summary points are:

The plots below show the optical gain measured by Pcal Y, with the loop suppression taken out by measuring the DARM suppression within the same lock stretch.

I used the data from Aug 28 and 29 (alog 21190 and alog 21023, respectively). The parameters were estimated with the fitting function of LISO. I limited the fitting frequency range to above 30 Hz because the measurement below that does not seem to obey physics; I will mention this in the next paragraph. The cavity pole came out at around 330 Hz, which is slightly lower than what Evan independently estimated from the nominal Pcal lines (alog 21210). Not sure why at this point.

One thing we have to pay attention to is a peculiar behavior of the magnitude at low frequencies: it tends to be lower by 20-30 % at most, while the phase does not show any evidence of extra poles or zeros. I think this behavior has been consistently seen since ER7. For example, several DARM open loops from ER7 show very similar behavior (see open-loop plots from alog 18769), as does a recent DARM open-loop measurement (see the plot from alog 20819). Keita suggested making another DARM open-loop measurement with a smaller amplitude, for example by a factor of two, at the cost of longer integration time, in order to determine whether this is associated with some kind of undesired nonlinearity, saturation or similar.

I did a fit similar to what Shivaraj did at LLO (alog # 20146) to determine the time delay between PCAL RX and DARM_ERR. Both signal chains have one each of an IOP (65 kHz), a USER model (16 kHz) and an AA filter between them. The expected time delay between PCAL and DARM_ERR, as shown in the diagram below, should be about 13.2 us in total. I used an optical gain of 1.16e+6 from the alog above and fitted for the cavity pole and time delay. I got a cavity pole estimate of 324 Hz, close to what Kiwamu got from his fitting, and a time delay of 21 us. This is 7.8 us more than what we expected from the model.
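A sketch of this kind of fit, assuming a single-pole sensing function with a pure delay, C(f) = H·exp(-i 2π f τ)/(1 + i f/f_c), with the optical gain H fixed at 1.16e+6 and a brute-force grid search over f_c and τ. This is not LISO or the fitting code used at LLO, and the function names are hypothetical; the data here are synthetic, generated from the quoted best-fit values, so the sketch only demonstrates that the procedure recovers them.

```python
import cmath
import math


def sensing_model(f_hz, gain, f_cc_hz, delay_s):
    """Single-pole sensing function with a pure time delay."""
    return gain * cmath.exp(-2j * math.pi * f_hz * delay_s) / (1 + 1j * f_hz / f_cc_hz)


def fit_pole_and_delay(freqs, data, gain, poles_hz, delays_s):
    """Grid search for the (cavity pole, delay) pair minimizing the summed
    squared relative complex residual, with the optical gain held fixed."""
    best = None
    for fc in poles_hz:
        for tau in delays_s:
            cost = sum(abs(d / sensing_model(f, gain, fc, tau) - 1) ** 2
                       for f, d in zip(freqs, data))
            if best is None or cost < best[0]:
                best = (cost, fc, tau)
    return best[1], best[2]


# Synthetic check (not measured data)
freqs = [30.0 * 1.1 ** k for k in range(36)]   # ~30 Hz to ~850 Hz
data = [sensing_model(f, 1.16e6, 324.0, 21e-6) for f in freqs]
fc, tau = fit_pole_and_delay(freqs, data, 1.16e6,
                             poles_hz=range(300, 351),
                             delays_s=[k * 1e-6 for k in range(41)])
print(fc, round(tau * 1e6, 1))
```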

C. Cahillane, D. Tuyenbayev I have updated the strain uncertainty calculator to use Darkhan's latest ER8 model along with ER8 data.

The ER8 model: /ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER8/H1/Scripts/DARMOLGTFs/H1DARMOLGTFmodel_ER8.m

The ER8 params: /ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER8/H1/Scripts/DARMOLGTFs/H1DARMparams_1124597103.m

The ER8 uncertainty calc: /ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/S7/Common/MatlabTools/strainUncertaintyER8.m

Again, there are slight discrepancies between Plot 1 and Plot 7. To reiterate, Plot 1 uses the full O1 calibration method and real data, while Plot 7 uses the Inverse Response TF with no data:

Plot 1:

$$
dh = \frac{\text{DARM\_ERR}/C_{\text{true}} + A_{\text{true}} \cdot \text{DARM\_CTRL}}{\text{DARM\_ERR}/C_0 + A_0 \cdot \text{DARM\_CTRL}}
$$

Plot 7:

$$
dh = \frac{(1 + G_{\text{true}})/C_{\text{true}}}{(1 + G_0)/C_0}
$$
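As a consistency check on the two expressions above: if DARM_CTRL = D·DARM_ERR (D being the DARM digital filter) and G = C·D·A, then the numerator of Plot 1 equals DARM_ERR·(1 + G_true)/C_true and the denominator DARM_ERR·(1 + G_0)/C_0, so DARM_ERR cancels and Plot 1 reduces to Plot 7. Both relations are idealizations assumed here; in real data the two plots can differ. The sketch below, with hypothetical function names and arbitrary values, verifies the algebra numerically at a single frequency.

```python
def dh_plot1(darm_err, c_true, a_true, c_0, a_0, d):
    """Plot 1 ratio from error/control signals, with DARM_CTRL = D * DARM_ERR."""
    darm_ctrl = d * darm_err
    return (darm_err / c_true + a_true * darm_ctrl) / (darm_err / c_0 + a_0 * darm_ctrl)


def dh_plot7(c_true, a_true, c_0, a_0, d):
    """Plot 7 ratio from the response functions, with G = C * D * A."""
    g_true = c_true * d * a_true
    g_0 = c_0 * d * a_0
    return ((1 + g_true) / c_true) / ((1 + g_0) / c_0)


# Hypothetical single-frequency values (arbitrary units)
c_0, a_0, d = 1.2e6 - 3e5j, 2e-7 + 1e-8j, 4.0 - 2.0j
c_true, a_true = 1.05 * c_0, 0.97 * a_0
print(dh_plot1(3.0 + 1.0j, c_true, a_true, c_0, a_0, d))
print(dh_plot7(c_true, a_true, c_0, a_0, d))
```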

This time, I believe the discrepancies are a result of the as-of-yet incomplete ER8 model. I will rerun this test when Kiwamu, Jeff, and Darkhan have all finished updating the ER8 model.

Note that the ugly spikes in uncertainty have disappeared from Plots 1-6 (as opposed to aLOG 21065).

This is because now I do not have to interpolate my DARM_ERR and DARM_CTRL FFT frequency vector thanks to Darkhan's clever ER8 model functions par.{A,C,D,G}.getFreqResp_total(freq) where freq is a frequency vector I define. This is possible because each portion of the ER8 model is an LTI object. Thanks Darkhan.

I have updated these plots for the newly updated ER8 Calibration Model at GPS time 1125747803 (Sep 08 2015 11:43:06 UTC). Now I am getting better agreement between the data-based strain error plot (Plot 1) and the response function error plot (Plot 7). I am now getting a conspicuous spike in error at 37.3 Hz in Plots 1-6, which is exactly where the X_CTRL calibration line is. I cannot be sure why this line accounts for such large errors in the strain calculation with only 10% changes in kappa_tst, etc.