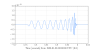

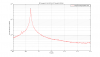

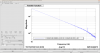

To ride out earthquakes better, we would like a boost in DHARD yaw (alog 21708). I exported the DHARD yaw OLG measurement posted in alog 20084, made a fit, and tried a few different boosts (plots attached).

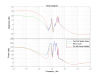

I think a reasonable solution is to use a pair of complex poles at 0.35 Hz with a Q of 0.7 and a pair of complex zeros at 0.7 Hz with a Q of 1 (and of course a high-frequency gain of 1). This gives us 12 dB more gain at DC than we have now, and we still have an unconditionally stable loop with 45 degrees of phase everywhere.

A foton design string that accomplishes this is

zpk([0.35+i*0.606218;0.35-i*0.606218],[0.25+i*0.244949;0.25-i*0.244949],9,"n")gain(0.444464)
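
As a cross-check of the numbers in the design string, here is a minimal Python sketch. It assumes that foton's "n" option takes roots in Hz with positive real parts and normalizes each factor as (1 + i f/root), so that the third argument is the DC gain. That convention is an assumption on my part rather than something stated in this entry, and the helper names are hypothetical.

```python
import numpy as np

def roots_from_f0_q(f0, q):
    """Complex-conjugate root pair in Hz (positive real part) for a given f0 and Q."""
    re = f0 / (2.0 * q)
    im = np.sqrt(f0**2 - re**2)
    return np.array([re + 1j * im, re - 1j * im])

def boost_response(f, zeros_hz, poles_hz, k, extra_gain):
    """Response at frequency f (Hz), ASSUMING the normalized form
    extra_gain * k * prod(1 + i f/z) / prod(1 + i f/p)."""
    f = np.atleast_1d(np.asarray(f, dtype=float))
    num = np.prod([1 + 1j * f / z for z in zeros_hz], axis=0)
    den = np.prod([1 + 1j * f / p for p in poles_hz], axis=0)
    return extra_gain * k * num / den

# Values quoted in the text: zeros at 0.7 Hz, Q = 1; poles at 0.35 Hz, Q = 0.7.
zeros = roots_from_f0_q(0.7, 1.0)
poles = roots_from_f0_q(0.35, 0.7)

# Gains taken from the design string: zpk gain 9 and gain(0.444464).
dc_gain = abs(boost_response(0.0, zeros, poles, 9.0, 0.444464)[0])
hf_gain = abs(boost_response(1e4, zeros, poles, 9.0, 0.444464)[0])
dc_db = 20 * np.log10(dc_gain)

print("zeros [Hz]:", zeros)
print("poles [Hz]:", poles)
print("DC gain [dB]:", dc_db)
print("gain at 10 kHz:", hf_gain)
```

Under these assumptions the roots come out as 0.35 ± 0.606i Hz and 0.25 ± 0.245i Hz, the DC gain is 9 × 0.444464 ≈ 4 (about 12 dB), and the gain tends to 1 at high frequency, which matches the description above.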

I don't want to save the filter right now because, as I learned earlier today, that will cause an error on the CDS overview until the filter is loaded, but there is an unsaved version open on opsws5. If anyone gets a chance to try this at the start of maintenance tomorrow, it would be awesome. Any of the boosts currently in the DHARD yaw filter bank can be overwritten.

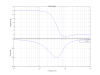

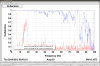

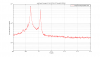

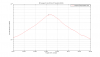

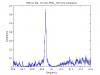

We tried this out this morning. I turned the filter on at 15:21, and it was on for several hours. The first screenshot shows error and control spectra with the boost on and off. As you would expect, there is a modest increase in the control signal at low frequencies and a bit more suppression of the error signal. The IFO was locked during maintenance activities (including Praxair deliveries), so there was a lot of noise in DARM. I tried on/off tests to see if the filter was causing the excess noise and saw no evidence that it was.

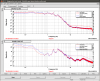

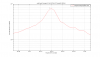

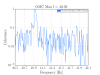

We didn't get the earthquake I was hoping for during the maintenance window, but there was some large ground motion due to activities on site. The second attached screenshot shows a lockloss when the Chilean earthquake hit (21774), the time when I turned on the boost this morning, and the increased ground motion during maintenance day. The maintenance day ground motion that we rode out with the boost on was 2-3 times higher than the EQ, but not at the same time in all stations.

We turned the filter back off before going to observing mode, and Laura is taking a look to see if there was an impact on the glitch rate.

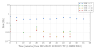

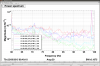

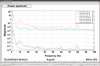

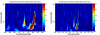

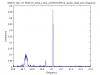

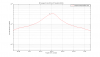

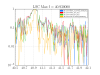

I took a look at an hour's worth of data after the calibration changes were stable and the filter was on (I sadly can't use much more time). I also chose a similar time period from this afternoon where things seemed to be running fine without the filter on. Attached are glitchgrams and trigger rate plots for the two periods. The trigger rate plots show data binned into 5-minute intervals.

When the filter was on we were in active commissioning, so the presence of high SNR triggers is not so surprising. The increased glitch rate around 6 minutes is from Sheila performing some injections. In the trigger rate plots I am mainly looking for an overall change in the rate of low SNR triggers (i.e. the blue dots), which contribute the majority of the background. In the glitchgram plots I am looking for a change of structure.
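
For anyone wanting to repeat the comparison, the 5-minute binning of low SNR triggers can be sketched as below. This is only an illustration: the function name, the SNR band, and the trigger times and SNRs are made-up placeholders, not the pipeline or data behind the attached plots.

```python
import numpy as np

def trigger_rate(times_s, snrs, snr_min, snr_max, duration_s, bin_s=300.0):
    """Rate (Hz) of triggers with snr_min <= SNR < snr_max, in bins of bin_s seconds.

    times_s are trigger times in seconds relative to the start of the stretch.
    """
    times_s = np.asarray(times_s, dtype=float)
    snrs = np.asarray(snrs, dtype=float)
    edges = np.arange(0.0, duration_s + bin_s, bin_s)
    keep = (snrs >= snr_min) & (snrs < snr_max)
    counts, _ = np.histogram(times_s[keep], bins=edges)
    return edges[:-1], counts / bin_s

# Hypothetical triggers for a one-hour stretch (illustrative values only).
times = [10, 200, 310, 400, 3500]
snrs = [6.0, 7.0, 50.0, 6.5, 7.5]

# The "low SNR" band 5.5 <= SNR < 8 is an assumed choice for this example.
bin_starts, rates = trigger_rate(times, snrs, 5.5, 8.0, duration_s=3600.0)
print(rates)
```

Running the same function on the filter-on and filter-off stretches and comparing the two rate arrays is one simple way to look for an overall shift in the low SNR population.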

Based upon the two time periods I have looked at, I would estimate that the filter does not have a large impact on the background; however, I would like more stable time with the filter on to confirm this.