All times local PDT.

0728 IFO Dropped Lock

0755 Richard starts on PRM -- Finished 1044 -- No obvious problem, but chassis changed.

0759 Hugh installs STS2-A at HAM2 -- Finished 0825

0822 Keita to EndX for QPD checks -- Finished 1043 -- No obvious problem.

0833 Phil to EndX for UIM -- Upgraded breaker from 1 A to 3 A. Done 0935

0835 Hugh updates ODC MASTER for Auto Intent_Bit Reset ECR E1500352

0855 Ellie to TCS HWS camera power supply; removed filter and took it to EE Shop. No good; reverted to no-filter configuration. Done 1033

0912 Nutsinee to TCSX for temperature sensor install -- Finished 1158.

0930 Barker completes LSC/ASC/OAF/PEMEX Model updates for SciFrame changes & others (see Kawabi=LSC)

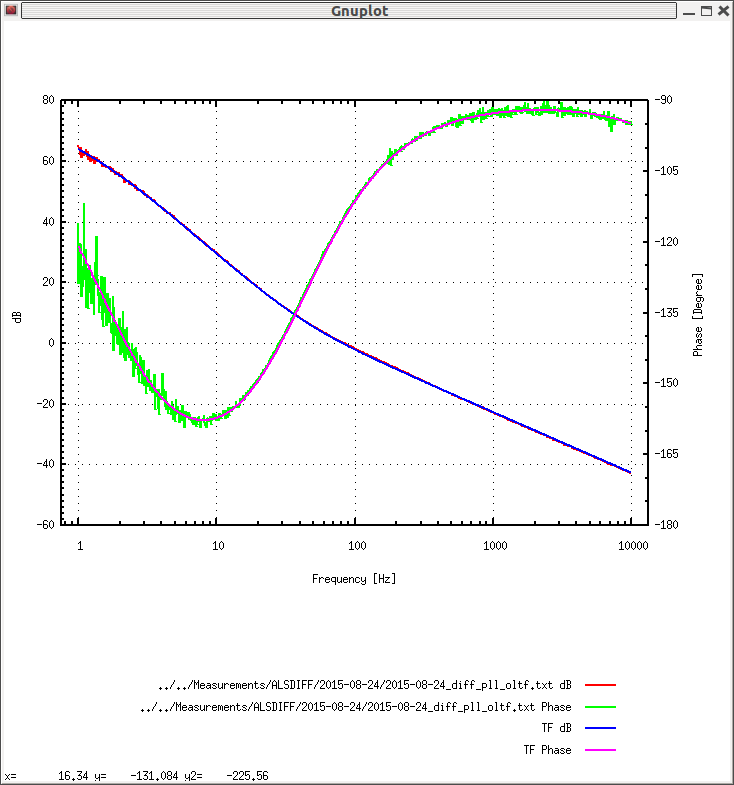

1000 SysDiag Guardian node down for rebuild. Finished 1723.

1031 Kiwamu doing ALS Diff electronic measurements. Done 1245

1100 Leo starts ETM Charge Measurements. Complete 1239.

1105 Model restarts -- H1OAF fails due to EPICS problem. Model restarts halted.

1115 JimB fixes EPICS problem. Model restarts resume.

1124 DAQ Restart

1130 Daniel & Sheila at AS WFS for electronic response & dark noise measurements. Done 1240.

~1100 Phil terminating PEM cables in LVEA and CER. Installing 18-bit DAC card. Done 1244.