Dan, Jim

The IFO was running smoothly in low-noise for almost four hours until a lockloss at 0923 UTC. Immediately prior to the lockloss, Jim and I noticed some motion of the AS beam spot. There were a few bursts of motion, a few minutes apart, and then we broke lock.

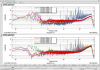

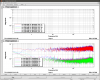

The first image is a 1-hour trend of the ASC-AS_C signals (channels 7 & 8). There are some bursts of noise in ASC-AS_C_PIT, correlated with similar noise in the M3 witness sensors of the SRC optics. SR3_WIT_P (channel 15) is the worst offender. This is the error signal used for the cage servo. The control signal is SR3_M2_TEST_P (channel 10), which has some excursions from the quiescent level at the time of the noise bursts.

So, it looks like something was kicking the SR3 OSEMs, and this got into the cage servo and broke the lock. Since nothing feeds back to SR3, it must have been an internal problem.

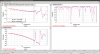

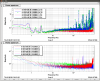

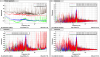

The second plot is a 1-hour trend of the SR3 OSEMs. M1_T2 stands out as a problem, and noise from this sensor would couple to pitch in the lower stage. Channel 16 is the T2 VOLTMON; clearly T2 is having issues. This is the same coil that Evan, Jeff and I had trouble with two weeks ago. Since then, the SR3 coil driver was swapped. The glitches don't have the same shape as the excursions back then.

Jim and I have been watching a dataviewer trend of the T2 voltmon, and there are occasional large excursions that are correlated with the M3_WIT_P channel, so something is really moving. We held the output from the cage servo and saw a few more glitches, so it must be coming in from the top stage damping. We turned off the top stage damping and we *thought* we saw a few more, so it must be coming from the actuation electronics. The problem is very intermittent and we're not totally confident in the diagnosis.
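Because the glitches are intermittent, eyeballing a dataviewer trend is the weak link in this diagnosis. One way to make the T2 voltmon / M3_WIT_P correlation more objective is to flag excursions in each channel and count how many line up in time. The block below is a minimal sketch of that idea, not an existing tool. Several choices are assumptions: the two channels are taken to be already loaded as equal-length, equally sampled lists. An "excursion" is defined here as a sample more than `n_sigma` robust sigmas (scaled median absolute deviation) from the median. The function names and the example numbers are hypothetical and synthetic, not data from this lockloss.

```python
from statistics import median


def excursion_indices(samples, n_sigma=5.0):
    """Return indices where |x - median| exceeds n_sigma robust sigmas.

    Hypothetical helper: the threshold uses the median absolute deviation
    (scaled by 1.4826 to approximate a Gaussian sigma) so that the glitches
    themselves do not inflate the noise estimate.
    """
    center = median(samples)
    mad = median(abs(x - center) for x in samples)
    sigma = 1.4826 * mad if mad > 0 else 1e-12  # guard against a flat channel
    return [i for i, x in enumerate(samples) if abs(x - center) > n_sigma * sigma]


def coincident_excursions(chan_a, chan_b, window=2, n_sigma=5.0):
    """Return indices of excursions in chan_a that have an excursion in
    chan_b within +/- `window` samples (hypothetical coincidence test)."""
    hits_b = set(excursion_indices(chan_b, n_sigma))
    return [
        i
        for i in excursion_indices(chan_a, n_sigma)
        if any(j in hits_b for j in range(i - window, i + window + 1))
    ]


# Synthetic stand-ins for the T2 voltmon and SR3 M3_WIT_P (made-up values).
voltmon = [0.0, 0.1, -0.1, 0.05, 8.0, 0.0, -0.05, 0.1, 0.0, 7.5]
wit_p = [0.0, -0.1, 0.1, 0.0, 0.2, 6.0, 0.05, 0.0, -0.1, 0.0]

print(excursion_indices(voltmon))               # [4, 9]
print(coincident_excursions(voltmon, wit_p))    # [4]
```

In this toy example, the voltmon glitch at index 4 has a witness excursion one sample later, so it counts as coincident. The glitch at index 9 has no partner and does not count. The fraction of voltmon glitches with a witness partner gives a rough measure of how strongly the two channels are linked. The `window` size should be matched to the actual sample rate and any expected mechanical delay.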

Btw the T1 voltmon is still busted.