This is a follow-up analysis of Robert's anthropogenic noise injections on August 12th. So far I don't see any convincing evidence that these activities actually coupled into DARM. There were a couple of injections ("human in optics lab" and "jumping in the change room") that seemed to coincide with DARM noise around 20-100 Hz, but it was unclear whether those injections were actually causing the noise. The noise *blobs* started prior to the injections, and the PEM seismic sensor only coincided with one of them. Plus, I would expect to see coupling at much lower frequency if a human jumping up and down in the change room actually injects noise into DARM.

Note that the first injection time (15:05) is still unconfirmed. The time in the original table was wrong.

Adding SUS and SEI tags so I can locate this entry. Seems like good news for the isolation crowd. (I note that just because a coupling only happens > 10 Hz does not mean SUS and SEI are off the hook. Robert and Anamaria have shown that loud drive at > 100 Hz can couple into HAM6 optics. So I would say this knocks SEISUS off the top of the list, but not off the list completely.)

Most injections lasted about 1 s, so the time series used for each tile should be about that long. These look like averages of time stretches almost an order of magnitude longer. I'll bet the signals will be more obvious if you zoom in on the individual injections in time instead of trying to see all the 1 s events in a single 30-minute spectrogram.
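To illustrate the tile-length point, here is a minimal sketch on synthetic data, not on the actual DARM or PEM channels. It assumes white noise plus a 1 s, 50 Hz burst, and compares Hann-windowed spectrograms with 1 s and 10 s FFT segments. The function name `burst_contrast`, the sample rate, and the amplitudes are all made up for the illustration.

```python
import numpy as np
from scipy.signal import spectrogram


def burst_contrast(segment_seconds, fs=256, duration=60.0, burst_start=30.0,
                   burst_len=1.0, burst_freq=50.0, amplitude=3.0, seed=0):
    """Peak-to-noise-floor ratio of a short burst in a spectrogram.

    All defaults are hypothetical values chosen for the illustration.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(int(duration * fs)) / fs
    x = rng.normal(size=t.size)  # unit-variance white noise background

    # Add a sinusoidal burst lasting burst_len seconds (stand-in for an injection).
    in_burst = (t >= burst_start) & (t < burst_start + burst_len)
    x[in_burst] += amplitude * np.sin(2 * np.pi * burst_freq * t[in_burst])

    # Spectrogram with the requested tile length and 50% overlap.
    nperseg = int(segment_seconds * fs)
    f, _, sxx = spectrogram(x, fs=fs, window="hann",
                            nperseg=nperseg, noverlap=nperseg // 2)

    # Loudest tile in the frequency row nearest the burst frequency,
    # relative to the median over all tiles (a robust noise-floor estimate).
    row = sxx[np.argmin(np.abs(f - burst_freq))]
    return row.max() / np.median(sxx)


if __name__ == "__main__":
    for seconds in (1.0, 10.0):
        print(f"{seconds:4.0f} s tiles: contrast = {burst_contrast(seconds):.0f}")
```

In this toy case the burst stands out more above the noise floor with 1 s tiles than with 10 s tiles, because the longer segment averages the 1 s event together with 9 s of background. How large the effect is on the real channels depends on the actual injection and noise spectra.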

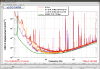

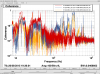

I can see the injected signal clearly after I zoomed in. Below are the spectrograms for the truck horn injection. The signal can't be seen in the PEM-CS_MIC_LVEA_VERTEX channel. I'm working on the rest.