The ASC control of the SRC hasn't been making sense, so I did some calculations to see whether the SRC might head toward an unstable condition if we run the IFO at high power (24W). We were interested in whether thermal lensing in the ITMs could cause a large enough Gouy phase shift to cause us trouble.

At 24W there is 120kW of power in the arms, and the surface absorption of the ITMs is 0.5ppm (galaxy). The HR surface curvature of the ITMs changes by 1.06 udiopter/mW of absorbed power (LLO alog 14634). This means we could expect a change in ITM curvature of 120e3*0.5e-6*1.06e3 ≈ 64 udiopters, or a change in the radius of curvature from 2000m to roughly 1750m as we go from the cold state to 24W input power. I haven't considered substrate absorption; G060155 (slide 16) indicates that the change in index of refraction due to bulk heating is roughly 10% of the surface absorption effect.
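A minimal Python sketch of the arithmetic above, using only the numbers quoted in this entry. It assumes the thermally induced curvature simply adds to the curvature of a nominal 2000m cold-state ROC, and it ignores substrate absorption, as the text does. The function name `hot_itm_roc` is hypothetical.

```python
# Rough estimate of ITM thermal lensing at 24W input, using the numbers in
# this entry. Assumption: the induced curvature adds directly to the
# curvature of a nominal 2000 m cold-state ROC (substrate absorption ignored).

ARM_POWER_W = 120e3          # circulating arm power at 24 W input
SURFACE_ABSORPTION = 0.5e-6  # 0.5 ppm HR surface absorption
CURVATURE_PER_MW = 1.06e-6   # 1.06 udiopter (1/m) per mW absorbed
COLD_ROC_M = 2000.0          # nominal cold-state ITM ROC


def hot_itm_roc(arm_power_w, absorption, curvature_per_mw, cold_roc_m):
    """Return (absorbed power in mW, curvature change in 1/m, hot ROC in m)."""
    absorbed_mw = arm_power_w * absorption * 1e3   # W -> mW
    delta_curvature = absorbed_mw * curvature_per_mw
    hot_roc = 1.0 / (1.0 / cold_roc_m + delta_curvature)
    return absorbed_mw, delta_curvature, hot_roc


absorbed_mw, delta_curvature, roc = hot_itm_roc(
    ARM_POWER_W, SURFACE_ABSORPTION, CURVATURE_PER_MW, COLD_ROC_M
)
print(f"absorbed power: {absorbed_mw:.0f} mW")
print(f"curvature change: {delta_curvature * 1e6:.1f} udiopter")
print(f"hot ROC: {roc:.0f} m")
```

With these inputs it gives 60mW absorbed and a curvature change of about 64 udiopters, which puts the hot ROC at about 1770m, in line with the rough estimate.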

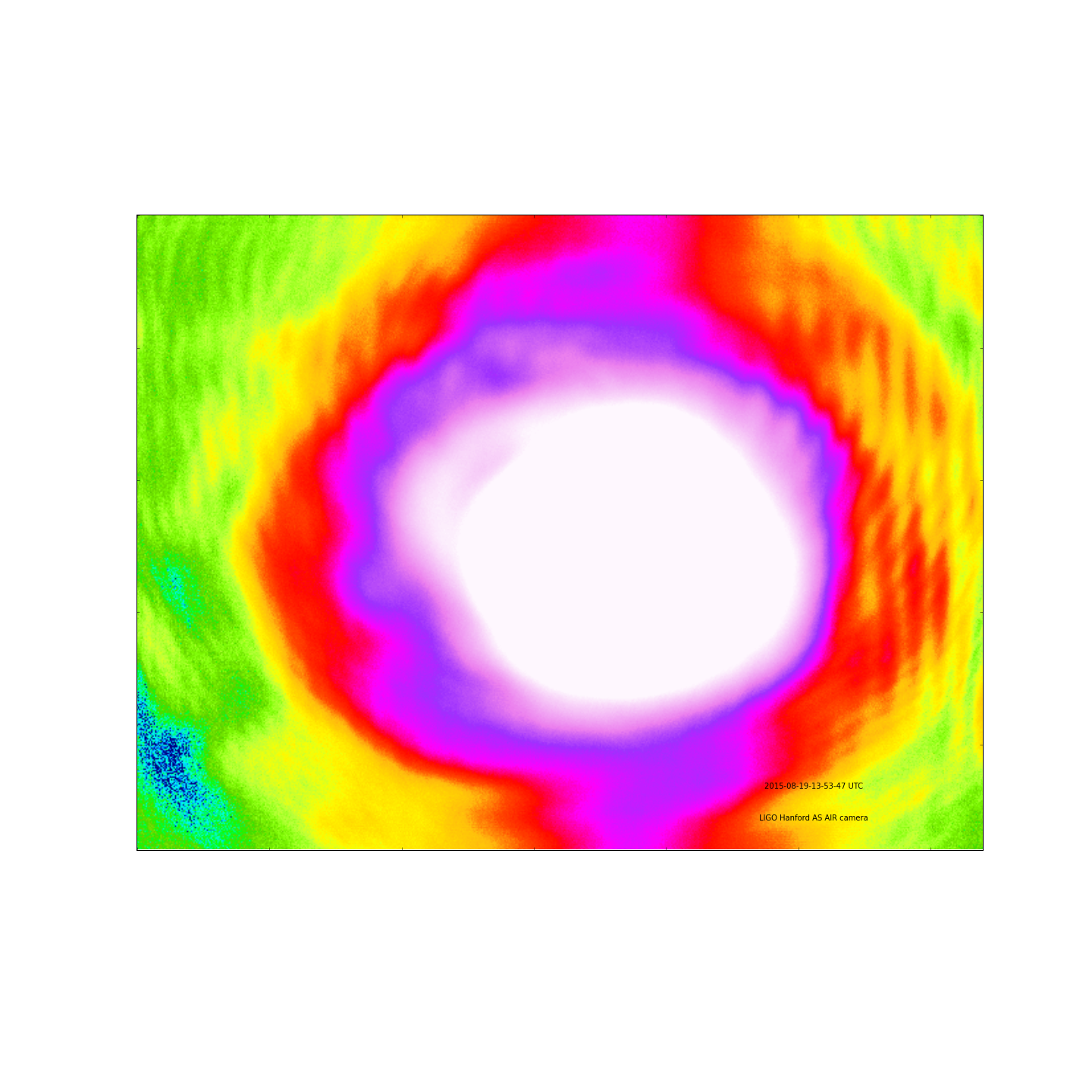

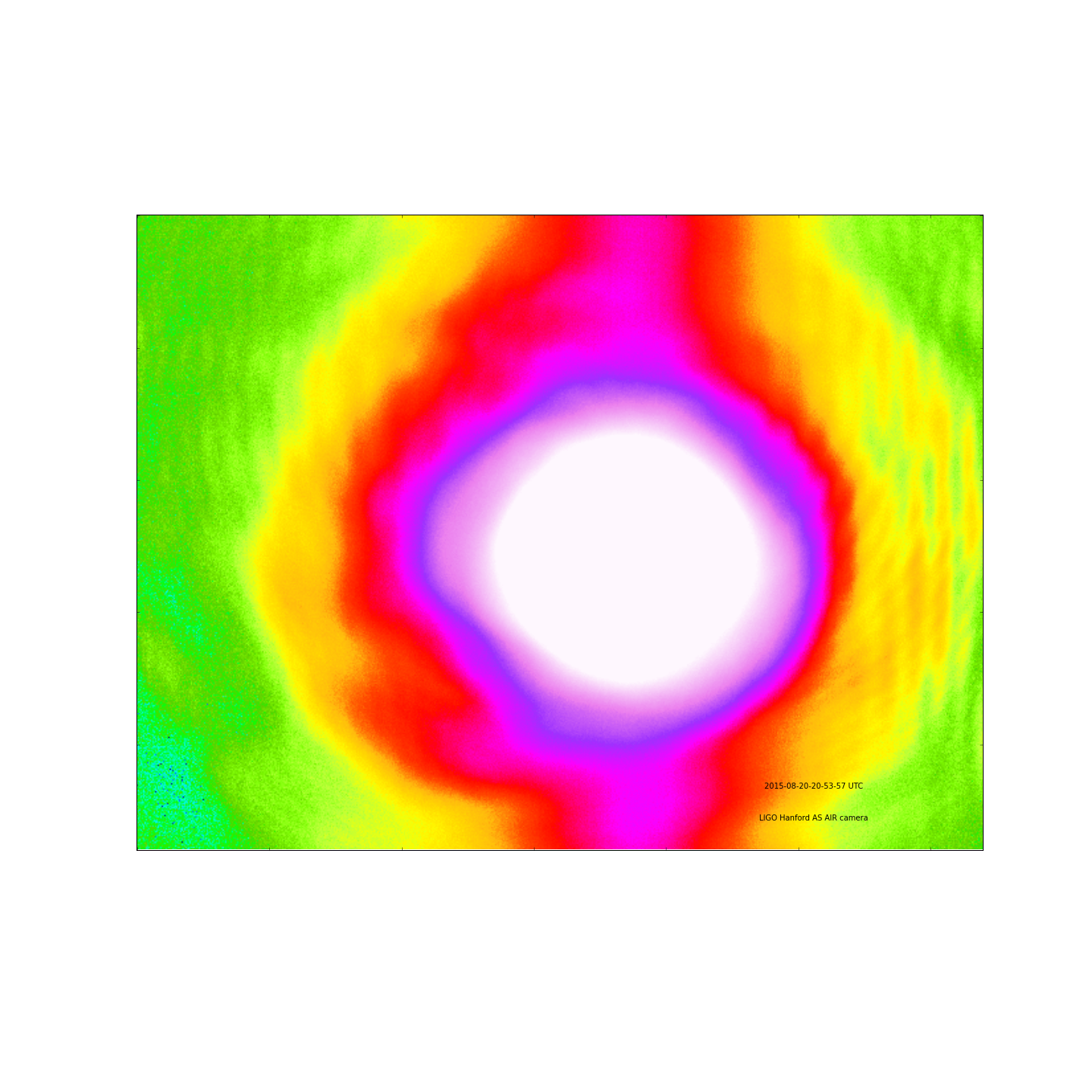

When I put this hot-state ITM ROC into an aLaMode model of the interferometer (based on Lisa's model T1300960 with Dan's additions, alog 18915), the round-trip Gouy phase decreases from 32 to 22 degrees. Calculating the stability parameter for the SRC from the round-trip transfer matrix shows that the cavity goes unstable: a cavity is stable when -1 < (A+D)/2 < 1, and this parameter changes from 0.8 to 1.3.
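For reference, here is a generic sketch of the stability test using plain 2x2 ABCD matrices in Python. It is not the aLaMode model: the plano-concave cavity, its 10m length and ±50m mirror radius are hypothetical toy values chosen only to show the calculation, and all helper names are illustrative. The sign convention assumes a positive ROC is focusing (concave). For a stable cavity the round-trip Gouy phase ψ satisfies cos ψ = (A+D)/2, so it is only defined inside the stability range.

```python
import math


def matmul(a, b):
    """Multiply two 2x2 matrices given as nested tuples."""
    return (
        (a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]),
        (a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]),
    )


def propagation(length_m):
    """ABCD matrix for free-space propagation over length_m."""
    return ((1.0, length_m), (0.0, 1.0))


def mirror(roc_m):
    """ABCD matrix for reflection from a mirror of radius roc_m (positive = concave)."""
    return ((1.0, 0.0), (-2.0 / roc_m, 1.0))


def stability_parameter(round_trip):
    """Half the trace of the round-trip ABCD matrix, (A + D) / 2."""
    return 0.5 * (round_trip[0][0] + round_trip[1][1])


def is_stable(m):
    """A cavity is stable when -1 < (A + D) / 2 < 1."""
    return -1.0 < m < 1.0


def round_trip_gouy_deg(m):
    """Round-trip Gouy phase (deg) from cos(psi) = (A + D) / 2; stable cavities only."""
    if not is_stable(m):
        raise ValueError("cavity is unstable; round-trip Gouy phase undefined")
    return math.degrees(math.acos(m))


def plano_concave_round_trip(length_m, roc_m):
    """Start at the flat mirror: propagate, reflect off the curved mirror, propagate back.

    The flat mirror is the identity matrix, so it is omitted.
    """
    return matmul(propagation(length_m), matmul(mirror(roc_m), propagation(length_m)))


# Hypothetical toy cavity (NOT the aLIGO SRC): 10 m long, 50 m ROC end mirror.
m_concave = stability_parameter(plano_concave_round_trip(10.0, 50.0))
print(m_concave, is_stable(m_concave), round_trip_gouy_deg(m_concave))

# Making the end mirror convex pushes (A + D) / 2 above 1, i.e. unstable.
m_convex = stability_parameter(plano_concave_round_trip(10.0, -50.0))
print(m_convex, is_stable(m_convex))
```

The concave case gives (A+D)/2 = 0.6, which is stable with about 53 degrees of round-trip Gouy phase, while the convex case gives 1.4, outside the stability range. A parameter above 1, like the 1.3 found for the hot SRC, signals an unstable cavity in the same way.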

These numbers are just estimates, but the takeaway message is that as we increase the power, the SRC becomes less stable and could be pushed into an unstable regime at roughly the power levels we are reaching now. Turning on the ITM ring heaters would make the SRC more stable (see comment). A ring heater on SR3 would also make the SRC more stable.

An additional note on transverse mode spacing: the SRC mode spacing is 240kHz in the cold state and 180kHz in the hot state. The cavity finesse is 13.5 and the free spectral range is 2.67MHz, so the full width at half maximum is 2.67MHz/13.5 ≈ 200kHz. In the hot state the mode spacing is smaller than the linewidth, so heating of the ITMs also pushes the SRC closer to degeneracy.
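A short Python sketch of the linewidth comparison, using the finesse, free spectral range, and mode spacings quoted above. The helper name `fwhm_hz` is hypothetical.

```python
# Compare the SRC transverse mode spacing with the cavity linewidth,
# using the numbers quoted in this entry.

FSR_HZ = 2.67e6   # SRC free spectral range
FINESSE = 13.5    # SRC finesse
MODE_SPACING_HZ = {"cold": 240e3, "hot": 180e3}


def fwhm_hz(fsr_hz, finesse):
    """Cavity linewidth (full width at half maximum) = FSR / finesse."""
    return fsr_hz / finesse


linewidth = fwhm_hz(FSR_HZ, FINESSE)
print(f"FWHM: {linewidth / 1e3:.0f} kHz")
for state, spacing in MODE_SPACING_HZ.items():
    # A ratio below 1 means the first-order mode resonance is separated from
    # the fundamental by less than one linewidth.
    ratio = spacing / linewidth
    print(f"{state}: spacing {spacing / 1e3:.0f} kHz = {ratio:.2f} linewidths")
```

The cold-state spacing comes out at roughly 1.2 linewidths and the hot-state spacing at roughly 0.9, which is the sense in which the hot SRC is closer to degeneracy.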