Kyle, Gerardo Removed 1st generation ESD pressure gauges from BSC9 and BSC10 and installed 4.5" blanks in their place -> Installed 1.5" metal angle valve and 2nd generation ESD pressure gauges on the domes of BSC5 and BSC6 -> Started rough pumping of X-end and Y-end (NOTE. dew point of "blow down" air measured < -3.5 C and < -5.5 C for X-end and Y-end respectively. This is much wetter than normal. I had found the purge air valve closed at the X-end today. It was probably closed to aid door installation yesterday but not re-opened(?). Purge-air can be throttled back in some cases but should never be closed off. Y-end explained in earlier log entry) Kyle Finished assembly of aLIGO RGA and coupled to 2.5" metal angle valve at new location on BSC5 -> Valved-out pumping for tonight -> will resume tomorrow and expect to be on the turbos before I leave tomorrow.

During ER7 we had 36 locklosses in the final stages of CARM offset reduction. Assuming that recovering from each of these locklosses takes about half an hour (or more if we decide to redo initial alignment because of them), they were responsible for at least 18 hours of down time during ER7, or about 8% of the run. Indirectly they probably caused even more down time than that.

32 out of 36 locklosses are the same mechanism

The first thing I noticed is that the majority of locklosses from the states REFL_TRANS, RESONANCE, and ANALOG_CARM are all actually occurring during the state REFL_TRANS, where we transition from the sqrt(TRX+TRY) signal to the REFLAIRI/sqrt(TRY) signal. They are not recognized by the guardian because we had some long sleeps in the guardian during these steps. This is bad because we risk ringing up suspension modes when we lose lock but the guardian doesn't recognize it. I've removed the sleeps from REFL_TRANS and RESONANCE and replaced them with timers.
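The sleep-versus-timer change can be illustrated with a small self-contained sketch. This is not the Guardian API or the actual ISC_LOCK code: the `Timer` class, the `ReflTransSketch` class, and the `is_locked` callable are hypothetical names used only to show why a non-blocking timer lets the lock check run on every cycle, whereas a blocking sleep hides a lockloss until the sleep ends.

```python
import time


class Timer:
    """Hypothetical countdown timer (a stand-in, not the Guardian timer)."""

    def __init__(self, seconds, clock=time.monotonic):
        self._clock = clock
        self._deadline = clock() + seconds

    def expired(self):
        return self._clock() >= self._deadline


class ReflTransSketch:
    """Toy state: check for lockloss on every cycle while a timer runs."""

    def __init__(self, is_locked, wait_seconds, clock=time.monotonic):
        self.is_locked = is_locked        # callable, True while locked
        self.wait_seconds = wait_seconds
        self.clock = clock
        self.timer = None

    def main(self):
        # Start the wait here instead of calling time.sleep(wait_seconds).
        self.timer = Timer(self.wait_seconds, self.clock)

    def run(self):
        # Called once per cycle, so a lockloss is noticed right away.
        if not self.is_locked():
            return "LOCKLOSS"
        if not self.timer.expired():
            return False   # still waiting; check again next cycle
        return True        # wait finished; the state is complete
```

With a blocking sleep, the lock check would only run after the full wait, which is the situation described above.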

33 of these locklosses all happen within a second or so of the end of the ramp from TR CARM to REFL 9/sqrt(TRY), although sometimes before and sometimes after. The remaining 4 happen later; I have not investigated them.

Alignment is a factor

In this plot the build-ups during transitions that result in lockloss are in red, successful locks are in blue. I took means of the build-ups in the first 10 seconds of this data (before any of the locklosses happened), and made histograms of successful vs unsuccessful attempts at transitioning. Here is an example for AS 90:

We do not succeed when POP18 is below 124 uW and AS90 is below 1275 counts; 12 of the locklosses fit into this category. A completely different set of 10 locklosses occurred when REFL DC was above 6.5 mW at the beginning of the attempt.
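As a sketch, the thresholds quoted above could be turned into a pre-transition check. The function name and the idea of running such a check are assumptions for illustration only. The first condition is read as requiring both POP18 and AS90 to be low, as the sentence is worded. The inputs are taken to be means over the 10 seconds before the transition.

```python
# Thresholds quoted in the entry above.
POP18_MIN_UW = 124.0       # uW
AS90_MIN_COUNTS = 1275.0   # counts
REFL_DC_MAX_MW = 6.5       # mW


def transition_risk(pop18_uw, as90_counts, refl_dc_mw):
    """Return the reasons (possibly none) an attempt matches a failing set."""
    reasons = []
    if pop18_uw < POP18_MIN_UW and as90_counts < AS90_MIN_COUNTS:
        reasons.append("low POP18 and AS90 build-up")
    if refl_dc_mw > REFL_DC_MAX_MW:
        reasons.append("high REFL DC")
    return reasons


# Hypothetical pre-transition means, chosen only to exercise the check.
print(transition_risk(pop18_uw=120.0, as90_counts=1200.0, refl_dc_mw=6.0))
```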

Bounce and Roll modes are not a factor

This plot shows that there is no apparent relationship between how rung up the bounce and roll modes are and locklosses, which had been one of our theories for why we were failing in this transition.

Radiation Pressure causes Pitch motion at quad resonance

What is happening during this transition is that radiation pressure moves the test masses in pitch. If our beams are not well centered on the test masses, the light building up in the arms produces a radiation pressure torque on the masses. Since we are not yet on resonance in the arms, the radiation pressure can vary linearly with cavity length, and if we are not well aligned it can vary linearly with alignment as well. While we lock CARM on the sqrt(TRX+TRY) signal, the power in the arms is kept constant, so we have no instability. As soon as we transition to REFL 9 the power in the arms can fluctuate more, causing the optics to swing at the main pitch resonances of the quads.

This is not obvious if you are looking at locklosses, since it mostly appears that the pitch of the optics is a result of the lockloss. Attached is a plot of 35 successful transitions, showing sqrt(TRX+TRY) and the pitch of the three test masses that had functional optical levers during ER7. All of these signals are rescaled and the mean of the oplev signals from the first 10 seconds is removed. From about 10 to 15 seconds the input matrix is ramping, and the CARM offset is ramped down starting around 15 to 16 seconds. You can see that the oplev motion is well correlated with fluctuations in TR CARM, and that the oplevs move up in pitch as the CARM offset is reduced to zero.

In April we started using oplev damping loops to avoid this type of lockloss. They were in use during ER7, but this isn't enough to prevent the locklosses reliably. Right now I am inclined to think this is the main problem with this transition.

A few ideas for improving things:

I used Sheila's matlab script to plot PR3 Pitch as a function of IFO power.

The plots are from 12 days of data ending June 14th at 17:45UTC. This includes locks at 17W and 24W.

Locked = 'in lock, high power'

Data are locks with input power between 17W and 18W, or locks at 24W or more.

Unlocked = 'low power, locked or unlocked'

Data are from input power less than 3W.

PR3 Pitch position is unchanged locked vs unlocked at 3W.

I removed other data points as transitional power states.

First plot: PR3_PIT_IFO17W.png

Locked pitch position is distributed around -2.8urad.

Unlocked pitch position is distributed around -1.4urad.

Difference = -1.4urad

Second plot: PR3_PIT_IFO24W.png

Locked pitch position is distributed around -3.2urad.

Unlocked pitch position is distributed around -1.4urad.

Difference = -1.8urad

Summary:

| Optic | IFO power | Pitch position |
| --- | --- | --- |
| PR3 pitch | 3W | -1.4urad |
| PR3 pitch | 17W | -2.8urad |
| PR3 pitch | 24W | -3.2urad |

The PR3 Pitch position in lock changes with the IFO power.
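The differences quoted for the two plots follow directly from the summary values. A short check, using only the numbers in this entry (the dictionary and function names are just for illustration):

```python
# Centre of the PR3 pitch distribution (urad) at each input power (W),
# taken from the summary in this entry.
pr3_pitch_urad = {3: -1.4, 17: -2.8, 24: -3.2}


def shift_from_low_power(power_w, baseline_w=3):
    """Pitch change relative to the low-power (3W) position, in urad."""
    return pr3_pitch_urad[power_w] - pr3_pitch_urad[baseline_w]


for power in (17, 24):
    print(power, "W:", round(shift_from_low_power(power), 1), "urad")
```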

Nutsinee & Hugh

The Counter weights on top of the WHAM6 ISI Optical Table expected to interfere with Shroud work have been removed. They are staged under the Support Tubes, on Stage0, and in the Spool at the Septum--Do be mindful of them and apologies for not spending more effort to get them out of the way.

The Five Cables attached to the OMC have been disconnected. The Cables are marked with foil, 1 2 & 3 strips and 1 & 2 strips bottom to top for the 3 on the -X side and for the 2 on the +X side of the OMC. They are coiled up, not that neatly, with the three together on the +Y side and the two together on the -Y side. Note that one of the 3 attached on the -X side comes from the -Y side of the table, the other two attached to the -X side of the OMC come from the +Y side of the table. The two cables attached on the +X side of the OMC come from the -Y side of the table. See first four attachments.

The M8 Mirror between the OMC and OM3 was removed, and bagged. I scrounged a narrow dog clamp from the OM3, which was only finger tight anyway, to mark the +X side of the M8 fork. I then held the M8 fork against this clamp and removed the one dog clamp holding M8 to the table and used it to mark the -Y side of the fork. See the last two attachments for 1) before any clamps were moved and 2) after the 'marks' are in place. Other than which side of M8 faces the OMC, I think this should serve pretty well. I'm sure it is deterministic which side of the M8 faces M9/OMC. There is a front and back side of the mount in which M8 is mounted.

Otherwise, the ISI is locked on A B & C Lockers and Robert is still making measurements.

Whilst performing the weekly DBB scans, Jason noticed that there was something amiss with the ISS. Namely that the output of the ISS photodiodes was 0 and it had been that way since just after we performed the Tuesday maintenance routines. It was noticeable that on the ISS MEDM screen the reported DC values for photodiodes A and B were significantly less than the usual 10V. Oddly enough all signs indicated that the loop was locked.

The output of both photodiodes was checked at the ISS box. They both indicated over 10V output as they should. We measured the same output at the end of the cables going to the ISS servo card. We injected a DC voltage into the cable going to the AA filter chassis and the MEDM screen reported no real change.

Fil noticed that one of the power LEDs of the AA/AI boards was dim, so he power cycled the chassis. Afterwards all the readings were as they should be. The chassis was pulled and frequency response and noise tests were performed on the board that the photodiode signals are connected to. The measurements passed the test criteria. The chassis was re-installed.

The strange thing is that the system still thought the ISS was locked. The only difference on the display is that the maximum and minimum diffraction percentage was quite separated from the mean value. There was no sign of an oscillation. Both the PMC and ISS were happy throughout.

Fil, Peter, Jason

The projector0 computer ran out of memory, so it was rebooted. The Seismic FOM has been restarted.

The guardian log files are being backed up every hour to /ligo/backups/guardian This directory is available to all CDS workstations, so there is no need to log into the h1guardian0 machine to view the full set of logs. Remember that recent logs can be viewed by running the guardlog command

Actually *all* logs can be viewed with the guardlog command, but there's a bit less control over what you get, since it just cats out everything from the specified log.

I'm working on a more feature-full interface to the logs.

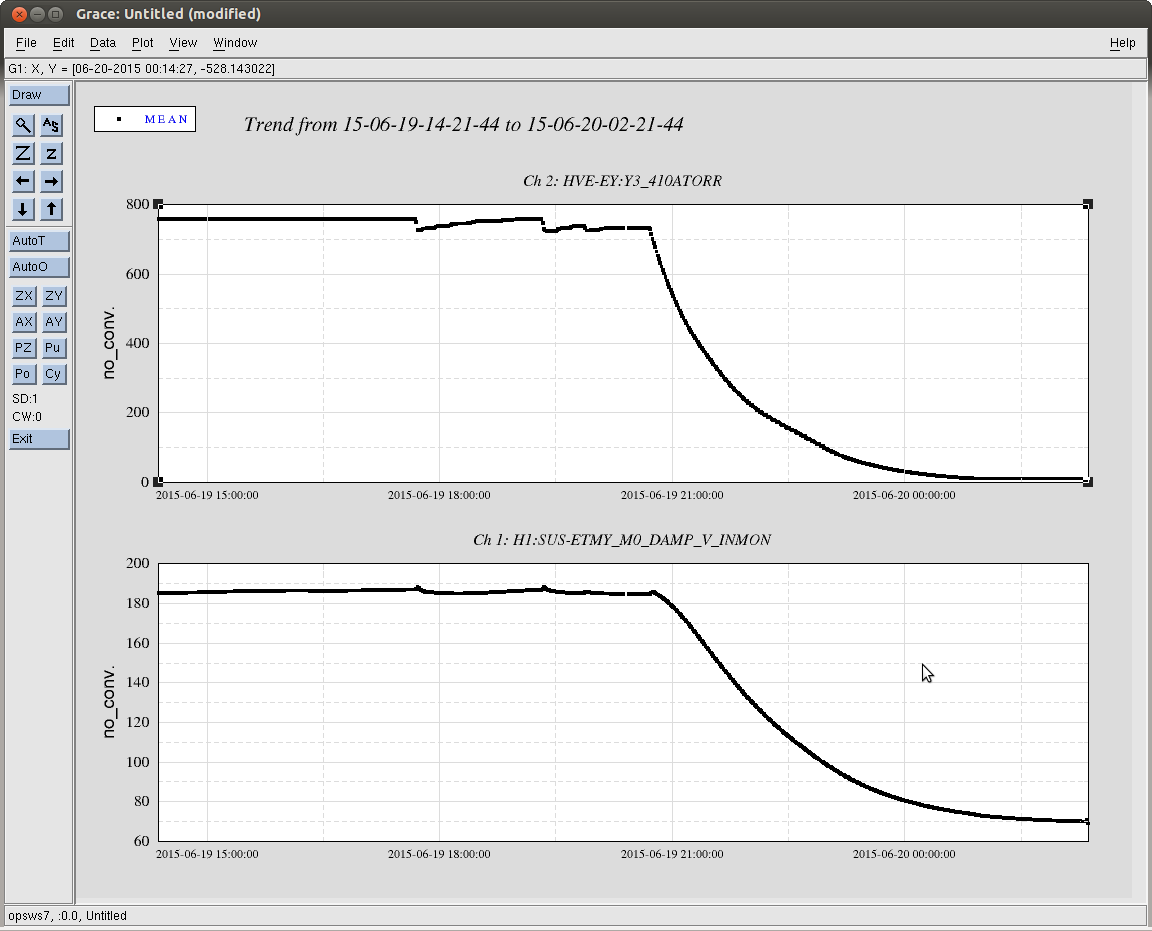

Sheila showed me that PR3 alignment in Pitch is different for in vs out of lock, and it's about 1.5urad.

I've been looking at other optics, and today found that PR2 Pitch also has two distinct alignments of in vs out of lock.

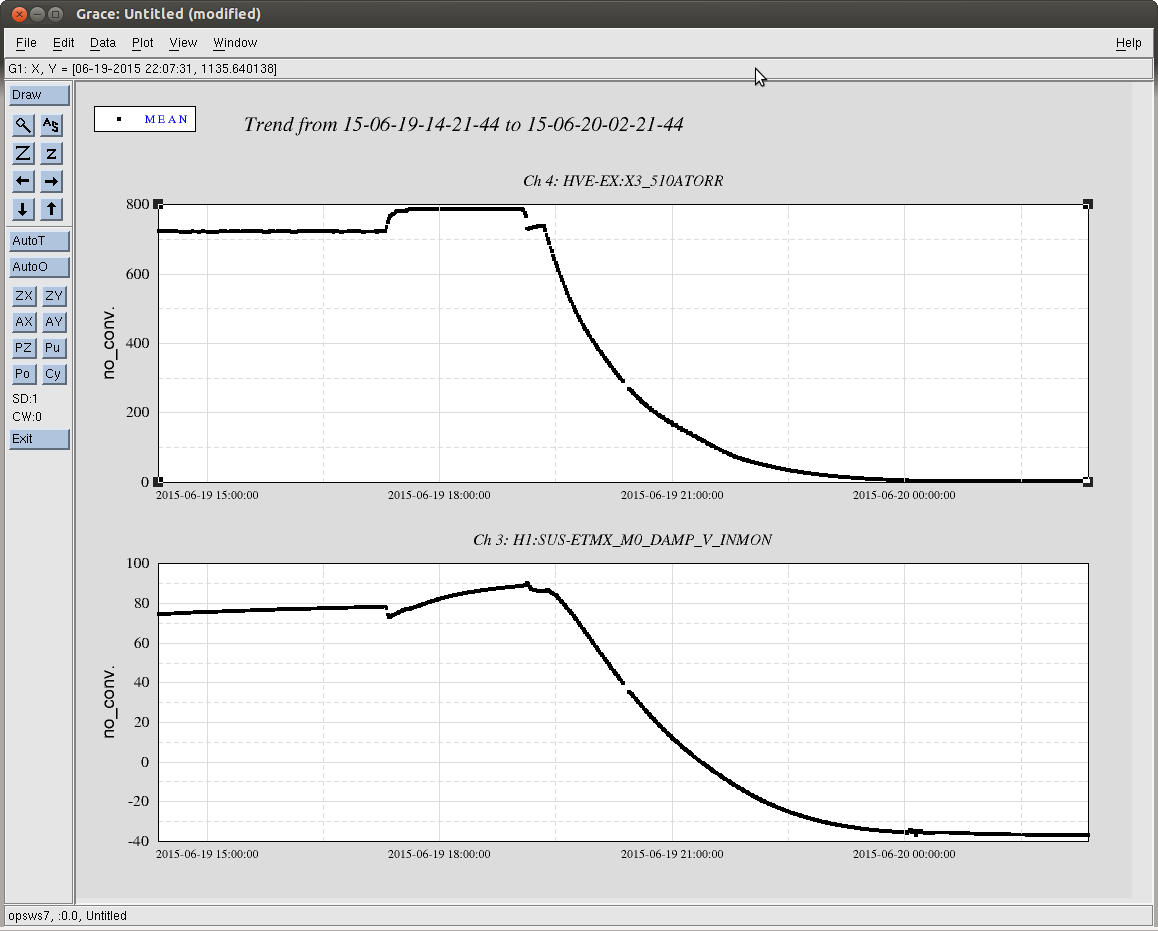

Attached is a trend of PR2 during ER7, pitch on the bottom plot, that shows the two states.

The trend of PR3 pitch is essentially PR2 pitch inverted; however, due to technical difficulties, the plot is forthcoming.

Kyle, Gerardo Finished replacing vibration cushions on Y-end and X-end Turbos -> Removed iLIGO RGA from BSC9 and replaced with 10" blank -> Finished torqueing flanges added yesterday -> Installed zero-length reducer and valve for aLIGO RGA -> Began pumping BSC10 and BSC9 annulus volumes Bubba Nearly completed 1" TMDS run at Y-end (still need one support) Danny S., Gary T. Hung door on BSC9 END STATION STATUS -> Friday we will remove the 1st generation ESD pressure gauges from BSC9 and 10 and install 4.5" blanks in their place. Next, we will install isolation valves and new style wide-range Inficon gauges on the domes of BSC 5 and 6 while leaving iLIGO gauges pairs as they are. Then we will begin pumping the Y-end and X-end. We will capture the pump down data using the existing gauge pairs while Richard M. arranges for the cabling of the new gauges into CDS.

During ER7 there was some anecdotal evidence that the DARM gain was changing by a few percent between the handoff to DC readout and our high-power, low-noise state. One place this could happen is in the power-up step, where we changed the DARM offset to maintain a roughly constant light level on the OMC DCPDs. As the DARM offset changes the gain in the DCPD_Sum --> DARM path is scaled following a quadratic curve, but we know our approximation for the response doesn't include small static offsets, and maybe this has an effect on our gain scaling. In this post I try to quantify this error - turns out it should be on the order of a few percent.

Stefan and I didn't explain this very well after we commissioned the OMC-READOUT path, so I wanted to document how the variables in this path are set by the OMC Guardian. The carrier power at the AS port is assumed to follow a quadratic dependence on the DARM offset (see T0900023 for more math):

P_AS = (P0 / Pref) * (x / xf)**2 [Eq 1]

...where P0 is the input power, Pref is a power normalization factor, x is the current DARM offset*, and xf is an arbitrary constant we call the "fringe offset".

Step 1. When the OMC is locked on the carrier, Pref is set such that when the DARM offset is equal to the "fringe offset", x = xf, the output of the power normalization step in the OMC-READOUT calculation will be equal to P0:

Pref = (P0 / P_AS) * (x / xf)**2 [Eq 2]

Step 2. Small variations in DARM (dx) will generate small variations in the carrier power (dP) on the DCPDs, like so:

dP = (P0/ Pref) * (2*x / xf**2) * dx [Eq 3]

The OMC-READOUT path calculates dx and sends the following to the LSC input matrix:

G * dx(x,dP) --> DARM_IN1 [Eq 4]

...where dx is an explicit function of dP and the DARM offset, x. The overall gain factor G converts dx into counts at the DARM error point. G is calculated by taking the transfer function between dx and DARM_IN1. It's set before the handoff to DC readout, and not changed. After this point, the response of the light on the DCPDs to small length fluctuations (the slope, dP/dx) is assumed to be a linear function of the DARM offset, following Eq 3.
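Eqs 1 to 3 can be written out as a short sketch to make the dependence on the DARM offset explicit. This is not the OMC Guardian or front-end code. The function names are made up for illustration, and the numerical values in the example are arbitrary placeholders rather than measured quantities.

```python
def carrier_power(p0, pref, x, xf):
    """Eq 1: carrier power at the AS port for DARM offset x."""
    return (p0 / pref) * (x / xf) ** 2


def power_reference(p0, p_as, x, xf):
    """Eq 2: Pref computed from the measured AS power at offset x."""
    return (p0 / p_as) * (x / xf) ** 2


def slope(p0, pref, x, xf):
    """Eq 3: dP/dx, linear in the DARM offset x."""
    return (p0 / pref) * (2.0 * x / xf ** 2)


def dx_from_dp(dp, p0, pref, x, xf):
    """Invert Eq 3: length fluctuation implied by a power fluctuation."""
    return dp / slope(p0, pref, x, xf)


# Arbitrary placeholder numbers, for illustration only.
p0, xf, x, p_as = 2.3, 10.0, 42.0, 0.02
pref = power_reference(p0, p_as, x, xf)
print(carrier_power(p0, pref, x, xf))      # recovers p_as
print(dx_from_dp(1e-6, p0, pref, x, xf))   # dx for a small dP
```

Because the slope in Eq 3 is proportional to x, halving the DARM offset halves dP/dx, so the scaling of dx(x, dP) has to follow the offset to hold the DARM gain constant.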

When Stefan and I set up the OMC-READOUT path, Keita pointed out that a static DARM offset was making our lives more complicated. Before ER7 I swept the DARM offset and measured the photocurrent on the DCPDs - the quadratic dependence has a clear offset from zero, see Figure 1. The residuals for the simple quadratic fit (blue) have a linear dependence on the offset, while the residuals for the fit that includes a static offset (red) are well-centered around zero. The best-fit value for x0 is -0.25 picometers, +/- 0.01 pm. We're not sure where this comes from, whether it's changing, etc (on May 15 Stefan calculated 0.6pm).

If there's a static offset, x0, our model of the carrier power as a simple quadratic is incorrect, and Eq 1 needs to be rewritten as:

P_AS = (P0 / Pref) * (x + x0)**2 / xf**2 [Eq 5]

During the power-up step, we change the DARM offset to maintain ~20mA of photocurrent in DCPD_SUM, and we adjust the slope of dx(x,dP) accordingly, see Figure 2. If we're using Eq 1 instead of Eq 5, this gain-scaling is incorrect, and our DARM gain will change. For a negative static offset like we observe, the gain will be lower than it should be.

But, since the offset is very small, this effect is only 1-2%. Without going into exhaustive detail, the ratio of the slopes for a simple quadratic, Ax^2, and a quadratic that is offset from zero, A(x+x0)^2, is close enough to 1 for small values of x0 that I don't think we need to worry about it, see Figure 3. The error in the gain as we move from a DARM offset of ~42pm at 2.3W input power to ~16pm at 24W is 1% for x0=-0.25pm. For x0=-0.75pm the effect is 3%.
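The quoted percentages can be reproduced with a short calculation. This is one way to arrive at them and is not necessarily how Figure 3 was made. The slope of A(x+x0)^2 divided by the slope of Ax^2 is (x+x0)/x. The gain error over the power-up is taken here as the change in that ratio between the starting and ending DARM offsets.

```python
def slope_ratio(x_pm, x0_pm):
    """Slope of A*(x + x0)**2 divided by slope of A*x**2, at offset x."""
    return (x_pm + x0_pm) / x_pm


def gain_error(x_start_pm, x_end_pm, x0_pm):
    """Fractional change in the slope ratio between two DARM offsets."""
    return slope_ratio(x_end_pm, x0_pm) / slope_ratio(x_start_pm, x0_pm) - 1.0


# Offsets from the text: ~42pm at 2.3W input power, ~16pm at 24W.
for x0 in (-0.25, -0.75):
    print(x0, "pm:", round(100 * gain_error(42.0, 16.0, x0), 1), "%")
```

The negative sign of the result matches the statement that a negative static offset makes the gain lower than it should be.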

Summary: We have a small static DARM offset, this means our approximation of the DARM gain as a function of DARM offset is a little wrong. The effect should be small. Eventually we will account for the DARM optical gain in the calibration (the 'gamma' parameter) and small changes in the DARM loop gain will be absorbed into the calculation of CAL-DELTAL_EXTERNAL.

Edit: On further reflection, this isn't the case. We have a small static offset while locked on RF DARM, but after the handoff to DC readout this offset is absorbed into the power normalization (the Pref variable, Eq 2). Once DARM is locked on DC, a zero offset will result in zero carrier light at the dark port, by definition. A small offset might change the gain scaling at the handoff such that we observe a few percent difference from what we expect, but the mechanism is not what I describe here. Will have to think about it more.

* In the OMC-READOUT path, the DARM offset is referred to as OMC-READOUT_X0_OFFSET. Here I call it 'x', and refer to the static offset as 'x0'. Sorry.

Today we finished up the ETMx in-chamber activities and closed the door. Sequence of events:

Filiberto checked for TMS QPD cable grounds at the feedthru. All ok there. (Actually did this yesterday afternoon.)

Kyle and Gerardo finished the port work (that was scheduled in the original vent plan).

Fil checked the ESD pinout multiple times with aid from an "inside" guy, in order to make clear the understanding of the pins on the air-side of the feedthru.

Jim unlocked the ISI.

Performed the discharge procedure on ETMx as per T1500101 - again, this took around 1 hour.

Swapped the vertical witness plates (1" and 4") that were mounted on the QUAD structure. 1" optic vertical on floor is now: SN 242.

Removed all equipment and re-suspended lower masses.

Dust counts taken throughout. Counts were nominally 30-200 counts in all sizes of particles, with a few bursts up to 500 in the 0.3um size once in a while when 2 or more people were moving in the chamber. Counts were zero or 30 (max) when entering the chamber after lunch or in the morning.

Measured ETMx main and reaction chain P and V TFs. All looked healthy with purge air on and soft cover over door.

Door being put on.

Checked EQ (earthquake) stops (Note this is the same as we found/set on ETMy):

Barrels of ERM ~2mm

Barrels of ETM ~1.5-2mm

12 o'clock stop ~3+mm

PenRe barrel ~1.5-2mm

PUM barrel ~2mm

All face stops 1-1.5mm

Purge air has been valved off.

Dang it - I didn't relogin - Betsy posted this, not Corey.

Discharge of ETMx WBSC9 (in chamber)

Using the ETM / ERM Gap discharge procedure https://dcc.ligo.org/LIGO-T1500101 we discharged the optics in chamber at ETMx. As before the procedure includes a swing back of the ERM i.e. an increase in the gap between the optics. Using the electrometer https://www.trifield.com/content/ultra-stable-surface-dc-volt-meter/ mounted centrally (on an X brace) 1" from the back of the ERM (i.e. between the ERM and TMS) we measured the following voltage: -

Note: - Prior to starting we zeroed the electrometer (10:28 am) with the cap on.

1) At start (after electrometer was mounted 1" from back of ERM and prior to swing back) = 21.9 V (10:30 am)

Note: During work on pinning ESD the reading went to = 17.2 V. Then the locking of the sus took it up to = 25.9 V. More to follow.

2) During mounting of swing back tooling = 26.6 V.

3) Electrometer was moved 5mm away from the ERM to allow for space during swingback = N/R V.

Note: Jim came into chamber to un-lock ISI. The ESD was re-pinned twice at this time. At this point (for safety) we took off electrometer.

4) (Once electrometer was back on & Jim was finished) During swingback went up to peak of = 18.9 V.

5) Once Electrometer was moved back to being 1" from optic (and in chamber team in home position) = 21.1 V.

6) At this point we broke for lunch. Once everyone was out of the chamber and soft door cover on = 20.6 V.

7) When we came back from lunch (2 hours later) = 9.5 V.

8) (When Danny entered chamber just after) the soft door cover came off = 8.8 V (1:38 pm)

Note: At this point humidity was 26%.

9) After completion of ETM / ERM Gap discharge procedure (inc 5 min after home) = 4.0 V (and decaying).

10) After swing back of ERM (back to original position) and with electrometer reset to being 1" from optic = 4.9 V

Note: - At this point team started to suspend the chains and run (basic) transfer functions on both the main chain and the reaction chain. We left the electrometer in place during this time.

11) At end (prior to doors on) at pm = 3.6 V *.

12) Final number - At the end we put the cap back on the electrometer and it was now reading 1.4 V. Therefore we are calling the final number = 2.2 V (i.e. 3.6 V - 1.4 V).

Note: - The final number was not decaying as per previous on Y end. More to follow on this. Data was collected over 10+ minutes.

Note: - Humidity was 28% just before doors on. Doors were in place at around 4:03 pm.

Kate Gushwa, Gary Traylor, Danny Sellers, Travis Sadecki, Betsy Weaver and Calum Torrie

[sus crew]

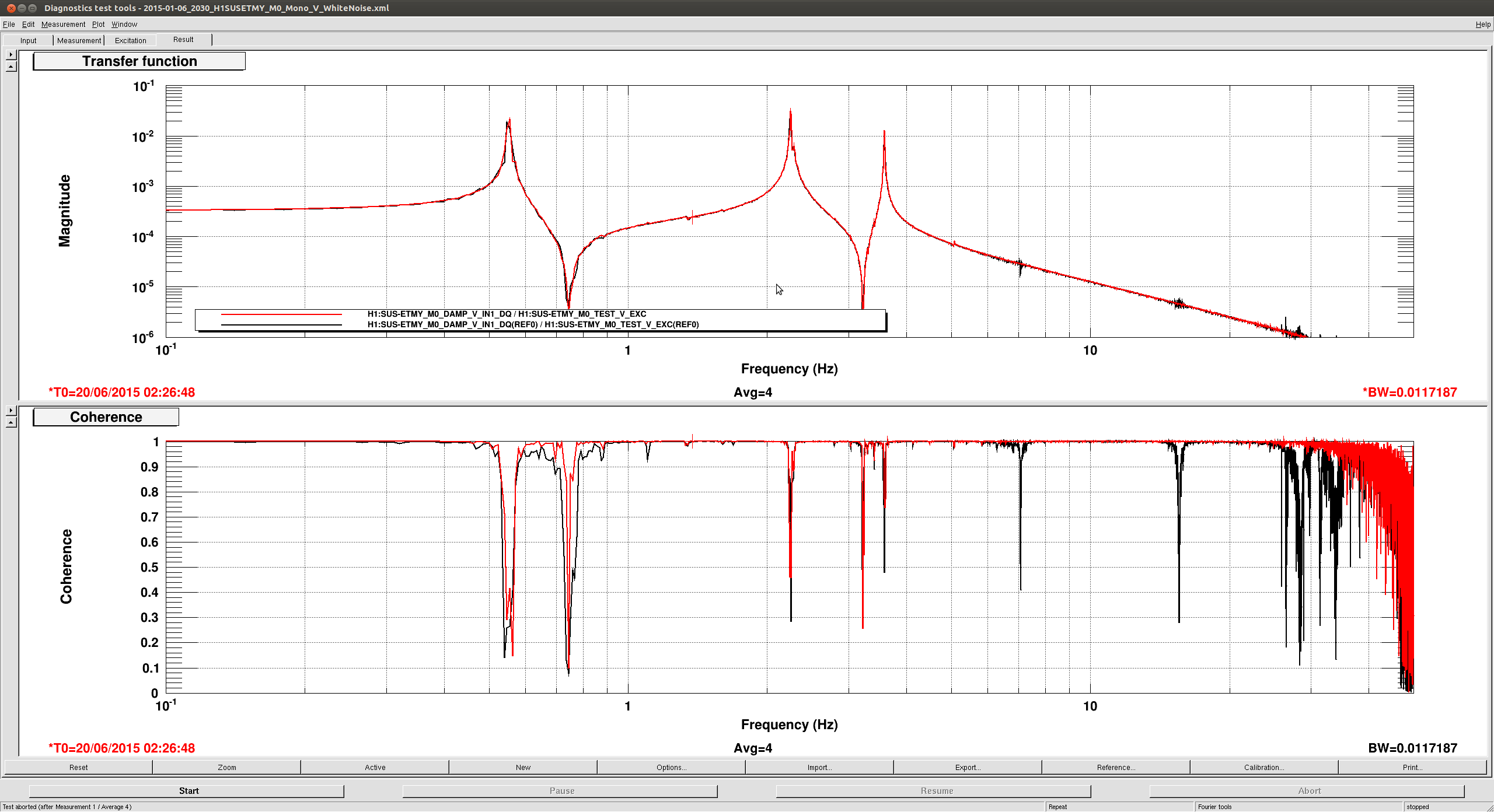

Following the in chamber work from today at end-Y we took quick TFs on the quad and the transmon. They look ok.

Since the pump won't happen before tomorrow I started matlab tfs for all DOF.

The measurement will be running for few hours.

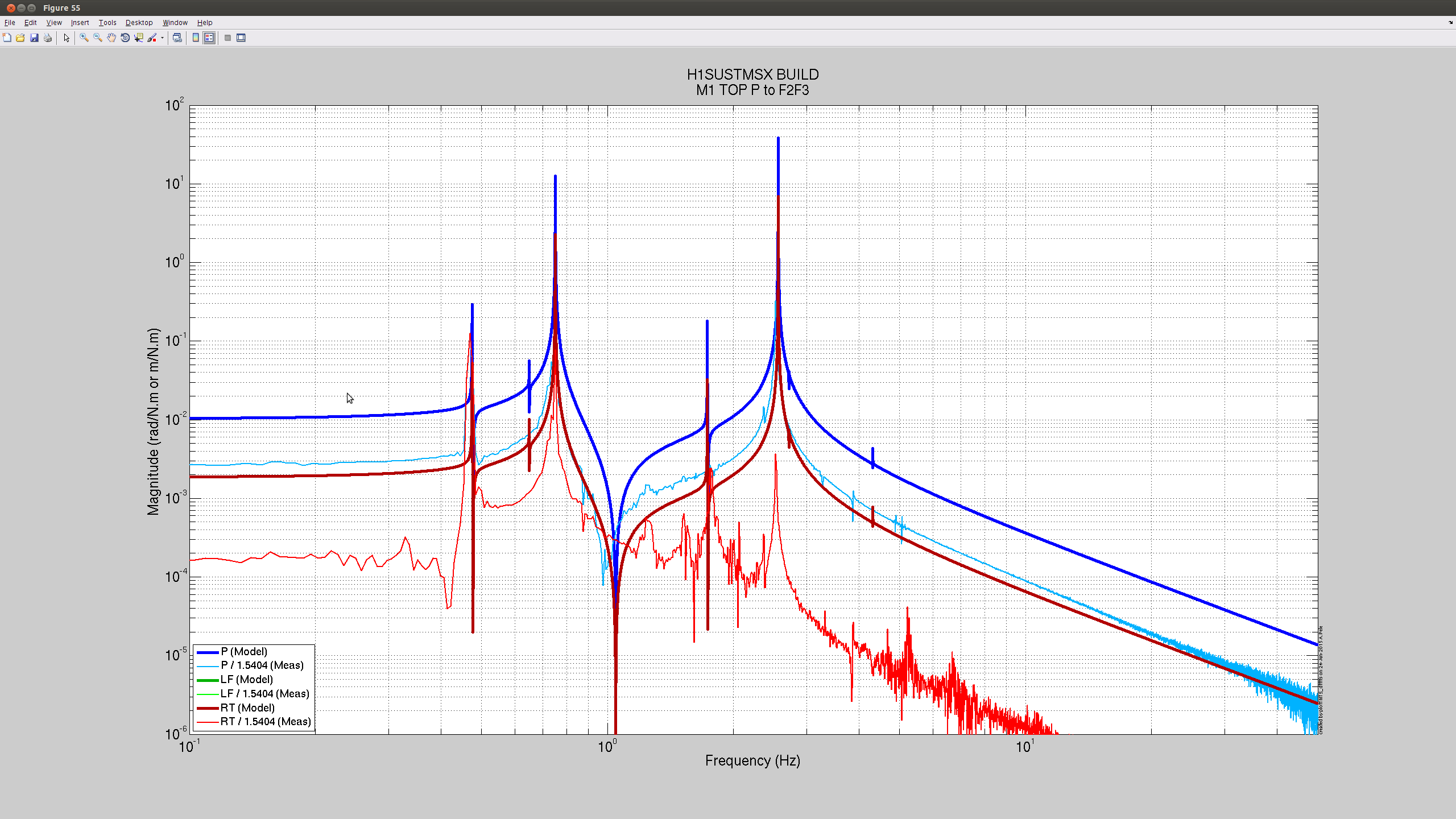

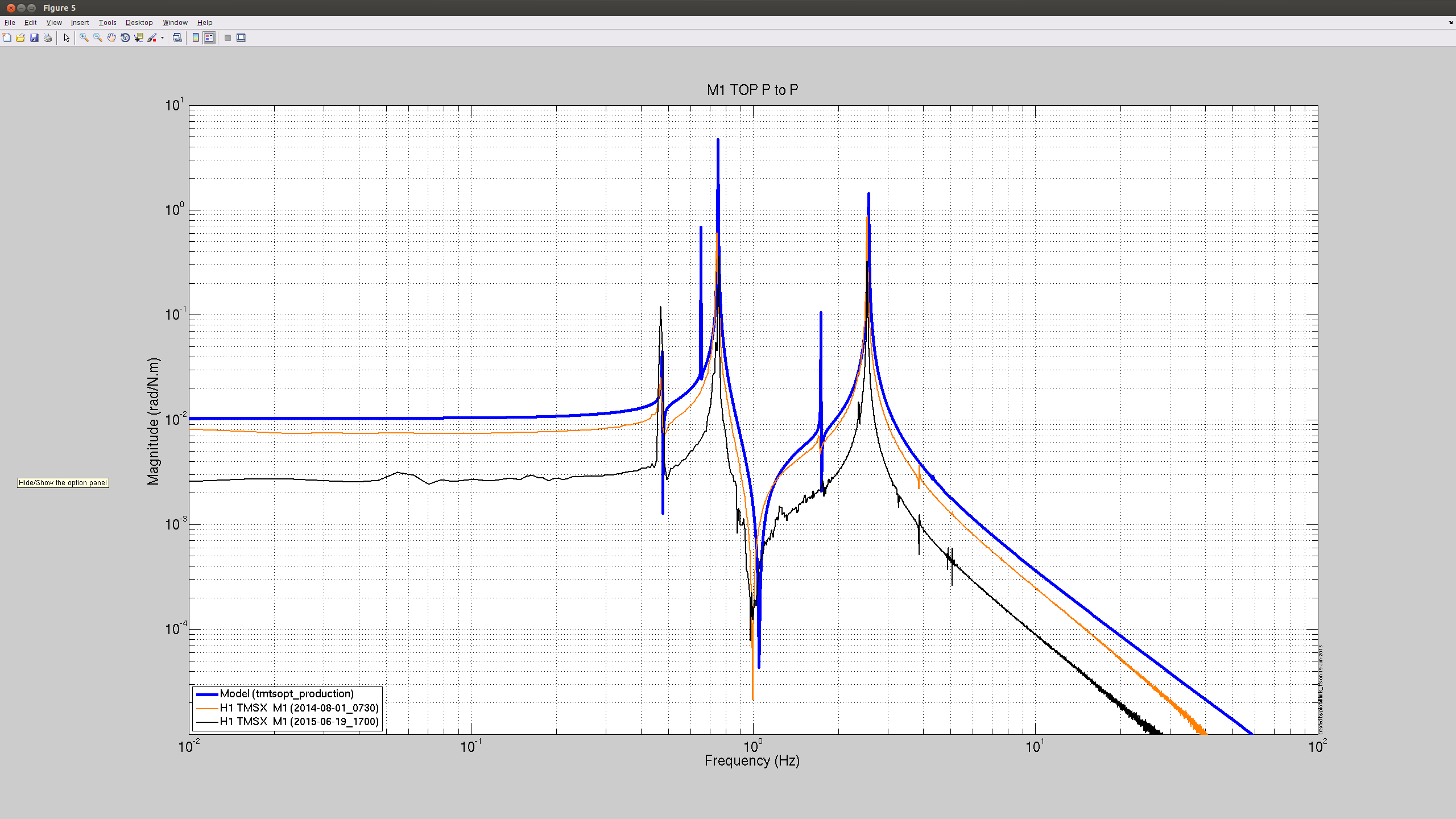

More clue on the TMSX measurement showing a different TF than before the vent (cf log below 19246). I looked at the response from pitch and vertical drive to the individual osems (LF, RT) and it looks like something is funny with the RT osem which should have the same response as the LF one, cf light red curves below

EDIT : Actually, LF and RT osem response are superposed on the graphs (green LF lies under red RT), so their response is the same.

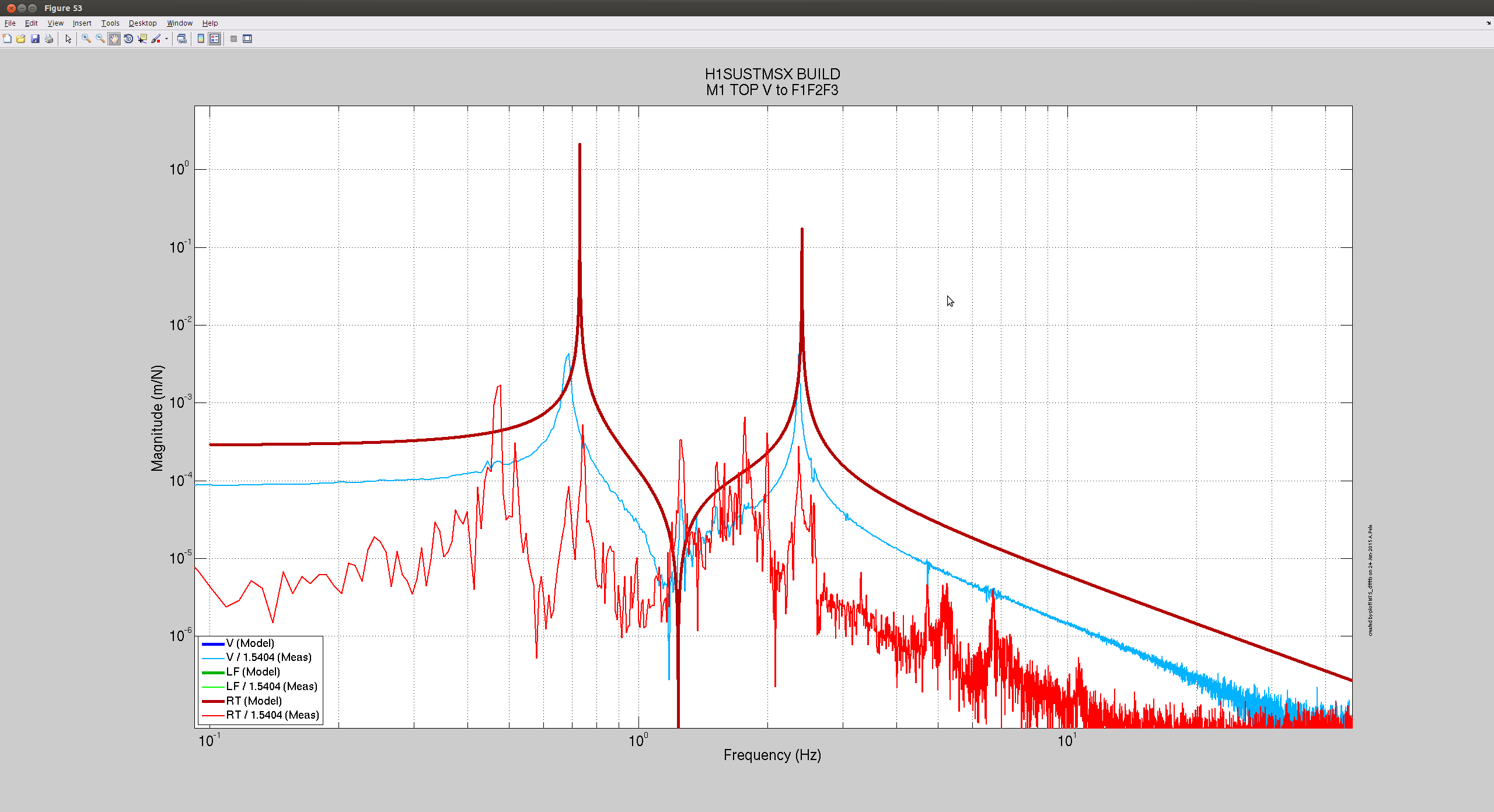

ETMY and TMSY transfer functions were measured on Thursday after the doors went on, with chamber at atmospheric pressure. They did not show signs of rubbing, cf the first two pdf attached showing good agreement with previous measurements.

Today, I remeasured the vertical dof, after the suspension sagged ~120um from the pressure drop, and it still looks fine, cf figures below.

ETMX and TMSX were measured today when pressure in chamber was about 3 Torrs, after the QUAD sagged by about 130um.

The second pdf attachment on the log above was supposed to show TMSY transfer functions. Attached is the correct pdf.

Kyle -> Soft-closed GV7 and GV5 -> Vented HAM6 Bubba, Hugh, Jim W. -> Removed HAM6 South and East doors Kyle, Gerardo -> Installed NEG and TMDS reducer nipple+valve+elbow at X-end Danny S., Gary T., Gerardo, Kyle -> Hung North door on BSC10 (NOTE: Noticed ~1 hour before hanging door on BSC10 that the purge-air drying tower had been left off since tying-in the 1" TMDS line on Monday afternoon -> Turned it back on and notified the ETMY discharge crew)

Forgot to log -> Purge-air measured <-39 C just prior to venting HAM6

Dan Hoak gave us a clue on page 19067 and then Bas Swinkels ran Excavator and found that the channel H1:SUS-OMC_M1_DAMP_L_IN1_DQ had the highest correlation with the fringes. Andy Lundgren pointed me to equation 3 from “Noise from scattered light in Virgo's second science run data” which predicts the fringe frequency from a moving scatterer as f_fringe = 2*v_sc/lambda. Using the time from Dan and the channel from excavator and the fringe prediction, I wrote the attached m-file. Fig 1 shows the motion, velocity, and predicted frequency from OMC M1 longitudinal. Figure 2 shows the predicted frequency overlayed with the fringes in DELTAL. Math works.
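The fringe prediction f_fringe = 2*v_sc/lambda can be sketched in a few lines. This is an illustrative Python version and not the attached m-file. It assumes the position channel is calibrated in micrometres, a laser wavelength of 1064 nm, and a simple finite-difference derivative. The sample rate is a parameter because the channel rate is not stated here.

```python
import math

WAVELENGTH_M = 1064e-9  # assumed laser wavelength


def velocity(position_um, sample_rate_hz):
    """Finite-difference velocity (m/s) from positions in micrometres."""
    n = len(position_um)
    v = []
    for i in range(n):
        lo = max(i - 1, 0)          # one-sided difference at the ends
        hi = min(i + 1, n - 1)
        dt = (hi - lo) / sample_rate_hz
        v.append((position_um[hi] - position_um[lo]) * 1e-6 / dt)
    return v


def fringe_frequency(position_um, sample_rate_hz, wavelength_m=WAVELENGTH_M):
    """Predicted fringe frequency (Hz): f = 2 * |v| / lambda."""
    return [2.0 * abs(v) / wavelength_m
            for v in velocity(position_um, sample_rate_hz)]


# Synthetic example: 2 um peak-to-peak motion at 0.5 Hz, sampled at 256 Hz.
fs = 256.0
x_um = [math.sin(2 * math.pi * 0.5 * k / fs) for k in range(int(4 * fs))]
print(max(fringe_frequency(x_um, fs)))
```

For the synthetic sinusoid the peak velocity is 2*pi*f*A, so the peak fringe frequency comes out near 5.9 Hz. Reaching ~50 Hz requires a velocity of roughly 27 um/s.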

PS. Thanks to Jeff and the SUS team for calibrating these channels and letting me know they are in um. Thanks to Gabriele for pointing out an error in an earlier draft of the derivative calculation.

Remember -- HAM6 is a mess of coordinate systems from 7 teams of people all using their own naming conventions. Check out G1300086 for a translation between them. The message: this OMC Suspension's channel, which are the LF and RT OSEMs on the top mass (M1) of the double suspension (where the OMC breadboard is the bottom mass), converted to "L" (for longitudinal, or "along the beam direction", where the origin is defined at the horizontal and vertical centers of mass of M1), is parallel with the beam axis (the Z axis in the cavity waist basis) as it goes into the OMC breadboard.

Jeff, thanks very much for that orientation; that diagram is extremely useful. The data leads me to think the scattering is dominated by the L degree of freedom though. Here's why - In raw motion, T and V only get a quarter of a micron or so peak-peak, while L is passing through 2 microns peak-peak during this time (see figure 1, y axis is microns). Figures 2, 3, and 4 are predicted fringes from L, T, and V. L is moving enough microns per second to get up to ~50Hz, whereas T and V only reach a few Hz. I'd be happy to follow up further.

Peter F asked for a better overlay of the spectrogram and predicted fringe Frequency. Attached 1) raw normalized spectrogram (ligoDV), 2) with fringe frequency overlay from original post above, 3) same but b/w high contrast and zoomed.

Also, I wanted to link a few earlier results on OMC backscattering: 17919, 17264, 17910, 17904, 17273. DetChar is now looking into some sort of monitor for this, so we can say how often it happens. Also, Dan has asked us if we can also measure reflectivity from the scattering fringes. We'll try.

I've taken a look at guardian state information from the last week, with the goal of getting an idea of what we can do to improve our duty cycle. The main message is that we spent 63% of our time in the nominal low noise state, 13% in the down state (mostly because the DOWN state was requested), and 8.7% of the week trying to lock DRMI.

Details

I have not taken into account if the intent bit was set or not during this time; I'm only considering the guardian state. These are based on 7 days of data, starting at 19:24:48 UTC on June 3rd. The first pie chart shows the percentage of the time during the week the guardian was in a certain state. For legibility, states that took up less than 1% of the week are unlabeled. Some of the labels are slightly in the wrong position but you can figure out where they should be if you care. The first two charts show the percentage of the time during the week we were in a particular state; the second chart shows only the unlocked time.

DOWN as the requested state

We were requesting DOWN for 12.13% of the week, or 20.4 hours. Down could be the requested state because operators were doing initial alignment, we were in the middle of maintenance (4 hours), or it was too windy for locking. Although I haven't done any careful study, I would guess that most of this time was spent on initial alignment.

There are probably three ways to reduce the time spent on initial alignment:

Bounce and roll mode damping

We spent 5.3% of the week waiting in states between lock DRMI and LSC FF, when the state was already the requested state. Most of this was after RF DARM, and is probably because people were trying to damp bounce and roll or waiting for them to damp. A more careful study of how well we can tolerate these modes being rung up will tell us whether it is really necessary to wait, and better automation using the monitors can probably help us damp them more efficiently.

Locking DRMI

We spent 8.7% of the week locking DRMI, 14.6 hours. During this time we made 109 attempts to lock it (10 of these ended in ALS locklosses), and the median time per lock attempt was 5.4 minutes. From the histogram of time for DRMI locking attempts (3rd attachment), you can see that the mean locking time is increased by 6 attempts that took more than a half hour, presumably either because DRMI was not well aligned or because the wind was high. It is probably worth checking if these were really due to wind or something else. This histogram includes unsuccessful as well as successful attempts.
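The hours quoted in this entry follow from the percentages of a 7-day week. The same numbers also give a mean time per DRMI attempt for comparison with the median. The mean is derived here from the quoted totals and is not a figure stated in the entry.

```python
WEEK_HOURS = 7 * 24  # the study covers 7 days of data


def hours_from_percent(percent, total_hours=WEEK_HOURS):
    """Convert a percentage of the week into hours."""
    return percent / 100.0 * total_hours


down_hours = hours_from_percent(12.13)   # DOWN requested
drmi_hours = hours_from_percent(8.7)     # locking DRMI
mean_attempt_min = drmi_hours * 60.0 / 109   # 109 attempts

print(round(down_hours, 1), round(drmi_hours, 1), round(mean_attempt_min, 1))
```

A mean of about 8 minutes against a median of 5.4 minutes is consistent with a few very long attempts pulling the mean up.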

Probably the most effective way to reduce the time we spend locking DRMI would be to prevent locklosses later in the lock acquisition sequence, which we have had many of this week.

Locklosses

A more careful study of locklosses during ER7 needs to be done. The last plot attached here shows from which guardian state we lost lock; they are fairly well distributed throughout the lock acquisition process. The locklosses from states after DRMI has locked are more costly to us, while locklosses from the state "locking arms green" don't cost us much time and are expected as the optics swing after a lockloss.

I used the channel H1:GRD-ISC_LOCK_STATE_N to identify locklosses to make the pie chart of locklosses here; specifically I looked for times when the state was lockloss or lockloss_drmi. However, this is a 16 Hz channel and we can move through the lockloss state faster than 1/16th of a second, so doing this I missed some of the locklosses. I've added 0.2 second pauses to the lockloss states to make sure they will be recorded by this 16 Hz channel in the future. This could be a bad thing since we should move to DOWN quickly to avoid ringing up suspension modes, but we can try it for now.
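A toy model shows how a state shorter than the sample period can be missed. It assumes the recorded channel is simply a snapshot of the current state at each sample instant, which is a simplification. The state names and durations below are made up for illustration.

```python
def sampled_states(intervals, sample_rate_hz):
    """States seen at each sample instant.

    intervals: ordered list of (state_name, duration_s) pairs.
    """
    period = 1.0 / sample_rate_hz
    total = sum(duration for _, duration in intervals)
    seen = []
    k = 0
    while k * period < total:
        t = k * period
        start = 0.0
        for name, duration in intervals:
            if start <= t < start + duration:
                seen.append(name)
                break
            start += duration
        k += 1
    return seen


# A 20 ms pass through LOCKLOSS falls between two 16 Hz samples...
fast = [("LOCKED", 1.01), ("LOCKLOSS", 0.02), ("DOWN", 1.0)]
print("LOCKLOSS" in sampled_states(fast, 16))   # False

# ...while a 0.2 s pause spans at least three samples.
slow = [("LOCKED", 1.01), ("LOCKLOSS", 0.2), ("DOWN", 1.0)]
print("LOCKLOSS" in sampled_states(slow, 16))   # True
```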

A version of the lockloss pie chart that spans the end of ER7 is attached.

I'm bothered that you found instances of the LOCKLOSS state not being recorded. Guardian should never pass through a state without registering it, so I'm considering this a bug.

Another way you should be able to get around this in the LOCKLOSS state is by just removing the "return True" from LOCKLOSS.main(). If main returns True the state will complete immediately, after only the first cycle, which apparently can happen in less than one CAS cycle. If main does not return True, then LOCKLOSS.run() will be executed, which defaults to returning True if not specified. That will give the state one extra cycle, which will bump its total execution time to just above one 16th of a second, therefore ensuring that the STATE channels will be set at least once.
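The main/run behaviour described above can be mimicked with a toy executor. This is a simplified illustration of the description in this comment, not Guardian's real execution loop. The class names and `cycles_in_state` are hypothetical.

```python
class LockLossBefore:
    """main() returns True, so the state completes on its first cycle."""

    def main(self):
        return True

    def run(self):
        return True


class LockLossAfter:
    """main() returns nothing, so run() gets one extra cycle."""

    def main(self):
        pass

    def run(self):
        return True


def cycles_in_state(state, max_cycles=100):
    """Toy executor: main() on the first cycle, then run() once per cycle."""
    cycles = 1
    done = state.main()
    while not done and cycles < max_cycles:
        cycles += 1
        done = state.run()
    return cycles


print(cycles_in_state(LockLossBefore()), cycles_in_state(LockLossAfter()))
```

In this toy model the second form occupies two cycles instead of one, which is the extra cycle the comment relies on.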

reported as Guardian issue 881

Note that the corrected pie chart includes times that I interpreted as locklosses that in fact were times when the operators made requests that sent the IFO to down. So, the message is that you can imagine the true picture of locklosses is somewhere intermediate between the first and the second pie charts.

I realized this new mistake because Dave asked me for an example of a gps time when a lockloss was not recorded by the channel I grabbed from nds2, H1:GRD-ISC_LOCK_STATE_N. An example is

1117959175

I got rid of the return True from the main and added run states that just return true, so hopefully next time around the channel that is saved will record all locklosses.