In 88046, the OFI temperature was slowly scanned while we were in observing. We lost lock while the temperature was still scanning, but not before we had passed through the minimum of the power sent to HAM7.

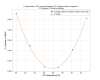

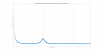

The first attachment shows the time series. The second shows that the AS power drifted during the test, but the ratio of OFI PD A (a pick-off of the TFP reflection towards HAM7) to AS_C (the power into HAM6) shows the OFI temperature dependence nicely.
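The reason the ratio works can be sketched with synthetic numbers. This is a minimal illustration, assuming both OFI PD A and AS_C scale linearly with the AS port power, so a common drift in that power cancels in the ratio. The 20% drift and 0.5% reflected fraction are hypothetical, not measured values, and `leakage_ratio` is an illustrative helper name.

```python
import numpy as np


def leakage_ratio(ofi_pd_a, as_c):
    """Sample-by-sample ratio of OFI PD A (TFP reflection pick-off) to AS_C."""
    return np.asarray(ofi_pd_a, dtype=float) / np.asarray(as_c, dtype=float)


# Hypothetical illustration: the AS port power drifts up by 20% during the test,
# while the fraction of it reflected towards HAM7 stays fixed at 0.5%.
t = np.linspace(0.0, 1.0, 101)
as_power = 1.0 + 0.2 * t
ofi_pd_a = 0.005 * as_power  # assumed linear in AS power
as_c = as_power              # assumed proportional to AS power (unit gain)
ratio = leakage_ratio(ofi_pd_a, as_c)
```

Each raw channel drifts with the AS power, while `ratio` stays at the fixed reflected fraction, which is why the temperature dependence is easier to see in the ratio.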

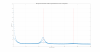

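In the third attachment, the blue dots are averages over the last 10 minutes at each OFI temperature. These are fit to a model with three free parameters (the constant leakage $L_0$, the temperature coefficient $\alpha$ in degrees of rotation per degree C, and the temperature $T_{\mathrm{best}}$ that minimizes leakage), with the amplitude of the $\sin^2$ term fixed to 1:

$$\text{leakage ratio} = L_0 + 1 \cdot \sin^2\!\left(\frac{\pi}{180}\,\alpha\,(T - T_{\mathrm{best}})\right)$$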
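A fit of this form could be sketched with SciPy's `curve_fit`. This is a minimal sketch, not the script used for the plot: the helper names (`leakage_model`, `last_window_mean`, `fit_leakage`), the starting guess, and the synthetic temperatures and parameters in the usage lines are all hypothetical. The averaging helper assumes each temperature step is available as arrays of times in seconds and ratio values.

```python
import numpy as np
from scipy.optimize import curve_fit


def leakage_model(temp, constant_leakage, angle_coefficient, best_temp):
    """Leakage ratio vs OFI temperature, with the sin^2 amplitude fixed to 1.

    constant_leakage:  temperature-independent floor (fraction)
    angle_coefficient: polarization rotation, degrees per degree C
    best_temp:         OFI temperature (degrees C) that minimizes leakage
    """
    temp = np.asarray(temp, dtype=float)
    angle_rad = np.deg2rad(angle_coefficient * (temp - best_temp))
    return constant_leakage + np.sin(angle_rad) ** 2


def last_window_mean(times, values, window_s=600.0):
    """Mean of the samples in the final window_s seconds (default 10 min) of one step."""
    times = np.asarray(times, dtype=float)
    values = np.asarray(values, dtype=float)
    mask = times >= times[-1] - window_s
    return values[mask].mean()


def fit_leakage(temps, ratios, p0):
    """Least-squares fit of leakage_model; returns (best-fit params, 1-sigma errors)."""
    popt, pcov = curve_fit(leakage_model, temps, ratios, p0=p0)
    return popt, np.sqrt(np.diag(pcov))


# Usage with hypothetical, noise-free synthetic data (not the measured points):
temps = np.linspace(20.0, 50.0, 7)
ratios = leakage_model(temps, 0.0034, 0.12, 35.0)
params, errors = fit_leakage(temps, ratios, p0=(0.003, 0.1, 33.0))
```

Because $\sin^2$ is even, $\alpha$ and $-\alpha$ give identical curves, so only the magnitude of the fitted coefficient is meaningful. A starting guess near the expected values also helps the fit settle on the right branch of the periodic model.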

The impact of the OFI misrotation sits on top of a 0.34% constant leakage that does not change with OFI temperature, presumably from the reflection of the thin-film polarizer. The OFI seems to rotate the polarization by 0.12 degrees per degree C, which can be compared to the estimate of 0.2 deg/deg C from 57965.
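One way to put the two coefficients side by side is to ask how far the OFI temperature can sit from the best temperature before the misrotation term equals the 0.34% constant floor, i.e. solving $\sin^2\!\left(\frac{\pi}{180}\,\alpha\,\Delta T\right) = L_0$ for $\Delta T$. This is an illustrative calculation on the numbers in this entry, not part of the original analysis; the helper names are hypothetical, and it assumes the same unit-amplitude model as the fit.

```python
import math

CONSTANT_LEAKAGE = 0.0034  # 0.34% temperature-independent floor from the fit


def misrotation_leakage(delta_temp, coeff_deg_per_c):
    """Temperature-dependent leakage for an offset delta_temp (deg C) from best_temp."""
    return math.sin(math.radians(coeff_deg_per_c * delta_temp)) ** 2


def offset_matching_floor(coeff_deg_per_c, floor=CONSTANT_LEAKAGE):
    """Offset from best_temp (deg C) at which the misrotation term equals the floor."""
    return math.degrees(math.asin(math.sqrt(floor))) / coeff_deg_per_c


for coeff in (0.12, 0.2):  # this entry's fit vs. the 57965 estimate
    print(f"{coeff} deg/C: total leakage doubles at "
          f"{offset_matching_floor(coeff):.1f} C from best_temp")
```

Since the offset scales as $1/\alpha$, the 0.2 deg/deg C estimate implies a temperature window about 0.12/0.2 = 0.6 times as wide as the one implied by the fitted 0.12 deg/deg C.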