ALS build against RCG 2.9.2 [WP5183]

Jeff, Jim:

The h1alse[x,y] models were built against RCG tag 2.9.2 and restarted. No DAQ restart was required. The RCG version number shown on the GDS_TP screens is 4002 for this build.

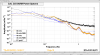

DAQ reconfiguration [WP5184]

Jeff, Jim, Dave:

The DAQ was restarted at the following local times: 07:24, 07:25, 09:58 and 11:39.

The first two restarts were to apply the new INI files if they had been updated (they had not). The first restart raised DAQ errors on certain front ends; the second was to see whether those errors could be cleared.

The third was to apply the new H1BROADCAST.ini and H1EDCU_HWS.ini files. Again, certain front ends needed their mx-streams restarted.

It was then found that dataviewer would not show any fast channels in the signal selection list, and the slow channels were not quite correct. Jim tracked this down to a badly formed line in my updated H1EDCU_HWS.ini file: I had forgotten to put a closing square bracket at the end of one channel name. This was corrected, and the fourth DAQ restart applied the fix (this time no front ends were DAQ glitched).

Take-home message: always run inicheck on new INI files.
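The error above (a channel header missing its closing `]`) is the kind of mistake a simple syntax pass catches before a DAQ restart. The Python sketch below is a hypothetical illustration only, not the actual inicheck tool and not its rules; it assumes each channel appears as a `[NAME]` header on its own line, and the channel names in the sample are made up.

```python
import re

# Hypothetical minimal check, NOT the real inicheck: a well-formed header is
# "[" + one or more non-bracket characters + "]" with nothing else on the line.
HEADER_RE = re.compile(r"^\[[^\[\]]+\]$")


def find_bad_headers(lines):
    """Return (line_number, text) for every line that starts with '['
    but is not a well-formed '[NAME]' header."""
    bad = []
    for num, raw in enumerate(lines, start=1):
        line = raw.strip()
        if line.startswith("[") and not HEADER_RE.match(line):
            bad.append((num, line))
    return bad


if __name__ == "__main__":
    # Made-up sample lines; the last header is missing its closing bracket.
    sample = [
        "[default]",
        "datarate=16",
        "[H1:HWS-EXAMPLE_CHANNEL_A]",
        "[H1:HWS-EXAMPLE_CHANNEL_B",
    ]
    for num, text in find_bad_headers(sample):
        print(f"line {num}: malformed header {text!r}")
```

Run on the sample, this reports line 4. A real check would also need to validate the key/value lines under each header, which this sketch does not attempt.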

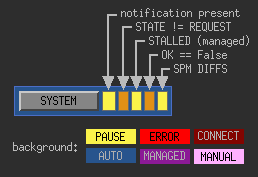

Guardian Reboots:

Jamie, Betsy, Jeff, Dave:

We rebooted the h1guardian0 machine twice this morning. The first reboot was to see what the load average starts at after a reboot (answer: 7 after one hour of running). The second was to apply Jamie's newer version of guardian.

prior version (guardian, ezca) = 1390, 443

new version (guardian, ezca) = 1445, 474