TITLE: 11/24 Day Shift: 1530-0030 UTC (0730-1630 PST), all times posted in UTC

STATE of H1: Lock Acquisition

OUTGOING OPERATOR: TJ

CURRENT ENVIRONMENT:

SEI_ENV state: CALM

Wind: 4 mph gusts, 2 mph 3 min avg

Primary useism: 0.02 μm/s

Secondary useism: 0.30 μm/s

QUICK SUMMARY:

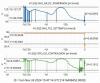

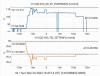

H1 had a lock of almost 16 hr before the recent lockloss (with some SQZ issues due to the ISS), but the lockloss has NO IMC or FSS tags (link). H1 has been having trouble with the green arms (repeated INCREASE FLASHES seen yesterday), so I am starting an initial alignment.

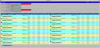

There is a RED VACstat box on the CDS Overview. It reports 1 glitch detected and a "multiple" state, which I assume is because it sees a glitch at the LVEA's BSC3 and at EX (see attached).

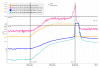

Environmentally, microseism is between the 50th and 95th percentiles, and winds are low.