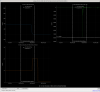

When Ibrahim relocked the IFO, the fast shutter guardian was stuck in the state "Check Shutter".

This appears to be because it hung while getting data with cdsutils getdata.

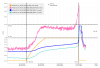

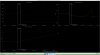

The first two attachments show a time when the shutter triggered, the trigger showed up in the HAM6 GS13s, and the guardian passed. The next attachment shows our most recent high power lockloss. The shutter also triggered there and showed up at a similar level in the GS13s, but the guardian didn't move on.

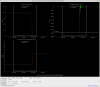

The guardian log screenshot shows both of these times. It seems the guardian was still waiting for data, so the test neither passed nor failed.

To get around this and go to observing, we manually requested HIGH_ARM_POWER.

Vicky points out that TJ has solved this problem for other guardians using his timeout utils; this guardian may need them added.

Another thing to do is make sure we would notice that the test hasn't passed before we power up.
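A minimal sketch of the idea, assuming nothing about TJ's actual timeout utils or the real guardian code. It runs the blocking data fetch in a daemon thread, waits a bounded time, and turns a timeout into an explicit "TIMEOUT" result instead of an indefinite wait, so the check can never sit unresolved before power up. `call_with_timeout`, `check_shutter`, `fetch`, and `threshold` are all hypothetical names; in a real version `fetch` would wrap the cdsutils getdata call for the HAM6 GS13 channels.

```python
import threading
import time


def call_with_timeout(func, timeout_s, *args, **kwargs):
    """Run func in a daemon thread; return (finished, value).

    Hypothetical helper, not TJ's timeout utils. A hung call is left
    running in the background (Python threads can't be killed), but the
    caller stops waiting after timeout_s seconds.
    """
    result = {}

    def target():
        try:
            result["value"] = func(*args, **kwargs)
        except Exception as exc:  # re-raised in the caller's thread below
            result["error"] = exc

    worker = threading.Thread(target=target, daemon=True)
    worker.start()
    worker.join(timeout_s)
    if worker.is_alive():
        return False, None
    if "error" in result:
        raise result["error"]
    return True, result["value"]


def check_shutter(fetch, timeout_s, threshold):
    """Return "PASS", "FAIL", or "TIMEOUT" (hypothetical shutter check).

    fetch: callable returning a sequence of samples (e.g. GS13 data).
    threshold: assumed amplitude the shutter kick must exceed.
    """
    finished, data = call_with_timeout(fetch, timeout_s)
    if not finished:
        # Report a definite non-pass so it gets noticed before power up.
        return "TIMEOUT"
    return "PASS" if max(abs(x) for x in data) > threshold else "FAIL"
```

The point of the explicit "TIMEOUT" state is that anything other than "PASS" can be treated as "don't power up" and flagged to the operator, rather than the state silently waiting forever.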

Looks like I had left the PSL computer in service mode after work on Tuesday; fortunately this doesn't affect anything operationally, so no harm done. I've now taken the computer out of service mode.

I also added 100 mL of water to the chiller, since Ibrahim had gotten the warning from Verbal today.