Lockloss at 17:52 UTC, possibly from an M6.2 earthquake in Costa Rica.

Relocking is going to be tough, given the rising microseism (now climbing above the 90th percentile) and the winds creeping up.

Sat Oct 12 10:11:39 2024 INFO: Fill completed in 11min 35secs

TC-A looks good (-184C); TC-B only dropped to -154C.

TITLE: 10/12 Day Shift: 1430-2330 UTC (0730-1630 PST), all times posted in UTC

STATE of H1: Observing at 153Mpc

OUTGOING OPERATOR: Oli

CURRENT ENVIRONMENT:

SEI_ENV state: CALM

Wind: 3mph Gusts, 2mph 3min avg

Primary useism: 0.03 μm/s

Secondary useism: 0.38 μm/s

QUICK SUMMARY:

I adjusted the OPO temperature from 14:31 to 14:32 UTC and gained ~5 Mpc of range.

We dropped out of Observing overnight from 11:47 to 11:48 UTC and from 12:00 to 12:17 UTC because the ITMY_CO2 laser had to relock.

h1pslcam1 isn't connecting on NUC21

TITLE: 10/12 Eve Shift: 2330-0500 UTC (1630-2200 PST), all times posted in UTC

STATE of H1: Observing at 154Mpc

INCOMING OPERATOR: Oli

SHIFT SUMMARY: Very quiet shift tonight; H1 was locked and observing for the duration, with the current lock stretch up to 8 hours. One high-profile GW candidate and a couple of small earthquakes came through, but not much else to report.

After finding that the broad 60 Hz shoulders in L1 appear to be caused by a problem with line subtraction, as reported in LLO alog 773549, we have checked for related issues in H1. Unlike in L1, the broad shoulders in H1 appear after October 1st rather than after September 17th. This can be seen by comparing H1:GDS-CALIB_STRAIN, H1:GDS-CALIB_STRAIN_NOLINES, and H1:GDS-CALIB_STRAIN_CLEAN at different times across these dates: the shoulders appear in NOLINES and CLEAN. It also appears that the subtraction was not ideal before September 17th either, since some small shoulders are still present.

September 17:

September 26:

October 1st:

October 2nd:

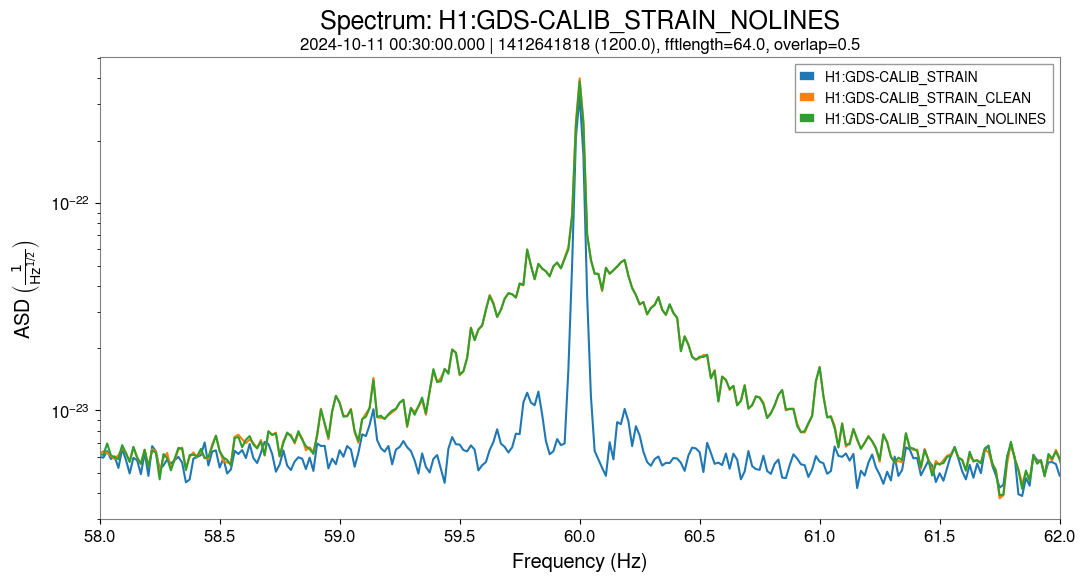

October 11th:
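The comparison above (whether NOLINES and CLEAN carry more power around 60 Hz than CALIB_STRAIN) could be made quantitative with a band-power ratio. This is a minimal sketch, not the analysis behind these plots: it assumes the channels have already been fetched as equally sampled arrays (data access is not shown), and the function names, the 0.5 Hz inner cutoff that skips the line itself, and the 5 Hz outer edge are illustrative assumptions.

```python
import numpy as np


def averaged_power_spectrum(x, seg_len):
    """Hann-windowed periodogram averaged over non-overlapping segments.

    The overall scale is left unnormalized because only ratios are used below.
    """
    n_seg = len(x) // seg_len
    segs = np.asarray(x[: n_seg * seg_len], dtype=float).reshape(n_seg, seg_len)
    win = np.hanning(seg_len)
    return (np.abs(np.fft.rfft(segs * win, axis=1)) ** 2).mean(axis=0)


def shoulder_ratio(reference, cleaned, fs, seg_len, line=60.0, inner=0.5, outer=5.0):
    """Power in `cleaned` relative to `reference`, summed over the shoulder
    band inner < |f - line| <= outer (the line bin itself is excluded)."""
    freqs = np.fft.rfftfreq(seg_len, d=1.0 / fs)
    offset = np.abs(freqs - line)
    band = (offset > inner) & (offset <= outer)
    p_ref = averaged_power_spectrum(reference, seg_len)
    p_cln = averaged_power_spectrum(cleaned, seg_len)
    return p_cln[band].sum() / p_ref[band].sum()


# Synthetic usage: the "cleaned" series loses the 60 Hz line but gains
# extra power at 58 and 62 Hz, mimicking shoulders left by subtraction.
fs, seg_len = 512, 4096  # 0.125 Hz resolution, 8 s segments
t = np.arange(64 * fs) / fs
rng = np.random.default_rng(0)
noise = rng.normal(size=t.size)
reference = noise + 10 * np.sin(2 * np.pi * 60 * t)
cleaned = noise + np.sin(2 * np.pi * 58 * t) + np.sin(2 * np.pi * 62 * t)
print(shoulder_ratio(reference, cleaned, fs, seg_len))
```

A ratio near 1 means the subtraction left the shoulders untouched; a ratio well above 1 flags added power next to the line, which is the behaviour described for NOLINES and CLEAN.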

The current gstlal-calibration was installed at both sites on September 3rd (not September 5th, as I've been telling people): at 17:52 UTC at LHO and at 17:19 UTC at LLO.

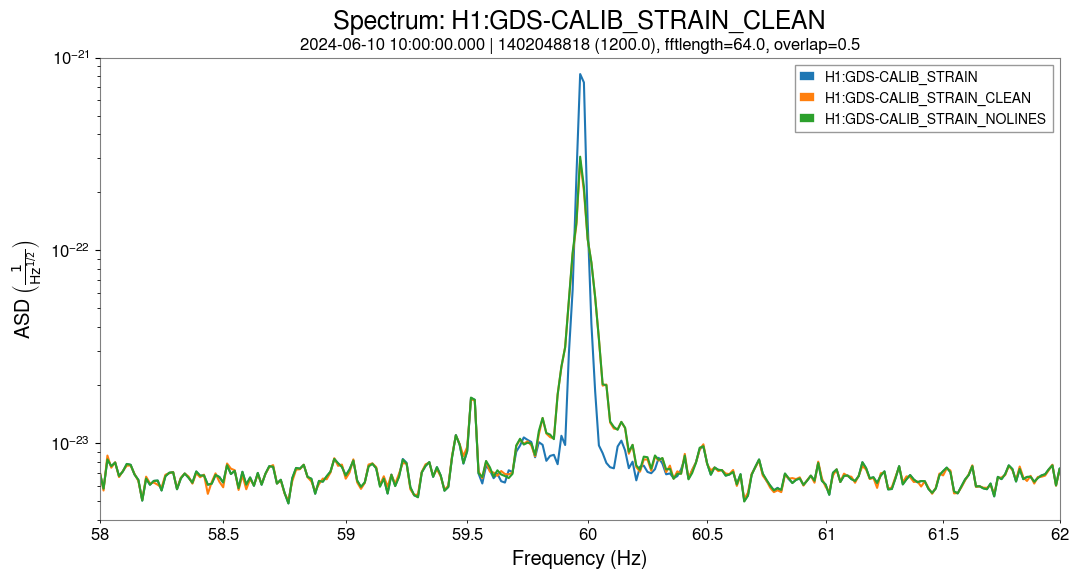

As at LLO, the shoulders seem to get worse approaching September 17th, but even before then the h(t) values for GDS-CALIB_STRAIN_NOLINES and GDS-CALIB_STRAIN_CLEAN around 60 Hz still appear higher than for GDS-CALIB_STRAIN, both before and after the maintenance on September 3rd. I'm adding plots from before and after September 3rd showing this behaviour. Example from June 10th:

TITLE: 10/11 Day Shift: 1430-2330 UTC (0730-1630 PST), all times posted in UTC

STATE of H1: Observing at 157Mpc

INCOMING OPERATOR: Ryan S

SHIFT SUMMARY: The FSS transmitted power has been dropping over the past few (~4?) days, and the TPD notification has been flashing on OPS_OVERVIEW. 2 locklosses today, both with automated relocks. We've been locked for 2.5 hours.

LOG:

| Start Time | System | Name | Location | Lazer_Haz | Task | Time End |
|---|---|---|---|---|---|---|
| 18:13 | FAC | Tyler | Mids | N | 3IFO checks | 18:46 |

TITLE: 10/11 Eve Shift: 2330-0500 UTC (1630-2200 PST), all times posted in UTC

STATE of H1: Observing at 157Mpc

OUTGOING OPERATOR: Ryan C

CURRENT ENVIRONMENT:

SEI_ENV state: CALM

Wind: 7mph Gusts, 4mph 3min avg

Primary useism: 0.03 μm/s

Secondary useism: 0.20 μm/s

QUICK SUMMARY: H1 has been locked and observing for 2 hours. Sounds like it's been a relatively quiet day so far.

We got another false-positive VACSTAT alarm for PT132 due to a noise spike. The alarm was raised at 14:29; I cleared it by restarting vacstat_ioc on cdsioc0 at 15:41.

The AIP for GV10 is red; the system railed about 2 hours ago. It will be assessed next Tuesday or at the next opportunity, and we may need to replace the ion pump for GV10.

FAMIS 26333, last checked in alog80540

All fans within noise range and largely unchanged compared to last check.

The lockloss website isn't updating, with no clear reason why: "online last update: 237.1 min ago (2024-10-11 09:23:15.156027)"

1412712883: not tagged as an IMC lockloss.
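Lockloss times in these entries are quoted as GPS seconds (e.g. 1412712883 here, 1410300819 in the IMC-tag entry). A minimal sketch of converting them to UTC by hand, assuming the 18 s GPS-UTC leap-second offset that has applied since 2017; `gps_to_utc` is a hypothetical helper name.

```python
from datetime import datetime, timedelta, timezone

# GPS time counts from 1980-01-06 00:00:00 UTC and does not include leap seconds.
GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
# GPS - UTC offset; 18 s has been correct since 2017-01-01 (assumed valid here).
GPS_MINUS_UTC_S = 18


def gps_to_utc(gps_seconds: float) -> datetime:
    """Convert GPS seconds to a timezone-aware UTC datetime."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - GPS_MINUS_UTC_S)


print(gps_to_utc(1412712883).isoformat())  # 2024-10-11T20:14:25+00:00
```

The fixed offset only holds between leap seconds; a tool that carries a leap-second table is safer for general use.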

Fri Oct 11 10:12:04 2024 INFO: Fill completed in 12min 0secs

Gerardo confirmed a good fill curbside. TCs looking much better today.

Lockloss at 16:21 UTC (9:47 lock)

18:13 UTC Observing

I added a tag to the locklost tool that tags "IMC" if the IMC loses lock within 50 ms of the AS_A channel seeing the lockloss: MR !139. This will work for all future locklosses.

I then ran just the refined-time plugin on all NLN locklosses from the end of the emergency break (2024-08-21) until now in my personal lockloss account. The first NLN lockloss tagged "IMC" was on 2024-09-13 (1410300819, plot here); they then became more and more frequent (2024-09-19, 2024-09-21), occurring almost every day since 2024-09-23.
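The "IMC" tag rule described in the two entries above can be sketched as a timing comparison. This is not the code in MR !139: the drop-detection-by-threshold step, the helper names, and the use of an absolute time difference (the entry does not say which channel must drop first) are all assumptions for illustration.

```python
from typing import Optional, Sequence

IMC_WINDOW_S = 0.050  # 50 ms, as stated in the entry


def first_drop_time(times: Sequence[float], values: Sequence[float],
                    threshold: float) -> Optional[float]:
    """Hypothetical helper: first time `values` falls below `threshold`, or None."""
    for t, v in zip(times, values):
        if v < threshold:
            return t
    return None


def is_imc_lockloss(imc_drop: Optional[float], as_a_drop: Optional[float],
                    window: float = IMC_WINDOW_S) -> bool:
    """Tag "IMC" if the IMC lost lock within `window` s of AS_A seeing the lockloss."""
    if imc_drop is None or as_a_drop is None:
        return False
    return abs(imc_drop - as_a_drop) <= window


# Toy traces sampled every 10 ms: AS_A drops at 0.50 s, the IMC at 0.53 s.
times = [i * 0.01 for i in range(100)]
as_a = [1.0 if i < 50 else 0.0 for i in range(100)]
imc = [1.0 if i < 53 else 0.0 for i in range(100)]
print(is_imc_lockloss(first_drop_time(times, imc, 0.5),
                      first_drop_time(times, as_a, 0.5)))  # True
```

Under this sketch, a lockloss where the IMC and ASC_AS lose lock 150 ms apart, like the 20:37 UTC one reported further down, would not receive the tag.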

Oli found that we've been seeing PSL/FSS glitches since before June (alog 80520; they didn't check before that). The first lockloss tagged FSS_OSCILLATION (where FSS_FAST_MON > 3 in the 5 seconds before the lockloss) was on Tuesday, September 17th (alog 80366).
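The FSS_OSCILLATION criterion quoted above (FSS_FAST_MON > 3 in the 5 seconds before lockloss) amounts to a windowed threshold check. A minimal sketch, assuming the channel is available as paired time/value samples; the function name is hypothetical, and it uses the signed `> 3` comparison exactly as written here (whether the real tag uses the absolute value is not stated).

```python
from typing import Sequence


def fss_oscillation(times: Sequence[float], fss_fast_mon: Sequence[float],
                    lockloss_time: float, threshold: float = 3.0,
                    lookback: float = 5.0) -> bool:
    """True if FSS_FAST_MON exceeds `threshold` at any sample in the
    `lookback` seconds before `lockloss_time`."""
    return any(
        value > threshold
        for t, value in zip(times, fss_fast_mon)
        if lockloss_time - lookback <= t < lockloss_time
    )


# Toy trace sampled every 0.1 s with a single spike at 12 s; lockloss at 15 s.
times = [i * 0.1 for i in range(200)]
trace = [4.0 if i == 120 else 0.5 for i in range(200)]
print(fss_oscillation(times, trace, lockloss_time=15.0))  # True
```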

Keita and Sheila discussed that on September 12th the IMC gain distribution was changed by 7 dB (same overall output, but with gain moved from the input of the board to the output): alog 79998. We think this shouldn't affect the PSL, but it could possibly have exacerbated the PSL glitching issues. We could try reverting this change if the PSL power chassis swap doesn't help.

On Wednesday, October 9th, the IMC gain redistribution was reverted (alog 80566). This seems to have reduced the locklosses from a glitching PSL/FSS but hasn't eliminated them completely.
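In linear terms, 7 dB is a factor of about 2.24 in amplitude. A short sketch of the bookkeeping, assuming (my reading, not a record of the actual settings) that 7 dB was taken off the input stage and added to the output stage, so the end-to-end gain is unchanged.

```python
import math


def db_to_amplitude_ratio(db: float) -> float:
    """Convert a gain change in dB to a linear amplitude factor."""
    return 10 ** (db / 20)


SHIFT_DB = 7.0
input_factor = db_to_amplitude_ratio(-SHIFT_DB)   # input stage: 7 dB less gain
output_factor = db_to_amplitude_ratio(+SHIFT_DB)  # output stage: 7 dB more gain

print(f"{output_factor:.3f}")                             # 2.239
print(math.isclose(input_factor * output_factor, 1.0))    # True
```

Because the product is 1, any effect of the redistribution would have to come from the different signal levels at the stages in between, not from the overall gain.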

20:37 UTC: lost lock at LOWNOISE_LENGTH_CONTROL; the IMC and ASC_AS lost lock 150 ms apart. 1 Hz oscillation in MICH.

Towards the end of TRANSITION_FROM_ETMX, a 1 Hz oscillation started building up, mainly in DHARD_P, and we lost lock right as we reached LOWNOISE_LENGTH_CONTROL.

H1 finally back to observing after 9.5 hours of downtime fighting several earthquakes and high microseism.