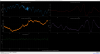

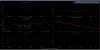

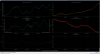

The IFO has been trying to relock for a while now, but we kept having locklosses: from DHARD_WFS (x3), BS_STAGE2, and CARM_OFFSET_REDUCTION. Most of the locklosses looked sudden (based on a quick look at the buildups in ndscope), but on the last attempt we started getting SRM saturations and the PR gain was moving all over the place. I remembered that Jenne and RyanS had said SRC1 wasn't being cooperative yesterday (88298), so I turned off the SRC1 and SRC2 loops, and that stopped the oscillation. Unfortunately, we lost lock soon after in DHARD_WFS. I was (am) about to go home, so I've put the detector in IDLE for the night.