Sheila, Jennie W

We lost some range with the hot OM2, so we checked whether moving SRM to raise the coupled cavity pole would regain it, as we did before in this entry.

Took 5 minutes of no-squeeze time.

Opened POP beam diverter to monitor POPAIR

Following the procedure in this entry we opened the SRC1 ASC loops by turning off input and offsets simultaneously after changing ramp to 0.1s.

Then set the ramp to 10 s, turned the gain to 0, cleared history, and set the gain back to 4.
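The loop-opening sequence above can be sketched as a short script. This is only an illustration: a plain dict stands in for the real channel access, and the field names (`TRAMP`, `INPUT_ON`, `OFFSET_ON`, `GAIN`, `HISTORY_CLEARED`) are hypothetical placeholders, not the actual channel names.

```python
# Sketch only: a dict stands in for one loop's filter bank.
# All field names here are hypothetical, chosen for illustration.

def open_loop(bank):
    """Apply the steps from this entry to one filter bank; return the step log."""
    steps = []

    bank["TRAMP"] = 0.1           # short ramp before switching
    steps.append("ramp 0.1 s")

    bank["INPUT_ON"] = False      # input and offset are switched off together
    bank["OFFSET_ON"] = False
    steps.append("input and offset off")

    bank["TRAMP"] = 10.0          # long ramp for the gain change
    steps.append("ramp 10 s")

    nominal_gain = bank["GAIN"]   # remember the nominal gain (4 in this entry)
    bank["GAIN"] = 0.0
    steps.append("gain 0")

    # Assumption: a real script would wait for the ramp to finish here.
    bank["HISTORY_CLEARED"] = True
    steps.append("clear history")

    bank["GAIN"] = nominal_gain
    steps.append("gain restored")
    return steps


if __name__ == "__main__":
    src1_p = {"TRAMP": 1.0, "INPUT_ON": True, "OFFSET_ON": True,
              "GAIN": 4.0, "HISTORY_CLEARED": False}
    for step in open_loop(src1_p):
        print(step)
```

The same function would be called once for pitch and once for yaw.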

Then we started to move SRM alignment sliders.

The starting alignment sliders are here.

Started with yaw steps down on SRM, which was the wrong way as the f_cc average went down, so changed to up in yaw. Then noticed POPAIR_RF18 was going down, so switched to pitch steps down. Found a point at which f_cc no longer changed, then went back to the maximum found for f_cc. The trends can be seen here, where the first vertical cursor is when we opened the beam diverter and the second is when we thought we had the highest coupled cavity pole (middle bottom trace). After finishing, we set the SRM sliders to their values at that time.
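The stepping we did by hand amounts to a simple one-axis search: try a step, reverse if f_cc drops, keep going until it stops improving, then return to the best point. A minimal sketch of that logic follows, assuming a hypothetical `measure` callable that returns the averaged f_cc for a given slider value. It does not model the POPAIR_RF18 check that made us switch from yaw to pitch.

```python
def climb(measure, start, step, max_steps=50):
    """Step one slider toward higher measure(); return (best_setting, best_value)."""
    best_x, best_y = start, measure(start)

    # Try one step down first; if the reading drops, go up instead.
    direction = -1.0
    if measure(start + direction * step) < best_y:
        direction = 1.0

    x = start
    for _ in range(max_steps):
        x = x + direction * step
        y = measure(x)
        if y <= best_y:
            break                 # no further improvement: keep the best point seen
        best_x, best_y = x, y
    return best_x, best_y


if __name__ == "__main__":
    # Toy stand-in for the f_cc reading, peaked at a slider value of 3.0.
    toy_fcc = lambda x: -(x - 3.0) ** 2
    print(climb(toy_fcc, start=0.0, step=0.5))
```

In practice each `measure` call would need enough averaging time for f_cc to settle, which is why the manual version took several minutes.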

I then set the SRC1 ASC offsets to -0.0417 for ASC-SRC1_P_OFFSET and 0.0977 for ASC-SRC1_Y_OFFSET, but left them off while we took another 5 minutes of no-squeeze time.

17:05:30 UTC closed loops and switched on new offsets.

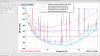

Sheila used the second period of no-squeeze time to plot how the range compares to June 24th, when we had a cold OM2. This plot shows the DARM comparison on top (yellow is cold OM2, blue is hot OM2) and the range difference on the bottom. This plot shows the cumulative range comparison on top (yellow is cold OM2, blue is hot OM2) with the same range difference on the bottom. From the bottom plot it looks like with hot OM2 we gain range between 25 and 40 Hz, gain nothing between 40 and 90 Hz, and lose range above 90 Hz.
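For reference, one plausible way to build a cumulative range comparison like this is sketched below. It is not necessarily how these plots were made. It uses the standard inspiral-range scaling, range proportional to the square root of the integral of f^(-7/3)/ASD^2, and leaves out the overall normalization, so the output is in arbitrary units. The flat spectra at the bottom are placeholders, not measured data.

```python
import numpy as np


def cumulative_range(freq, asd):
    """Unnormalized cumulative inspiral range up to each frequency (arbitrary units)."""
    integrand = freq ** (-7.0 / 3.0) / asd ** 2
    # Trapezoidal running integral, zero at the lowest frequency.
    segments = 0.5 * (integrand[1:] + integrand[:-1]) * np.diff(freq)
    return np.sqrt(np.concatenate(([0.0], np.cumsum(segments))))


def range_difference(freq, asd_a, asd_b):
    """Cumulative range of spectrum A minus spectrum B at each frequency."""
    return cumulative_range(freq, asd_a) - cumulative_range(freq, asd_b)


if __name__ == "__main__":
    freq = np.linspace(10.0, 1000.0, 2000)
    asd_hot = np.ones_like(freq)          # placeholder spectrum, not real data
    asd_cold = 1.1 * np.ones_like(freq)   # placeholder spectrum, not real data
    diff = range_difference(freq, asd_hot, asd_cold)
    print(diff[-1])
```

Where the difference curve rises with frequency, spectrum A is gaining range in that band; where it falls, A is losing range there.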

In summary, we didn't completely recover the range by raising the coupled cavity pole. Hot OM2 is good for low-frequency range but not for high-frequency range. We still need to do some PSAMS tuning today, which might regain some range at higher frequencies.