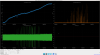

Since we've got OM2 warm now, I've updated the jitter cleaning coefficients. This seems to have added one or two Mpc to the range calculated by the new SENSMON2 [Notes on new SENSMON2 below].

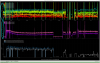

The first plot shows the SENSMON2 range, as well as an indicator of when the cleaning was changed (bottom panel; the upward spike marks the changeover).

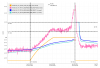

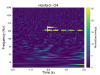

The second plot shows the effect as spectra. The pink circle is at roughly the same frequencies in all 3 panels. The reference traces are data taken before the jitter cleaning was updated (so, with the coefficients we've been using for many months, trained on cold OM2 data), and the live traces use the jitter coefficients newly trained today.

- Top left is a comparison of old vs. new _CLEAN, showing that the blue trace is a little lower than red around one of our main jitter peaks.

- Top right is a comparison of pre-cleaning (blue _NOLINES) vs. cleaned (red _CLEAN), for the updated coefficients. Here you can see that the cleaning is improving that jitter peak.

- Bottom right is a comparison of pre-cleaning (blue _NOLINES) vs. cleaned (red _CLEAN), for the old coefficients. Here you can see that the cleaning isn't really doing much.

- Bottom left is a comparison of the estimated noise due to jitter. With OM2 hot, we seem to have less jitter noise.

I've saved the previous OBSERVE.snap file in /ligo/gitcommon/NoiseCleaning_O4/Frontend_NonSENS/lho-online-cleaning/Jitter/CoeffFilesToWriteToEPICS/h1oaf_OBSERVE_valuesInPlaceAsOf_24June2024_haveBeenLongTime_TraintedOM2cold.snap , so that is the file we should revert Jitter Coeffs to if we turn off the OM2 heater.

Notes on SENSMON2, which was installed last Tuesday:

- SENSMON2 has different overlap parameters than the 'normal' SENSMON that we're used to, so the absolute value is lower. From JohnZ: Classic Sensemon calculated the noise psd with non-overlapping Hann windows. Sensemon2 gives many options for the psd calculation, but because the non-overlapping windows would miss loud glitches when they landed near a 4s boundary, I chose to use the 50% overlap. This affects the leakage of low frequency noise but I haven't quantified this effect.

- SENSMON2 is calculating range based on the GDS-CALIB_STRAIN_CLEAN channel. It does not have an equivalent calculation for other versions (e.g. pre-cleaning) of the CALIB_STRAIN channels.

- The nice thing about SENSMON2 is that it is calculated more of the time. Previous SENSMON would only calculate range from GDS channels when the IFO guardian node was OK (i.e. we were in NomLowNoise and had no SDF diffs), which made it challenging to use during commissioning. Now SENSMON2 should be calculated any time the ISC_LOCK guardian state is above some value (chosen, I think, to be roughly when we've got the full IFO locked at 2 W).
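
To illustrate JohnZ's point about glitches near a 4s boundary, here is a minimal sketch (not the SENSMON2 code; the function names and the 8 s glitch time are made up for illustration). It computes the largest Hann window weight that any PSD segment gives to an event at a given time, for non-overlapping segments and for 50% overlap. The 4 s segment length is taken from the note above; everything else is an assumption.

```python
import math

def hann_weight(t, seg_start, seg_len):
    """Hann window value at time t for the segment [seg_start, seg_start + seg_len)."""
    x = (t - seg_start) / seg_len
    if x < 0.0 or x >= 1.0:
        return 0.0  # t is outside this segment
    return 0.5 * (1.0 - math.cos(2.0 * math.pi * x))

def best_weight(t, seg_len=4.0, overlap=0.0, n_segments=20):
    """Largest Hann weight that any segment assigns to an event at time t."""
    step = seg_len * (1.0 - overlap)  # spacing between segment starts
    return max(hann_weight(t, k * step, seg_len) for k in range(n_segments))

# Hypothetical glitch landing exactly on a 4 s segment boundary.
glitch_time = 8.0
w_no_overlap = best_weight(glitch_time, overlap=0.0)
w_half_overlap = best_weight(glitch_time, overlap=0.5)

print(f"non-overlapping: {w_no_overlap:.3f}, 50% overlap: {w_half_overlap:.3f}")
```

With non-overlapping segments the glitch sits where the Hann window goes to zero, so it barely contributes to the PSD estimate. With 50% overlap, another segment is centered on that boundary and sees the glitch at full weight.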