Jenne, Elenna, Jennie W., Camilla, Ryan S., Austin, Matt, Gabriele, Georgia.

Going to OFFLOAD DRMI ASC and try to offload things by hand, as the build-ups are decaying.

Got to OFFLOAD DRMI ASC.

Elenna changing PRM alignment to improve the build-ups, watching LSC-POPAIR_B_RF90_I_NORM and LSC-POPAIR_B_RF18_I_NORM.

Adjusting PR2 also helped.

SRM and SR2 were adjusted too; all of these needed changes in pitch.

DHARD hit some limits at CARM offset reduction and we lost lock (first image).

DHARD YAW and DHARD PITCH rang up.

Another lockloss (image 2), reported from state 309, but the lockloss tool is reporting the state number incorrectly.

We think it's losing lock around DHARD WFS.

Changed SRM yaw, which improves things.

Noticing some glitches on the DHARD PITCH output; not sure what is causing them (third image).

Changing CHARD alignment to improve ASC.

Lost lock, possibly from state 305 (DHARD WFS).

Changing PRM pitch helps with build-ups (OFFLOAD DRMI ASC state).

Then stepping the guardian manually up through the states, making tweaks along the way.

Tried to go from CARM offset reduction to CARM 5 picometers; the LSC channels rang up at 60 Hz and we lost lock.

One of the LSC loops might have too much gain.

Elenna measuring TFs to check the loops, but the DARM measurement is not giving good coherence, and increasing the gain.
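For context on why poor coherence matters: a transfer-function measurement of this kind divides a cross-spectrum by an auto-spectrum, and coherence tells you how much of the response is actually driven by the excitation. The sketch below is a generic, numpy-only Welch-style H1 estimator, assuming a white-noise excitation `x` and a readback `y`; it is not the control-room measurement tool, and the toy "plant" (a flat gain of 2 plus readout noise) is purely illustrative.

```python
import numpy as np


def tf_and_coherence(x, y, nperseg, fs):
    """Welch-style H1 estimate of the transfer function y/x and its coherence.

    x is the injected excitation, y the response at the readback point.
    Segments are Hann-windowed with 50% overlap; the window normalisation
    cancels in both ratios, so it is omitted.
    """
    window = np.hanning(nperseg)
    step = nperseg // 2
    n_seg = (len(x) - nperseg) // step + 1
    n_bins = nperseg // 2 + 1
    sxx = np.zeros(n_bins)
    syy = np.zeros(n_bins)
    sxy = np.zeros(n_bins, dtype=complex)
    for k in range(n_seg):
        seg = slice(k * step, k * step + nperseg)
        X = np.fft.rfft(window * x[seg])
        Y = np.fft.rfft(window * y[seg])
        sxx += np.abs(X) ** 2       # averaged input auto-spectrum
        syy += np.abs(Y) ** 2       # averaged output auto-spectrum
        sxy += np.conj(X) * Y       # averaged cross-spectrum
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    tf = sxy / sxx                          # H1 estimator
    coh = np.abs(sxy) ** 2 / (sxx * syy)    # magnitude-squared coherence
    return freqs, tf, coh


rng = np.random.default_rng(0)
fs = 1024.0
x = rng.standard_normal(2 ** 16)  # white-noise excitation
# Toy "plant": flat gain of 2, plus independent readout noise at two levels.
y_clean = 2.0 * x + 0.1 * rng.standard_normal(x.size)
y_noisy = 2.0 * x + 10.0 * rng.standard_normal(x.size)

f, tf_clean, coh_clean = tf_and_coherence(x, y_clean, nperseg=1024, fs=fs)
_, tf_noisy, coh_noisy = tf_and_coherence(x, y_noisy, nperseg=1024, fs=fs)
print(f"high SNR: |TF| ~ {np.abs(tf_clean).mean():.2f}, coherence ~ {coh_clean.mean():.3f}")
print(f"low SNR:  coherence ~ {coh_noisy.mean():.3f}")
```

With the excitation well above the readout noise the estimate recovers the gain of 2 with coherence near 1; with the noise 5 times larger in amplitude than the driven response, the coherence collapses and the per-bin TF estimate becomes unreliable. Raising the excitation amplitude is the usual way to recover coherence, at the cost of disturbing the loop more.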

The power normalisation of some loops uses IM4_TRANS_QPD, which is very mis-centred, so it may be adding noise (image 4).

Lockloss due to excitation?

Reached OFFLOAD DRMI ASC again and Elenna moving IM3.

Moved IM4; there is now less clipping on IM4_TRANS.

We lost lock.

DARM is normalised by X_ARM_TRANS, and BRS X has been taken out of the loop, so Jenne changed the normalisation to use the Y arm in the ISC_LOCK guardian.

Jenne aligning BS and we are in FIND IR.

Lost lock again.

Elenna went to the MANUAL Initial Alignment state.

Had to undo the changes Jenne made to IM3 and IM4.

Gabriele is going to measure DARM with a white-noise measurement.

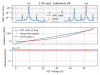

This shows the UGF is 15 Hz instead of the 50 Hz we think it should be.

Increased DARM gain by 50%.
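A rough sense of how far a 50% gain increase moves the UGF: if the open-loop gain falls as a power law near crossover, |G(f)| ∝ f^(-n), then multiplying the gain by k moves the UGF by a factor k^(1/n). The slope n is an assumption here; the actual DARM loop shape is not recorded in this log. The sketch below just encodes that scaling.

```python
def gain_factor_for_ugf(ugf_now_hz, ugf_target_hz, slope=1.0):
    """Gain multiplier that moves the UGF from ugf_now_hz to ugf_target_hz.

    Assumes |G(f)| is proportional to f**(-slope) around the crossover,
    so scaling the gain by k moves the UGF by k**(1/slope).
    """
    return (ugf_target_hz / ugf_now_hz) ** slope


def ugf_after_gain(ugf_now_hz, gain_factor, slope=1.0):
    """UGF after multiplying the loop gain by gain_factor (same power-law assumption)."""
    return ugf_now_hz * gain_factor ** (1.0 / slope)


print(gain_factor_for_ugf(15.0, 50.0))           # factor needed, 1/f loop
print(ugf_after_gain(15.0, 1.5))                 # UGF after +50%, 1/f loop
print(gain_factor_for_ugf(15.0, 50.0, slope=2))  # factor needed, 1/f^2 loop
```

Under a 1/f assumption, going from 15 Hz to 50 Hz would need roughly 3.3 times the gain, and a 50% increase alone would only bring the UGF to about 22.5 Hz; a steeper 1/f^2 slope would need about 11 times the gain.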

Lockloss.

The problem comes from the DHARD gain increase during the DHARD WFS state (305). Georgia is commenting this out on lines 2337 and 2338 of ISC_LOCK.

We keep losing lock from the LOCKING GREEN ARMS state.

When we lose lock, the power normalisation for DARM should reset to 0, but it did not, and so it was causing noise on the ALS DIFF input. This is probably because the guardian's DOWN state is not set up to do it.

Elenna updated the prep-for-locking state to set this normalisation to 0 even when the Y arm is used for the power normalisation instead of X.
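One plausible reading of the failure above is a reset that only knew about the X-arm normalisation path, so switching DARM to the Y arm left a non-zero setting behind after lockloss. The Python sketch below illustrates that pattern with a plain dict standing in for channel writes; the channel names and helper functions are hypothetical and are not the real ISC_LOCK code or EPICS channels.

```python
# Hypothetical channel names, standing in for the real DARM power-normalisation
# settings in ISC_LOCK; they are NOT the actual EPICS channel names.
NORM_CHANNELS = {"X": "DARM_NORM_FROM_TRX", "Y": "DARM_NORM_FROM_TRY"}


def select_darm_normalisation(channels, arm):
    """Route DARM power normalisation to one arm's transmission (hypothetical)."""
    for name_arm, name in NORM_CHANNELS.items():
        channels[name] = 1.0 if name_arm == arm else 0.0


def reset_x_only(channels):
    """Failure mode: a reset that only clears the X-arm path."""
    channels[NORM_CHANNELS["X"]] = 0.0


def reset_darm_normalisation(channels):
    """Zero every normalisation path, whichever arm was in use."""
    for name in NORM_CHANNELS.values():
        channels[name] = 0.0


channels = {}  # dict standing in for channel writes
select_darm_normalisation(channels, "Y")
reset_x_only(channels)
print(channels)  # Y-arm path is still non-zero
reset_darm_normalisation(channels)
print(channels)  # all paths zeroed
```

Looping over every possible source in the reset, rather than clearing only the nominal one, keeps the reset correct whichever arm is selected.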

We fell out at CARM to TR.

No changes in ALIGN_IFO, ALS_ARM (ALS_XARM/YARM), ALS_COMM, ALS_DIFF

ISC_DRMI changes since 2023-12-21

ALS_DIFF changes since 2023-11-07

IMC_LOCK changes since 2023-11-21

Some notes on sqz guardian changes that were made, then reverted: 76154

The change to use TRY as the DARM normalization was reverted back to the formerly-nominal TRX.

When we were using TRY, we had also made some changes in PREP_DC_READOUT_TRANSITION, but those have now been reverted.