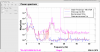

We tracked down the source of the 0.6 Hz oscillation that we see at move spots and at full power. It appears to come from the new pitch and length estimators on PR3 and SR3 that were installed on Tuesday. We tested this by sitting at move spots (after the spots converged) and turning the estimators on and off for a few minutes at a time. When the estimators are turned ON, the 0.6 Hz ringing is not immediately apparent; it grows slowly. However, once the 0.6 Hz oscillation is visible in the controls, turning the estimators OFF immediately stops the ringing. We therefore think the estimators are the culprit for this instability. Oli will turn off the length and pitch estimators for PR3 and SR3 for now. The yaw estimators are still on because they have been operating stably for a long time and were not changed this Tuesday.
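
For anyone who wants to put a number on the ON/OFF comparison rather than judging it by eye on a scope, here is a minimal sketch of one way to do it. This is not what we ran during the test. The function name `tone_amplitude`, the 16 Hz sample rate, and both data arrays are made up for illustration; the arrays are synthetic stand-ins, not detector data.

```python
import math

def tone_amplitude(samples, sample_rate_hz, freq_hz):
    """Amplitude of the freq_hz component, from a single-bin DFT."""
    n = len(samples)
    w = 2.0 * math.pi * freq_hz / sample_rate_hz
    re = sum(x * math.cos(w * k) for k, x in enumerate(samples))
    im = sum(x * math.sin(w * k) for k, x in enumerate(samples))
    return 2.0 * math.hypot(re, im) / n

# Synthetic stand-ins for two 60 s stretches of a control signal (assumed, not real data).
FS = 16.0          # assumed sample rate in Hz
N = int(60 * FS)   # 60 s holds exactly 36 cycles of 0.6 Hz
est_on = [0.5 * math.sin(2.0 * math.pi * 0.6 * k / FS) for k in range(N)]  # ringing present
est_off = [0.0] * N                                                        # ringing gone

amp_on = tone_amplitude(est_on, FS, 0.6)
amp_off = tone_amplitude(est_off, FS, 0.6)
print(f"0.6 Hz amplitude, estimators ON:  {amp_on:.3f}")
print(f"0.6 Hz amplitude, estimators OFF: {amp_off:.3f}")
```

The estimate is only clean when the stretch contains a whole number of cycles of the line being measured. On real data the stretch would need a window or a longer average, and because the ringing grows slowly, the ON stretch should be taken several minutes after the switch.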

We have also put the factor of 2 increase of MICH2 gain into the guardian, since we kept forgetting to add it in. Several locklosses that we thought were due to various other things were actually due to the MICH loop ringing up.

Finally, we just had a lockloss after moving to camera servos because the 1 Hz oscillation began to grow. Here is everything I tried to stop the ringing (none of it worked):

- CSOFT P gain was already at 30. I dropped it to 25 and raised it back to 30 several times; no change either way

- INP1 P gain was at -1. I doubled it and then tripled it; no change

- INP1 Y gain was at -0.1. I doubled it; no change

- DSOFT P gain was at 5. I increased it to 10, then 15; no change

- DSOFT Y gain was at 5. I increased it to 10; no change

I then had an idea to go to high bandwidth ASC using the seismic script, but we lost lock as soon as I thought of that.

Next plan: go to lownoise length control, skip lownoise ASC, go to the final spots and camera servos, then stay in high bandwidth (HBW) ASC, wait for the 1 Hz ring-up, and see if we can ride it out this way.

The attached scope screenshot shows the 0.6 Hz oscillation disappearing as soon as Oli flipped the length and pitch estimator switches OFF.