[Tony, Jenne, Sheila]

It's been a struggle to lock this morning. We've made it up to some moderately high locking states, but then the PRG looks totally fuzzy and messy, and we lose lock before increasing power from 25W. Since we've had some temperature excursions in the VEAs (due to the more than 25 F outside temp change in the last day!!), I ran the rubbing check script (instructions and copy of the script in alog 64760). Everything looked fine, except MC1 (and maybe MC2). RyanC notes that MC1 also looked a little like it had increased noise back in early December, in alog 74522.

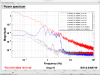

In the spectra in the first attachment, the thick dashed lines are how the MC1 RT and LF OSEMs looked when things weren't going well this morning. They looked like that both when the IFO was locking and we were at 25W, and when the IMC was set to OFFLINE and was not locked. The other 4 OSEMs (SD, T1, T2, T3) all looked fine, so I did not save references. The vertical didn't look like it had a significant DC shift, but I put a vertical offset into the TEST bank anyway (10,000 counts), and saw that those 2 OSEMs looked more normal, and started looking like the other 4. I then took away the vertical offset, expecting the RT and LF OSEMs to look bad again, but they actually stayed looking fine (the 6 live traces in that first attachment).

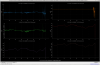

The ndscopes in the second attachment show the rough DC levels of the 6 MC1 OSEMs over the last week, to show that the large motion in the RT OSEM over the last 12 hours is a significant anomaly. I'm not attaching it, but if you instead plot the L, P, Y degrees of freedom version of this, you see that the MC1 yaw has been very strange over the last 12 hours, in the exact same way that the RT OSEM has been strange. It seems that when I put in the 10,000 count offset, that got RT unstuck, and MC1's OSEM spectra have looked clean since then.
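As a rough illustration of the "RT is the odd one out over the last 12 hours" comparison above, here is a minimal sketch that flags any OSEM whose recent scatter is much larger than its own earlier baseline. This is not the rubbing check script from alog 64760. The function name, the threshold of 5, and all of the numbers are made up for illustration; real trend data would have to be fetched separately.

```python
from statistics import pstdev

def flag_restless_osems(trends, recent_n, ratio_threshold=5.0):
    """Return {osem: ratio} for OSEMs whose recent scatter exceeds baseline.

    trends: dict mapping OSEM name to a list of DC-level samples (oldest first).
    recent_n: number of trailing samples treated as the "recent" window.
    ratio_threshold: hypothetical cutoff on recent_std / baseline_std.
    """
    flagged = {}
    for name, samples in trends.items():
        baseline, recent = samples[:-recent_n], samples[-recent_n:]
        base_sd = pstdev(baseline)
        recent_sd = pstdev(recent)
        if base_sd == 0:
            # Avoid dividing by zero for a perfectly flat baseline.
            ratio = float("inf") if recent_sd > 0 else 0.0
        else:
            ratio = recent_sd / base_sd
        if ratio > ratio_threshold:
            flagged[name] = ratio
    return flagged

# Synthetic example data (NOT real MC1 readings): quiet OSEMs wiggle by +/-1
# count around 100, while RT wanders by tens of counts in the last 4 samples.
quiet = [100 + (1 if i % 2 else -1) for i in range(24)]
trends = {name: list(quiet) for name in ("T1", "T2", "T3", "LF", "RT", "SD")}
trends["RT"][-4:] = [100, 130, 90, 140]

flagged = flag_restless_osems(trends, recent_n=4)
print(sorted(flagged))
```

Comparing each OSEM against its own history avoids needing a common calibration across sensors, but the window length and threshold would need tuning against real trends.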

We're on our way to being locked, and we're now farther in the sequence than we've been in the last 4.5 hours, so I'm hopeful that this helped....

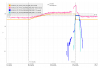

Glitch at 21:33:08.36 UTC is when ESD_EXC_ETMX changes H1:SUS-ETMX_L3_DRIVEALIGN_L2L from DECIMATION, OUTPUT to INPUT, DECIMATION, FM4, FM5, OUTPUT. This confused me, as the gain was nominally zero at the time. However, 2 seconds earlier the gain was changed from 1 to zero at the same time the tramp was increased to 20s, meaning the gain was presumably still ramping and wasn't really zero. I've added a 2 second sleep between the gain change to zero and the tramp increase to avoid this in future, and reloaded.
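The ordering fix described above can be sketched as follows. This is not the actual ESD_EXC_ETMX guardian code: the `_GAIN` and `_TRAMP` channel suffixes, the function name, and the dict-like `channels` object are assumptions used for illustration, and the sketch assumes the previous ramp time is shorter than the 2 second wait.

```python
import time

# Filter bank named in the entry; the _GAIN/_TRAMP suffixes are assumed.
BANK = "SUS-ETMX_L3_DRIVEALIGN_L2L"

def zero_gain_then_lengthen_tramp(channels, sleep=time.sleep,
                                  settle_s=2.0, new_tramp_s=20.0):
    """Zero the gain, wait for the old ramp to finish, then raise TRAMP.

    Writing TRAMP at the same time as the gain risks the gain change being
    ramped over the new, longer time, so the two writes are separated.
    """
    channels[BANK + "_GAIN"] = 0.0
    sleep(settle_s)  # let the gain ramp complete with the old ramp time
    channels[BANK + "_TRAMP"] = new_tramp_s

class RecordingChannels(dict):
    """Hypothetical stand-in for a channel-access object; logs each write."""
    def __init__(self):
        self.log = []
        super().__init__()

    def __setitem__(self, key, value):
        self.log.append((key, value))
        super().__setitem__(key, value)

# Dry run with a fake sleep so the example finishes instantly.
chans = RecordingChannels()
zero_gain_then_lengthen_tramp(
    chans, sleep=lambda s: chans.log.append(("sleep", s))
)
for step in chans.log:
    print(step)
```

Passing `sleep` in as a parameter keeps the ordering testable without actually waiting.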

Analyzed 75362