TITLE: 03/23 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PST), all times posted in UTC

STATE of H1: Earthquake

OUTGOING OPERATOR: Ryan S

CURRENT ENVIRONMENT:

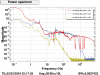

SEI_ENV state: EARTHQUAKE

Wind: 7mph Gusts, 4mph 5min avg

Primary useism: 0.56 μm/s

Secondary useism: 0.21 μm/s

QUICK SUMMARY:

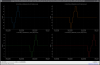

H1 is down due to a series of earthquakes of up to magnitude 6.9.

We have been holding in DOWN for a while. Earthquake mode may be deactivated shortly, at which point I will begin relocking.