alog for Tony, Robert, Jim, Gerardo, Mitchell, Daniel

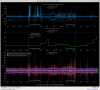

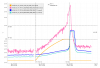

At 22:16 UTC the HAM1 HEPI started "ringing". Robert heard it while he was in the LVEA as a 1000 Hz tone, which he tracked to HAM1. Plot attached.

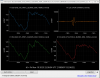

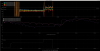

Gerardo, Mitchell and Robert investigated the HEPI pumps on the Mechanical Room mezzanine and didn't find anything wrong. Robert physically damped the vibrating part of HEPI with some foam around 22:40 UTC and the "ringing" stopped, with readbacks going back to nominal background levels. The ringing can be seen clearly in the H1:PEM-CS_ACC_HAM3_PR2_Y_MON plot as well as in the H1:HPI-HAM1_OUTF_H1_OUTPUT channels, plots attached. It must be down-converting for us to see it in the HEPI 16 Hz channels. The HAM1 vertical IPSINF channels also looked strange, plot attached.
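
As a rough illustration of how a tone near 1 kHz could show up in a 16 Hz channel, here is a minimal sketch of ideal frequency folding (aliasing). It assumes plain sampling with no anti-alias filter, which is not how the real channels are produced, and it does not establish that folding is the actual down-conversion mechanism here. The function name `folded_frequency` is made up for this example.

```python
def folded_frequency(f_true_hz, sample_rate_hz):
    """Apparent frequency of a pure tone after ideal sampling.

    Assumption: no anti-alias filtering and no nonlinear mixing,
    just textbook folding about the Nyquist frequency.
    """
    nyquist_hz = sample_rate_hz / 2.0
    # Wrap the tone into one sampling band [0, fs)
    wrapped_hz = f_true_hz % sample_rate_hz
    # Anything in the upper half of the band mirrors back below Nyquist
    if wrapped_hz > nyquist_hz:
        return sample_rate_hz - wrapped_hz
    return wrapped_hz


if __name__ == "__main__":
    # 1000 Hz is the tone heard in the LVEA; 16 Hz is the slow channel rate
    print(folded_frequency(1000.0, 16.0))
```

Under these assumptions every tone above 8 Hz lands somewhere between 0 and 8 Hz in a 16 Hz channel, so excess power there is consistent with a high-frequency source but does not say what its true frequency is.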

Jim checked that the HEPI readbacks are now okay.

We don't know why it started. Since it's okay now, the current plan is to leave it as is and do more thorough checks on Tuesday.

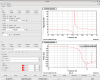

Snapshot of peak mon right after a lockloss during this time.

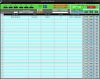

Lockloss page from the lockloss that happened during this event.

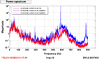

Robert reports it at 1 kHz, but it seems there are a number of features at 583, 874 and 882 Hz. I can't tell if there are any higher, because HEPI is only a 2k model. The attached plot shows the H1 L4C ASDs: red is from a couple of weeks ago, blue is when HAM1 was singing, pink is after Robert damped the hydraulic line. It seems the HAM1 motion is back to what it was a couple of weeks ago. I'm not sure what this was. I'll look at the chamber when I get a chance on Monday or Tuesday, unless it becomes an emergency before then...
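
To make the "can't tell if there are any higher" point concrete, here is a small sketch listing which higher frequencies would be indistinguishable from an observed peak in sampled data. It assumes "2k" means a 2048 Hz sample rate (Nyquist 1024 Hz) and ignores the anti-alias filtering a real front-end model applies, so these are only candidates and not identified lines. `alias_candidates` is a hypothetical helper, not an existing tool.

```python
SAMPLE_RATE_HZ = 2048  # assumption: "2k model" means 2048 Hz
NYQUIST_HZ = SAMPLE_RATE_HZ / 2


def alias_candidates(f_observed_hz, sample_rate_hz, f_max_hz):
    """True frequencies above Nyquist, up to f_max_hz, that would fold
    onto f_observed_hz under ideal sampling (no anti-alias filter)."""
    candidates = []
    k = 1
    while True:
        lower = k * sample_rate_hz - f_observed_hz  # mirrored image
        upper = k * sample_rate_hz + f_observed_hz  # shifted image
        if lower > f_max_hz:
            break
        candidates.append(lower)
        if upper <= f_max_hz:
            candidates.append(upper)
        k += 1
    return candidates


if __name__ == "__main__":
    # Features seen in the HAM1 L4C ASDs
    for peak_hz in (583, 874, 882):
        print(peak_hz, alias_candidates(peak_hz, SAMPLE_RATE_HZ, 4096))
```

A faster sensor, such as an accelerometer channel recorded at a higher rate, would be needed to tell a genuine sub-Nyquist line from one of these candidates.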

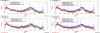

The second set of ASDs compares all the sensors during the "singing" to the time in October. Red and light green are the October data, blue and brown are the choir. The top row shows the H L4Cs, the bottom row the V. The ringing is generally loudest in the H sensors, though H2 is quieter than the other three H sensors.