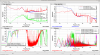

I compared DARM_IN1_DQ and CAL-DELTAL_EXTERNAL_DQ in the nominal vs. the new DARM offloading scheme (the new scheme itself is explained in alog 74887). Data for the NEW_DARM configuration were taken on Dec/21 (alog 74977), when Louis and Jenne successfully transitioned to the new scheme but the calibration did not make sense.

The main thing to look at is the red and blue traces in the bottom left panel, i.e. the coherence between DARM_IN1 and CAL-DELTAL_EXTERNAL in the NEW (red) vs. the old (blue) configuration. The blue trace is almost 1, as it should be, but the red one drops sharply between 20 Hz and 200 Hz.

This does not make any sense, because CAL-DELTAL_EXTERNAL is ultimately a linear combination of DARM_IN1 and DARM_OUT (see https://dcc.ligo.org/G1501518). Since DARM_OUT is linear in DARM_IN1, no matter where and how the noise is generated and no matter how the signal is redistributed in the ETM chain, CAL-DELTAL_EXTERNAL should always be linear in DARM_IN1, and therefore the coherence should be almost 1.
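A quick sanity check of this argument with synthetic data, as a minimal sketch: white noise stands in for DARM_IN1, an arbitrary 4th-order low-pass stands in for the DARM filter, and a hypothetical sum of two filtered paths stands in for the calibration model. None of these filters are the real DARM or CAL-CS filters. As long as everything is linear in DARM_IN1, the estimated coherence stays at ~1.

```python
import numpy as np
from scipy import signal

fs = 4096.0                                   # hypothetical sample rate [Hz]
rng = np.random.default_rng(0)
darm_in1 = rng.standard_normal(int(64 * fs))  # white-noise stand-in for DARM_IN1

# Stand-in for the DARM filter (NOT the real one): DARM_OUT = D * DARM_IN1
sos_d = signal.butter(4, 50.0, fs=fs, output="sos")
darm_out = signal.sosfilt(sos_d, darm_in1)

# Stand-in for the calibration model: filter each input, then add them up.
# A and B are arbitrary low-passes; with |A| ~ 1 and |0.5*B*D| <= 0.5 in band,
# the combined response A + 0.5*B*D never goes through zero there.
sos_a = signal.butter(2, 1000.0, fs=fs, output="sos")
sos_b = signal.butter(2, 300.0, fs=fs, output="sos")
deltal_ext = signal.sosfilt(sos_a, darm_in1) + 0.5 * signal.sosfilt(sos_b, darm_out)

# Magnitude-squared coherence via Welch averaging (Hann window, 1 Hz bins)
f, coh = signal.coherence(darm_in1, deltal_ext, fs=fs, nperseg=4096)
band = (f >= 20) & (f <= 200)
print(f"min coherence 20-200 Hz: {coh[band].min():.4f}")
```

Changing the filters or weights should not change the conclusion; the non-vanishing combined response is only there to keep the Welch estimate well behaved in this toy.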

So what's the issue here?

The only straightforward possibility I see is that excessive numerical noise is somehow generated in the calibration model even with the frontend's double precision math. Maybe something is aggressively low-passed and then high-passed (or vice versa), that kind of thing.
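A toy illustration of this kind of mechanism (not the actual calibration filters): if an intermediate stage makes the signal dominated by a huge low-frequency component and a later stage removes it again, what's left behind is rounding noise proportional to the huge component, not to the small signal of interest. To make it visible with modest numbers, the sketch stores the intermediate in float32 and idealises the "high-pass" as an exact subtraction of the big component. Double precision has 2**29 times more headroom, so in the frontend it would take a correspondingly more extreme filter chain.

```python
import numpy as np

rng = np.random.default_rng(1)
fs = 4096.0                                   # hypothetical sample rate [Hz]
t = np.arange(1 << 16) / fs

small = 1e-3 * rng.standard_normal(t.size)    # small broadband content we care about
big = 1e5 * np.sin(2 * np.pi * 0.5 * t)       # huge low-frequency content in an intermediate stage

# Intermediate result stored in a finite-precision format (float32 to make the effect visible).
intermediate = (big + small).astype(np.float32)

# Idealised "high-pass": remove the big component exactly, in float64.
recovered = intermediate.astype(np.float64) - big

err = recovered - small                       # what rounding left behind
print(f"rms(small) = {np.std(small):.1e}, rms(rounding noise) = {np.std(err):.1e}")
```

The rounding noise ends up comparable to or larger than the small signal, even though each individual float32 value is accurate to ~6E-8 relative.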

It is not an artefact of DTT's single precision math. Both CAL-DELTAL_EXTERNAL and DARM_IN1 are already well whitened, and they're entirely within the dynamic range of single precision. For example, the RMS of the red CAL-DELTAL_EXTERNAL_DQ trace is ~7E-5 cts. From that number I'd expect the noise floor due to single precision to be very roughly O(7E-5/10**7/sqrt(8kHz)) ~ O(1E-13) cts/sqrtHz if it's close to white, give or take some depending on details, but the actual noise floor is ~10E-8 cts/sqrtHz. The same can be said for DARM_IN1.
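The back-of-the-envelope estimate above, written out as a sketch. It assumes a relative precision of ~1E-7 for single precision (float32 machine epsilon is ~1.2E-7), rounding noise that is white up to Nyquist, and a 16384 Hz sample rate (Nyquist ~8 kHz); the helper name is made up. The cross-check casts synthetic white noise (not real data) with the same RMS to float32 and measures the resulting floor with Welch's method, which should land within a factor of a few of the estimate.

```python
import numpy as np
from scipy import signal

FS = 16384.0  # assumed DQ sample rate [Hz], i.e. Nyquist ~8 kHz


def single_precision_floor(rms_cts, fs=FS, rel_precision=1e-7):
    """Order-of-magnitude ASD [cts/sqrtHz] of single-precision rounding noise.

    Assumes the rounding error has RMS ~ rel_precision * rms_cts and is
    white from 0 to fs/2 (hypothetical helper, not an existing tool).
    """
    return rms_cts * rel_precision / np.sqrt(fs / 2)


rms = 7e-5  # RMS of the red CAL-DELTAL_EXTERNAL_DQ trace [cts]
print(f"estimated floor: {single_precision_floor(rms):.1e} cts/sqrtHz")

# Cross-check on synthetic white noise with the same RMS (not real data):
rng = np.random.default_rng(2)
x = rms * rng.standard_normal(1 << 20)
err = x.astype(np.float32).astype(np.float64) - x  # rounding error of the cast
f, psd = signal.welch(err, fs=FS, nperseg=16384)
measured = np.sqrt(np.median(psd))
print(f"measured floor:  {measured:.1e} cts/sqrtHz")
```

The same helper gives ~2E-5 cts/sqrtHz for a 20k count RMS, like the old-config DARM_OUT below.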

It's not numerical noise in the DARM filter either, as the coherence between DARM_IN1 and SUS-ETMX_L3_LSCINF_L_IN1 (which is the same thing as DARM_OUT for coherence purposes) is 1 from 1 Hz to 1 kHz for both configurations (old -> brown, new -> green). (It looks as if the coherence goes down above 1 kHz for the old config, but that's irrelevant for this discussion, and anyway it's an artefact of DTT's single precision math. See e.g. the top left blue trace (old config DARM_OUT) with an RMS of 20k counts, corresponding to an O(2E-5) cts/sqrtHz noise floor due to single precision, give or take some. Compare that with where the actual noise floor is.)
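Why a noise floor shows up as a coherence drop, as a sketch of the standard relation: if y = H x + n with n uncorrelated with x, the magnitude-squared coherence between x and y is |H|²S_xx / (|H|²S_xx + S_nn) = 1/(1 + (ASD_n/ASD_Hx)²). So the coherence is ~1 where the signal is well above the (e.g. single-precision) floor and drops to 0.5 where the two ASDs are equal. The helper name and the numbers below are hypothetical.

```python
import numpy as np


def coherence_with_floor(signal_asd, noise_asd):
    """Magnitude-squared coherence between x and y = H x + n, given the ASD of
    the H x part and of an independent noise n at the same frequency."""
    ratio = np.asarray(noise_asd, dtype=float) / np.asarray(signal_asd, dtype=float)
    return 1.0 / (1.0 + ratio**2)


# Hypothetical: a 2E-5 cts/sqrtHz floor against signals 100x, 3x and 1x above it.
floor = 2e-5
print(coherence_with_floor([2e-3, 6e-5, 2e-5], floor))
```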

It's not a glitch either: the noise level of the CAL-DELTAL_EXTERNAL spectrum didn't change much from one FFT to the next over the entire window (I used exponential averaging with N=1 to confirm this).
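One way to do the same no-glitch check offline, as a sketch: look at each FFT segment separately (no averaging across segments, similar in spirit to N=1 exponential averaging) and track the band-averaged ASD over time. The data here are synthetic white noise, with a hypothetical Hann-windowed 100 Hz burst injected into a copy for comparison; the 20-200 Hz band, the segment length and the helper name are arbitrary choices.

```python
import numpy as np
from scipy import signal


def per_fft_band_asd(x, fs, band=(20.0, 200.0), nperseg=4096):
    """Band-averaged ASD of each individual FFT segment (no averaging over segments)."""
    f, t, sxx = signal.spectrogram(x, fs=fs, nperseg=nperseg, scaling="density")
    sel = (f >= band[0]) & (f <= band[1])
    return t, np.sqrt(sxx[sel].mean(axis=0))


fs = 4096.0                                    # hypothetical sample rate [Hz]
rng = np.random.default_rng(3)
x = rng.standard_normal(int(60 * fs))          # stationary stand-in data

# Copy with a hypothetical 100 Hz burst (Hann-windowed sine) injected at t = 30 s.
x_glitch = x.copy()
i0, n_g = int(30 * fs), 200
x_glitch[i0:i0 + n_g] += 50 * signal.windows.hann(n_g) * np.sin(2 * np.pi * 100 * np.arange(n_g) / fs)

t, band_asd = per_fft_band_asd(x, fs)
_, band_asd_g = per_fft_band_asd(x_glitch, fs)
print(f"stationary:  max/median = {band_asd.max() / np.median(band_asd):.2f}")
print(f"with glitch: max/median = {band_asd_g.max() / np.median(band_asd_g):.2f}")
```

For stationary noise the max/median ratio stays close to 1, while a single loud segment stands out clearly.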

Note that it's also possible that excessive noise is generated in the SUS frontend too, polluting DARM_IN1 for real and not just the calibration model. I cannot tell whether that's the case for now. The difference between the green (new) and brown (old) DARM_IN1 spectra in the top left panel could just be a difference in gain peaking due to the different DARM loop shape.

I'll see if the double precision channels (recorded as double) in the calibration model are useful to pinpoint the issue. Erik modified the test version of DTT so that it handles double precision numbers correctly without casting them to single, but it's crashing on me at the moment.

Some more time windows to look into, from while we were in the NEW DARM state, are listed at LHO:75631.