Rahul, Camilla, Jonathan, Erik, Dave:

At 07:33 PST this morning, during a measurement, the ETMX test mass was set into motion that exceeded the user-model, SWWD and HWWD RMS trigger levels. This was very similar to the 02 Dec 2023 event, which eventually led to the ETMX HWWD tripping.

Details of the 02 Dec event can be found in T2300428.

Following that event, it was decided to reduce the time the SUS SWWD takes to issue a local SUS DACKILL from 20 minutes to 15 minutes. This change is what prevented the ETMX HWWD from tripping today.

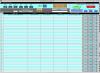

The attached time plot shows the details of today's watchdog events.

The top plot (green) shows the h1susetmx user model's M0 watchdog input RMS channels and their trigger level of 25000 (black).

The second plot (blue) shows the h1susetmx user model's R0 watchdog input RMS channels and their trigger level of 25000 (black).

The lower plot shows the HWWD countdown minutes (black), the SUS SWWD state (red) and the SEI SWWD state (blue).

The timeline is:

07:33: ETMX is rung up. The M0 watchdog exceeds its trigger level and trips; the R0 watchdog almost reaches its trigger level but does not trip.

At this point R0 is still driven while M0 is not, as was also the case on 02 Dec. This combination keeps ETMX rung up above the SWWD and HWWD trigger levels.

The HWWD starts its 20-minute countdown.

The SUS SWWD starts its 5/15-minute countdown (5 minutes until the SEI SWWD countdown starts, 15 minutes until the SUS DACKILL).

+5 min: the SEI SWWD starts its 5-minute countdown.

+10 min: the SEI SWWD issues a DACKILL; no change to the motion.

+15 min: the SUS SWWD issues a DACKILL; the R0 drive is removed, which resolves the motion.

The HWWD stops its countdown with almost 5 minutes to spare.
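The race between the SUS SWWD DACKILL and the HWWD countdown can be sketched as simple arithmetic. This is an illustration only, not the front-end model code; it assumes (from the timeline above) that the motion is sustained by the R0 drive and so only ends at the SUS DACKILL, and it assumes, hypothetically, that the HWWD trips if its countdown expires at or before that moment. The function names are made up for this sketch.

```python
# Minimal sketch of the watchdog countdown race. Times are minutes after the
# initial M0 trip. Assumptions (not taken from the real model):
#  - the motion is sustained only by the R0 drive, so it stops only at the
#    SUS SWWD DACKILL (the SEI DACKILL at +10 min does not affect it);
#  - the HWWD trips unless the SUS DACKILL happens strictly before its
#    countdown expires.

HWWD_COUNTDOWN_MIN = 20  # HWWD countdown length


def hwwd_margin(sus_dackill_min: int) -> int:
    """Minutes left on the HWWD countdown when the SUS DACKILL removes the R0 drive."""
    return HWWD_COUNTDOWN_MIN - sus_dackill_min


def hwwd_trips(sus_dackill_min: int) -> bool:
    """Hypothetical rule: the HWWD trips if no time is left on its countdown."""
    return hwwd_margin(sus_dackill_min) <= 0


for label, delay in (("before the 02 Dec change", 20), ("current setting", 15)):
    print(f"{label}: SUS DACKILL at +{delay} min, "
          f"HWWD margin {hwwd_margin(delay)} min, HWWD trips: {hwwd_trips(delay)}")
```

With the old 20-minute delay the sketch leaves no margin, while the 15-minute delay leaves 5 minutes. The plot shows "almost 5 minutes", presumably because the real countdowns do not start at exactly the same instant.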

We have opened a work permit to reduce the SUS quad models' R0 trigger level so that, hopefully, M0 and R0 always trip together, which will prevent this in the short term. A longer-term solution requires a model change to alter the DACKILL logic.
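Why lowering the R0 trigger helps can be shown with a small sketch of independent per-chain trips. This is a hypothetical illustration: it assumes each chain (M0, R0) compares its own input RMS against its own trigger level and trips on a strict "greater than" comparison. The RMS values are illustrative, not measured, and the lowered R0 level of 20000 is a placeholder, not the value in the work permit.

```python
# Hypothetical sketch: M0 and R0 watchdogs trip independently, each against
# its own trigger level. A chain that has not tripped keeps being driven.
# RMS values and the lowered R0 level are placeholders for illustration.


def tripped_chains(rms: dict[str, float], triggers: dict[str, float]) -> set[str]:
    """Chains whose input RMS exceeds their trigger level (assumed strict '>')."""
    return {chain for chain, value in rms.items() if value > triggers[chain]}


def still_driven(rms: dict[str, float], triggers: dict[str, float]) -> set[str]:
    """Chains that have not tripped and therefore are still driven."""
    return set(rms) - tripped_chains(rms, triggers)


rms_example = {"M0": 30000.0, "R0": 24000.0}  # hypothetical: R0 "almost reaches" 25000

current = {"M0": 25000.0, "R0": 25000.0}   # today's trigger levels
lowered = {"M0": 25000.0, "R0": 20000.0}   # placeholder lowered R0 level

print(sorted(still_driven(rms_example, current)))  # ['R0']
print(sorted(still_driven(rms_example, lowered)))  # []
```

The lowered level only helps when the R0 RMS exceeds it whenever M0 trips, which is why this is a short-term measure and the DACKILL logic change is the longer-term fix.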