Louis and I have been looking at increasing the offloading of the signal from the ESD to the PUM and UIM, in light of 73913.

I have attempted a few times to make measurements by exciting at the L2 LOCK L filter. This results in poor coherence even for amplitudes that are quite large in DARM, and turning up the amplitude slightly has caused a lockloss by saturating the ESD.
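For reference, here is a minimal sketch of why coherence stays poor until the excitation dominates the background in the readout. It uses synthetic white noise only, not H1 data. The `coherence` helper, the sample rate, and the gain values are all made up for illustration, and a real measurement would go through the usual swept sine or DTT tools rather than this code.

```python
import numpy as np


def coherence(x, y, nperseg):
    """Magnitude-squared coherence from non-overlapping Hann-windowed segments.

    Illustrative helper only (hypothetical, not part of any site tooling).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    nseg = len(x) // nperseg
    window = np.hanning(nperseg)
    # Split both time series into segments and window each one.
    xs = x[: nseg * nperseg].reshape(nseg, nperseg) * window
    ys = y[: nseg * nperseg].reshape(nseg, nperseg) * window
    X = np.fft.rfft(xs, axis=1)
    Y = np.fft.rfft(ys, axis=1)
    # Average the auto and cross spectra over segments.
    pxx = np.mean(np.abs(X) ** 2, axis=0)
    pyy = np.mean(np.abs(Y) ** 2, axis=0)
    pxy = np.mean(np.conj(X) * Y, axis=0)
    return np.abs(pxy) ** 2 / (pxx * pyy)


# Synthetic stand-ins: an excitation and an unrelated background in the readout.
rng = np.random.default_rng(0)
fs = 256                      # assumed sample rate, Hz
n = fs * 64                   # 64 one-second averages
excitation = rng.standard_normal(n)
background = rng.standard_normal(n)

for gain in (0.1, 1.0, 10.0):  # made-up excitation-to-background ratios
    response = gain * excitation + background
    coh = coherence(excitation, response, nperseg=fs)
    print(f"gain {gain:5.1f}: mean coherence {coh.mean():.3f}")
```

In this toy model the coherence approaches 1 only once the excitation is well above the background, which is the regime where the ESD saturation limits us.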

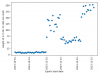

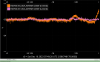

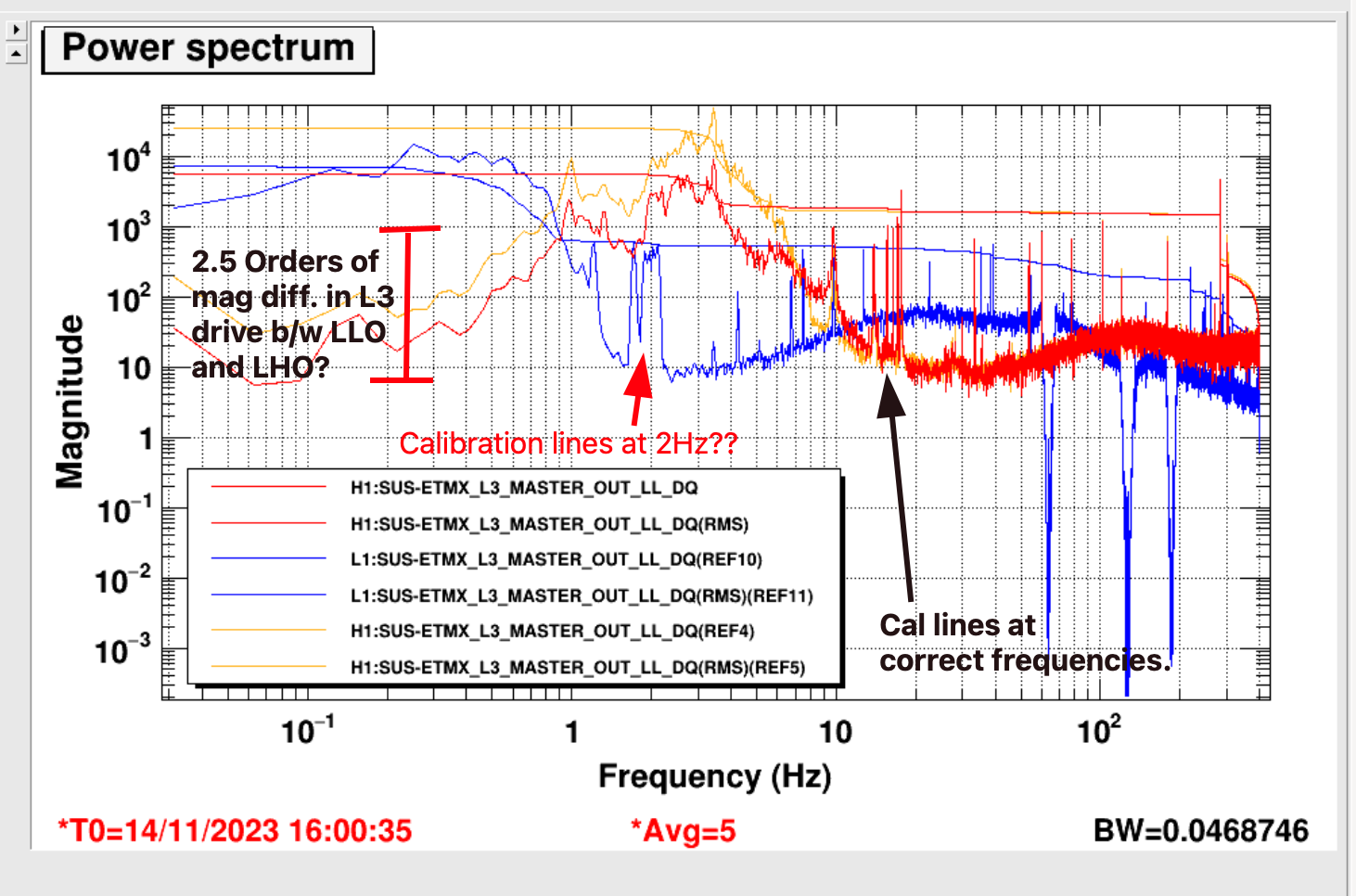

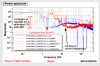

On Tuesday morning I engaged the PUM boost (L2 LOCK L FM2, boost4.5, which is a boost with a little resG around 4.5Hz). Some history, with links to old alogs about this filter, is in 48767: we used to run with this boost but turned it off in early O3 because angle to length cross couplings were causing instabilities of the DARM loop. This time we stayed locked with the boost engaged. The first attachment shows a comparison of our ETMX drives to LLO's (LLO also uses ETMY L1 for DARM control, but I haven't plotted that here); H1's usual configuration is in gold and the time with the PUM boost engaged is in red. The ESD drive RMS is reduced by more than a factor of 2 with the PUM boost engaged. I quickly tried a swept sine injection, and saw that the coherence was somewhat better than in earlier measurements with a very small amplitude injection, so it seemed promising that we might be able to get a better measurement of the crossover with the PUM boost engaged.
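As a rough sketch of how an RMS comparison like the one above can be computed from two drive spectra: integrate the squared ASD over frequency and take the square root. The `band_rms` helper and the spectra below are made up for illustration. They are not the H1 or LLO drive spectra, and the 0.4 scaling is an arbitrary assumption.

```python
import numpy as np


def band_rms(freqs, asd, f_lo=None, f_hi=None):
    """RMS of a signal from its amplitude spectral density over [f_lo, f_hi].

    Illustrative helper (hypothetical name); uses a trapezoidal integral of the PSD.
    """
    freqs = np.asarray(freqs, dtype=float)
    asd = np.asarray(asd, dtype=float)
    lo = freqs[0] if f_lo is None else f_lo
    hi = freqs[-1] if f_hi is None else f_hi
    mask = (freqs >= lo) & (freqs <= hi)
    f = freqs[mask]
    psd = asd[mask] ** 2
    # Trapezoidal rule written out explicitly.
    power = np.sum(0.5 * (psd[1:] + psd[:-1]) * np.diff(f))
    return np.sqrt(power)


# Made-up spectra: a 1/f "nominal" drive and a uniformly scaled "boosted" drive.
freqs = np.linspace(0.1, 100.0, 1000)
nominal_asd = 1.0 / freqs
boosted_asd = 0.4 * nominal_asd   # arbitrary scaling, for illustration only

ratio = band_rms(freqs, nominal_asd) / band_rms(freqs, boosted_asd)
print(f"RMS reduction factor: {ratio:.2f}")
```

With real data the two ASDs would come from the same ESD drive channel at the two times being compared, and the reduction would generally differ from band to band rather than being a single scale factor.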

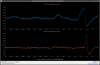

Today I engaged this boost again during commissioning time and we immediately lost lock. The third attachment shows that turning on the boost certainly seems to be the cause of the lockloss.

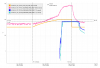

We can probably rely on the pyDARM model for the PUM crossover, since the calibration measurements validate the model above 10Hz. Today I was able to make a measurement of the UIM crossover, which Louis can use to compare to pyDARM from 1-10Hz.