Midshift Update: Still troubleshooting (but finding issues and chasing leads)

Here are the main stories, troubleshooting ideas and control room activities so far...

TIME: 18:08 UTC - 19:00 UTC

Codename: Ham 1 Watchdog Trip

Ham 1 Watchdog tripped due to Robert hitting the damping since it started “audibly singing”. We untripped each other. At 18:41 UTC, Jim suggested going down to Ham 1 to investigate the watchdog trip and the singing. See Jenne’s alog 74476 for more. This is not the cause of our locking issues.

TIME: 18:09 UTC - 18:33 UTC

Codename: No Name Code

There was an ALS_DIFF naming error in a piece of code that TJ pushed in this morning. Jenne found it, we edited the code, reloaded (via stop and exec) the ALS_DIFF guardian and it was fine. It was initially thought to be part of the watchdog trip, but it was not. This is not the cause of our locking issues.

TIME: 17:30 (ish) UTC - 21:30 UTC

Codename: Fuzzy Temp

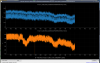

The temperature excursion that was caught and apparently fixed yesterday has not been fixed. While the temperatures have definitely returned to “nominal” and are within tolerance, the SUP temperature monitor is reporting extremely noisy (“fuzzy”) temperatures that plateaued about 1.5 degrees higher than before the excursion. I went down with Eric into the CER and we confirmed that both thermostats are correct in their readings, eliminating that potential cause. Per Fil and Robert’s investigation, there are a few reasons this may be happening.

- It could be the temperature sensor itself causing excess noise, in which case its temperature report may be erroneous.

- It could be a faithful reading of another nearby source moving up and down in temperature quickly, causing this fluctuation (the noisiness oscillates at 15-min periods generally). This would mean it is a controls and/or external CER machine issue and there’s nothing wrong with the temp readout itself.
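As a rough illustration of how the 15-minute periodicity mentioned above could be checked in a trend, here is a minimal sketch. It is not the tooling used in the control room: the function name `dominant_period`, the one-sample-per-minute rate, and the synthetic data are all assumptions made for the example.

```python
import math

def dominant_period(samples, dt_minutes, candidate_periods):
    """Return the candidate period (in minutes) carrying the most spectral power.

    Hypothetical helper: evaluates a single-frequency DFT at each candidate
    period and keeps the strongest one.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]  # remove the constant offset
    best_period, best_power = None, -1.0
    for period in candidate_periods:
        omega = 2.0 * math.pi * dt_minutes / period  # radians per sample
        re = sum(x * math.cos(omega * k) for k, x in enumerate(centered))
        im = sum(x * math.sin(omega * k) for k, x in enumerate(centered))
        power = (re * re + im * im) / n
        if power > best_power:
            best_period, best_power = period, power
    return best_period

# Synthetic stand-in for a temperature trend (NOT real data):
# one sample per minute for 6 hours, with a 15-minute oscillation
# on top of a weaker 60-minute drift.
trend = [
    20.0
    + 0.3 * math.sin(2.0 * math.pi * t / 15.0)
    + 0.05 * math.sin(2.0 * math.pi * t / 60.0)
    for t in range(360)
]

period = dominant_period(trend, 1.0, range(5, 61))
print("strongest period (minutes):", period)
```

A period estimate like this only says that something is cycling; it cannot by itself separate a noisy sensor from a faithful reading of a cycling source.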

The proposed plan was to switch the cables between the more stable CER temp readout in the same room and the fuzzy SUP readout to determine if this issue was upstream (Beckhoff error) or downstream (temperature sensor). The cables were switched and, upon trending the channels, we found that the previously noisy/fuzzy SUP readout channel (now connected to the CER sensor) became stable, while the CER channel (now connected to the SUP sensor) became noisy. This meant that the noise was related to the equipment being plugged in, i.e. the temperature sensor and/or its cable.
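The reasoning behind the cable swap can be written down as a small decision rule. This is only a sketch of the logic; the function name and the string labels are hypothetical and do not correspond to any real channel names or site software.

```python
def locate_noise_source(noisy_before, noisy_after_swap):
    """Interpret a cable-swap test between two readout channels.

    Both arguments name the readout channel that looked noisy,
    before and after the two sensor cables were exchanged.
    """
    if noisy_before == noisy_after_swap:
        # Noise stayed on the same channel: it belongs to the readout side.
        return "readout channel"
    # Noise moved to the other channel: it followed the sensor and its cable.
    return "sensor or cable"

# Case described in this entry: SUP was noisy before the swap,
# and the noise moved to the CER channel afterwards.
print(locate_noise_source("SUP", "CER"))
```

Note that "sensor or cable" also covers the case where the sensor is healthy and is faithfully reporting a real fluctuation at its location.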

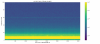

Fil switched the sensor out but the fluctuation did not change. Robert had the idea that it could be a nearby air conditioner (AC5) that was turning on and off and thus causing the temperature fluctuations. He turned the AC off at 20:30 UTC and we waited to see the temperature response. We found that the AC was indeed the cause of the fluctuation (Screenshot 4).

This tells us that the AC behavior changed during yesterday’s maintenance, causing it to be more noisy. This noisiness was only noticed after the temperature excursion, and the change only became apparent once the excursion was fixed.

Unfortunately, this means that the issue is contained to faulty equipment rather than faulty controls, which means this is not the cause of our locking issues.

See screenshots (1 → 4) to get an idea of the overall pre-switch noise and the post-switch confirmation.

TIME: 16:30 UTC - Ongoing

Codename: Mitosis

There is a perceived “cell splitting” jittering in the AS AIR camera during PRMI’s engage ASC loop that takes place after PRMI is locked. This jittering, given enough time, causes swift locklosses in this state, and is definitely worse in the presence of ASC actuation.

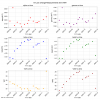

Jenne found no issues or glitches in the PRC optics (lower and higher stages) (Screenshot 5). Jenne did find a 1.18 Hz ring-up when PRMI is locked, and when that gets bad there are glitches in POP18. Jenne found that the glitching seems to go away, and that the 1.18 Hz ringing went away, when she lowered the LSC MICH locking gain from the nominal 3.2 down to 2.5 (Screenshot 6).

Coil drivers: Checked during troubleshooting to see if these might have caused/exacerbated lock issues - confirmed by Rahul not to be the case.

An idea so far was that the SUS-PRM-M3 stage may be glitching, but we needed to see if this glitch persists without the feedback that a locked PRMI would have. It was confirmed not to be glitching. Sheila just checked the same thing for the BS and the ITMs. We are left with less of an idea of what’s going on now. The jittering in the AS AIR camera is, however, fixable by lowering the MICH gain as described above. This gain change was not made in the guardian.

So this “Mitosis” issue is somewhat resolved (or at least bandaged as we investigate more).

Ideas of leads to chase are:

- IMC looking weird while not locked

- Power recycling gain looks terrible, worse when soft loops and move spots are turned on.

- Sheila found a potential OpLev Glitch (being investigated)

Stay tuned.

It seems that the "mitosis" is BS optical lever glitching, which shouldn't prevent us from locking if we can get past DRMI (and wouldn't be responsible for the high noise and locklosses overnight).