Summary of the report:

- observing for 74.8% of the week

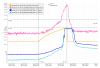

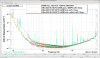

- many summary page plots did not display properly this week, especially the glitch-rate plots and the hveto plots

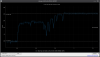

- range was mostly around 150-160 Mpc, except for some fuzziness on Thursday and Friday; no cause was identified.

- there were a couple of locklosses with unidentified causes; earthquakes (EQs), wind, and microseism may have contributed.

00:40 11/28 Started relocking

02:25 Observing