When H1 made its first attempt at squeezing at 2150 UTC, with H1 at NLN, SQZ_MANAGER was in an odd state. Although it passed through the Inject Squeezing state, it had not even attempted squeezing (the SQZ ndscope FOM showed no action/changes). It looked like SQZ_MANAGER was requesting DOWN but was not able to complete the DOWN.

I've not seen this before, so I took the opportunity to follow the new SQZ Flow Chart...

All these nodes were already DOWN (SQZ_MANAGER was requesting DOWN, but not in the (green) DOWN state):

- ANG_ADJUST

- FC

- LO_LR

- CLF_LR

- OPO_LR

These nodes were LOCKED: PMC & SHG
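The node check above can be sketched as a small script. This is only an illustration: the states are transcribed by hand from this entry, and the `node_states` dictionary and `nodes_not_in` helper are hypothetical names, not part of Guardian or any site tool.

```python
# Hypothetical snapshot of SQZ node states, transcribed by hand from this entry.
node_states = {
    "ANG_ADJUST": "DOWN",
    "FC": "DOWN",
    "LO_LR": "DOWN",
    "CLF_LR": "DOWN",
    "OPO_LR": "DOWN",
    "PMC": "LOCKED",
    "SHG": "LOCKED",
}


def nodes_not_in(states, target="DOWN"):
    """Return the sorted names of nodes whose state differs from `target`."""
    return sorted(name for name, state in states.items() if state != target)


# Nodes that would still have to be taken DOWN to follow the flow chart strictly.
print(nodes_not_in(node_states))
```

Listing the nodes that are not DOWN makes it easy to see which ones were left alone when starting the flow chart.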

Started going through the SQZ Flow Chart that Camilla recently passed on to operators (T2500325). NOTE: I did not take all SQZ nodes to DOWN. PMC & SHG were nominal/LOCKED, so I left them there, although taking everything to DOWN is a first step of the Flow Chart (but was this the reason SQZ_MANAGER wasn't in the DOWN state?). In hindsight, I probably should have taken these down to truly follow the instructions. At any rate...

Going through this flow chart, I got to the step where we check whether the SHG power is less than 90 (it was down at ~85). At this point I adjusted the SHG TEC temperature to increase the SHG power and brought it up to ~119, but then attention switched to SQZ_MANAGER and why it wasn't DOWN. Around this time Oli & Tony offered assistance.
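As a rough sketch of the SHG power step, the check and the size of the adjustment can be written out as below. The threshold of 90 and the readings of ~85 and ~119 come from this entry; the function names are hypothetical, and the units are whatever the SQZ screen displays.

```python
# Threshold quoted in the flow-chart step above (units as shown on the SQZ screen).
SHG_POWER_THRESHOLD = 90.0


def shg_power_needs_tuning(power, threshold=SHG_POWER_THRESHOLD):
    """True when the SHG power is below the flow-chart threshold."""
    return power < threshold


def fractional_change(before, after):
    """Relative change in power, e.g. 0.1 means a 10% increase."""
    return (after - before) / before


before, after = 85.0, 119.0  # approximate readings from this entry
print(shg_power_needs_tuning(before))
print(shg_power_needs_tuning(after))
print(round(fractional_change(before, after), 2))
```

Computing the relative change alongside the threshold check shows how large the TEC adjustment was compared with simply getting back above 90.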

We then started relocking SQZ normally and discovered that OPO_LR was going into "siren mode": a fast cycle of repeatedly going to DOWN, with the notification "pump fiber rej power in ham7 high...". Addressing this "common notification" involved working with the SQZT0 waveplates (this was very likely due to the big upward adjustment in SHG power I had made).

Tony & Oli (who had experience with these waveplate adjustments) needed several iterations of adjusting the SQZT0 waveplates because of the increased SHG power: the 1/2 & 1/4 waveplates downstream of the SHG Launch beamsplitter path (see image #1) AND the 1/2 waveplate upstream of the SHG Rejected + Launch paths (see image #2). This took some time because of how much the SHG power had been increased earlier and because every waveplate adjustment unlocked the PMC & SHG.

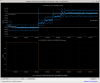

Eventually they were able to bring the SHG_FIBR_REJECTED_DC_POWERMON & SHG_LAUNCH_DC_POWERMON signals to their nominal values (these signals are also in Tony's screenshots, i.e. images #1 & #2). After 30+ min of adjusting, SQZ was able to lock, and H1 was automatically taken to Observing as soon as the new SHG TEC SETTEMP was accepted in SDF (see image #3). H1 went to Observing just as Sheila was walking into the Control Room, so she was able to assess the situation and get a rundown of what happened.

Many thanks to Oli & Tony for the help, and to Tony for the screenshots!

It looks like the issue was that SQZ_MANAGER was staying in DOWN rather than moving up to the requested FRS_READY_IFO because the beam diverter had been left open after the 87342 tests. Re-requesting SQZ_MANAGER to DOWN would have closed the beam diverter and allowed it to try to relock.

Corey correctly increased the available SHG power with the TEC; however, this meant that there was too much power going into the SHG fiber for the OPO to lock. This is surprising to me: I would have expected it to lock with no ISS and then unlock when the ISS couldn't get the correct OPO trans power, but that wasn't the case. Oli then correctly adjusted the power control waveplate (PICO I #3) to reduce the power going into the fiber. This is annoying to do because everything unlocks each time the waveplate is touched, which is why it is not in the T2500325 flowchart.

This is an unusual reason for squeezing not to work, so no changes have been made to anything.