Yesterday I made focussed shutdowns of just one or a few fans at a time. The range and spectra were not strongly affected, and I did not find a particularly bad fan.

Nov. 9 UTC

CER ACs

Off 16:30

On 16:40

Off 16:50

On 17:00

Turbine shutdowns

SF4 off: 17:10

SF4 on: 17:21

SF3 and 4 off: 17:30

SF3 and 4 back on: 17:40

SF3 off: 17:50

SF3 back on: 18:00

SF1 and 4 off: 18:30

SF1 and 4 on: 18:35

SF1 and 4 off: 19:00

SF1 and 4 on: 19:18

SF1 and 3 off: 19:30

SF1 and 3 back on: 19:40

SF1 off: 19:50

SF1 back on: 20:00

SF1 and 4 off: 20:10

SF1 and 4 back on: 20:20

SF3 off: 22:50

SF3 on: 23:00

SF3 off: 3:41

SF3 on: 3:51

Nov. 10 UTC

SF5 and 6 off: 0:00

SF5 and 6 back on: 0:10
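For anyone who wants to line these windows up against range or spectra data, here is a minimal Python sketch that turns off/on pairs into window lengths. The helper name `window_minutes` and the `windows` list are hypothetical, introduced only for illustration; the times are copied from a few entries in the log above, and the sketch assumes each pair is a time of day in UTC with at most one midnight crossing.

```python
from datetime import datetime, timedelta


def window_minutes(off: str, on: str) -> int:
    """Length in minutes of a shutdown window given 'H:MM' off/on times.

    Assumes both times are UTC times of day; if 'on' is earlier than
    'off', the window is taken to cross midnight once.
    """
    fmt = "%H:%M"
    t_off = datetime.strptime(off, fmt)
    t_on = datetime.strptime(on, fmt)
    if t_on < t_off:
        t_on += timedelta(days=1)
    return int((t_on - t_off).total_seconds() // 60)


# A few windows copied from the log (label, off, on); not the full list.
windows = [
    ("CER ACs", "16:30", "16:40"),
    ("SF4", "17:10", "17:21"),
    ("SF1 and 4", "19:00", "19:18"),
    ("SF5 and 6", "0:00", "0:10"),
]

durations = {label: window_minutes(off, on) for label, off, on in windows}

for label, minutes in durations.items():
    print(f"{label}: {minutes} min")
```

Keeping the windows as plain (label, off, on) tuples makes it easy to add the remaining entries by hand; attaching calendar dates would be needed before using them to select data segments.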

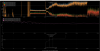

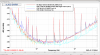

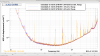

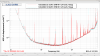

The latest settings are still working fine: both IY05 and IY06 are going down, as shown in the attached plot (which shows both the narrow and broad filters along with the drive output). However, it will take some time before they get down to their nominal level.

ITMY08 is also damping down nicely.