This is an alog I started before the power outage, because we were worried that filter cavity backscatter was the cause of our intermittent squeezer noise. (We now realize that the noise we are looking for is not from the filter cavity; see 87071.)

The overall message is that noise from filter cavity backscatter seems low compared to the DARM noise, but that there is an unexpected source of scattered light upstream of SFI2.

Filter cavity length

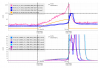

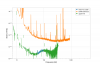

I've constructed a model of the filter cavity length loop using the foton filters for PRM in the CAL-CS model. As noted in 78728, we need to modify the analog gains for M3 for FC2. I've used a filter cavity pole of 34 Hz, and adjusted the sensor gain so that the model matches the measured open loop gain (plot). The measurement used in that plot has poor coherence below 5 Hz, which explains why the model doesn't seem to fit there. This model also matches well the crossover measured by injecting at M1 LOCK L (plot).
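
The sensor-gain adjustment described above can be sketched as a one-parameter least-squares fit. This is a minimal sketch, assuming the rest of the loop (foton filters, actuator plant) has already been multiplied into a model open loop gain computed with unit sensor gain; the function names, the toy controller term, and the 0.9 coherence threshold are hypothetical and are not taken from fc_lsc_model.py.

```python
import numpy as np

F_POLE_HZ = 34.0  # filter cavity pole used in the loop model


def cavity_response(f, f_pole=F_POLE_HZ):
    """Single-pole cavity length response, normalised to 1 at DC."""
    return 1.0 / (1.0 + 1j * np.asarray(f, dtype=float) / f_pole)


def fit_sensor_gain(olg_measured, olg_model_unit_gain, coherence, min_coh=0.9):
    """Real scalar k minimising sum |measured - k * model|^2.

    Only points with coherence >= min_coh are used, so poorly measured
    low-frequency points do not pull the fit.
    """
    mask = np.asarray(coherence) >= min_coh
    m = np.asarray(olg_model_unit_gain)[mask]
    d = np.asarray(olg_measured)[mask]
    return np.real(np.sum(np.conj(m) * d)) / np.sum(np.abs(m) ** 2)


# Toy example (hypothetical): a made-up controller/actuator term times the cavity pole.
f = np.logspace(0, 2, 200)
model = cavity_response(f) * 10.0 / (1j * f)
coherence = np.where(f < 5, 0.3, 0.99)
measured = 2.5 * model
measured[f < 5] *= 3.0  # low-coherence points are unreliable and get masked out
print(fit_sensor_gain(measured, model, coherence))  # close to 2.5
```

Restricting the fit to high-coherence points mirrors the observation that the measurement below 5 Hz is not trustworthy.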

The next plot shows the uncalibrated error signal (measured at LSC DOF2 IN1), with the loop correction applied (error_spectrum * (1-G)), and a line which I've added as a crude estimate of sensor noise. There seems to be a bump in sensor noise around 100 Hz that isn't included in my rough estimate; I am not sure what it is.

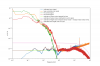

The next plot shows calibrated length noise.

- The blue trace is the measured error signal calibrated using the sensing function found by fitting the open loop gain: error_spectrum / sensing function.

- The purple trace shows how the estimated sensor noise would be expected to contribute to that: sensor_noise_estimate * 1/(sensing function * (1-G)). This is fairly close to the error signal above 8 Hz, so I've assumed that above 8 Hz the error spectrum is dominated by sensor noise, and that below 8 Hz the error spectrum reflects the suppressed length noise that is not from this control loop (i.e., ground motion, OSEMs). We could check the OSEM noise by doing injections.

- The orange trace shows the loop-corrected error signal, an estimate of what the noise would be if there were no loop: error_spectrum * (1-G) / sensing function.

- The green and red traces are the control signals for M1 and M3, calibrated by the plant models used in modeling the open loop gain and crossover measurements. Plotting these was useful for debugging the loop model and gaining confidence in the modeled sensing function, because they now roughly agree with the loop-corrected error signal (but they won't be used again here).

- The chartreuse trace is an estimate of the sensor noise imposed on the filter cavity by the control loop: sensor noise * G / (sensing function * (1-G)). This is roughly along the

- The brown trace is the answer, an estimate of the filter cavity length noise with this loop running. This is the quadrature sum of the blue calibrated error signal below 8 Hz (assuming that is residual length noise where the loop is gain limited) and the chartreuse estimate of imposed sensor noise.

- The pink trace shows the estimated RMS length noise of the filter cavity, 0.13 pm, dominated by the imposed sensor noise. Page 21 of T1800447 states that 2.5 pm of RMS length noise would limit the squeezing at 50 Hz to 3 dB, if we had 6 dB of squeezing and 12 dB of anti-squeezing. Since our estimate is roughly 20 times below that, this level of RMS length noise is probably OK.

- Section 8.2 of T1800447 estimates that the VCO noise is 1e-16 m/√Hz, small enough that we don't need to include it in this estimate. The sensing noise that I am estimating from the error signal is nearly two orders of magnitude higher than the VCO noise, which was used as the sensing noise in the loop modeling done in the design document.
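
The trace definitions in the list above can be collected into one small function. This is a minimal sketch, assuming all spectra are amplitude spectral densities on a common frequency vector, that G follows the convention used here (the loop suppresses noise by 1/(1-G)), and that the 8 Hz split is applied as a hard cutoff; the function and dictionary key names are hypothetical.

```python
import numpy as np


def length_noise_budget(f, err_asd, sensing, G, sensor_asd, f_split=8.0):
    """Calibrated length-noise traces (m/rtHz) following the trace definitions.

    err_asd and sensor_asd are in error-signal counts/rtHz, sensing is the
    sensing function in counts/m, and G is the complex open loop gain with the
    convention that the loop suppresses noise by 1/(1-G).
    """
    S = np.abs(sensing)
    one_minus_G = np.abs(1 - G)
    blue = err_asd / S                                       # calibrated error signal
    purple = sensor_asd / (S * one_minus_G)                  # sensor noise seen in the error signal
    orange = err_asd * one_minus_G / S                       # loop-corrected (free-running) estimate
    chartreuse = sensor_asd * np.abs(G) / (S * one_minus_G)  # sensor noise imposed by the loop
    residual = np.where(f < f_split, blue, 0.0)              # gain-limited residual, below f_split only
    brown = np.sqrt(residual ** 2 + chartreuse ** 2)         # estimated length noise with loop running
    return {"blue": blue, "purple": purple, "orange": orange,
            "chartreuse": chartreuse, "brown": brown}


def cumulative_rms(f, asd):
    """RMS integrated from the highest frequency down (trapezoid rule)."""
    psd = np.asarray(asd) ** 2
    seg = 0.5 * (psd[1:] + psd[:-1]) * np.diff(f)            # integral over each frequency bin
    tail = np.concatenate([np.cumsum(seg[::-1])[::-1], [0.0]])
    return np.sqrt(tail)
```

Under these assumptions the pink trace would be cumulative_rms(f, budget["brown"]), and its value at the lowest frequency is the number to compare against the 2.5 pm level from T1800447.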

Backscattered power

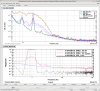

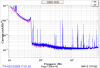

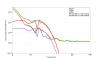

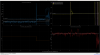

Using the measurement of excitations on ZM2 in 86778, we can estimate the amount of backscattered light that is reaching the filter cavity. The DCPD spectra, calibrated into RIN and with the DARM loop removed, are plotted here and here with different FFT lengths. Next time, if we do this measurement with a lower frequency and higher amplitude excitation, we will be able to use a longer FFT length for the plot and still se

I've made a model of the noise caused by backscattered light using equation 4 (and 5) from P1200155. The excitation was a 1 Hz, 100 count excitation into test L; in the OSEMs this showed a peak-to-peak amplitude of 0.37 µm. To go from optic motion to path length change we need roughly a factor of 4, since ZM2 is at a low angle of incidence and is double passed. To match the shelf frequency in the measurement I had to increase the amplitude used in the model by a factor of 3.4. I used a QE of 100%, which gives a PD responsivity of 0.858 A/W, and 46.6 mW of power on the OMC PDs. This model doesn't include phase modulation from any other elements in the optical path, but the real measurement does, which is why the measurement shows a smooth shelf while the model shows a series of peaks when I use a longer FFT. I think this would be less apparent if we make the measurement with a lower frequency, higher amplitude excitation next time.
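
The ingredients of this model (responsivity, shelf frequency, fringe-wrapping RIN) can be sketched as follows. This is a minimal sketch and not a transcription of equations 4 and 5 of P1200155: it assumes a generic weak-scatter interference term dP/P = 2 sqrt(Ps/P) cos(phi), sinusoidal optic motion, the factor of 4 from optic motion to path length, and no phase modulation from other optics. The function names and the static phase phase0 are hypothetical.

```python
import numpy as np
from scipy.signal import welch

H = 6.62607015e-34     # Planck constant, J s
C = 299792458.0        # speed of light, m/s
Q_E = 1.602176634e-19  # elementary charge, C
LAMBDA = 1064e-9       # laser wavelength, m


def responsivity(qe=1.0, wavelength=LAMBDA):
    """Photodiode responsivity in A/W."""
    return qe * Q_E * wavelength / (H * C)


def shelf_frequency(pp_motion, f_exc, path_factor=4.0, wavelength=LAMBDA):
    """Maximum fringe rate: path_factor * peak optic velocity / wavelength."""
    v_peak = 2 * np.pi * f_exc * (pp_motion / 2)
    return path_factor * v_peak / wavelength


def scatter_rin(t, pp_motion, f_exc, p_scatter, p_carrier,
                path_factor=4.0, wavelength=LAMBDA, phase0=0.0):
    """Weak-scatter interference term dP/P = 2 sqrt(Ps/P) cos(phi(t)).

    The optic moves sinusoidally; phi is the phase of the scattered field
    relative to the carrier. The small DC term Ps/P is dropped.
    """
    x = 0.5 * pp_motion * np.sin(2 * np.pi * f_exc * t)
    phi = 2 * np.pi * path_factor * x / wavelength + phase0
    return 2 * np.sqrt(p_scatter / p_carrier) * np.cos(phi)


def rin_asd(rin, fs, fft_length):
    """Amplitude spectral density (1/rtHz) for a given FFT length in seconds."""
    freqs, psd = welch(rin, fs=fs, nperseg=int(fs * fft_length))
    return freqs, np.sqrt(psd)
```

With the unscaled motion (0.37 µm peak-to-peak at 1 Hz) shelf_frequency gives about 4.4 Hz, and with the factor of 3.4 applied it gives roughly 15 Hz. Because a pure sinusoidal drive produces discrete harmonics of the excitation frequency, a long FFT resolves them as separate peaks, which is the behaviour of the model described above.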

The result of this shows that we have 12 pW of scattered light passing ZM2. Since backscatter that reaches the filter cavity should all be reflected back towards the IFO along with the squeezing, this means that we have 12 pW of scattered carrier from the OFI reaching the filter cavity. Compared to table 1 of T1800447, this is less scattered light power reaching the diodes than expected for a similar level of carrier light on the DC PDs, which suggests that all three Faradays are providing the expected isolation or slightly better. When driving ZM5, we get 12 nW of power scattered back to the interferometer, a thousand times more, suggesting that there is a scattering source where we would not expect one to be. This seems most likely to be upstream of SFI2, since we only expect nW of total scattered light downstream of SFI2. If you are interested in looking at a diagram of possible scatterers, there is a VIP layout here; the beam which leaves B:M5 goes to a PD mounted on the ISI called B:PD1, which is intended to monitor light scattered from the OFI towards the squeezer.
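
Because the interference term scales with the scattered field rather than the scattered power, a factor of 1000 in power shows up as only about a factor of 32 in RIN amplitude. This minimal sketch assumes the same weak-scatter form dP/P = 2 sqrt(Ps/P) cos(phi) with rin_peak taken as the time-domain amplitude of the RIN; the function names are hypothetical.

```python
import math


def scatter_power_from_rin(rin_peak, p_carrier):
    """Invert the weak-scatter term dP/P = 2 sqrt(Ps/P) cos(phi) for Ps."""
    return p_carrier * (rin_peak / 2.0) ** 2


def compare_scatter(p_a, p_b):
    """Power ratio of two scattered-light levels in dB, and the field (RIN) ratio."""
    ratio = p_a / p_b
    return 10 * math.log10(ratio), math.sqrt(ratio)


db, field_ratio = compare_scatter(12e-9, 12e-12)  # ZM5 vs ZM2 levels quoted above
print(f"{db:.1f} dB in power, x{field_ratio:.1f} in RIN amplitude")
# prints: 30.0 dB in power, x31.6 in RIN amplitude
```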

Coupling and noise projection

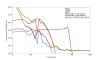

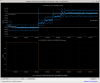

The last two plots show the results of a filter cavity noise injection, similar to what Naoki did in 78579. They suggest that this noise is large enough to include in our noise budget, but not nearly large enough to explain the excess noise we see in DARM when the filter cavity error signal shows extra noise.
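
An injection-based projection of this kind is usually built from a linear coupling function. This is a minimal sketch of that generic method, assuming ASDs of DARM and of the filter cavity error signal (the witness, e.g. LSC DOF2 IN1) on common frequency bins, with and without the injection; it is not necessarily how 78579 or this analysis did it, and the function names are hypothetical.

```python
import numpy as np


def coupling_function(darm_inj, darm_ref, witness_inj, witness_ref):
    """|dDARM/dwitness| per frequency bin from an injection.

    Ambient (reference) noise is subtracted in quadrature from both channels;
    bins where the witness shows no excess are returned as NaN.
    """
    num = np.sqrt(np.clip(darm_inj ** 2 - darm_ref ** 2, 0.0, None))
    den = np.sqrt(np.clip(witness_inj ** 2 - witness_ref ** 2, 0.0, None))
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(den > 0, num / den, np.nan)


def project_to_darm(coupling, witness_ambient):
    """Noise projection: coupling times the ambient witness ASD."""
    return coupling * witness_ambient
```

Applying project_to_darm to the witness ASD from a time when the filter cavity error signal shows extra noise is one way to check whether the coupling can account for the excess seen in DARM.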

The code and data to produce this are in sheila.dwyer/SQZ/FilterCavity/fc_lsc_model.py