Sheila, Naoki, Camilla

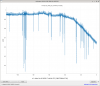

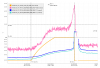

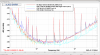

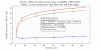

We took FDS, anti-SQZ and mean SQZ data at different NLGs: 14, 17, 43, 82 and 123. We think we see evidence of phase noise, because there is less squeezing at high NLG: we get around 4.5 dB of SQZ at NLG up to 43.1, but at NLG 81.9 and 122.8 the squeezing at 1 kHz is reduced to 3.6 and 3.1 dB. Plots attached.

Each time, we optimized the SQZ angle around 1 kHz. Because of this, the increased low-frequency noise at high NLG could be due to incorrect rotation rather than phase noise.
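
Why reduced squeezing at high NLG is read as phase noise can be illustrated with the usual single-mode OPO model. The Python sketch below is an illustration under stated assumptions, not the analysis behind the plots: it ignores frequency dependence and the filter cavity, takes the normalized pump amplitude x = 1 - 1/sqrt(NLG) from NLG = 1/(1 - x)^2, and uses a hypothetical total efficiency `eta` and angle error `theta` that were not measured here. The function names are also hypothetical.

```python
import math

def generated_variances(nlg, eta):
    """Squeezed and anti-squeezed variances relative to shot noise (= 1) for a
    below-threshold OPO, far below the OPO linewidth, with total efficiency eta."""
    x = 1.0 - 1.0 / math.sqrt(nlg)  # normalized pump amplitude, from NLG = 1 / (1 - x)**2
    v_sqz = 1.0 - eta * 4.0 * x / (1.0 + x) ** 2
    v_asqz = 1.0 + eta * 4.0 * x / (1.0 - x) ** 2
    return v_sqz, v_asqz

def observed_db(nlg, eta, theta):
    """Noise relative to shot noise in dB (negative = squeezing) when the squeeze
    angle is off by theta radians (static error, or RMS jitter in the small-angle limit)."""
    v_sqz, v_asqz = generated_variances(nlg, eta)
    v = v_sqz * math.cos(theta) ** 2 + v_asqz * math.sin(theta) ** 2
    return 10.0 * math.log10(v)

if __name__ == "__main__":
    ETA = 0.7         # hypothetical total efficiency
    THETA_RMS = 0.03  # hypothetical 30 mrad of phase noise
    for g in (13.9, 17.1, 43.1, 81.9, 122.8):  # NLGs used in this entry
        print(f"NLG {g:6.1f}: theta = 0 -> {observed_db(g, ETA, 0.0):6.2f} dB, "
              f"theta = {THETA_RMS} rad -> {observed_db(g, ETA, THETA_RMS):6.2f} dB")
```

With theta = 0 the squeezing keeps improving as NLG rises; with a small nonzero theta the rapidly growing anti-squeezing leaks in, so squeezing is best at some intermediate NLG and degrades beyond it. Because a static angle error enters through the same cos²/sin² mixing, this model alone cannot separate phase noise from the incorrect rotation mentioned above.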

| Measurement | Time (UTC) | Demod phase | DTT ref # | NLG | SQZ at 900 Hz (dB) |
|---|---|---|---|---|---|
| FDS | 21:23:40-21:26:00 | 152.25 | 3 | 13.9 | -4.3 |
| FDAS | 21:27:30-21:32:30 | 228.10 | 6 | 13.9 | 15.2 |
| Mean | 21:33:47-21:38:00 | - | 7 | 13.9 | 12.7 |
| No SQZ | 21:40:00-21:44:50 | - | 0 | - | |
| FDS (not optimized) | 21:51:20-21:54:30 | 164.57 | Deleted | 43.1 | -4 |
| FDAS (Left ASC off) | 21:56:00-21:59:45 | 226.2 | 5 | 43.1 | 19.1 |
| FDS (retake) | 22:03:00-22:06:13 | 167.42 | 4 | 43.1 | -4.3 |
| Mean | 22:06:45-22:09:52 | - | 8 | 43.1 | 16.9 |
| FDS | 22:37:50-22:41:00 | 172.16 | 9 | 122.8 | -3.1 |
| FDAS | 22:42:43-22:45:45 | 217.67 | 10 | 122.8 | 24.7 |
| Mean | 22:46:35-22:49:55 | - | 11 | 122.8 | |
| FDS | 22:57:30-22:59:40 | 169.31 | 12 | 81.9 | -3.6 |
| FDAS | 23:01:20-23:02:55 | 219.56 | 13 | 81.9 | 22.7 |
| Mean | 23:03:26-23:05:45 | - | 14 | 81.9 | 20 |
| FDS (left IFO here) | 23:19:38 | 154.14 | 15 | 17.1 | -4.5 |

Data saved in /camilla.compton/Documents/sqz/templates/dtt/20231025_GDS_CALIB_STRAIN.xml
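
The Mean rows can be cross-checked against the FDS and FDAS rows taken at the same NLG. Assuming "Mean" means the squeeze angle was left to average uniformly over all angles, the expected level is the average of the squeezed and anti-squeezed noise powers, (V- + V+)/2. A minimal Python sketch of that arithmetic follows; the function names and the uniform-averaging assumption are illustrative, not taken from the measurement. NLG 122.8 is left out because its Mean value is blank in the table.

```python
import math

def db_to_power(db):
    """Convert a level in dB relative to shot noise into a noise-power ratio."""
    return 10.0 ** (db / 10.0)

def power_to_db(ratio):
    """Convert a noise-power ratio relative to shot noise into dB."""
    return 10.0 * math.log10(ratio)

def expected_mean_sqz_db(sqz_db, asqz_db):
    """Mean-squeezing level if the squeeze angle averages uniformly: (V- + V+) / 2."""
    return power_to_db((db_to_power(sqz_db) + db_to_power(asqz_db)) / 2.0)

# (NLG, FDS dB, FDAS dB, measured Mean dB) at 900 Hz, copied from the table above.
# NLG 43.1 pairs the FDS retake with the FDAS taken with the Left ASC off.
ROWS = [(13.9, -4.3, 15.2, 12.7),
        (43.1, -4.3, 19.1, 16.9),
        (81.9, -3.6, 22.7, 20.0)]

if __name__ == "__main__":
    for nlg, sqz, asqz, mean_meas in ROWS:
        print(f"NLG {nlg}: expected mean {expected_mean_sqz_db(sqz, asqz):.1f} dB, "
              f"measured {mean_meas} dB")
```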

| Time (UTC) | Unamplified OPO, OPO_IR_PD_LF (scan seed): peak maximum | Unamplified OPO, OPO_IR_PD_LF (scan seed): dark offset | OPO trans (uW) | OPO temp (degC) | Amplified (maximum) H1:OPO_IR_PD_LF (scan OPO PZT) | NLG = Amplified / (Unamplified peak max - Unamplified dark offset) |
|---|---|---|---|---|---|---|
| 21:15 | 786e-6 | -16e-6 | 80.54 | 31.446 | 0.0112 | 13.9 |
| 21:44 | | | 100.52 | 31.414 | 0.0346 | 43.1 |
| 22:17 | | | 120.56 | 31.402 | 0.160 | 199.5 (SQZ loops went unstable) |
| 22:30 | | | 110.5 | 31.407 | 0.06617 | 82 (didn't use) |
| 22:36 | | | 115.46 | 31.405 | 0.09853 | 122.8 |
| 22:52 | | | 110.5 | 31.407 | 0.0657 | 81.9 |
| 22:09 | 769e-6 | -15e-6 | 80.5 | 31.424 | 0.0137 | 17.1 |

Vicky and Naoki did an NLG sweep on the Homodyne in 73562.