Naoki, Sheila, Camilla, Vicky

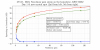

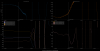

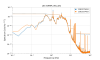

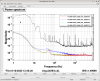

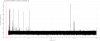

Summary: After yesterday's crystal move (LHO:73535), we re-aligned SQZT7 and now see 8 dB of SQZ on the homodyne, up to a measured NLG of 114, without a phase noise turnaround! This fully resolves the homodyne loss budget: there is 0 mystery loss remaining on the homodyne, from which we infer 0 mystery losses in HAM7. Back on the IFO, after 1 day at this new crystal spot, squeezing in DARM is about 4.5 to 4.8 dB, reaching almost 5 dB at the start of lock.
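As a rough cross-check of the loss-budget statement, the sketch below infers a total detection efficiency from a measured SQZ level and NLG, using the textbook lossy-OPO model with NLG = 1/(1 - x)^2 and x = sqrt(P/P_th). It assumes no phase noise and a measurement frequency well below the OPO linewidth, and it takes the NLG = 14 and NLG = 114 rows from the sweep table in this entry. The function names are illustrative only, not part of any SQZ script.

```python
import math

def x_from_nlg(nlg: float) -> float:
    """Normalized pump amplitude x = sqrt(P/P_th), inverted from NLG = 1/(1 - x)**2."""
    return 1.0 - 1.0 / math.sqrt(nlg)

def efficiency_from_sqz(sqz_db: float, nlg: float) -> float:
    """Total detection efficiency eta inferred from the squeezed quadrature.

    Assumed model (no phase noise, far below the OPO linewidth):
        V_sqz = 1 - eta + eta * (1 - 4x / (1 + x)**2)
    which rearranges to eta = (1 - V_sqz) * (1 + x)**2 / (4x).
    """
    v_sqz = 10 ** (sqz_db / 10)  # dB relative to shot noise -> linear variance
    x = x_from_nlg(nlg)
    return (1 - v_sqz) * (1 + x) ** 2 / (4 * x)

def asqz_db_from_model(eta: float, nlg: float) -> float:
    """Anti-squeezing (dB) predicted by the same model, for a consistency check."""
    x = x_from_nlg(nlg)
    v_asqz = 1 - eta + eta * (1 + 4 * x / (1 - x) ** 2)
    return 10 * math.log10(v_asqz)

# (NLG, measured SQZ dB, measured ASQZ dB) from the sweep table
for nlg, sqz_db, asqz_db in [(14, -8.0, 16.0), (114, -8.0, 25.6)]:
    eta = efficiency_from_sqz(sqz_db, nlg)
    print(f"NLG={nlg}: eta ~ {eta:.3f} (loss ~ {1 - eta:.1%}), "
          f"model ASQZ {asqz_db_from_model(eta, nlg):+.1f} dB vs measured {asqz_db:+.1f} dB")
```

Comparing the model anti-squeezing with the measured ASQZ gives a quick sense of whether a model without phase noise describes the data at each NLG.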

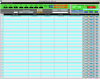

We first re-aligned the homodyne to the IFO SQZ alignment, which reached 4.8dB SQZ in DARM yesterday, so we are more confident that the return path through the VOPO is not clipping. In yesterday's measurements, a sign error in the FC-ASC offloading script brought us to a bad alignment with limited homodyne squeezing, despite high (98%) fringe visibilities. Attached is a screenshot of the FC/ZM slider values with the FC and SQZ ASC fully (and correctly) offloaded; the on-table SQZT7 homodyne is now well aligned to these values. After Sheila re-aligned the homodyne to the screenshotted FC/ZM values, fringe visibilities are PD1 = 98.5% (loss 3.1%) and PD2 = 97.8% (loss 4.2%).
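For reference, a common convention for homodyne mode-mismatch loss is 1 - V^2 for fringe visibility V. A minimal sketch under that assumption reproduces the quoted losses to within about 0.15%:

```python
def mismatch_loss(visibility: float) -> float:
    """Homodyne mode-mismatch loss, assuming loss = 1 - V**2 for fringe visibility V."""
    return 1.0 - visibility ** 2

for pd, vis in [("PD1", 0.985), ("PD2", 0.978)]:
    print(f"{pd}: visibility {vis:.1%} -> mismatch loss {mismatch_loss(vis):.1%}")
```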

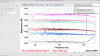

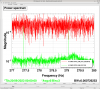

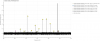

We then did an NLG sweep on the homodyne, from NLG = 2.4 (OPO trans 20uW) to NLG = 114 (OPO trans 120uW). Measurements are listed below and attached as .txt; the DTT file is attached, and plots will follow.

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

unamplified_ir = 0.0014 (H1:SQZ-OPO_IR_PD_LF_OUT_DQ with pump shuttered)

NLG = amplified / unamplified_ir (opo green pump un-shuttered)

@80uW pump trans, amplified = 0.0198 (at start; 0.0196 at end) --> NLG = 0.0198/0.0014 ~ 14

@100uW pump trans, amplified = 0.046 (at start; 0.0458 at end) --> NLG = 0.046/0.0014 ~ 33

@120uW pump trans, amplified = 0.16 --> NLG = 0.16/0.0014 ~ 114

@60uW pump trans, amplified = 0.011 (at start; 0.0107 at end) --> NLG = 0.011/0.0014 ~ 7.86

@40uW pump trans, amplified = 0.0059 (at start; 0.0059 at end) --> NLG = 0.0059/0.0014 ~ 4.2

@20uW pump trans, amplified = 0.0034 (at start; not re-measured at end) --> NLG = 0.0034/0.0014 ~ 2.4
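The NLG arithmetic above, as a minimal sketch using the start-of-step amplified values. For the 100uW point it uses 0.046, the value implied by the quoted NLG = 33.

```python
UNAMPLIFIED_IR = 0.0014  # H1:SQZ-OPO_IR_PD_LF_OUT_DQ with the green pump shuttered

# OPO green trans (uW) -> amplified H1:SQZ-OPO_IR_PD_LF_OUT_DQ at the start of each step
amplified_at_start = {80: 0.0198, 100: 0.046, 120: 0.16, 60: 0.011, 40: 0.0059, 20: 0.0034}

def nlg(amplified: float, unamplified: float = UNAMPLIFIED_IR) -> float:
    """Nonlinear gain: seed level with the pump on divided by seed level with the pump shuttered."""
    return amplified / unamplified

for trans_uw, amp in sorted(amplified_at_start.items()):
    print(f"{trans_uw:>3} uW trans: NLG = {nlg(amp):.1f}")
```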

| Trace | DTT ref | OPO green trans (uW) | NLG | SQZ (dB) | CLF RF6 demod angles (+) |
|---|---|---|---|---|---|
| LO shot noise @ 1.106 mA, -136.3 dB | 10 | | | | |
| NLG = 14 | | 80 | 14 | | |
| Mean SQZ | 11 | | | +13 | |
| SQZ | 12 | | | -8.0 | 162.0 |
| ASQZ | 13 | | | +16 | 245.44 |
| NLG = 33 | | 100 | 33 | | |
| Mean SQZ | 14 | | | +16.7 | |
| SQZ | 15 | | | -8.0 | 170.5 |
| ASQZ | 16 | | | +19.9 | 237.85 |
| NLG = 114 | | 120 | 114 | | |
| Mean SQZ | 17 | | | +22.5 | |
| SQZ | 19 | | | -8.0 | 177.98 |
| ASQZ | 18 | | | +25.6 | 230.13 |
| NLG = 7.9 | | 60 | 7.9 | | |
| Mean SQZ | 20 | | | +9.7 | |
| SQZ | 21 | | | -7.7 | 154.28 |
| ASQZ | 22 | | | +12.4 | 253.83 |
| NLG = 4.2 | | 40 | 4.2 | | |
| LO SN check (~0.1 dB lower?) | 4 | | | | |
| Mean SQZ | 23 | | | +6.8 | |
| SQZ | 24 | | | -6.3 | 140.6 |
| ASQZ | 25 | | | +9.6 | 262.64 |
| NLG = 2.4 | | 20 | 2.4 | | |
| Mean SQZ | 26 | | | +3.8 | |
| SQZ | 27 | | | -4.8 | 135.45 |
| ASQZ | 28 | | | +6.3 | -100.5 |
| LO shot noise @ 1.06 mA | 29 | | | | |
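With the CLF shuttered the squeeze angle is not controlled, so if it wanders roughly uniformly during the average, the expected "Mean SQZ" is the linear average of the SQZ and ASQZ levels. A minimal sketch of that check against the table (the uniform-angle behavior is an assumption, not something measured here); with these rows the prediction agrees with the measured mean SQZ to within about 0.3 dB:

```python
import math

def db_to_lin(db: float) -> float:
    return 10 ** (db / 10)

def lin_to_db(lin: float) -> float:
    return 10 * math.log10(lin)

def mean_sqz_db(sqz_db: float, asqz_db: float) -> float:
    """Phase-averaged noise: V_sqz cos^2 + V_asqz sin^2 averages to (V_sqz + V_asqz) / 2."""
    return lin_to_db((db_to_lin(sqz_db) + db_to_lin(asqz_db)) / 2)

# (NLG, measured mean SQZ dB, SQZ dB, ASQZ dB) from the sweep table
rows = [(14, 13.0, -8.0, 16.0), (33, 16.7, -8.0, 19.9), (114, 22.5, -8.0, 25.6),
        (7.9, 9.7, -7.7, 12.4), (4.2, 6.8, -6.3, 9.6), (2.4, 3.8, -4.8, 6.3)]

for nlg, measured, sqz, asqz in rows:
    predicted = mean_sqz_db(sqz, asqz)
    print(f"NLG {nlg:>5}: mean SQZ measured {measured:+.1f} dB, predicted {predicted:+.1f} dB")
```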

All measurements had PUMP_ISS engaged throughout; we manually tuned the ISS setpoint for the different NLGs. At low NLG (20uW trans), we engaged the ISS manually. LO power (shot noise) drifted ~5% over the course of the measurements; see trends.
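Assuming the shot-noise PSD scales linearly with DC photocurrent, the LO current change between the first and last shot-noise references (1.106 mA to 1.06 mA, about 4%) corresponds to roughly 0.18 dB lower shot noise, similar in size to the "~0.1 dB lower?" LO SN check. A minimal sketch:

```python
import math

def shot_noise_change_db(i_start_ma: float, i_end_ma: float) -> float:
    """Change in shot-noise PSD (dB), assuming the PSD scales linearly with DC photocurrent."""
    return 10 * math.log10(i_end_ma / i_start_ma)

i_start, i_end = 1.106, 1.06  # LO photocurrents (mA) at the first and last shot-noise references
print(f"LO current change: {i_end / i_start - 1:+.1%}")
print(f"Shot-noise level change: {shot_noise_change_db(i_start, i_end):+.2f} dB")
```

SQZ and ASQZ levels referenced to the initial shot-noise trace could therefore be offset by up to about this amount late in the sweep.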

NLG Sweep Procedure:

- Measure LO shot noise

- Measure amplified seed power; adjust the OPO TEC temperature to maximize NLG for the given OPO GREEN TRANS

- Toggle Seed/CLF back to CLF on SQZT0, shutter CLF, open HD path (Toggle HD Path Green). Measure Mean SQZ with CLF shuttered

- Unshutter CLF; "LOCKED CLF", "LOCKED HD". Set the SQZ demod angle to minimize ADF on HD (i.e. minimize H1:SQZ-ADF_HD_DIFF_IQSUM_RMS). Measure SQZ dB

- Rotate H1:SQZ-CLF_REFL_RF6_PHASE_PHASEDEG to maximize ADF, read from the same H1:SQZ-ADF_HD_DIFF_IQSUM_RMS. Measure ASQZ dB

- Toggle HD back to OPO IR PD (red). Toggle CLF --> Seed. Re-measure NLG. Change OPO GREEN TRANS by changing PUMP_ISS setpoint & repeat.
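The two demod-phase steps in the procedure are 1D searches: minimize the ADF RMS for SQZ, maximize it for ASQZ. A minimal sketch of that search follows. `toy_adf_rms` is a hypothetical stand-in for the H1:SQZ-ADF_HD_DIFF_IQSUM_RMS readback that simply assumes the readback varies as V_sqz cos^2 + V_asqz sin^2 of the phase offset; the sketch does not write H1:SQZ-CLF_REFL_RF6_PHASE_PHASEDEG or read any real channel.

```python
import math
from typing import Callable, Iterable, Tuple

def scan_phase(measure: Callable[[float], float],
               phases_deg: Iterable[float]) -> Tuple[float, float]:
    """Return (phase minimizing measure, phase maximizing measure) over a grid of phases."""
    phases = list(phases_deg)
    readings = {p: measure(p) for p in phases}
    return min(phases, key=readings.__getitem__), max(phases, key=readings.__getitem__)

# Hypothetical toy model standing in for the ADF RMS readback at a given demod phase
def toy_adf_rms(phase_deg: float, v_sqz: float = 0.16, v_asqz: float = 40.0,
                offset_deg: float = 40.0) -> float:
    theta = math.radians(phase_deg - offset_deg)
    return v_sqz * math.cos(theta) ** 2 + v_asqz * math.sin(theta) ** 2

sqz_phase, asqz_phase = scan_phase(toy_adf_rms, range(0, 360))
print(f"SQZ phase ~ {sqz_phase} deg, ASQZ phase ~ {asqz_phase} deg")
```

In the toy model the two optima are 90 deg apart, but in the table the measured SQZ and ASQZ demod angles differ by roughly 52 to 124 deg depending on NLG, which is presumably why each angle is found by its own scan rather than by a fixed offset.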

DTT saved in $(userapps)/sqz/h1/Templates/dtt/HD_SQZ/HD_SQZ_8dB_101823_NLGsweep.xml