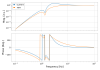

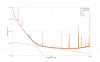

To see whether the OM2/Beckhoff coupling is a direct electronic coupling, we did an A-B-A test with the fast shutter closed (no meaningful light on the DCPDs).

State A (should be quiet): 2023 Oct 10, 15:18:30 - 16:48:00 UTC. Same configuration as in the last observing period: no electrical connection from any pin of the Beckhoff cable to the OM2 heater driver chassis, and the heater drive voltage is supplied by the portable voltage reference.

State B (might be noisy): 16:50:00 - 18:21:00 UTC. The Beckhoff cable is connected directly to the OM2 heater driver chassis.

State A (should be quiet): 18:23:00 - ~19:19:30 UTC.
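For anyone repeating the comparison, the three windows can be written down as UTC segments. This is a minimal Python sketch that assumes the windows exactly as logged (the end of the second State A period is only approximate); `STATES`, `state_at` and `durations_min` are hypothetical helper names, and conversion to GPS time is left out.

```python
from datetime import datetime, timezone

def utc(hms: str) -> datetime:
    """Build a UTC timestamp on 2023-10-10 from an 'HH:MM:SS' string."""
    h, m, s = (int(x) for x in hms.split(":"))
    return datetime(2023, 10, 10, h, m, s, tzinfo=timezone.utc)

# A-B-A windows copied from the log; the last end time is approximate.
STATES = [
    ("A", utc("15:18:30"), utc("16:48:00")),  # Beckhoff cable not connected
    ("B", utc("16:50:00"), utc("18:21:00")),  # cable connected to heater driver chassis
    ("A", utc("18:23:00"), utc("19:19:30")),  # cable disconnected again
]

def state_at(t: datetime):
    """Return 'A' or 'B' if t falls inside a window, else None (transition gaps)."""
    for name, start, end in STATES:
        if start <= t < end:
            return name
    return None

def durations_min():
    """Length of each window in minutes, as (state, minutes) pairs."""
    return [(name, (end - start).total_seconds() / 60) for name, start, end in STATES]
```

With these windows the first A, B and second A periods are 89.5, 91 and 56.5 minutes long, and times in the 2-minute transition gaps map to `None`.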

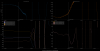

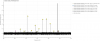

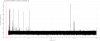

DetChar, please directly look at H1:OMC-DCPD_SUM_OUT_DQ to find combs.
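One way to check whether lines that show up only in State B form a comb is to test them against candidate spacings. The sketch below is hypothetical: it assumes a list of narrow-line frequencies (Hz) has already been extracted from H1:OMC-DCPD_SUM_OUT_DQ with some other tool, and the example frequencies, spacings, offset and tolerance are placeholders, not results from this test.

```python
def comb_members(freqs, spacing, offset=0.0, tol=0.01):
    """Return the lines (Hz) within tol of offset + n*spacing for some integer n >= 0."""
    members = []
    for f in freqs:
        n = round((f - offset) / spacing)  # nearest comb tooth index
        if n >= 0 and abs(f - (offset + n * spacing)) <= tol:
            members.append(f)
    return members

def best_spacing(freqs, candidates, offset=0.0, tol=0.01):
    """Pick the candidate spacing that explains the most lines.

    Half of a true spacing also fits every tooth, so ties are broken
    toward the larger spacing to avoid reporting a subharmonic.
    """
    return max(candidates, key=lambda s: (len(comb_members(freqs, s, offset, tol)), s))

# Made-up line list: four lines on a 1 Hz comb plus one unrelated line.
lines = [1.0, 2.0, 3.0, 4.0, 7.3]
print(comb_members(lines, 1.0))
print(best_spacing(lines, [0.5, 1.0, 1.5]))
```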

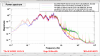

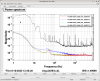

It seems that even with the shutter closed, a very small amount of light occasionally reaches the DCPDs (green and red arrows in the first attachment). One of these (red arrow) lasted a long time, and we don't know what was going on there. One of the short glitches was caused by the BS being momentarily kicked (cyan arrow), with scattered light in HAM6 somehow reaching the DCPDs, but I couldn't find any other glitches that coincided exactly with optic motion or with the IMC locking/unlocking.
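The glitch check described above (light on the DCPDs with the shutter closed, compared against BS motion and IMC lock changes) amounts to finding threshold crossings and then testing each one for a coincident auxiliary event. A minimal sketch, assuming the DCPD samples and the auxiliary event times are already on a common time base in seconds; the threshold and the +/-1 s coincidence window are hypothetical choices, not values used here.

```python
from bisect import bisect_left

def excursion_starts(times, values, threshold):
    """Start times of runs where values exceed threshold (rising edges only)."""
    starts, above = [], False
    for t, v in zip(times, values):
        if v > threshold and not above:
            starts.append(t)
        above = v > threshold
    return starts

def unexplained(glitch_times, aux_event_times, window=1.0):
    """Return glitch times with no auxiliary event within +/- window seconds."""
    aux = sorted(aux_event_times)
    out = []
    for t in glitch_times:
        i = bisect_left(aux, t - window)  # first aux event not earlier than t - window
        if i == len(aux) or aux[i] > t + window:
            out.append(t)
    return out
```

For example, glitches at 10, 50 and 120 s checked against events at 10.3 and 200 s leave 50 and 120 s unexplained, which is the kind of residue the log describes.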

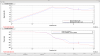

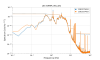

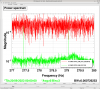

To give a sense of how bad (or not) these glitches are, the 2nd attachment shows the DCPD spectrum during a quiet time in the first State A period (green), the strange glitchy period indicated by the red arrow in the first attachment (blue), a quiet time in State B (red), and observing time (black, not corrected for the loop).

FYI, we're now back in State A (should be quiet). Next Tuesday I'll inject something into the in-chamber thermistors. BTW, the 785 was moved in front of the HAM6 rack, though it's powered off and not connected to anything.

It looks like zotvac0 has gone offline, and the EDC is now disconnected from all 27 channels. We'll leave it like this overnight and work on it first thing tomorrow.